CC 310 Textbook

This is the homepage

Welcome to CC 310!

Hello and welcome to the Computational Core program!

My name is Russ Feldhausen, and I’ll be one of the instructors for this program. My contact information is shown here, and is also listed on the syllabus.

[Slide 2]

There are many other instructors and TAs for this program that you may interact with or see in the tutorial videos. They all have been instrumental in the development of this program. Specifically, I’d like to recognize the work of Nathan Bean, the developer of the CIS 400 course on which this course is based.

[Slide 3]

In this course we will primarily use a KSU email group (cc410-help or cc410-help@ksuemailprod.onmicrosoft.com) to communicate. Email sent to this address is forwarded to all instructors and TAs. Our replies to you will also be shared amongst the instructors and TAs so we all have access to the assistance you have already received. We will respond to you within a business day, so be aware that a question emailed Friday night may not receive an answer before Monday. Please read and adhere to the guidance on Netiquette in the syllabus for all electronic communications.

[Slide 4]

In addition to email and Canvas, we’ll be using the online learning platform Codio for most of the programming tutorials and projects in this program. We’ll also discuss how to use Codio later in this module.

[Slide 5]

The Computational Core program consists of several courses, and each course contains a number of learning modules. In general, there are about 12-15 modules per course. Each module will usually consist of an interactive tutorial using Codio, followed by a quiz through Canvas, and lastly a programming project in Codio. In CC 410, there will also be several guided examples for you to follow and submit. The modules themselves are gated, which means that you must complete each item in the module before continuing. In addition, the modules enforce prerequisite requirements from other modules. For CC 410 you must complete them in order starting with module 0.

You are welcome to work on this course at any time during the week as your schedule allows, provided that you complete each module before the listed due date. There will be roughly one module due each week. Unlike other Computational Core courses, CC 410 does not include many auto-graded assignments. This is primarily due to the open-ended nature of the course. Instead, your code will be reviewed by an instructor or TA and you’ll receive feedback through Canvas and Codio. In some instances, you may be encouraged to redo parts of an assignment for additional credit. We will strive to provide feedback on an assignment within one week of it being submitted.

[Slide 6]

Looking ahead to the rest of this introductory module, you’ll see that there are a few more items to be completed before you can move on. In the next video, I’ll discuss a bit more information about navigating through this course on Canvas and using the Codio learning environment.

[Slide 7]

One thing I highly encourage each of you to do is read the syllabus for this course in its entirety, and let us know if you have any questions. My view is that the syllabus is a contract between me as your teacher and you as a student, defining how each of us should treat each other and what we should expect from each other. We have made a few changes to the standard syllabus template for this program, and those changes are clearly highlighted. Finally, the syllabus itself is subject to change as needed as we adapt this program to meet the needs of its students, and all changes will be clearly communicated to everyone before they take effect.

[Slide 8]

One very important part of the syllabus that every student should read is the late work policy. First off, each module has a due date, and you may work on that module at any time before it is due, provided you have met the prerequisites. As discussed before, you must do all the readings and assignments in a module, preferably in listed order, before moving on, so you cannot jump ahead. A module is considered completed when all items have been completed.

[Slide 9]

For the purposes of grading, we will use the date and time that the confirmation quiz was submitted at the end of each module to determine when the module was completed. This is due to the way that Codio handles grading, as it may resubmit previously graded assignments if an error in the module is corrected, making a previously completed assignment appear to be submitted late.

If a module is completed after the due date, a penalty of 10% of the total points of each assignment will be deducted for each day the assignment is late. Therefore, if an assignment is submitted 3 days late, it will be subject to a 30% penalty of the total number of points possible on that assignment. After 10 days, no points will be awarded for a late submission.
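As a rough illustration of the late penalty arithmetic described above, here is a minimal sketch in Python. The function names are hypothetical, and it assumes lateness is counted in whole days; the syllabus remains the authoritative statement of the policy.

```python
def late_penalty_percent(days_late: int) -> int:
    """Percent of an assignment's total points deducted for lateness.

    Assumption: lateness is measured in whole days. The penalty is
    10% per day and is capped at 100%, so no points remain at 10 days.
    """
    if days_late <= 0:
        return 0
    return min(100, 10 * days_late)


def score_after_penalty(points_earned: float, points_possible: float,
                        days_late: int) -> float:
    """Apply the penalty to the points possible, never going below zero."""
    deduction = points_possible * late_penalty_percent(days_late) / 100
    return max(0.0, points_earned - deduction)


# An assignment worth 100 points, graded at 90, submitted 3 days late
print(late_penalty_percent(3))
print(score_after_penalty(90, 100, 3))
```

Note that the deduction is based on the total points possible, not on the points you earned, which is why a late submission loses ground quickly.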

However, even if a module is late, it still must be completed before you can move on to a later module. So, it is very important to avoid getting behind in this course, as it can be very difficult to get back on track. If you ever find that you are struggling to keep up, please don’t be afraid to contact either the instructors or GTAs for assistance. We’d be happy to help you get caught back up quickly.

The grading in this course is very simple. First, 10% of your final grade will depend on the grades you receive from each of the tutorials and quizzes throughout the course. Next, 10% of your grade will come from the interactive examples that precede several projects. The next 40% of your grade will come from the numerous project milestones throughout the course, of which there will be approximately 10. There will also be a couple of “concept quizzes” throughout the semester, which are a bit longer than a normal quiz and will ask you to apply what you’ve learned to a novel situation. Those are worth 15% of your grade. Finally, the last 25% of your grade will come from the final project in the course, which will be discussed in a later video. In this program, the standard “90-80-70-60” grading scale will apply, though I reserve the right to curve grades up to a higher grade level at my discretion. Therefore, you will never be required to get higher than 90% for an A, but you may get an A if you score slightly below 90% if I choose to curve the grades.
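To see how those weights combine, here is a small sketch in Python. The category names and the sample scores are made up for illustration, and the letter grade function applies the standard scale with no curve, so treat it as an estimate rather than the official calculation.

```python
# Category weights in percent, as described above (they sum to 100)
WEIGHTS = {
    "tutorials_and_quizzes": 10,
    "interactive_examples": 10,
    "project_milestones": 40,
    "concept_quizzes": 15,
    "final_project": 25,
}


def final_percent(scores: dict) -> float:
    """Weighted average, where each score is a percentage from 0 to 100."""
    return sum(WEIGHTS[name] * scores[name] for name in WEIGHTS) / 100


def letter_grade(percent: float) -> str:
    """Standard 90-80-70-60 scale, ignoring any curve."""
    for cutoff, letter in ((90, "A"), (80, "B"), (70, "C"), (60, "D")):
        if percent >= cutoff:
            return letter
    return "F"


# Hypothetical student scores, one percentage per category
sample = {
    "tutorials_and_quizzes": 95,
    "interactive_examples": 100,
    "project_milestones": 85,
    "concept_quizzes": 80,
    "final_project": 90,
}
print(final_percent(sample), letter_grade(final_percent(sample)))
```

Because the project milestones and final project together carry 65% of the weight, they have by far the largest effect on the result.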

[Slide 10]

This is intended to be a completely online, self-paced course. There are no mandatory scheduled course times. All of the content is available online, so you can work whenever and wherever you want. It could be a 3-hour block once a week, or a few minutes here and there between classes. It’s really up to you and your schedule. However, remember that each module may require 12 to 16 or more hours of work to complete, so make sure you have plenty of time available to devote to this course.

In addition, due to the flexible online format of this class, there won’t be any long lecture videos to watch. Instead, each module will consist of a guided tutorial and several short videos, each focused on a particular topic or task. Likewise, there won’t be any textbooks required, since all of the information will be presented in the interactive tutorials through Codio. Finally, since we are using Codio as our learning platform, you won’t have to deal with installing and using a clunky integrated development environment, or IDE, just to learn how to program. Codio helps make learning to program quick and painless by moving everything to the web.

[Slide 11]

What hasn’t changed, though, is the basic concept of a college course. You’ll still be expected to watch or read about 6-9 hours of content to complete each module. In addition to that, each project assignment may require another 6-9 hours of work to complete. If you plan on doing a module each week, that roughly equates to 6 hours of content and 6 hours of homework each week, which is the expected workload from a 3-4 credit hour college course.

From my experience, I can definitely share that the number one reason students struggle in this class is due to poor time management, not the complexity of the material. So, make sure you are planning to dedicate enough time to this course, and strive to start assignments as soon as you receive them so you have lots of time to get help if you get stuck.

[Slide 12]

For this course, the only supplies you’ll need as a student are access to a modern web browser and a broadband internet connection. No other special hardware or software is necessary! However, in this course you will also be able to do some development on your own computer using Visual Studio Code and Ubuntu. We’ll provide some short videos to help you get started if you choose to go that route, but it is not required. Due to the complex nature of this course, we do not recommend using phones, tablets, or Chromebooks if you choose to do development on your own systems.

[Slide 13]

Finally, as you are aware, this course is always subject to change. This is a relatively new program here at K-State, and we’re always working on new and interesting ideas to integrate into the courses. The best advice I have is to look upon this graphic with the words “Don’t Panic” written in large, friendly letters. If you find yourself falling behind, or not understanding something, seek our help via cc410-help.

[Slide 14]

So, to complete this module, there are a few other things that you’ll need to do. The next step is to watch the video on navigating Canvas and Codio, which will give you a good idea of how to most effectively work through the content in this course.

[Slide 15]

To get to that video, click the “Next” button at the bottom right of this page.

This course makes extensive use of several features of Canvas which you may or may not have worked with before. To give you the best experience in this course, this video will briefly describe those features and the best way to access them.

When you first access the course on Canvas, you will be shown this homepage. It contains quick links to the course syllabus and Piazza discussion boards. This is handy if you just need to jump to a particular area.

Let’s walk through the options in the main menu to the left. The first section is Modules, which is where you’ll primarily interact with the course. You’ll notice that I’ve disabled several of the common menu items in this course, such as Files and Assignments. This is to simplify things for you as students, so you remember that all the course content is available in one place.

When you first arrive at the Modules section, you’ll see all of the content in the course laid out in order. If you like, you can minimize the modules you aren’t working on by clicking the arrow to the left of the module name. I’ll do so, leaving the introductory module open.

As you look at each module, you’ll see that it gives quite a bit of information about the course. At the top of each module is an item telling you what parts of the module you must complete to continue. In this case, it says “Complete All Items.” Likewise, the following modules may list a number of prerequisite modules, which you must complete before you can access them.

Within each module is a set of items, which must be completed in listed order. Under each item you’ll see information about what you must do in order to complete that item. For many of them, it will simply say view, which means you must view the item at least once to continue. Others may say contribute, submit, or give a minimum score required to continue. For assignments, it also helpfully gives the number of points available, and the due date.

Let’s click on the first item, Course Introduction, to get started. You’ve already been to this page by this point. Many course pages will consist of an embedded video, followed by links to any resources used or referenced in the video, including the slides and a downloadable version of the video. Finally, a rough video script will be posted on the page for your quick reference.

While I cannot force you to watch each video in its entirety, I highly recommend doing so. The script on the page may not accurately reflect all of the content in the video, nor can it show how to perform some tasks which are purely visual.

When you are ready to move to the next step in a module, click the Next button at the bottom of the page. Canvas will automatically add Next and Previous buttons to each piece of content which is accessed through the Modules section, which makes it very easy to work through the course content. I’ll click through a couple of items here.

At any point, you may click on the Modules link in the menu to the left to return to the Modules section of the site. You’ll notice that I’ve viewed the first few items in the first module, so I can access more items here. This is handy if you want to go back and review the content you’ve already seen, or if you leave and want to resume where you left off. Canvas will put green checkmarks to the right of items you’ve completed.

Continuing down the menu to the left, you’ll find the usual Canvas links to view your grades in the course, as well as a list of fellow students taking the course.

===

Now, let’s go back to Canvas and load up one of the Codio projects. To load the first Codio project, click the Next button at the bottom of this page to go to the next part of this module, which is the Codio Introduction tutorial. On that page, there will be a button to click, which opens Codio in a new browser window or tab.

Once Codio loads, it should give you the option to start the Guide for that module. You’ll definitely want to select that option whenever you load a Codio project for the first time.

From there, you can follow the steps in that guide to learn more about the Codio interface. The first page of the guide continues this video. I’ll see you there!

As you work on the materials in this course, you may run into questions or problems and need assistance. This video reviews the various types of help available to you in this course.

First and foremost, anytime you have a question or need assistance in the Computational Core program, please send an email to the appropriate help group for this course. In this case, it would be cc410-help, or cc410-help@ksuemailprod.onmicrosoft.com. That email goes to the instructors and GTAs, and is your best chance to get a quick response. We’ll respond to your email within one business day.

Beyond email, there are a few resources you should be aware of. First, if you have any issues working with K-State Canvas, K-State IT resources, or any other technology related to the delivery of the course, your first source of help is the K-State IT Helpdesk. They can easily be reached via email at helpdesk@ksu.edu. Beyond them, there are many online resources for using Canvas, all of which are linked in the resources section below the video. As a last resort, you may also want to email the help group, but in most cases we may simply redirect you to the K-State helpdesk for assistance.

Similarly, if you have any issues using the Codio platform, you are welcome to refer to their online documentation. Their support staff offers a quick and easy chat interface where you can ask questions and get feedback within a few minutes.

If you have issues with the technical content of the course, specifically related to completing the tutorials and projects, there are several resources available to you. First and foremost, make sure you consult the vast amount of material available in the course modules, including the links to resources. Usually, most answers you need can be found there.

If you are still stuck or unsure of where to go, the next best thing is to post your question as an email to the help group. As discussed earlier, the instructors and GTAs will do their best to help you as soon as they can.

Of course, as another step you can always exercise your information-gathering skills and use online search tools such as Google to answer your question. While you are not allowed to search online for direct solutions to assignments or projects, you are more than welcome to use Google to access programming resources such as StackOverflow, language documentation, and other tutorials. I can definitely assure you that programmers working in industry are often using Google and other online resources to solve problems, so there is no reason why you shouldn’t start building that skill now.

Next, we have grading and administrative issues. This could include problems or mistakes in the grade you received on a project, missing course resources, or any concerns you have regarding the course and the conduct of myself and your peers. Since this is an online course, you’ll be interacting with us on a variety of online platforms, and sometimes things happen that are inappropriate or offensive. There are lots of resources at K-State to help you with those situations. First and foremost, please email me directly as soon as possible and let me know about your concern, if it is appropriate for me to be involved. If not, or if you’d rather talk with someone other than me about your issue, I encourage you to contact either your academic advisor, the CS department staff, College of Engineering Student Services, or the K-State Office of Student Life. Finally, if you have any concerns that you feel should be reported to K-State, you can do so at https://www.k-state.edu/report/. That site also has links to a large number of resources at K-State that you can use when you need help.

Finally, if you find any errors or omissions in the course content, or have suggestions for additional resources to include in the course, email the help group. There are some extra credit points available for helping to improve the course, so be on the lookout for anything that you feel could be changed or improved.

So, in summary, reviewing the existing course content should always be your first stop when you have a question or run into a problem, since most issues can be solved there. If you are still stuck, email cc410-help to ask for assistance, and we’ll get back to you within a business day. For issues with Canvas or Codio, you are also welcome to refer directly to the resources for those platforms. For grading questions and errors in the course content or any other issues, please email cc410-help or the instructors directly for assistance.

Our goal in this program is to make sure that you have the resources available to you to be successful. Please don’t be afraid to take advantage of them and ask questions whenever you want.

Before we launch into the course itself, I wanted to take a few minutes to share some information with you regarding what we know about how students learn to program. This isn’t just anecdotal evidence from computer science teachers like me, but theories and research from education researchers who study how humans learn new skills and abilities throughout their lives. If I had to summarize all of this information in as few words as possible, I’d simply say “do the work.” Learning to program is difficult, and the only way to really get good at it is through constant practice and learning. However, that greatly oversimplifies the information that I want to share, and I’m hoping that you’ll find some helpful takeaways from this video that you can incorporate into your learning process.

Before I begin, I want to give all the credit to Nathan Bean for developing this information as part of his CIS 400 course. He graciously allowed me to use his hard work here, and I encourage you to check out his original version, which is available at the URL shown on this slide.

The statement “do the work” is a shorter version of a very common quote from educators, which is “the person doing the work is the person doing the learning.” I couldn’t find a solid reference for who said it first, so I’ll just attribute it to various educators throughout time. This really highlights one of the biggest struggles many students run into when learning to program. There are so many guides online, and the answer to many simple problems can be found through a quick Google search. You can just copy and paste the code, and then your program works. However, did you really learn how to write that program and what it does, or just how to find a quick answer? While this may be a useful tactic from time to time, if you rely too much on other people to do your coding, you really won’t learn it yourself. This is just like learning to shoot free throws on a basketball court or beating your best time in a speedrun - you can’t just watch someone do it and expect to do it yourself (believe me, I’ve tried). So, if you aren’t doing the work, you aren’t really learning.

Next, let’s address a major myth in computer science. I’ve heard this many times: “some people are just natural born programmers, and others simply cannot learn to program.” And yes, on the surface, it may appear to be this way. Some students just seem to have a knack for programming, and you may sit and struggle and not really get anywhere. However, there is no innate skill or ability that makes you good at programming.

Instead, let’s reframe what it means to learn programming. At its core, programming is learning to write steps to solve problems in a way that a computer can perform those steps. That’s really what we are doing when we learn programming.

So, we must focus on learning how to write those steps with the proper exactitude and precision so that they make sense, and we must understand how a computer functions to be able to program that computer effectively. So, when you see someone who is good at programming, it’s not because they are good at some esoteric skill that you’ll never have - they just know how to express their steps properly and know enough about how a computer works to make their program do what they want. That’s really it! And, to be honest, after a single semester of learning to program, you’ll have all the skills you need to do both of those things! If you know how to make conditionals, loops, functions, and use simple variables and arrays, that’s really all you need. Everything else that comes after that is just refining those skills to make your programs more powerful and your coding more efficient.
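To show how far those few building blocks go, here is a short sketch in Python that uses nothing but a function, a simple variable, a loop, a conditional, and an array (a list in Python). The function name and the data are invented purely for illustration.

```python
def count_passing(scores, cutoff):
    """Count how many scores meet or exceed the cutoff."""
    passing = 0                  # a simple variable
    for score in scores:         # a loop over an array (a Python list)
        if score >= cutoff:      # a conditional
            passing += 1
    return passing


quiz_scores = [72, 95, 58, 88]   # made-up data
print(count_passing(quiz_scores, 70))   # prints 3
```

Larger programs are mostly combinations of these same pieces, organized more carefully.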

So, how do we learn these skills? Well, there are a couple of important pieces we need to make sure are in the right place first. For starters, we need to have the correct mindset. Many times I’ll see students struggle to learn how to program, and they’ll say things like what you see on this slide. “It’s too hard.” “I don’t understand this.” “I give up.” Statements like this are the sign of a “fixed mindset,” and they can be one of the greatest blockers preventing you from really learning to program. Just like learning any other skill, you have to be open to instruction and willing to learn, or else you’ve failed before you even started.

Instead, we want to focus on building a growth mindset. In the TED talk by Carol Dweck that is linked below this video, which I encourage you to watch, she talks about the power of “yet.” We can turn these statements around by simply adding the positive power of “yet” - “I don’t understand this yet.” “I love a good challenge.” “I’ll keep trying until I get it.” Going into a programming project with a mindset that is open to growth and change is really an important first step. When I feel like I’m getting a fixed mindset, I like to think about how difficult it would be to teach a child to tie their shoes if they don’t want to learn. As soon as I realize that, it is pretty easy to recognize that same problem in myself and work to correct it.

So, once we have our growth mindset, how do we actually learn to program? To understand that, let’s dive a bit into the world of educational theory and the work of Jean Piaget. Piaget was a biologist and psychologist who studied how young children acquired new knowledge, and he helped pioneer the concept of Constructivism, one of the most influential philosophies in education. You can read more about Constructivism in the links below this video.

One particular thing that Piaget worked on was a theory of genetic epistemology. Epistemology is the term for the study of human knowledge, so genetic epistemology is the study of the origins, or genesis, of that knowledge. Put more clearly, it’s the study of how humans create new knowledge. This concept was inspired by research done on snails - he was able to prove that two previously distinct species of snails were actually the same by moving snails from one habitat to another and observing how they modified their behaviors and how their shells grew to match the snails in the new habitat. In other words, the snails displayed an altered behavior based on their environment. They tried to exist in equilibrium with their environment by adapting their behaviors to fit what they now experienced in the world.

Piaget suspected that something similar happens when humans try to learn something - the brain tries to adapt itself to maintain an equilibrium in its environment, which in this case is the existing knowledge it contains. So, when the brain is exposed to new ideas, it must somehow adjust to account for that new information. Piaget proposed two different mechanisms for how this occurs: assimilation and accommodation. In assimilation, new knowledge can be added to existing structures in the brain. For example, if you are exposed to a new color, such as periwinkle, you can see that it falls somewhere between blue and violet, two colors you already know. So, you can assimilate that new knowledge into the existing knowledge without a major disruption to your mental structure of existing colors. Accommodation, on the other hand, happens when your brain must radically adapt to new information for which no structures yet exist. This can be very difficult, and can lead to a lot of struggle and frustration when trying to get “over the hump” on a new subject. Think about learning algebra or a new language for the first time - you really don’t have anything you can use to help understand this new material, so you just have to keep at it until those new structures are formed in your brain.

Unfortunately, to achieve accommodation, your brain simply has to build brand new structures to store and represent all of this new information, and that process is difficult and takes time. Put another way, it takes significant stimulus, usually in the form of doing homework, struggling with difficult problems and wrestling with the new information to try and understand it all, to create enough disequilibrium in your brain that, coupled with a growth mindset, will allow accommodation to occur. However, when all the pieces are in the right place, and you work hard and have a growth mindset, then…

EUREKA! The structures will form, and you’ll get over that huge hurdle, and things will start falling into place. It may not happen all at once, but it does happen (you’ve probably had it happen to you several times already - think about some eureka moments from your past - were they related to learning a new skill?). Of course, there’s a good chance that your brain might form a few incorrect structures in the process, so you’ll have to overcome those as you continue to learn. I still struggle to spell some words because my brain formed incorrect structures when I was still learning. But, if you continue to work hard and be open to learning, you’ll eventually sort those errors out as well.

Let’s look at one other concept in education, which is called stage theory. Piaget identified four stages that children go through as they learn to reason about the world. Those four stages are shown on this slide. In the sensorimotor stage, the child is just using their senses to interact with the world, without any real understanding of what will happen when they perform an action. This is best represented by babies and toddlers, who touch and taste everything in their surroundings. Next, the preoperational stage is represented in young children as they start to think symbolically about the world, using pictures and words to represent actions and objects. They then progress to the concrete operational stage, where they can begin to think logically and understand how concrete events happen. They can also start to think inductively, building the general principles of the world from their specific experiences. For example, if they observe that cooked spaghetti is better than raw spaghetti, they might reason that other foods like potatoes are better cooked than raw. Finally, the last stage is the formal operational stage. This stage is represented by the ability to work fully with an abstract world, formulating and testing hypotheses to truly understand how the world works and predict how new items will work before experiencing them firsthand.

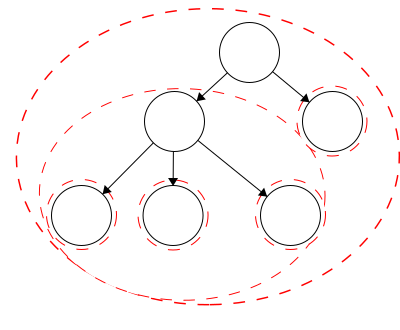

Many later researchers built upon this model to show that adults learn in much the same way. They also discovered that the stages are not rigid, and you may exhibit behaviors from multiple stages at any given time. This is called the “overlapping waves” model, and is shown here in this diagram. So, as you learn new skills, you may be at the operational stage in some areas, but still at the preoperational stage in other areas. This explains why some concepts may make sense while others don’t for a while - you just have to keep going until it all fits together.

So, how can we apply all of this information to programming? One theory comes from the work of Lister and Teague, who proposed a developmental epistemology of computer programming. Put another way, they applied this theory to computer science education, and gave us a unique way to think about the different stages of learning to program.

At the sensorimotor stage, we’re just getting the basics. So, when given a piece of code and asked to trace what it does, we still make lots of errors and get the answer incorrect. If we want to get a program to work ourselves, it usually involves a lot of trial and error, and many times when it does end up working we don’t even know exactly why it worked that time, but we’re building up a baseline of information that we can use to construct our mental model of how a computer works.

As we progress into the preoperational stage, we become better at tracing code correctly, but we still struggle to understand what the program itself does. We see each line of code as a separate instruction, but not the entire program. A great analogy is reading a recipe that calls for flour, water, salt, and yeast. Will it make bread? Biscuits? Pie crust? We’re not sure yet, but at least we can recognize the ingredients. To solve problems at this stage, we typically will randomly adjust pieces of our code that we don’t quite understand and see what it does, trying to form a better idea of the importance of each line in the code.

Eventually, we’ll get to the concrete operational stage. At this stage, we can construct our own programs, but many times we are simply piecing together parts that we’ve used before and performing some futile patches and bugfixes as we refine the program. We can also work backwards to figure out what a program does from execution results, but we still aren’t very good at deducing the results from the code itself. However, we’re starting to work with abstraction, though we tend to simplify things to a level that we are more comfortable with.

Finally, we’ll reach the formal operational stage. At this stage, we can comfortably read and understand code without executing it, quickly seeing what it does and how it works without fully tracing it ourselves. We can also start to form hypotheses for how to build new programs and code, and reason about whether different approaches would work better or worse than others. This is the goal stage for any programmer! Once you have reached this stage, then you’ll feel totally at home working in code and developing your own programs from scratch.
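To make the difference between tracing code and understanding code a bit more concrete, here is a small made-up example in Python. The function name is deliberately unhelpful, and the snippet is only an illustration, not something taken from Lister and Teague’s work.

```python
def mystery(values):
    result = values[0]           # start with the first item
    for v in values[1:]:         # look at each remaining item in turn
        if v > result:           # is this item bigger than what we have?
            result = v           # if so, remember it instead
    return result


# Tracing by hand with [3, 9, 4]:
#   result = 3
#   v = 9 -> 9 > 3 is True, so result becomes 9
#   v = 4 -> 4 > 9 is False, so result stays 9
print(mystery([3, 9, 4]))        # prints 9
```

At the preoperational stage, we can follow that trace line by line and arrive at 9 without being able to say what the function is for. At the formal operational stage, we can read it once and see that it returns the largest item in the list, without needing to run it.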

So, how can we enable ourselves to be the best learners we can be? There is lots of interesting research in that area, best summarized in the book “The New Science of Learning” that is linked below this video. Let’s go through a few of the big concepts.

First, getting ample and regular sleep is important, because it allows your brain to build those knowledge structures we discussed earlier and store the memories from the day in long-term storage. Without enough sleep, your brain is unable to process memories offline and make them ready for retrieval later on, an important step in learning. Also, consuming large amounts of caffeine or alcohol can disrupt your sleep patterns, so keep that in mind before you pour that next cup of coffee or go out partying. You can also take advantage of modern technology to help you track your sleep - most smart watches and smartphones today can help with that!

Likewise, regular exercise is important to both your physical and mental health. When you exercise, especially aerobic exercise that gets your heart rate up, your body releases neurochemicals that help your brain cells communicate. In addition, just getting up and moving around regularly helps keep your body healthy, so take regular breaks, and consider getting a standing desk for some extra benefits.

Research also shows that engaging your senses is an important part of learning. This is why we, as teachers, try to vary our lessons with pictures, videos, activities, and more. It is also the basis of the cognitive apprenticeship style of learning that we use, which you can learn more about in the links below this video. We show you the code we are writing, engaging your sense of vision, while talking about it so you are also listening, and then you are writing your own version, using your sense of touch. You can build upon this by engaging your senses while you learn: take notes during a lecture video, build concept maps, and even print out and write on your code and these lecture scripts. All of these processes help engage different parts of your brain and make it that much easier to build new knowledge structures.

Looking for patterns is another important way to understand programming. There are many common patterns in computer programs, such as using a for loop to iterate through an array, or an if-else statement to determine if a particular variable is set to a valid value. By recognizing and understanding those patterns, we can more quickly understand new programs that use slightly different versions of the same code. Humans are naturally very good at pattern recognition, and it is one of the reasons why we see the same code structures time and time again - not because they are the only way to accomplish that goal, but because that structure is commonly used across many programs and therefore is easier to understand.
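As a small illustration of the two patterns mentioned above, here is a minimal Python sketch that uses a for loop to step through a list and an if-else statement to check whether each value is valid. The function name `count_valid_scores` and the 0 to 100 range are made up for this example and are not part of any course assignment.

```python
def count_valid_scores(scores):
    """Count how many scores fall in the (assumed) valid range 0-100."""
    valid = 0
    for score in scores:            # pattern 1: visit every item in the list
        if 0 <= score <= 100:       # pattern 2: is this value valid?
            valid += 1
        else:
            print(f"Skipping invalid score: {score}")
    return valid


print(count_valid_scores([88, 105, 72, -3]))
```

Once you recognize this loop-plus-check shape, you will spot it in many programs, even when the data and the validity rule are different.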

There is quite a bit of research into how memories are formed and how we can adjust our studying habits to take advantage of that. For example, cognitive science shows that the parts of our brain responsible for memory creation are active up to one hour after a learning experience has ended, such as a lecture video or activity. So, instead of jumping to the next task, you may want to take a little while to reflect on what you just did and let it sink in before moving on. Likewise, to build strong memories, it is important to constantly recall the memory or use the skills you’ve learned to strengthen their structures in the brain. This is why teachers like to throw in a few questions from a previous exam or quiz every once in a while - it helps strengthen those structures by forcing you to recall information you’ve learned previously. On the other hand, many students try to “cram” a bunch of information right before an exam, only to forget it soon after because it wasn’t recalled more than once. As you progress further, we’ll continue to come back to concepts you’ve already learned and build upon them, a process called elaboration that helps reinforce what you’ve already learned while building new, related knowledge.

Finally, it is important to remember that we must give our brains the space they need to focus on the task at hand. Multitasking while learning, such as watching YouTube or Twitch, chatting with friends, or listening to a lecture video while coding, can reduce your brain’s ability to form strong memories and do well. In fact, research shows that individuals who try to multitask tend to make 50% more errors and spend 50% more time on both tasks. So, instead of giving yourself distractions, try to find things that will help you focus better - there are some great playlists online for music without lyrics that can help you focus or code better, and you can easily mute notifications on your phone and on your computer for an hour or so while you work.

So, let’s summarize what we’ve covered here. First, and most importantly, remember that you can learn to program, just like the many students who have done it before you. However, it can be difficult and frustrating at times, and it will take lots of hard work on your part to make it happen. That means that you’ll need to read and write a lot of code before it really starts to make sense. In short, you must do the work to learn to program.

That said, you can help make the process easier by getting good sleep, exercising regularly, and engaging fully with all of the content in the course. That means you’ll need to take your own notes, maybe draw some diagrams, and annotate code you write and code you read to help you understand it. While you are working, try not to multitask so you can focus. If you are given some code to include in your program, don’t copy/paste it - rewrite it, and make sure you completely understand what each line does. Finally, take some time to read code written by others! GitHub is a great place to discover all sorts of code and see how others write code. If you want to write good poetry you have to read lots of good poetry, and the same goes for coding.

With that in mind, I hope you are able to make the best of this course and continue to develop your programming skills. If you are interested in this topic and would like to know more about things you can do to be a better learner, let us know! As you can imagine, teachers like me love to talk about this stuff, so don’t be afraid to ask. Good luck!

Exploration of data structures & related algorithms in computer programming. Basic concepts of complexity analysis. Object-oriented design concepts.

This course introduces simple data structures such as sets, lists, stacks, queues, and maps. Students learn how to create data structures and the algorithms that use them. Students are introduced to algorithm analysis to determine the efficiency of algorithms.

After completing this course, a successful student will be able to:

These courses are being taught 100% online, and each module is self-paced. There may be some bumps in the road as we refine the overall course structure. Students will work at their own pace through a set of modules, with approximately one module being due each week. Material will be provided in the form of recorded videos, online tutorials, links to online resources, and discussion prompts. Each module will include a coding project or assignment, many of which will be graded automatically through Codio. Assignments may also include portions which will be graded manually via Canvas or other tools.

A common axiom in learner-centered teaching is “the person doing the work is the person doing the learning.” What this really means is that students primarily learn through grappling with the concepts and skills of a course while attempting to apply them. Simply seeing a demonstration or hearing a lecture by itself doesn’t do much in terms of learning. This is not to say that demonstrations and lectures don’t serve an important role: they set the stage for the learning to come, helping you to recognize the core ideas to focus on as you work. The work itself consists of applying ideas, practicing skills, and putting the concepts into your own words.

There is no shortcut to becoming a great programmer. Only by doing the work will you develop the skills and knowledge to make you a successful computer scientist. This course is built around that principle, and gives you ample opportunity to do the work, with as much support as we can offer.

Tutorials, Quizzes & Examples: Each module will include many tutorial assignments, quizzes, and examples that will take you step-by-step through using a particular concept or technique. The point is not simply to complete the example, but to practice the technique and coding involved. You will be expected to implement these techniques on your own in the milestone assignment of the module - so this practice helps prepare you for those assignments.

Programming Assignments: Throughout the semester you will be building several programming projects that explore the topics, data structures, and algorithms introduced in this class. Each programming project may include multiple tasks and an automated grading system.

In theory, each student begins the course with an A. As you submit work, you can either maintain your A (for good work) or chip away at it (for less adequate or incomplete work). In practice, each student starts with 0 points in the gradebook and works upward toward a final point total earned out of the possible number of points. In this course, each assignment constitutes a portion of the final grade, as detailed below:

Up to 5% of the total grade in the class is available as extra credit. See the Extra Credit - Bug Bounty & Extra Credit - Helping Hands assignments for details.

Letter grades will be assigned following the standard scale:

In this course, all work submitted by a student should be created solely by the student without any outside assistance beyond the instructor and TA/GTAs. Students may seek outside help or tutoring regarding concepts presented in the course, but should not share or receive any answers, source code, program structure, or any other materials related to the course. Learning to debug coding problems is a vital skill, and students should strive to ask good questions and perform their own research instead of just sharing broken source code when asking for assistance.

Read this late work policy very carefully! If you are unsure how to interpret it, please contact the instructors via email. Not understanding the policy does not mean that it won’t apply to you!

Since this course is entirely online, students may work at any time and at their own pace through the modules. However, to keep everyone on track, there will be approximately one module due each week. Each graded item in the module will have a specific due date specified. Any assignment submitted late will have that assignment’s grade reduced by 10% of the total possible points on that project for each day it is late. This penalty will be assessed automatically in the Canvas gradebook. For the purposes of record keeping, a combination of the time of a submission via Canvas and the creation of a release in GitHub will be used to determine if the assignment was submitted on time.

However, even if a module is not submitted on time, it must still be completed before a student is allowed to begin the next module. So, students should take care not to get too far behind, as it may be very difficult to catch up.

Finally, all course work must be submitted on or before the last day of the semester in which the student is enrolled in the course in order for it to be graded on time.

If you have extenuating circumstances, please discuss them with the instructor as soon as they arise so other arrangements can be made. If you find that you are getting behind in the class, you are encouraged to speak to the instructor for options to make up missed work.
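The late penalty described above (10% of the total possible points for each day late) can be sketched as a short calculation. This is only an illustration: the function name `late_adjusted_score` is hypothetical, and the choice to stop the score at zero is an assumption that the policy text does not state. The Canvas gradebook remains the authority on actual grades.

```python
def late_adjusted_score(earned, possible, days_late):
    """Apply a penalty of 10% of the possible points per day late.

    Assumption (not stated in the policy): the score never drops below 0.
    """
    # 10% of possible points for each day late, written as a division by 10
    penalty = possible * days_late / 10
    return max(0.0, earned - penalty)


# A 100-point project that earned 85 points but was submitted 2 days late
print(late_adjusted_score(85, 100, 2))  # prints 65.0
```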

Students should strive to complete this course in its entirety before the end of the semester in which they are enrolled. However, since retaking the course would be costly and repetitive for students, we would like to give students a chance to succeed with a little help rather than immediately fail students who are struggling.

If you are unable to complete the course in a timely manner, please contact the instructor to discuss an incomplete grade. Incomplete grades are given solely at the instructor’s discretion. See the official K-State Grading Policy for more information. In general, poor time management alone is not a sufficient reason for an incomplete grade.

Unless otherwise noted in writing on a signed Incomplete Agreement Form, the following stipulations apply to any incomplete grades given in Computational Core courses:

To participate in this course, students must have access to a modern web browser and broadband internet connection. All course materials will be provided via Canvas and Codio. Modules may also contain links to external resources for additional information, such as programming language documentation.

The details in this syllabus are not set in stone. Due to the flexible nature of this class, adjustments may need to be made as the semester progresses, though they will be kept to a minimum. If any changes occur, they will be posted on the Canvas page for this course and emailed to all students.

The statements below are standard syllabus statements from K-State and our program. The latest versions are available online here.

Kansas State University has an Honor and Integrity System based on personal integrity, which is presumed to be sufficient assurance that, in academic matters, one’s work is performed honestly and without unauthorized assistance. Undergraduate and graduate students, by registration, acknowledge the jurisdiction of the Honor and Integrity System. The policies and procedures of the Honor and Integrity System apply to all full and part-time students enrolled in undergraduate and graduate courses on-campus, off-campus, and via distance learning. A component vital to the Honor and Integrity System is the inclusion of the Honor Pledge which applies to all assignments, examinations, or other course work undertaken by students. The Honor Pledge is implied, whether or not it is stated: “On my honor, as a student, I have neither given nor received unauthorized aid on this academic work.” A grade of XF can result from a breach of academic honesty. The F indicates failure in the course; the X indicates the reason is an Honor Pledge violation.

For this course, a violation of the Honor Pledge will result in sanctions such as a 0 on the assignment or an XF in the course, depending on severity. Actively seeking unauthorized aid, such as posting lab assignments on sites such as Chegg or StackOverflow, or asking another person to complete your work, even if unsuccessful, will result in an immediate XF in the course.

This course assumes that all your course work will be done by you. Use of AI text and code generators such as ChatGPT and GitHub Copilot in any submission for this course is strictly forbidden unless explicitly allowed by your instructor. Any unauthorized use of these tools without proper attribution is a violation of the K-State Honor Pledge.

We reserve the right to use various platforms that can perform automatic plagiarism detection by tracking changes made to files and comparing submitted projects against other students’ submissions and known solutions. That information may be used to determine if plagiarism has taken place.

At K-State it is important that every student has access to course content and the means to demonstrate course mastery. Students with disabilities may benefit from services including accommodations provided by the Student Access Center. Disabilities can include physical, learning, executive functions, and mental health. You may register at the Student Access Center or to learn more contact:

Students already registered with the Student Access Center please request your Letters of Accommodation early in the semester to provide adequate time to arrange your approved academic accommodations. Once SAC approves your Letter of Accommodation it will be e-mailed to you, and your instructor(s) for this course. Please follow up with your instructor to discuss how best to implement the approved accommodations.

All student activities in the University, including this course, are governed by the Student Judicial Conduct Code as outlined in the Student Governing Association By Laws, Article V, Section 3, number 2. Students who engage in behavior that disrupts the learning environment may be asked to leave the class.

At K-State, faculty and staff are committed to creating and maintaining an inclusive and supportive learning environment for students from diverse backgrounds and perspectives. K-State courses, labs, and other virtual and physical learning spaces promote equitable opportunity to learn, participate, contribute, and succeed, regardless of age, race, color, ethnicity, nationality, genetic information, ancestry, disability, socioeconomic status, military or veteran status, immigration status, Indigenous identity, gender identity, gender expression, sexuality, religion, culture, as well as other social identities.

Faculty and staff are committed to promoting equity and believe the success of an inclusive learning environment relies on the participation, support, and understanding of all students. Students are encouraged to share their views and lived experiences as they relate to the course or their course experience, while recognizing they are doing so in a learning environment in which all are expected to engage with respect to honor the rights, safety, and dignity of others in keeping with the K-State Principles of Community.

If you feel uncomfortable because of comments or behavior encountered in this class, you may bring it to the attention of your instructor, advisors, and/or mentors. If you have questions about how to proceed with a confidential process to resolve concerns, please contact the Student Ombudsperson Office. Violations of the student code of conduct can be reported using the Code of Conduct Reporting Form. You can also report discrimination, harassment or sexual harassment, if needed.

This is our personal policy and not a required syllabus statement from K-State. It has been adapted from this statement from K-State Global Campus, and the Recurse Center Manual. We have adapted their ideas to fit this course.

Online communication is inherently different than in-person communication. When speaking in person, many times we can take advantage of the context and body language of the person speaking to better understand what the speaker means, not just what is said. This information is not present when communicating online, so we must be much more careful about what we say and how we say it in order to get our meaning across.

Here are a few general rules to help us all communicate online in this course, especially while using tools such as Canvas or Discord:

As a participant in course discussions, you should also strive to honor the diversity of your classmates by adhering to the K-State Principles of Community.

I am part of the SafeZone community network of trained K-State faculty/staff/students who are available to listen and support you. As a SafeZone Ally, I can help you connect with resources on campus to address problems you face that interfere with your academic success, particularly issues of sexual violence, hateful acts, or concerns faced by individuals due to sexual orientation/gender identity. My goal is to help you be successful and to maintain a safe and equitable campus.

Kansas State University is committed to maintaining academic, housing, and work environments that are free of discrimination, harassment, and sexual harassment. Instructors support the University’s commitment by creating a safe learning environment during this course, free of conduct that would interfere with your academic opportunities. Instructors also have a duty to report any behavior they become aware of that potentially violates the University’s policy prohibiting discrimination, harassment, and sexual harassment, as outlined by PPM 3010.

If a student is subjected to discrimination, harassment, or sexual harassment, they are encouraged to make a non-confidential report to the University’s Office for Institutional Equity (OIE) using the online reporting form. Incident disclosure is not required to receive resources at K-State. Reports that include domestic and dating violence, sexual assault, or stalking, should be considered for reporting by the complainant to the Kansas State University Police Department or the Riley County Police Department. Reports made to law enforcement are separate from reports made to OIE. A complainant can choose to report to one or both entities. Confidential support and advocacy can be found with the K-State Center for Advocacy, Response, and Education (CARE). Confidential mental health services can be found with Lafene Counseling and Psychological Services (CAPS). Academic support can be found with the Office of Student Life (OSL). OSL is a non-confidential resource. OIE also provides a comprehensive list of resources on their website. If you have questions about non-confidential and confidential resources, please contact OIE at equity@ksu.edu or (785) 532–6220.

Kansas State University is a community of students, faculty, and staff who work together to discover new knowledge, create new ideas, and share the results of their scholarly inquiry with the wider public. Although new ideas or research results may be controversial or challenge established views, the health and growth of any society requires frank intellectual exchange. Academic freedom protects this type of free exchange and is thus essential to any university’s mission.

Moreover, academic freedom supports collaborative work in the pursuit of truth and the dissemination of knowledge in an environment of inquiry, respectful debate, and professionalism. Academic freedom is not limited to the classroom or to scientific and scholarly research, but extends to the life of the university as well as to larger social and political questions. It is the right and responsibility of the university community to engage with such issues.

Kansas State University is committed to providing a safe teaching and learning environment for student and faculty members. In order to enhance your safety in the unlikely case of a campus emergency make sure that you know where and how to quickly exit your classroom and how to follow any emergency directives. Current Campus Emergency Information is available at the University’s Advisory webpage.

Kansas State University prohibits the possession of firearms, explosives, and other weapons on any University campus, with certain limited exceptions, including the lawful concealed carrying of handguns, as provided in the University Weapons Policy.

You are encouraged to take the online weapons policy education module to ensure you understand the requirements of the policy, including the requirements related to concealed carrying of handguns on campus. Students possessing a concealed handgun on campus must be lawfully eligible to carry and either at least 21 years of age or a licensed individual who is 18-21 years of age. All carrying requirements of the policy must be observed in this class, including but not limited to the requirement that a concealed handgun be completely hidden from view, securely held in a holster that meets the specifications of the policy, carried without a chambered round of ammunition, and that any external safety be in the “on” position.

If an individual carries a concealed handgun in a personal carrier such as a backpack, purse, or handbag, the carrier must remain within the individual’s exclusive and uninterrupted control. This includes wearing the carrier with a strap, carrying or holding the carrier, or setting the carrier next to or within the immediate reach of the individual.

During this course, you will be required to engage in activities, such as interactive examples or sharing work on the whiteboard, that may require you to separate from your belongings, and thus you should plan accordingly.

Each individual who lawfully possesses a handgun on campus shall be wholly and solely responsible for carrying, storing and using that handgun in a safe manner and in accordance with the law, Board policy and University policy. All reports of suspected violation of the weapons policy are made to the University Police Department by picking up any Emergency Campus Phone or by calling 785-532-6412.

K-State has many resources to help contribute to student success. These resources include accommodations for academics, paying for college, student life, health and safety, and others. Check out the Student Guide to Help and Resources: One Stop Shop for more information.

Student academic creations are subject to Kansas State University and Kansas Board of Regents Intellectual Property Policies. For courses in which students will be creating intellectual property, the K-State policy can be found at University Handbook, Appendix R: Intellectual Property Policy and Institutional Procedures (part I.E.). These policies address ownership and use of student academic creations.

Your mental health and good relationships are vital to your overall well-being. Symptoms of mental health issues may include excessive sadness or worry, thoughts of death or self-harm, inability to concentrate, lack of motivation, or substance abuse. Although problems can occur anytime for anyone, you should pay extra attention to your mental health if you are feeling academic or financial stress, discrimination, or have experienced a traumatic event, such as loss of a friend or family member, sexual assault or other physical or emotional abuse.

If you are struggling with these issues, do not wait to seek assistance.

For Kansas State Salina Campus:

For Global Campus/K-State Online:

K-State has a University Excused Absence policy (Section F62). Class absence(s) will be handled between the instructor and the student unless there are other university offices involved. For university excused absences, instructors shall provide the student the opportunity to make up missed assignments, activities, and/or attendance specific points that contribute to the course grade, unless they decide to excuse those missed assignments from the student’s course grade. Please see the policy for a complete list of university excused absences and how to obtain one. Students are encouraged to contact their instructor regarding their absences.

© The materials in this online course fall under the protection of all intellectual property, copyright and trademark laws of the U.S. The digital materials included here come with the legal permissions and releases of the copyright holders. These course materials should be used for educational purposes only; the contents should not be distributed electronically or otherwise beyond the confines of this online course. The URLs listed here do not suggest endorsement of either the site owners or the contents found at the sites. Likewise, mentioned brands (products and services) do not suggest endorsement. Students own copyright to what they create.

“On my honor, as a student, I have neither given nor received unauthorized aid on this academic work.” - K-State Honor Pledge

Plagiarism is a very serious concern in this course, and something that we do not take lightly. Computer programs and code are especially easy targets for plagiarism due to how easy it is to copy and manipulate code in such a way that it is unrecognizable as the original source but still performs correctly.

At its core, plagiarism is taking someone else’s work and passing it off as your own without giving appropriate credit to the original source. As a student at K-State, you are bound by the K-State Honor Code not to accept any unauthorized aid, and this includes plagiarized code.

When it comes to plagiarism in computer code, there is a fine line between using resources appropriately and copying code. In this program, you should strive to avoid plagiarism issues by doing the following:

In general, copying or adapting small pieces of code to perform auxiliary functions in the assignment is permitted. Copying or adapting code that is the general goal of the assignment should be avoided. For example, if the assignment is to create a bubble sort algorithm, you should write the algorithm from scratch yourself since that is the goal of the assignment. If the assignment is to create a program for displaying data that you feel should be sorted, you may choose to adapt an existing sorting algorithm for your needs (or use one from a library).

If you aren’t sure about whether it is OK to use an online resource or piece of code in this course, please contact the instructors using the course discussion forums or help email address. You will not get in trouble for asking, and it will help you determine what the best course of action is. Plagiarism can really only occur when you submit the assignment for grading, so you are welcome to ask for clarification or a judgement on whether a particular usage is acceptable at any time before you submit the assignment.

Codio has features that will compare your submissions against those of your fellow students. Any submissions with a high degree of similarity may be subjected to additional scrutiny by the instructors to determine if plagiarism has occurred.

In this course, any violation of the K-State Honor Code will result in a 0 on that assignment and a report made to the K-State Honor Council. A second violation will result in an XF in this course, as well as any additional sanctions imposed by the K-State Honor Council.

For more information on the K-State Honor & Integrity system, please visit their website, which is linked in the resources section below this video.

All the stuff you should know already!

Programming is the act of writing source code for a computer program in such a way that a modern computer can understand and perform the steps described in the code. There are many different programming languages that can be used, such as high-level languages like Java and Python.

To run code written in those languages, we can use a compiler to convert the code to a low-level language that can be directly executed by the computer, or we can use an interpreter to read the code and perform the requested operations on the computer.
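As a loose illustration of these two steps, the standard Python implementation does a bit of both: it first compiles source code into an intermediate form called bytecode (not machine code that the processor runs directly), and then an interpreter executes that bytecode. The sketch below uses Python's built-in `compile()` and `exec()` functions to make the two steps visible. The variable names and the `"<example>"` label are chosen only for this example.

```python
source = "result = 6 * 7"

# Step 1: compile the source text into a code object (Python bytecode)
code_obj = compile(source, "<example>", "exec")

# Step 2: have the interpreter execute the compiled code,
# storing any variables it creates in our own dictionary
namespace = {}
exec(code_obj, namespace)

print(namespace["result"])  # prints 42
```

Normally both steps happen automatically when we run a Python file, so we rarely need to call these functions ourselves.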

At this point, we have most likely written some programs already. This chapter will review the important aspects of our chosen programming language, giving us a solid basis to build upon. Hopefully most of this will be review, but there may be a few new terms or concepts introduced here as well.

In this course, we will primarily be learning different ways to store and manipulate data in our programs. Of course, we could do this using the source code of our chosen programming language, but in many cases that would defeat the purpose of learning how to do it ourselves!

Instead, we will use several different ways to represent the steps required to build our programs. Let’s review a couple of them now.

One of the simplest ways to describe a computer program is to simply write what it does using our preferred language, such as English. Of course, natural language can be very ambiguous, so we must be careful to make our written descriptions as precise as possible. So, it is a good idea to limit ourselves to simple, clear sentences that aren’t written as prose. It may seem a bit boring, but this is the best way to make sure our intent is completely understood.

A great example is a recipe for baking. Each step is written clearly and concisely, with enough descriptive words used to allow anyone to read and follow the directions.

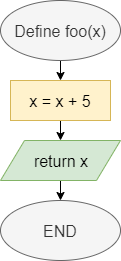

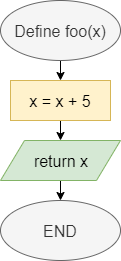

One method of representing computer programs is through the use of flowcharts. A flowchart consists of graphical blocks representing individual operations to be performed, connected with arrows which describe the flow of the program. The image above gives the basic building blocks of the flowcharts that will be used in this course. We will mostly follow the flowchart design used by the Flowgorithm program available online. The following pages in this chapter will introduce and discuss each block in detail.

We can also express our computer programs through the use of pseudocode. Pseudocode is an abstract language that resembles a high-level programming language, but it is written in such a way that it can be easily understood by any programmer who is familiar with any one of several common languages. The pseudocode may not be directly executable as written, but it should contain enough detail to be easily understood and adapted to an actual programming language by a skilled programmer.

There are many standards that exist for pseudocode, each with their own unique features and uses. In this course, we will mostly follow the standards from the International Baccalaureate Organization. In the following pages in this chapter, we’ll also introduce pseudocode for each of the flowchart blocks shown above.

Let’s discuss some of the basic concepts we need to understand about the Python programming language.

To begin, let’s look at a simple Hello World program written in Python:

    def main():
        print("Hello World!")

    # main guard
    if __name__ == "__main__":
        main()

This program contains multiple important parts:

- First, we define a function named main(). Python does not require us to do this, since we can write our code directly in the file and it will execute. However, since we are going to be building larger programs in this course, it is a good idea to start using functions now.
- A function definition in Python ends with a colon :, and then the code inside of that function comes directly after it. The code contained in the function must be indented a single level. By convention, Python files should use 4 spaces to indent the code. Thankfully, Codio does that for us automatically.
- Finally, the main guard at the bottom of the file calls the main() function to run the program.

Of course, this is a very brief overview of the Python programming language. To learn more, feel free to refer to the references listed below, as well as the textbook content for previous courses.

See if you can use the code above to write your own Hello World program in a file named HelloWorld.py. We’ll learn how to run that program on the next page.

Now that we’ve written our first Python program, we must run the program to see the fruits of our labors. There are many different ways to do this using the Codio platform. We’ll discuss each of them in detail here.

Codio includes a built-in Linux terminal, which allows us to perform actions directly on a command-line interface just like we would on an actual computer running Linux. We can access the Terminal in many ways:

Additionally, some pages may already open a terminal window for us in the left-hand pane, as this page so helpfully does. As we can see, we’re never very far away from a terminal.

No worries! We’ll give you everything you need to know to run your Python programs in this course.

If you’d like to learn a bit more about the Linux terminal and some of the basic commands, feel free to check out this great video on YouTube:

Let’s go to the terminal window and navigate to our program. When we first open the Terminal window, it should show us a prompt that looks somewhat like this one:

There is quite a bit of information there, but we’re interested in the last little bit of the last line, where it says ~/workspace. That is the current directory, or folder, our terminal is looking at, also known as our working directory. We can always find the full location of our working directory by typing the pwd command, short for “Print Working Directory,” in the terminal. Let’s try it now!

Enter this command in the terminal:

    pwd

and we should see output similar to this:

In that output, we’ll see that the full path to our working directory is /home/codio/workspace. This is the default location for all of our content in Codio, and it’s where everything shown in the file tree to the far left is stored. When working in Codio, we’ll always want to store our work in this directory.

Next, let’s use the ls command, short for “LiSt,” to see a list of all of the items in that directory:

    ls

We should see a whole list of items appear in the terminal. Most of them are directories containing examples for the chapters in this textbook, including the HelloWorld.py file that we edited on the last page. Thankfully, the directories are named in a very logical way, making it easy for us to find what we need. For example, to find the directory for Chapter 1 that contains examples for Python, look for the directory with the name starting with 1p. In this case, it would be 1p-hello.

Finally, we can use the cd command, short for “Change Directory,” to change the working directory. To change to the 1p-hello directory, type cd into the terminal window, followed by the name of that directory:

    cd 1p-hello

We are now in the 1p-hello directory, as we can see by observing the ~/workspace/1p-hello on the current line in the terminal. We can run the ls command again to see the files in that directory:

    ls

We should see our HelloWorld.py file! If it doesn’t appear, try using this command to get to the correct directory: cd /home/codio/workspace/1p-hello.
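The same three navigation steps have counterparts inside Python itself. The sketch below is illustrative only: it does not depend on Codio, it builds a temporary stand-in for the 1p-hello directory, and it uses os.getcwd(), os.chdir(), and os.listdir() from the standard library in place of pwd, cd, and ls. The helper name navigate_like_terminal is hypothetical.

```python
import os
import tempfile


def navigate_like_terminal(target):
    """Mirror pwd, cd, and ls using Python's os module."""
    start = os.getcwd()                    # like `pwd`
    os.chdir(target)                       # like `cd target`
    try:
        here = os.getcwd()                 # `pwd` again, now inside target
        names = sorted(os.listdir("."))    # like `ls`
    finally:
        os.chdir(start)                    # return to where we began
    return here, names


def main():
    # A throwaway directory stands in for 1p-hello so the sketch runs anywhere.
    with tempfile.TemporaryDirectory() as workspace:
        project = os.path.join(workspace, "1p-hello")
        os.mkdir(project)
        with open(os.path.join(project, "HelloWorld.py"), "w") as source:
            source.write('print("Hello World!")\n')
        here, names = navigate_like_terminal(project)
        print(here)
        print(names)


if __name__ == "__main__":
    main()
```

For everyday work in this course the terminal commands are all we need; the Python versions simply show that a working directory is a concept our programs can see too.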

Once we’re at the point where we can see the HelloWorld.py file, we can move on to actually running the program.

To run it, we just need to type the following in the terminal:

    python3 HelloWorld.py

That’s all there is to it! We’ve now successfully run our first Python program. Of course, we can run the program as many times as we want by repeating the previous python3 command. If we make changes to the HelloWorld.py file that instruct the computer to do something different, we should see those changes the next time we run the file.

If the python3 command doesn’t give you any output, or gives you an error message, that most likely means that your code has an error in it. Go back to the previous page and double-check that the contents of HelloWorld.py exactly match what is shown at the bottom of the page. You can also read the error message output by python3 to determine what might be going wrong in your file.

Also, make sure you use the python3 command and not just python. The python3 command references the newer Python 3 interpreter, while the python command is used for the older Python 2 interpreter. In this book, we’ll be using Python 3, so you’ll need to always make sure you use python3 when you run your code.
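If we are ever unsure which interpreter ran our file, the program can report it. This is a minimal sketch using the standard sys module; the helper name is_python3 is made up for illustration.

```python
import sys


def is_python3(version_info=sys.version_info):
    """Return True when the given interpreter version is Python 3 or newer."""
    return version_info[0] >= 3


def main():
    # sys.version starts with the version number, such as 3.x.y
    print("Running Python", sys.version.split()[0])
    if not is_python3():
        print("Please run this file with python3 instead of python.")


if __name__ == "__main__":
    main()
```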

We’ll cover information about simple debugging steps on the next page as well. If you get stuck, now is a great time to go to the instructors and ask for assistance. You aren’t in this alone!

See if you can change the HelloWorld.py file to print out a different message. Once you’ve changed it, use the python3 command to run the file again. Make sure you see the correct output!

Last, but not least, many of the Codio tutorials and projects in this program will include assessments that we must solve by writing code. Codio can then automatically run the program and check for specific things, such as the correct output, in order to give us a grade. For most of these questions, we’ll be able to make changes to our code as many times as we’d like to get the correct answer.

As we can see, there are many different ways to run our code using Codio. Feel free to use any of these methods throughout this course.

Codio also includes an integrated debugger, which is very helpful when we want to determine if there is an error in our code. We can also use the debugger to see what values are stored in each variable at any point in our program.

To use the debugger, find the Debug Menu at the top of the Codio window. It is to the right of the Run Menu we’ve already been using. On that menu, we should see an option for Python - Debug File. Select that option to run our program in the Codio debugger.

As we build more complex programs in this course, we’ll be able to configure our own debugger configurations that allow us to test multiple files and operations.

The Codio debugger only works with input from a file, not from the terminal. So, to use the debugger, we’ll need to make sure the input we’d like to test is stored in a file, such as input.txt, before debugging. We can then give that file as an argument to our program in our debugger configuration, and write our program to read input from a file if one is provided as an argument.
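One possible way to structure such a program is sketched below. It assumes the input file name arrives as the first command-line argument; the function name read_lines and the sample file contents are hypothetical, and the debugger configuration itself is set up separately in Codio.

```python
import os
import sys
import tempfile


def read_lines(argv):
    """Return input lines from the file named in argv[1], else from the terminal."""
    if len(argv) > 1:
        # A file name was supplied, for example by a debugger configuration.
        with open(argv[1]) as source:
            return source.read().splitlines()
    # No file name: fall back to whatever is typed into the terminal.
    return sys.stdin.read().splitlines()


def main():
    # Demonstration only: write a small input.txt, then read it back the same
    # way the program would if the file were passed as an argument.
    with tempfile.TemporaryDirectory() as folder:
        path = os.path.join(folder, "input.txt")
        with open(path, "w") as output:
            output.write("3\n4\n")
        print(read_lines(["program.py", path]))


if __name__ == "__main__":
    main()
```

In a real program, main() would call read_lines(sys.argv) so that the same code works both in the debugger (with a file) and in the terminal (without one).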

Learning how to use a debugger is a hands-on process, and is probably best described in a video. You can find more information in the Codio documentation to get up to speed on working in the Codio debugger.

Codio Documentation - Debugger

We can always use the debugger to help us find problems in our code.

Codio now includes support for Python Tutor, allowing us to visualize what is happening in our code. We can see that output in the second tab that is open to the left.

Unfortunately, students are not able to open the visualizer directly, so it must be configured by an instructor in the Codio lesson. If you find a page in this textbook where you’d like to be able to visualize your code, please let us know!

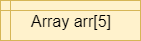

A variable in a programming language is an abstraction that stores one value at any given instant, though that value can change as the program executes. A variable can be represented as a box holding a value. If the variable is a container, e.g., a list (or array or vector), a matrix, a tuple, or a set of values, each box in the container contains a single value.

A variable is characterized by:

- A name, such as results, number_of_nodes, or number_of_edges. To write variable names composed of two or more words in Python, we can use underscores to separate the words.

Depending on the programming language, we could also specify for a variable:

A programming language allows us to perform two basic operations with a variable:

- Arithmetic operators, such as addition +, and subtraction -. They allow performing basic arithmetic operations with numbers.
- Relational operators, such as less than <, and greater than >. Usually, they allow comparing two operands, each of which could be a variable. The result of the comparison is either the Boolean value true or the Boolean value false.
- Logical operators, such as and, or, and not. These operators allow us to relate logical conditions together to create more complex statements.
- String operators, such as using + to concatenate the string “Hello ” (with a trailing space) and the string “world” to produce the string “Hello world”. These operators allow us to manipulate strings.
- Assignment, such as a = b.

The table below lists the flowchart blocks used to represent variables, as well as the corresponding pseudocode:

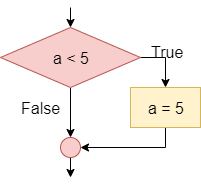

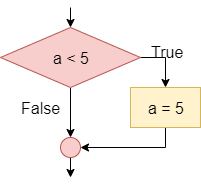

| Operation | Flowchart | Pseudocode |
|---|---|---|
| Declare | | X = 0 |
| Assign | | X = 5 |
| Declare & Assign | | X = 5 |

Notice that variables must be assigned a value when declared in pseudocode. By default, many programming languages automatically assign the value $0$ to a new integer variable, so we’ll use that value in our pseudocode as well.

Likewise, variables in a flowchart are given a type, whereas variables in pseudocode are not. Instead, the data type of those variables can be inferred by the values stored in them.

Variables in Python are simply defined by giving them a value. The type of the variable is inferred from the data stored in it at any given time, and a variable’s type may change throughout the program as different values are assigned to it.

To define a variable, we can simply use an assignment statement to give it a value:

    x = 5
    y = 3.5

We can also convert, or cast, data between different types. When we do this, the results may vary a bit due to how computers store and calculate numbers. So, it is always best to fully test any code that casts data between data types to make sure it works as expected.

To cast, we can simply use the new type as a function and place the value to be converted in parentheses:

    x = 1.5
    y = int(x)

This will convert the floating point value stored in x to an integer value stored in y.
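To see inferred types and casting side by side, here is a short sketch. The helper type_name is a hypothetical convenience built on Python's type() function. Note that int() truncates toward zero rather than rounding, which is one reason to test casts carefully.

```python
def type_name(value):
    """Return the name of the type Python inferred for a value."""
    return type(value).__name__


def main():
    x = 5
    print(type_name(x))    # int
    x = 3.5                # the same variable now holds a float
    print(type_name(x))    # float

    y = int(1.5)           # int() discards the fractional part
    print(y)               # 1
    print(int(-1.5))       # -1, since truncation is toward zero
    print(float(2))        # 2.0
    print(int("42") + 1)   # 43, after converting the string to an integer


if __name__ == "__main__":
    main()
```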

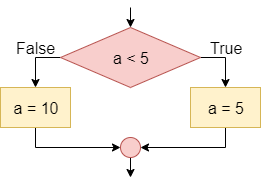

The conditional statement, also known as the If-Then statement, is used to control the program’s flow by checking the value of a Boolean statement and determining if a block of code should be executed based on that value. This is the simplest conditional instruction. If the condition is true, the block enclosed within the statement is executed. If it is false, then the code in the block is skipped.

A more advanced conditional statement, the If-Then-Else or If-Else statement, includes two blocks. The first block will be executed if the Boolean statement is true. If the Boolean statement is false, then the second block of code will be executed instead.
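In Python, the two forms described above might look like the following sketch. The function names and messages are invented for illustration.

```python
def describe_number(x):
    """If-Then-Else: exactly one of the two blocks runs."""
    if x < 0:
        return "negative"
    else:
        return "zero or positive"


def collect_messages(x):
    """If-Then: the indented block runs only when the condition is true."""
    messages = []
    if x < 0:
        messages.append("x is negative")
    messages.append("done")  # not part of the if block, so it always runs
    return messages


def main():
    print(describe_number(-3))    # negative
    print(describe_number(7))     # zero or positive
    print(collect_messages(-3))   # ['x is negative', 'done']
    print(collect_messages(7))    # ['done']


if __name__ == "__main__":
    main()
```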

Simple conditions are obtained by means of the relational operators, such as <, >, and ==, which allow you to compare two elements, such as two numbers, or a variable and a number, or two variables. Compound conditions are obtained by composing two or more simple conditions through the logical operators and, or, and not.

Recall that the Boolean logic operators and, or, and not can be used to construct more complex Boolean logic statements.

For example, consider the statement x <= 5. This could be broken down into two statements, combined by the or operation: x < 5 or x == 5. The table below, called a truth table, gives the result of the or operation based on the values of the two operands:

| Operand 1 | Operand 2 | Operand 1 or Operand 2 |
|---|---|---|
| False | False | False |
| False | True | True |
| True | False | True |
| True | True | True |

As shown above, the result of the or operation is True if at least one of the operands is True.
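We can confirm both the truth table and the x <= 5 decomposition with a few lines of Python. This is only a sketch; the function name at_most_five and the range of test values are arbitrary choices.

```python
def at_most_five(x):
    """Express x <= 5 as two simple conditions joined by or."""
    return x < 5 or x == 5


def main():
    # Print each row of the truth table for the or operation.
    for first in (False, True):
        for second in (False, True):
            print(first, second, first or second)

    # The compound condition agrees with x <= 5 for every value tried.
    print(all(at_most_five(x) == (x <= 5) for x in range(-10, 11)))  # True


if __name__ == "__main__":
    main()
```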

Likewise, to express the mathematical condition 3 < a < 5 we can use the logical operator and by dividing the mathematical condition into two logical conditions: a > 3 and a < 5. The table below gives the result of the and operation based on the values of the two operands:

| Operand 1 | Operand 2 | Operand 1 and Operand 2 |
|---|---|---|
| False | False | False |
| False | True | False |
| True | False | False |
| True | True | True |

As shown above, the result of the and operation is True if both of the operands are True.
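The range condition 3 < a < 5 can be sketched the same way. The function name strictly_between and its default bounds are hypothetical. Python also happens to accept the chained form 3 < a < 5 directly, so the sketch checks that both spellings agree.

```python
def strictly_between(a, low=3, high=5):
    """Express low < a < high as two simple conditions joined by and."""
    return a > low and a < high


def main():
    # Print each row of the truth table for the and operation.
    for first in (False, True):
        for second in (False, True):
            print(first, second, first and second)

    print(strictly_between(4))   # True
    print(strictly_between(5))   # False, because 5 < 5 is false

    # The chained comparison gives the same answer for every value tried.
    print(all(strictly_between(a) == (3 < a < 5) for a in range(0, 9)))  # True


if __name__ == "__main__":
    main()
```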