Homepage

CC 315 Textbook

This is the textbook for CC 315.


This page is the main page for the introductory material.

Instructor: Safia Malallah (safia AT ksu DOT edu). I use she/her pronouns. If you need assistance, please send an email to safia @ ksu.edu, cc’ing cis115-help@KSUemailProd.onmicrosoft.com. Please use email rather than the Canvas email function; emailing this way ensures that your message reaches all instructors for this course, guaranteeing a swifter response. You can expect a response by the end of the next business day; feel free to reach out again if you don’t receive a response within 24 business hours.

Office: DUE 2161

Virtual Office Hours: Schedule a meeting with me at https://calendly.com/safiamalallah (appointments are held via Zoom).

Advanced data structures and related algorithms. Formal software development methods and software engineering fundamentals. Introduction to requirements analysis processes that provide the specification of algorithmic requirements.

This course introduces advanced data structures, such as trees, graphs, and heaps. Several new algorithms using these data structures are covered. Students also learn software development methods and software engineering fundamentals and use those skills to develop projects of increasing size and scope effectively.

This course is taught 100% online, and each module is self-paced. There may be some bumps in the road as we refine the overall course structure. Students will work at their own pace through a set of modules, with approximately one module due each week. Material will be provided in the form of recorded videos, online tutorials, links to online resources, and discussion prompts. Each module will include a coding project or assignment, many of which will be graded automatically through Codio. Assignments may also include portions that will be graded manually via Canvas or other tools.

The assignments for this course are completed in the Python programming language.

All activities are individual effort. ‘Group’ work is not permitted.

Students are granted the opportunity to

You are expected to use the Codio editor. It is deliberately feature-poor, to place the burden of programming syntax, vocabulary, and logic flow on the student. DO NOT CUT AND PASTE into the editor while coding your projects.

Exception: you may cut and paste from within Codio, for example if you accidentally delete the starter code or want to modify a section of code you wrote in the tutorial. INCLUDE a comment stating where you ‘sourced’ the pasted section, as in this example:

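```python
import sys

# from Codio tutorial 3.4.P.7
try:
    # Read every line of the file named on the command line
    with open(sys.argv[1]) as input_file:
        reader = input_file.readlines()
except (IndexError, OSError):
    # No file argument (or the file can't be opened): read from standard input instead
    reader = sys.stdin
```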

In theory, each student begins the course with an A. As you submit work, you can either maintain your A (for good work) or chip away at it (for less adequate or incomplete work). In practice, each student starts with 0 points in the gradebook and works upward toward a final point total earned out of the possible number of points. In this course, each assignment constitutes a portion of the final grade, as detailed below:

Up to 5% of the total grade in the class is available as extra credit. See the Extra Credit - Bug Bounty assignment for details.

Letter grades will be assigned following the standard scale:

The Projects you submit in Codio may have both an automatic and manual grading component.

Automatic Components: Codio automatically checks certain aspects of your code’s structure and functionality. The grade assigned by Codio is generally the ceiling of the score you can receive.

Manual Components: Once submitted, your code may be reviewed, and deductions may be taken for:

Forbidden statements/Required statements: Some assignments may prohibit the use of certain built-in functions and methods. Others may require the use of certain libraries and methods. Using prohibited statements and/or skipping required statements will result in a ZERO for the project.

Cutting and Pasting into the Codio IDE (Project assignments): You are expected to do your work in Codio. Cutting and pasting from outside Codio is prohibited, may result in a zero, and will trigger a closer plagiarism review.

Running from the terminal: Codio does not typically check for proper terminal input behavior. A project that does not compile and/or run from the terminal will receive a 25% reduction in its final score. The project should work for the sample inputs and should not crash or print extraneous information.

Having extra methods/missing required methods: up to 10% deduction

Style Guide: see Pages

Read this late work policy very carefully! If you are unsure how to interpret it, please contact the instructors via the help email. Not understanding the policy does not mean that it won’t apply to you!

Since this course is entirely online, students may work at any time and at their own pace through the modules. However, to keep everyone on track, there will be approximately one module due each week. Each graded item in the module will have a specific due date. All of the components of a module will be subject to the late policy if the module is submitted late. This penalty will be assessed via a single separate assignment entry in the gradebook, containing the sum of all grade reductions in the course for that student. Late assignments will not be accepted if they are submitted more than 3 days after the due date, unless a special reason is provided and an extension has been approved before the due date.

For the purposes of recordkeeping, the submission time of the confirmation quiz in each module will be used to establish the completion time of the entire module in case of a discrepancy. This is because Codio may update submission times when assignments are regraded, but the quiz in Canvas should only be completed once.

However, even if a module is not submitted on time, it must still be completed before a student is allowed to begin the next module. So, students should take care not to get too far behind, as it may be very difficult to catch up.

Finally, all course work must be submitted on or before the last day of the semester in which the student is enrolled in the course in order for it to be graded on time.

If you have extenuating circumstances, please discuss them with the instructor as soon as they arise so other arrangements can be made. If you find that you are getting behind in the class, you are encouraged to speak to the instructor for options to make up missed work.

Students should strive to complete this course in its entirety before the end of the semester in which they are enrolled. However, since retaking the course would be costly and repetitive for students, we would like to give students a chance to succeed with a little help rather than immediately fail students who are struggling.

If you are unable to complete the course in a timely manner, please contact the instructor to discuss an incomplete grade. Incomplete grades are given solely at the instructor’s discretion. See the official K-State Grading Policy for more information. In general, poor time management alone is not a sufficient reason for an incomplete grade.

Unless otherwise noted in writing on a signed Incomplete Agreement Form, the following stipulations apply to any incomplete grades given in Computational Core courses:

All graded work is individual effort. You are authorized to use:

- The course’s materials and direct web links from this course
- The appropriate language’s documentation (https://docs.python.org/3/ or https://docs.oracle.com/javase/)
- Email help from CC instructors and GTAs received through the 315 help email
- Zoom/in-person help received from instructors or GTAs
- The ACM help session (an on-campus-only resource), most Tuesdays in EH 1116 at 6:30 PM
- Tutors from the Academic Assistance Center or provided by K-State Athletics

Use of online solutions, whether for reference or code, is prohibited. Use of previous semesters’ answers, whether your own or another student’s, is prohibited. Use of code-completion/suggestion tools, other than those we have installed in the Codio editor, is prohibited.

CC-315 is a three-hour course; most students find it more time-consuming than CC-310.

Modules have been assigned so that the average amount of work (hours), based on past student performance, is somewhat consistent.

You may work ahead, but modules must be worked strictly in order. Also, if you are far ahead, it may take a bit longer to get help. We review the lessons every week so that the support you get is not ‘too advanced’; if you are pretty far ahead, we will need to ‘catch up’ to where you are to provide support.

Please note that modules are not equally weighted; some are worth far more than others.

To participate in this course, students must have access to a modern web browser and broadband internet connection. All course materials will be provided via Canvas and Codio. Modules may also contain links to external resources for additional information, such as programming language documentation.

The details in this syllabus are not set in stone. Due to the flexible nature of this class, adjustments may need to be made as the semester progresses, though they will be kept to a minimum. If any changes occur, the changes will be posted on the Canvas page for this course and emailed to all students.

The statements below are standard syllabus statements from K-State and our program. The latest versions are available online here.

Kansas State University has an Honor and Integrity System based on personal integrity, which is presumed to be sufficient assurance that, in academic matters, one’s work is performed honestly and without unauthorized assistance. Undergraduate and graduate students, by registration, acknowledge the jurisdiction of the Honor and Integrity System. The policies and procedures of the Honor and Integrity System apply to all full and part-time students enrolled in undergraduate and graduate courses on-campus, off-campus, and via distance learning. A component vital to the Honor and Integrity System is the inclusion of the Honor Pledge which applies to all assignments, examinations, or other course work undertaken by students. The Honor Pledge is implied, whether or not it is stated: “On my honor, as a student, I have neither given nor received unauthorized aid on this academic work.” A grade of XF can result from a breach of academic honesty. The F indicates failure in the course; the X indicates the reason is an Honor Pledge violation.

For this course, a violation of the Honor Pledge will result in sanctions such as a 0 on the assignment or an XF in the course, depending on severity. Actively seeking unauthorized aid, such as posting lab assignments on sites such as Chegg or StackOverflow, or asking another person to complete your work, even if unsuccessful, will result in an immediate XF in the course.

This course assumes that all your course work will be done by you. Use of AI text and code generators such as ChatGPT and GitHub Copilot in any submission for this course is strictly forbidden unless explicitly allowed by your instructor. Any unauthorized use of these tools without proper attribution is a violation of the K-State Honor Pledge.

We reserve the right to use various platforms that can perform automatic plagiarism detection by tracking changes made to files and comparing submitted projects against other students’ submissions and known solutions. That information may be used to determine if plagiarism has taken place.

At K-State it is important that every student has access to course content and the means to demonstrate course mastery. Students with disabilities may benefit from services including accommodations provided by the Student Access Center. Disabilities can include physical, learning, executive functions, and mental health. You may register at the Student Access Center or to learn more contact:

Students already registered with the Student Access Center should request their Letters of Accommodation early in the semester to allow adequate time to arrange approved academic accommodations. Once SAC approves your Letter of Accommodation, it will be e-mailed to you and your instructor(s) for this course. Please follow up with your instructor to discuss how best to implement the approved accommodations.

All student activities in the University, including this course, are governed by the Student Judicial Conduct Code as outlined in the Student Governing Association By Laws, Article V, Section 3, number 2. Students who engage in behavior that disrupts the learning environment may be asked to leave the class.

At K-State, faculty and staff are committed to creating and maintaining an inclusive and supportive learning environment for students from diverse backgrounds and perspectives. K-State courses, labs, and other virtual and physical learning spaces promote equitable opportunity to learn, participate, contribute, and succeed, regardless of age, race, color, ethnicity, nationality, genetic information, ancestry, disability, socioeconomic status, military or veteran status, immigration status, Indigenous identity, gender identity, gender expression, sexuality, religion, culture, as well as other social identities.

Faculty and staff are committed to promoting equity and believe the success of an inclusive learning environment relies on the participation, support, and understanding of all students. Students are encouraged to share their views and lived experiences as they relate to the course or their course experience, while recognizing they are doing so in a learning environment in which all are expected to engage with respect to honor the rights, safety, and dignity of others in keeping with the K-State Principles of Community.

If you feel uncomfortable because of comments or behavior encountered in this class, you may bring it to the attention of your instructor, advisors, and/or mentors. If you have questions about how to proceed with a confidential process to resolve concerns, please contact the Student Ombudsperson Office. Violations of the student code of conduct can be reported using the Code of Conduct Reporting Form. You can also report discrimination, harassment or sexual harassment, if needed.

This is our personal policy and not a required syllabus statement from K-State. It has been adapted from this statement from K-State Global Campus, and the Recurse Center Manual. We have adapted their ideas to fit this course.

Online communication is inherently different from in-person communication. When speaking in person, we can often take advantage of the context and body language of the person speaking to better understand what the speaker means, not just what is said. This information is not present when communicating online, so we must be much more careful about what we say and how we say it in order to get our meaning across.

Here are a few general rules to help us all communicate online in this course, especially while using tools such as Canvas or Discord:

As a participant in course discussions, you should also strive to honor the diversity of your classmates by adhering to the K-State Principles of Community.

Kansas State University is committed to maintaining academic, housing, and work environments that are free of discrimination, harassment, and sexual harassment. Instructors support the University’s commitment by creating a safe learning environment during this course, free of conduct that would interfere with your academic opportunities. Instructors also have a duty to report any behavior they become aware of that potentially violates the University’s policy prohibiting discrimination, harassment, and sexual harassment, as outlined by PPM 3010.

If a student is subjected to discrimination, harassment, or sexual harassment, they are encouraged to make a non-confidential report to the University’s Office for Institutional Equity (OIE) using the online reporting form. Incident disclosure is not required to receive resources at K-State. Reports that include domestic and dating violence, sexual assault, or stalking, should be considered for reporting by the complainant to the Kansas State University Police Department or the Riley County Police Department. Reports made to law enforcement are separate from reports made to OIE. A complainant can choose to report to one or both entities. Confidential support and advocacy can be found with the K-State Center for Advocacy, Response, and Education (CARE). Confidential mental health services can be found with Lafene Counseling and Psychological Services (CAPS). Academic support can be found with the Office of Student Life (OSL). OSL is a non-confidential resource. OIE also provides a comprehensive list of resources on their website. If you have questions about non-confidential and confidential resources, please contact OIE at equity@ksu.edu or (785) 532–6220.

Kansas State University is a community of students, faculty, and staff who work together to discover new knowledge, create new ideas, and share the results of their scholarly inquiry with the wider public. Although new ideas or research results may be controversial or challenge established views, the health and growth of any society requires frank intellectual exchange. Academic freedom protects this type of free exchange and is thus essential to any university’s mission.

Moreover, academic freedom supports collaborative work in the pursuit of truth and the dissemination of knowledge in an environment of inquiry, respectful debate, and professionalism. Academic freedom is not limited to the classroom or to scientific and scholarly research, but extends to the life of the university as well as to larger social and political questions. It is the right and responsibility of the university community to engage with such issues.

Kansas State University is committed to providing a safe teaching and learning environment for students and faculty members. In order to enhance your safety in the unlikely case of a campus emergency, make sure that you know where and how to quickly exit your classroom and how to follow any emergency directives. Current Campus Emergency Information is available at the University’s Advisory webpage.

K-State has many resources to help contribute to student success. These resources include accommodations for academics, paying for college, student life, health and safety, and others. Check out the Student Guide to Help and Resources: One Stop Shop for more information.

Student academic creations are subject to Kansas State University and Kansas Board of Regents Intellectual Property Policies. For courses in which students will be creating intellectual property, the K-State policy can be found at University Handbook, Appendix R: Intellectual Property Policy and Institutional Procedures (part I.E.). These policies address ownership and use of student academic creations.

Your mental health and good relationships are vital to your overall well-being. Symptoms of mental health issues may include excessive sadness or worry, thoughts of death or self-harm, inability to concentrate, lack of motivation, or substance abuse. Although problems can occur anytime for anyone, you should pay extra attention to your mental health if you are feeling academic or financial stress, discrimination, or have experienced a traumatic event, such as loss of a friend or family member, sexual assault or other physical or emotional abuse.

If you are struggling with these issues, do not wait to seek assistance.

For Kansas State Salina Campus:

For Global Campus/K-State Online:

K-State has a University Excused Absence policy (Section F62). Class absence(s) will be handled between the instructor and the student unless there are other university offices involved. For university excused absences, instructors shall provide the student the opportunity to make up missed assignments, activities, and/or attendance specific points that contribute to the course grade, unless they decide to excuse those missed assignments from the student’s course grade. Please see the policy for a complete list of university excused absences and how to obtain one. Students are encouraged to contact their instructor regarding their absences.

© The materials in this online course fall under the protection of all intellectual property, copyright and trademark laws of the U.S. The digital materials included here come with the legal permissions and releases of the copyright holders. These course materials should be used for educational purposes only; the contents should not be distributed electronically or otherwise beyond the confines of this online course. The URLs listed here do not suggest endorsement of either the site owners or the contents found at the sites. Likewise, mentioned brands (products and services) do not suggest endorsement. Students own copyright to what they create.

This page is the main page for the Strings and StringBuilders Section


In CC310 we covered various data structures: stacks, sets, lists, queues, and hash tables. When we looked at these structures, we considered how to access elements within them, how we would create our own implementation of each structure, and which tasks each structure is well suited or ill suited for. Throughout this course we will introduce and implement a variety of data structures as we did in CC310.

We begin this course with an often overlooked structure: strings. By the end of this chapter, we will understand how strings are data structures, how we access elements, what types of tasks are appropriate for strings, and how we can improve on strings in our code.

In many data science positions, programmers work with text-based data. Examples include DNA sequences in biology, social media posts for sentiment classification, citation networks in online libraries, and data analytics for many other types of businesses. Strings are currently used to build word embeddings, which capture how similar or dissimilar words are to one another. One industry-leading tool for this application is TensorFlow for Python, which generated the image below.

As an immense oversimplification, word embeddings are created by reading in a large amount of text and then using machine learning algorithms to determine how similar words are based on the words that surround each one. This impacts general users like us in search engines, streaming services, dating applications, and much more! For example, if you were to search for "Python," your results may include topics referring to the coding language, the reptile, the comedy troupe, and more. When we use machine learning to determine word meanings, it is important that the data is first parsed correctly and stored efficiently so that we can access elements as needed. Understanding how to work with strings, and the problems that can arise with them, is important to using text successfully.

Reference: https://projector.tensorflow.org/

Strings are data structures that store an ordered sequence of characters. Recall that a character can be a letter, number, symbol, punctuation mark, or white space. Strings can contain any number and any combination of these, so a string can be a single character, a word, a sentence, or even more.

Let’s refresh ourselves on how strings work, starting with the example string: s = "Go Cats!".

| Character | G | o | (space) | C | a | t | s | ! |
|---|---|---|---|---|---|---|---|---|
| Index | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 |

As with other data structures, we can access elements within the string itself. Using the example above, s[0] contains the character 'G', s[1] contains the character 'o', s[2] contains the space character ' ', and so on.

We can determine the size of a string with a length function (len() in Python); in our example, the length of s is 8. It is also important to note that, when dealing with strings, null (None in Python) is not equivalent to the empty string "". For s = "", the length of s is 0. However, for s = null, trying to get the length of s results in an error, because null has no length.

On the surface, we can also add or append to strings, though it is not that simple behind the scenes. Strings are immutable: changes never happen directly to an existing string, so when appending or inserting, the code creates a new string to hold the result. More concisely, the state of an immutable object cannot change.

We cannot assign elements of a string directly, and more broadly, we cannot assign elements of any immutable object. For example, if we wanted the string from our example to be 'Go Cat!!', we cannot assign the element with s[6] = '!'. In Python, this raises a TypeError because str objects do not support item assignment.

For example, consider the string s = 'abc'. If we then write s = s + '123' in code, a new place in memory is created for the new value of s. We can verify this in Python with the id() function.
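A minimal Python sketch of the indexing described above, using the same string s = "Go Cats!". The built-in enumerate() pairs each index with the character stored there, reproducing the Character/Index table; the variable names are illustrative only.

```python
s = "Go Cats!"

# Access individual characters by index (indices start at 0).
first = s[0]    # 'G'
second = s[1]   # 'o'
third = s[2]    # ' ' (the space is a character too)

# Walk the whole string, pairing each index with its character.
for index, char in enumerate(s):
    print(index, repr(char))
```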
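In Python, the role of null is played by None. This short sketch, with illustrative variable names, contrasts an empty string (a real string of length 0) with None, which has no length at all.

```python
empty = ""
missing = None  # Python's counterpart to null

print(len("Go Cats!"))   # 8
print(len(empty))        # 0
print(empty == missing)  # False: an empty string is still a string

try:
    len(missing)
except TypeError as err:
    # CPython reports: object of type 'NoneType' has no len()
    error_message = str(err)
    print("TypeError:", error_message)
```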
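A short Python sketch of this behavior. Slicing is just one way to produce 'Go Cat!!'; the point is that the result is a brand-new string, while s itself is left unchanged.

```python
s = "Go Cats!"

try:
    s[6] = "!"  # attempt to change 's' to '!' in place
except TypeError as err:
    error_message = str(err)  # "'str' object does not support item assignment"
    print("TypeError:", error_message)

# Because s cannot change, build a new string from slices of it instead.
new_s = s[:6] + "!" + s[7:]
print(new_s)  # Go Cat!!
print(s)      # Go Cats!  (the original string is untouched)
```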

```python
s = 'abc'
print(id(s))
# Output: 140240213073680  (may be different on your personal device)

s = s + '123'
print(id(s))
# Output: 140239945470168
```

While on the surface it appears that we are working with the same variable, s now refers to a different object in memory. There are many other immutable data types, as well as mutable data types.

On the topic of immutability, we can also discuss the converse: mutable objects. A mutable object is one whose state can change. In CC310, we often worked with arrays to implement various data structures. Arrays are mutable, so as we add, change, or remove elements from an array, the array changes its state to accommodate the change rather than being copied to a new location in memory.
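A minimal Python sketch, assuming a Python list stands in for the array: we add, change, and remove elements, and id() shows the list is still the same object afterward.

```python
arr = [10, 20, 30]   # a Python list plays the role of an array here
original_id = id(arr)

arr.append(40)   # add an element
arr[0] = 5       # change an element
arr.remove(20)   # remove an element

print(arr)                     # [5, 30, 40]
print(id(arr) == original_id)  # True: same object, new state
```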

| Data Type | Immutable? |
|---|---|
| Lists | ☐ |
| Sets | ☐ |
| Byte Arrays | ☐ |
| Dictionaries | ☐ |
| Strings | ☑ |
| Ints | ☑ |
| Floats | ☑ |
| Booleans | ☑ |
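Several rows of the table can be checked directly in Python. This sketch, with illustrative variable names, changes a dict, a set, and a bytearray in place and confirms each keeps its identity; it then "updates" an int, a float, and a bool and confirms each name is rebound to a new object while the original value survives unchanged.

```python
# Mutable types: each operation changes the existing object in place.
scores = {"alice": 90}
scores_id = id(scores)
scores["bob"] = 85              # dict: add a key/value pair

letters = {"a", "b"}
letters_id = id(letters)
letters.add("c")                # set: add an element

raw = bytearray(b"cat")
raw_id = id(raw)
raw[0] = ord("b")               # bytearray: item assignment, now b"bat"

# Immutable types: an "update" rebinds the name to a brand-new object.
count = 1000
old_count = count               # keep a reference to the original object
count += 1

ratio = 0.5
old_ratio = ratio
ratio *= 2

flag = True
old_flag = flag
flag = not flag

print(id(scores) == scores_id, id(letters) == letters_id, id(raw) == raw_id)  # True True True
print(count is old_count, ratio is old_ratio, flag is old_flag)               # False False False
print(old_count, old_ratio, old_flag)                                          # 1000 0.5 True
```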

Reference: http://people.cs.ksu.edu/~rhowell/DataStructures/strings/strings.html

Consider the following block of pseudocode:

```
1. function APPENDER(NUMBER, BASE)
2.     RESULT = ""
3.     loop I from 1 to NUMBER
4.         RESULT = RESULT + BASE
5.         if I MOD 2 = 0
6.             RESULT = RESULT + " "
7.         else
8.             RESULT = RESULT + ", "
9.     end loop
10.    return RESULT
11. end function
```

Let's step through the function call APPENDER(4, 'abc') and analyze the memory that the code uses.

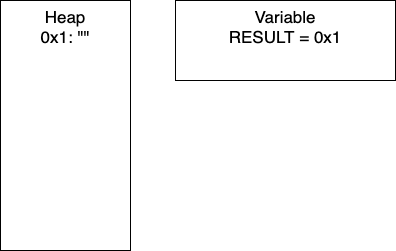

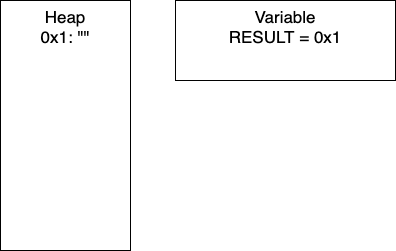

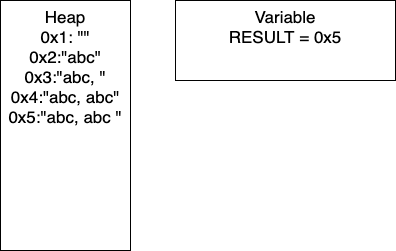

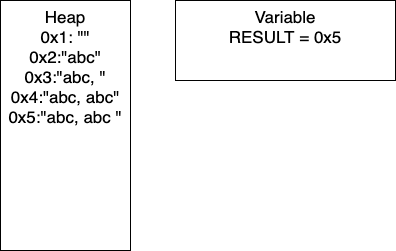

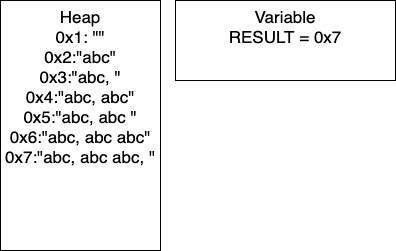

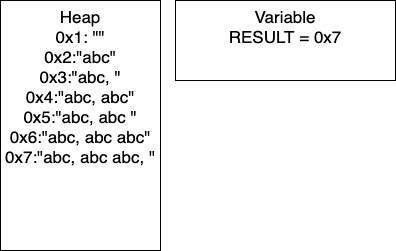

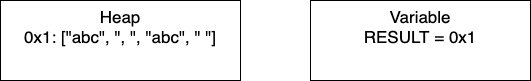

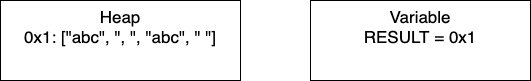

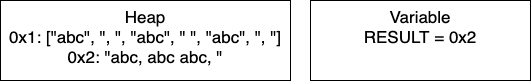

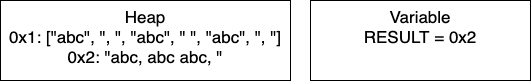

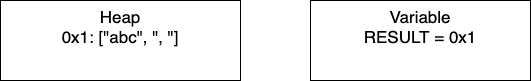

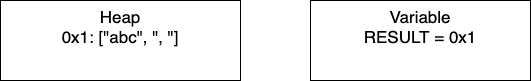

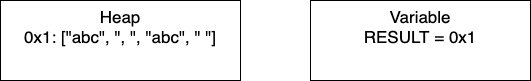

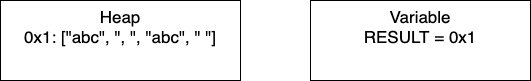

Recall that string variables are reference variables: they hold pointers to values, and the values themselves are stored in memory. In the following example, HEAP shows what is currently stored in memory, and VARIABLE shows the current value of the variable RESULT.

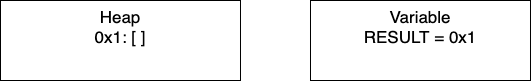

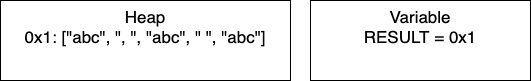

Initialization: On line 2, we initialize RESULT as an empty string. In the heap, we have the empty string at memory location 0x1. Thus, RESULT holds the pointer 0x1.

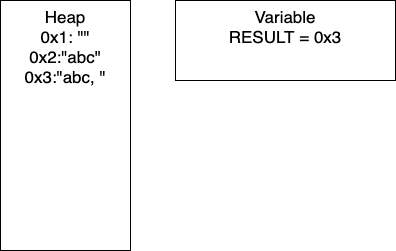

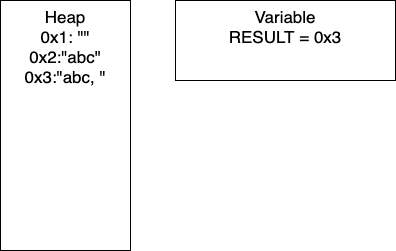

I = 1: Now we have entered the loop and on line 4, we add more characters to our string. At this point, we would have entry 0x2 in our heap and our variable RESULT would have the pointer 0x2. Continuing through the code, line 5 determines if I is divisible by 2. In this iteration I = 1, so we take the else branch. We again add characters to our string, resulting in a new entry in 0x3 and our variable RESULT containing the pointer 0x3. In total, we have written 8 characters. We then increment I and move to the next iteration.

I = 2: We continue the loop and on line 4, we add more characters to our string. At this point, we would have entry 0x4 in our heap and our variable RESULT would have the pointer 0x4. Continuing through the code, line 5 determines if I is divisible by 2. In this iteration I = 2, so we take the if branch. We again add characters to our string, resulting in a new entry in 0x5 and our variable RESULT containing the pointer 0x5. In this iteration, we have written 17 characters. We then increment I and move to the next iteration of the loop.

I = 3: We continue the loop and on line 4, we add more characters to our string. At this point, we would have entry 0x6 in our heap and our variable RESULT would have the pointer 0x6. Continuing through the code, line 5 determines if I is divisible by 2. In this iteration I = 3, so we take the else branch. We again add characters to our string, resulting in a new entry in 0x7 and our variable RESULT containing the pointer 0x7. In this iteration, we have written 26 characters. We then increment I to 4, which breaks us out of the loop.
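In Python, the is operator checks whether two names refer to the same object, which gives a rough way to watch a pointer change. This sketch mirrors one step of the walkthrough, where concatenation moves RESULT to a new heap entry; the names alias and result are illustrative only.

```python
result = "abc"
alias = result          # both names now hold a pointer to the same string
print(alias is result)  # True

result = result + ", "  # concatenation builds a new string in a new location
print(alias is result)  # False: result now points somewhere else
print(repr(alias))      # 'abc'  (the old string is unchanged)
print(repr(result))     # 'abc, '
```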

We can do some further analysis of the memory that is required for this particular block.

| Iteration | Memory Entries | Total Character Copies |
|---|---|---|
| 1 | 3 | 8 |
| 2 | 5 | 8 + 17 = 25 |
| 3 | 7 | 25 + 26 = 51 |
| 4 | 9 | 51 + 35 = 86 |
| . | . | . |
| n | $2n + 1$ | $(9n^2 + 7n)/2$ |

You need not worry about deriving these equations! Based on this generalization, if the user wanted to run 100K iterations, say for gene sequencing, there would be $2 \times 100{,}000 + 1 = 200{,}001$ memory entries and $(9 \times 100{,}000^2 + 7 \times 100{,}000)/2 = 45{,}000{,}350{,}000$, or about 45 billion, character copies. This behavior is not exclusive to strings; it occurs for any immutable type.

While this example is contrived, it is not too far off the mark. Another caveat to this analysis is that, depending on the programming language, there will be periodic garbage collection, so there won’t be 200K memory addresses occupied at one time. Even so, writing to memory in this way can be costly in terms of time, which in industry is money.

As a result of being immutable, strings can be cumbersome to work with in certain applications. When working with long strings, or strings that we continually append to as in the memory example, we end up creating many sizable copies.

Recall the block of pseudocode from the memory example:
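To check the table above, here is a small Python sketch of APPENDER instrumented to count memory entries and character copies. It is parameterized by the number of loop iterations n (the table's first column) rather than by NUMBER, and it counts under the same model as the walkthrough: every + produces a brand-new string whose characters are all copied. The name appender_counted is hypothetical, and the counts come from this model rather than from measuring real memory (CPython, for instance, may sometimes resize a string in place).

```python
def appender_counted(iterations, base):
    """Hypothetical instrumented APPENDER: returns (result, memory_entries, character_copies).

    Models every `+` as writing a brand-new string: one new memory entry,
    and one character copy per character of the new string.
    """
    result = ""
    entries = 1                 # the initial empty string
    copies = 0
    for i in range(1, iterations + 1):
        result = result + base  # new string: copy all of its characters
        entries += 1
        copies += len(result)
        result = result + (" " if i % 2 == 0 else ", ")
        entries += 1
        copies += len(result)
    return result, entries, copies

for n in range(1, 5):
    print(n, appender_counted(n, "abc")[1:])
# 1 (3, 8)
# 2 (5, 25)
# 3 (7, 51)
# 4 (9, 86)
```

Running larger n quickly shows the quadratic growth in character copies.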

```
1. function APPENDER(NUMBER, BASE)
2.     RESULT = ""
3.     loop I from 1 to NUMBER
4.         RESULT = RESULT + BASE
5.         if I MOD 2 = 0
6.             RESULT = RESULT + " "
7.         else
8.             RESULT = RESULT + ", "
9.     end loop
10.    return RESULT
11. end function
```

What if we changed RESULT to a mutable type, such as a list of strings in Python or a StringBuilder in Java? Once the loop is done, we can convert RESULT to a string. By changing just this one aspect of the code, we would make only one final copy of RESULT and far fewer character copies.

Java specifically has a StringBuilder class which was created for this precise reason.
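Python has no class named StringBuilder. One close analogue, offered here as an alternative to the list-based approach rather than as part of the original pseudocode, is io.StringIO, an in-memory text buffer that grows as you write to it. The name appender_stringio is hypothetical, and it takes the number of loop iterations directly instead of NUMBER.

```python
import io

def appender_stringio(iterations, base):
    """Hypothetical APPENDER variant that writes into a mutable io.StringIO buffer."""
    buffer = io.StringIO()
    for i in range(1, iterations + 1):
        buffer.write(base)
        buffer.write(" " if i % 2 == 0 else ", ")
    return buffer.getvalue()  # build the final str once, at the end

print(repr(appender_stringio(3, "abc")))  # 'abc, abc abc, '
```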

Consider the following, and note the slight changes on lines 2, 4, 6, 8 and the additional line 10.

```
1. function APPENDER_LIST(NUMBER, BASE)
2.     RESULT = []
3.     loop I from 1 to NUMBER
4.         RESULT.APPEND(BASE)
5.         if I MOD 2 = 0
6.             RESULT.APPEND(" ")
7.         else
8.             RESULT.APPEND(", ")
9.     end loop
10.    RESULT = "".JOIN(RESULT)
11.    return RESULT
12. end function
```

Now consider APPENDER_LIST(4, 'abc').

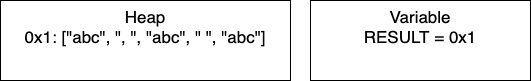

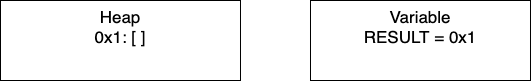

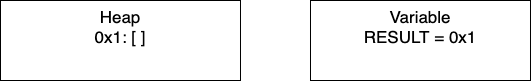

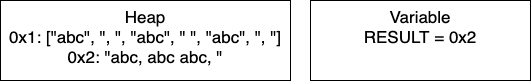

Initialization: We start by initializing the empty array. RESULT will hold the pointer 0x1.

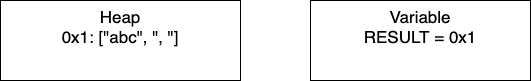

I = 1: Now we have entered the loop and on line 4, we add more characters to our array. At this point, we would have only entry 0x1 in our heap and our variable RESULT would have the pointer 0x1. Continuing through the code, line 5 determines if I is divisible by 2. In this iteration I = 1, so we take the else branch. We again add characters to our array. In total, we have written 5 characters. We then increment I and move to the next iteration.

I = 2: We continue the loop and on line 4, we add more characters to our array. We still have just one entry in memory and our pointer is still 0x1. In this iteration, we have written 4 characters. We then increment I and move to the next iteration of the loop.

I = 3: We continue the loop, and on line 4 we add more characters to our array. In this iteration, we have written 5 characters. We then increment I to 4, which breaks us out of the loop.

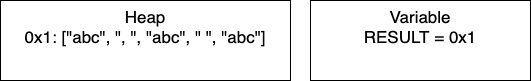

Post-Loop: Once the loop breaks, we join the array to create the final string. This creates a new place in memory and changes RESULT to contain the pointer 0x2.
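Here is a matching Python sketch of APPENDER_LIST under the same assumptions (the function name is our own, and the loop stops when I reaches NUMBER, as in the walkthrough above). Returning `"".join(result)` directly stands in for lines 10 and 11 of the pseudocode.

```python
def appender_list(number: int, base: str) -> str:
    """List version of APPENDER (sketch of APPENDER_LIST).

    list.append stores a reference to each piece without re-copying the
    text built so far; str.join then copies every character once.
    """
    result = []
    # Same assumption as the walkthrough: stop once i reaches number.
    for i in range(1, number):
        result.append(base)
        if i % 2 == 0:
            result.append(" ")
        else:
            result.append(", ")
    return "".join(result)


print(repr(appender_list(4, "abc")))  # 'abc, abc abc, '
```

With these assumptions the result has 14 characters: the 5 + 4 + 5 written in the three iterations described above.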

We can do some further analysis of the memory that is required for this particular block.

| Iteration | Memory Entries | Character Copies |
|---|---|---|
| 1 | 2 | 8 |
| 2 | 2 | 17 |
| 3 | 2 | 26 |
| 4 | 2 | 35 |
| ... | ... | ... |
| n | 2 | 9n - 1 |

Again, you need not worry about creating these equations for this course. To illustrate the improvement even more explicitly, let’s consider our previous example with 100K iterations. For APPENDER there were $2 \times 100{,}000 + 1 = 200{,}001$ memory entries and $(9 \times 100{,}000^2 + 7 \times 100{,}000)/2 \approx 45$ billion character copies. For APPENDER_LIST we now have just 2 memory entries and $9 \times 100{,}000 - 1 = 899{,}999$ character copies. This dramatic improvement was the result of changing our data structure ever so slightly.
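The APPENDER figures can be checked with a small counting sketch. It is a simplified model of our own: each `+` is assumed to copy every character of the new string, the initial `""` counts as one memory entry, and `iterations` is the n from the tables. No garbage collection or in-place optimization is modeled.

```python
def appender_copy_stats(iterations: int, base: str = "abc") -> tuple:
    """Return (memory_entries, character_copies) for APPENDER's string version.

    Model (an assumption): every concatenation allocates a new string and
    copies all of its characters; old strings are never reclaimed.
    """
    length = 0    # current length of RESULT
    entries = 1   # the initial empty string
    copies = 0
    for i in range(1, iterations + 1):
        # RESULT = RESULT + BASE: a new string of the combined length
        length += len(base)
        copies += length
        entries += 1
        # RESULT = RESULT + " " (even i) or ", " (odd i)
        length += 1 if i % 2 == 0 else 2
        copies += length
        entries += 1
    return entries, copies


print(appender_copy_stats(100_000))  # (200001, 45000350000)
```

Under this model the counts follow $2n + 1$ memory entries and $(9n^2 + 7n)/2$ character copies for every n, which is where the 100K figures come from.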

Reference: http://people.cs.ksu.edu/~rhowell/DataStructures/strings/stringbuilders.html


To start this course, we have looked into strings. They are a very natural way to represent data, especially in real-world applications. Often, though, the data points can be very large and require multiple modifications. We also examined how strings work: element access, retrieving the size, and modifying them. We looked into some alternatives, which included StringBuilders for Java and character arrays for Python.

To really understand this point, we have included a comparison. We have implemented the APPENDER and APPENDER_LIST functions in both Python and Java. For the Java implementation, we utilized StringBuilders.

```
1.  function APPENDER(NUMBER, BASE)
2.      RESULT = ""
3.      loop I from 1 to NUMBER
4.          RESULT = RESULT + BASE
5.          if I MOD 2 = 0
6.              RESULT = RESULT + " "
7.          else
8.              RESULT = RESULT + ", "
9.      end loop
10.     return RESULT
11. end function
```

```
1.  function APPENDER_LIST(NUMBER, BASE)
2.      RESULT = []
3.      loop I from 1 to NUMBER
4.          RESULT.APPEND(BASE)
5.          if I MOD 2 = 0
6.              RESULT.APPEND(" ")
7.          else
8.              RESULT.APPEND(", ")
9.      end loop
10.     RESULT = "".JOIN(RESULT)
11.     return RESULT
12. end function
```

For the tests of $10^8$ and $10^9$ in Java, the string implementation took over 24 hours and the StringBuilder implementation ran out of memory. For these reasons, they are omitted from the figure.

These figures compare Strings and lists for Python and Strings and StringBuilders for Java. The intention of these is not to compare Python and Java.

In both languages, we see that the string function and the respective alternative performed comparably until approximately $10^6$ (1,000,000 characters). Again, these are somewhat contrived examples with the intention of understanding the side effects of using strings.

As we have discussed, modern programming languages have clean-up protocols and memory management strategies. Given the scope of this class, we will not discuss memory analysis in practice any further.

When modifying strings, we need to be cognizant of how often we will be making changes and how large those changes will be. If we are just accessing particular elements or only doing a few modifications, then using plain strings is a reasonable solution. However, if we are looking to build our own DNA sequence, plain strings are not a good way to go: they are immutable, so every change creates a new copy.

This page is the main page for the Trees Section.

This page is the main page for Introduction to Trees

For the next data structure in the course, we will cover trees, which are used to show hierarchical data. Trees can have many shapes and sizes and there is a wide variety of data that can be organized using them. Real world data that is appropriate for trees can include: family trees, management structures, file systems, biological classifications, anatomical structures and much more.

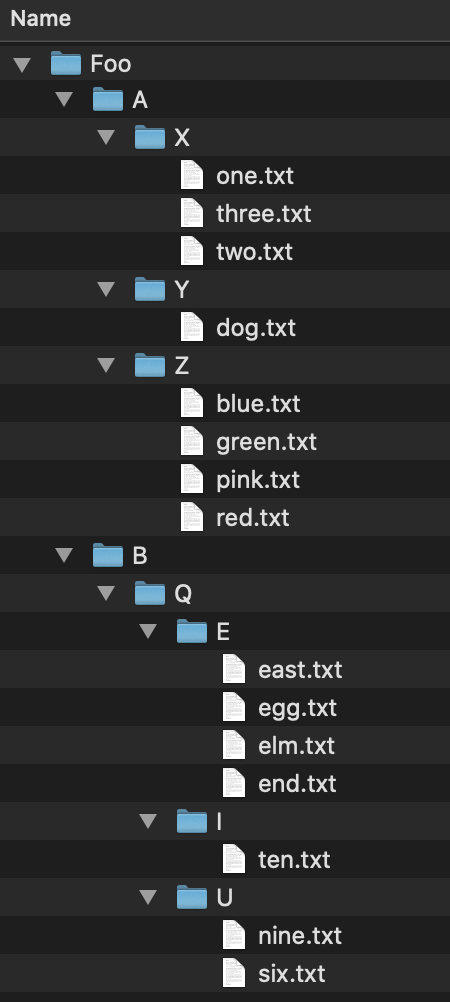

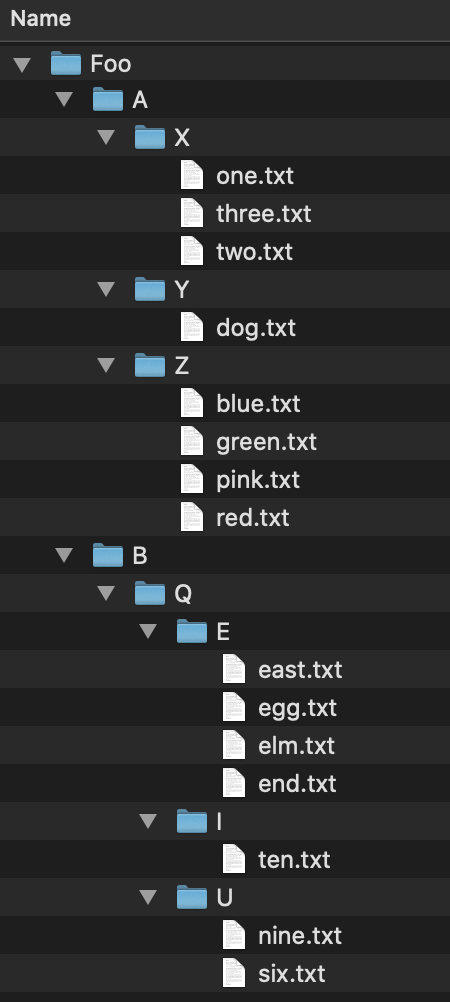

We can look at an example of a tree and the relationships they can show. Consider this file tree; it has folders and files in folders.

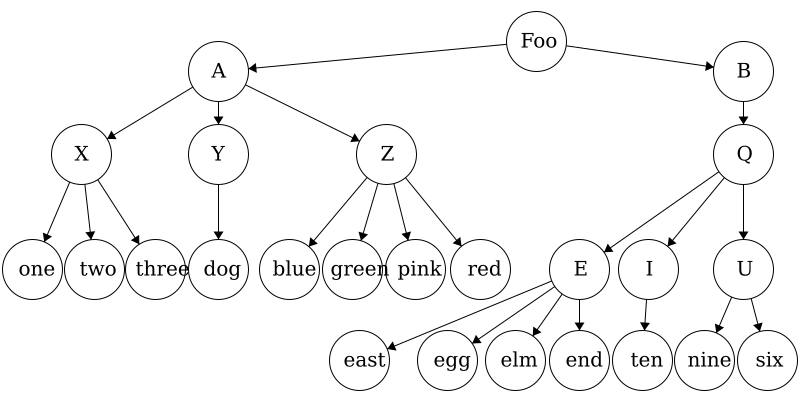

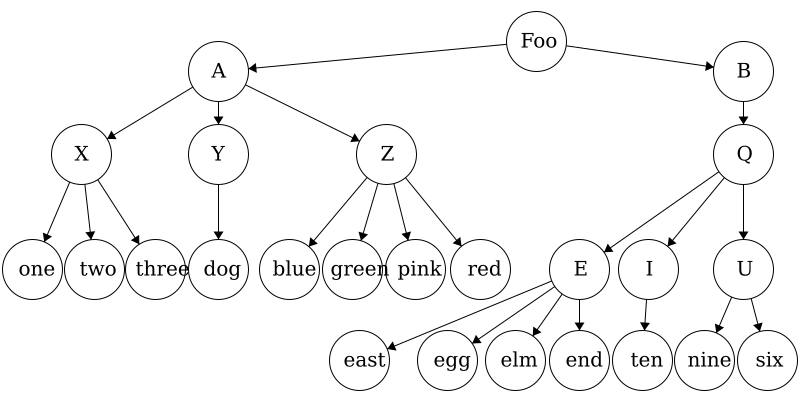

If we wanted to access the file elm.txt, we would have to follow this file path: Foo/B/Q/E/elm.txt. We can similarly store the file structure as a tree. As before, if we wanted to get to the file elm.txt, we would navigate the tree in the order: Foo -> B -> Q -> E -> elm.txt. As mentioned before, trees can be used on very diverse data sets; they are not limited to file trees!

In the last module, we talked about strings, which are a linear data structure. To be explicit, this means that the elements in a string form a line of characters. A tree, by contrast, is a hierarchical structure, which is best utilized for multidimensional data. Going back to our file tree example, folders are not limited to just one file; there can be multiple files contained in a single folder, thus making it multidimensional.

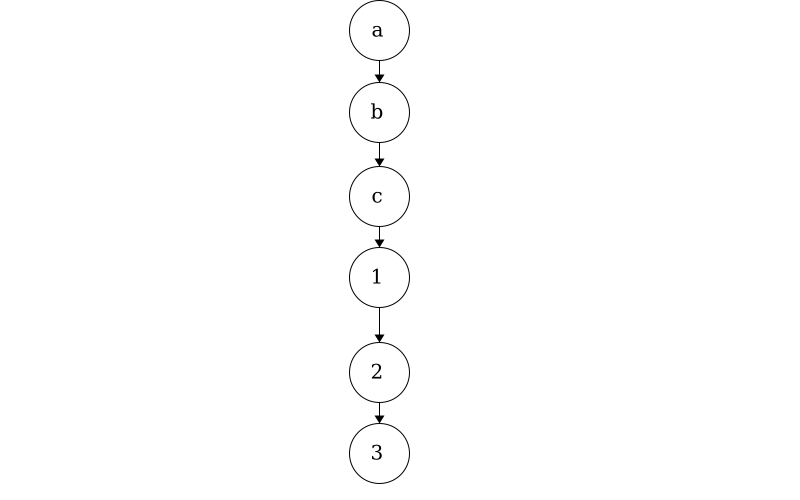

Consider the string “abc123”; this is a linear piece of data where there is exactly one character after another. We can use trees to show linear data as well.

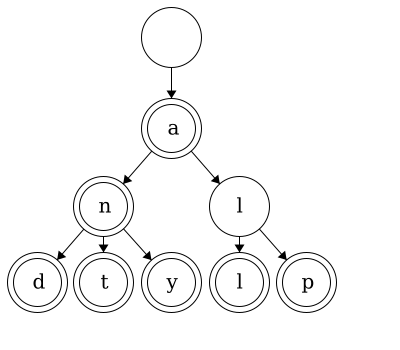

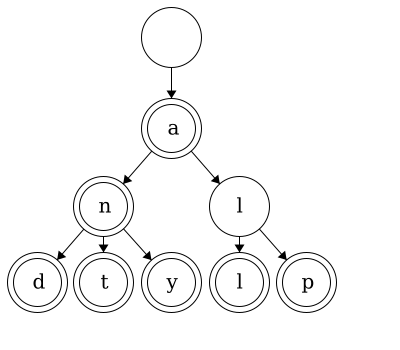

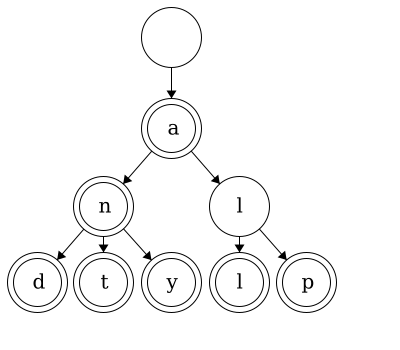

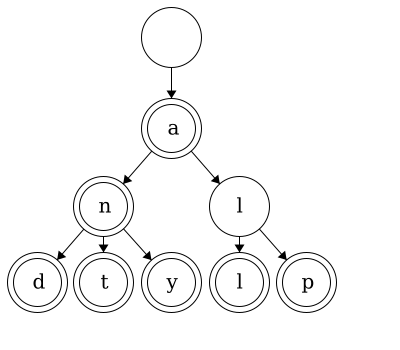

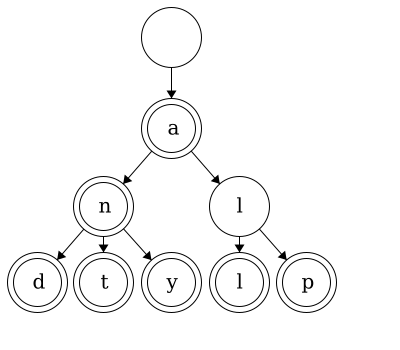

While trees can be used for linear data, it would be excessive and inefficient to implement them for single strings. In an upcoming module, we will see how we can use trees to represent any number of strings! For example, this tree below contains 7 words: ‘a’, ‘an’, ‘and’, ‘ant’, ‘any’, ‘all’, and ‘alp’.

In the next sections, we will discuss the properties of a tree data structure and how we would design one ourselves. Once we have a good understanding of trees and the properties of trees, we will implement our own.

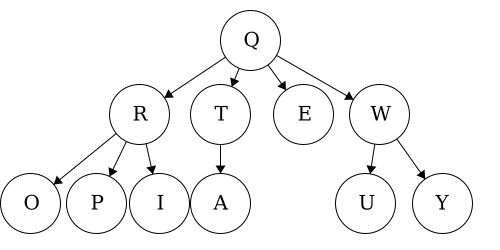

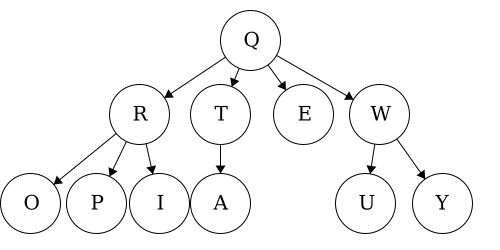

To get ourselves comfortable in working with trees, we will outline some standard vocabulary. Throughout this section, we will use the following tree as a guiding example for visualizing the definitions.

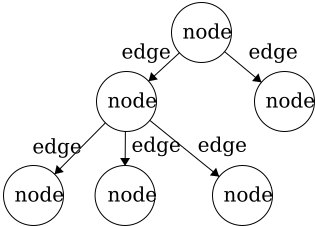

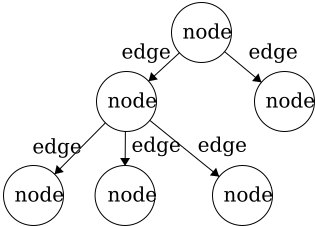

Node - the general term for a structure which contains an item, such as a character or even another data structure.

Edge - the connection between two nodes. In a tree, the edge will be pointing in a downward direction.


This tree has five edges and six nodes. There is no limit to the number of nodes in a tree. The only stipulation is that the tree is fully connected. This means that there cannot be disjoint portions of the tree. We will look at examples in the next section.

A rule of thumb for discerning trees is this: if you imagine holding the tree up by the root and letting gravity take effect, then all edges must point downward. If an edge points upward, we will have a cycle within our structure, so it will not be a tree.

Root - the topmost node of the tree


To be a tree, there must be exactly one root. Having multiple roots will result in a cycle or a tree that is not fully connected. In short, a cycle is a loop in the tree.

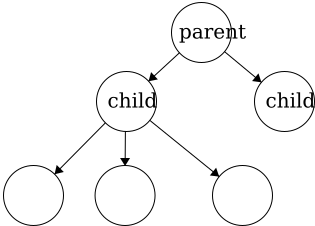

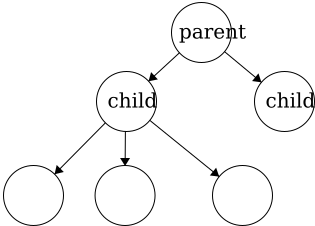

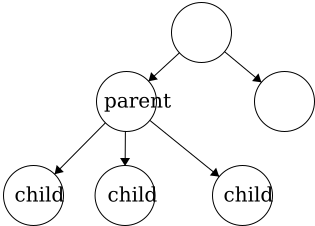

Parent - a node with an edge that connects to another node further from the root. We can also define the root of a tree with respect to this definition; Root: a node with no parent.

Child - a node with an edge that connects to another node closer to the root.


In a tree, child nodes must have exactly one parent node. If a child node has more than one parent, then a cycle will occur. If there is a node without a parent node, then this is necessarily the root node. There is no limit to the number of child nodes a parent node can have, but to be a parent node, the node must have at least one child node.

Leaf - a node with no children.

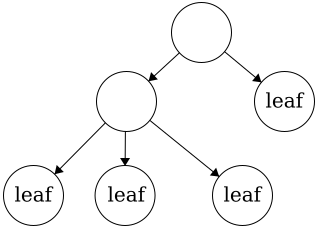


This tree has four leaves. There is no limit to how many leaves can be in a tree.

Degree

Degree of a node - the number of children a node has. The degree of a leaf is zero.

Degree of a tree - the number of children the root of the tree has.

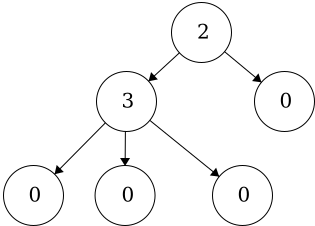

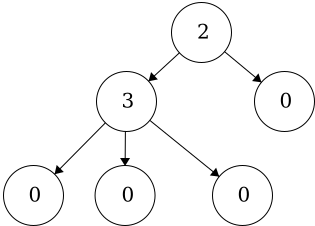

The degree of each node is shown as the value on that node in this example. The degree of the tree is equal to the degree of the root. Thus, the degree of this tree is 2.

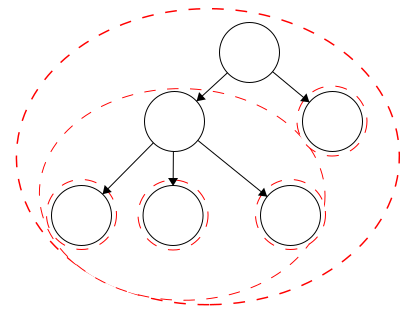

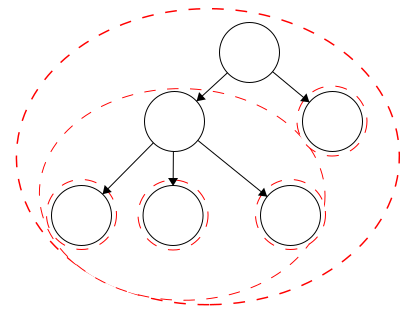

A tree is defined recursively. This means that each child of the root is the root of another tree, and the children of those are roots of trees as well. Again, this is a recursive definition, so it continues down to the leaves. The leaves are also trees, each with just a single node as the root.

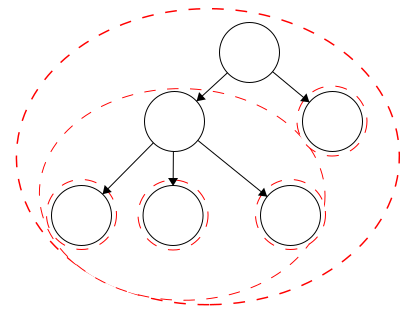

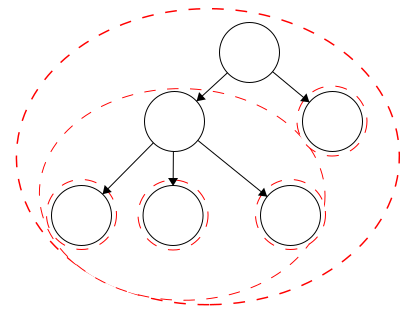

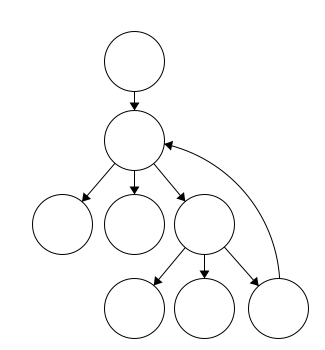

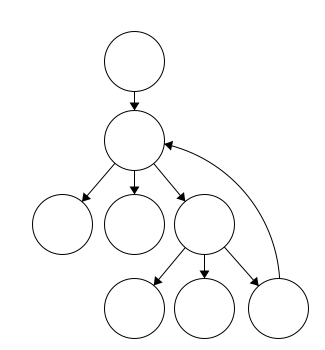

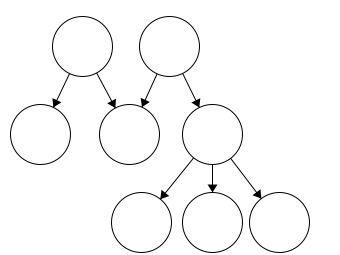

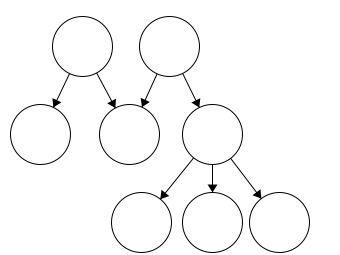

In our example tree, we have six trees. Each tree is outlined in a red dashed circle:

Trees can come in many shapes and sizes. There are, however, some constraints to making a valid tree.

Any combination of the following represents a valid tree:

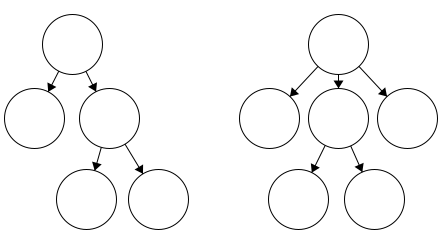

Below are some examples of invalid trees.

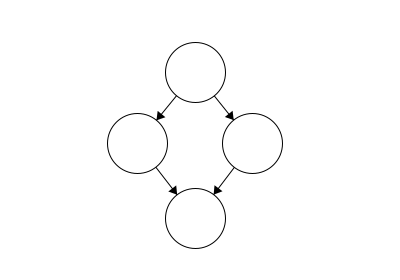

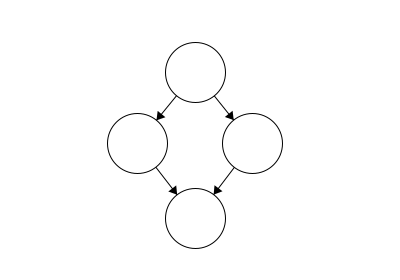

A cycle

Again, cycles are essentially loops that occur in our tree. In this example, we see that our leaf has two parents. One way to detect a cycle is to check whether there is more than one path from the root to any node.

A cycle

Here we can see another cycle. In this case, the node immediately after the root has two parents, which is a clue that a cycle exists. Another test


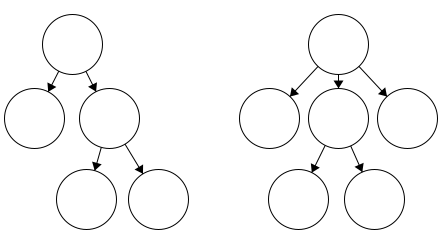

Two Roots

Trees must have a single root. In this instance, it may look like we have a tree with two roots. Working through this, we also see that the node in the center has two parents.


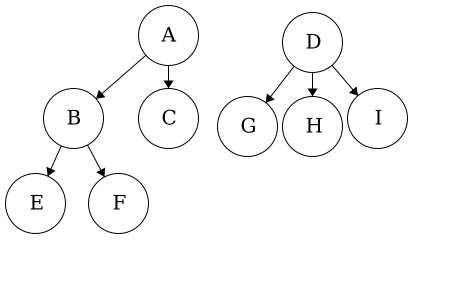

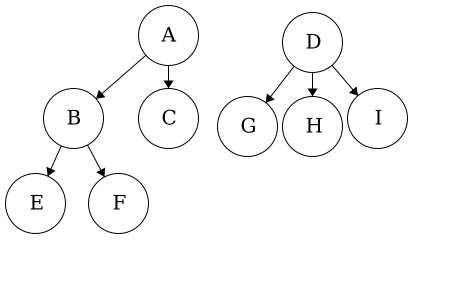

Two Trees

This example would be considered two trees, not a tree with two parts. In this figure, we have two fully connected components. Since they are not connected to each other, this is not a single tree.


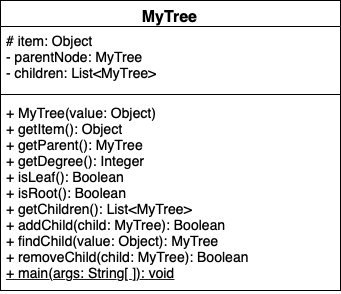

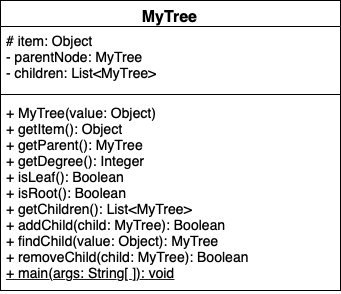

Along with understanding how trees work, we want to also be able to implement a tree of our own. We will now outline key components of a tree class.

Recall that trees are defined recursively so we can build them from the leaves up where each leaf is a tree itself. Each tree will have three properties: the item it contains as an object, its parent node of type MyTree, and its children as a list of MyTrees. Upon instantiation of a new MyTree, we will set the item value and initialize the parent node to None and the children to an empty list of type MyTree.
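A minimal Python sketch of that constructor follows. The attribute names `item`, `parent_node`, and `children` are assumptions borrowed from the state listings later in this section; this is not official course code.

```python
from typing import List, Optional


class MyTree:
    """A general tree node; every node is also the root of its own subtree."""

    def __init__(self, item: object) -> None:
        self.item = item                              # the value stored here
        self.parent_node: Optional["MyTree"] = None   # no parent yet
        self.children: List["MyTree"] = []            # no children yet


leaf = MyTree("A")
print(leaf.item, leaf.parent_node, leaf.children)  # A None []
```

A freshly created MyTree is a single-node tree, which is exactly the base case of the recursive definition.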

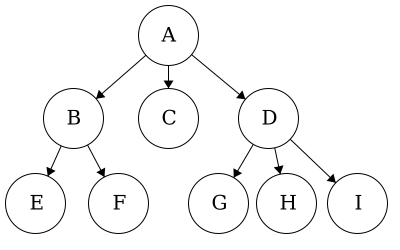

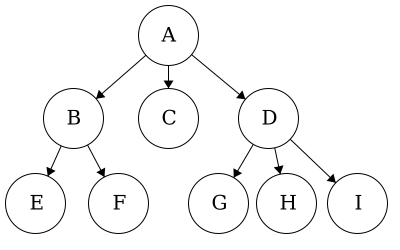

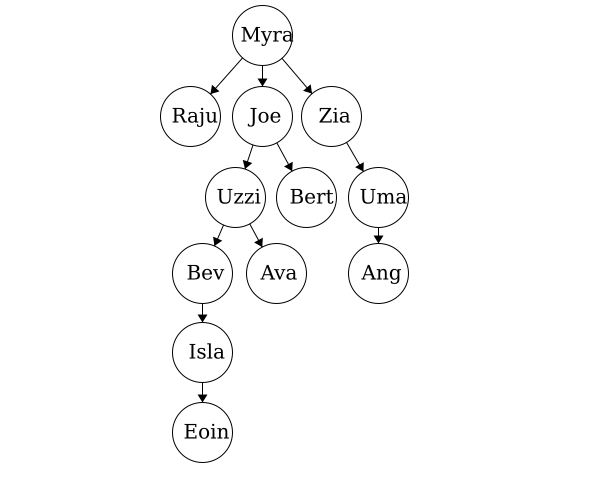

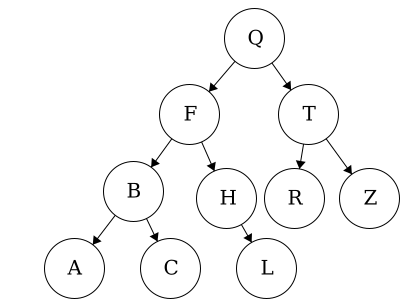

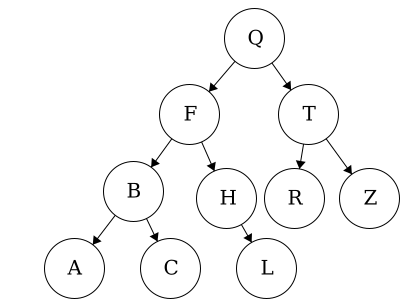

Suppose that we wanted to construct the following tree.

We would start by initializing each node as a tree with no parent and no children, where the item in this instance is a character. Then we build the tree up level by level by adding the appropriate children to their respective parents.

Disclaimer: This implementation will not prevent all cycles. In the next module, we will introduce steps to prevent cycles and maintain true tree structures.

In this method, we will take a value as input and then check if that value is the item of a child of the current node. If the value is not the item of any of the node’s children, then we should return None.

```
function FINDCHILD(VALUE)
    FOR CHILD in CHILDREN
        IF CHILD's ITEM is VALUE
            return CHILD
    return NONE
end function
```

Next come the getter functions. Each of these will be rather straightforward: children, item, and parent are all attributes of our node, so we can have a getter function that returns the respective values. The slightly more involved task will be getting the degree. Recall that the degree of a node is equal to the number of children. Thus, we can simply count the number of children and return this number for the degree.

We will have two functions to check the node type: one to determine if the node is a leaf and one to determine if it is a root. The definition of a leaf is a node that has no children. Thus, to check if a node is a leaf, we can simply check if the number of children is equal to zero. Similarly, since the definition of a root is a node with no parent, we can check that the parent attribute of the node is None.
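FINDCHILD, the getters, and the two node-type checks can be sketched in Python as follows. The method names are our own, and we compare items with `==` rather than `is`, since Python's `is` tests object identity rather than equal values.

```python
from typing import List, Optional


class MyTree:
    def __init__(self, item: object) -> None:
        self.item = item
        self.parent_node: Optional["MyTree"] = None
        self.children: List["MyTree"] = []

    def find_child(self, value: object) -> Optional["MyTree"]:
        """Return the child whose item equals value, or None (FINDCHILD)."""
        for child in self.children:
            if child.item == value:
                return child
        return None

    # Getters: each simply returns a stored attribute.
    def get_item(self) -> object:
        return self.item

    def get_parent(self) -> Optional["MyTree"]:
        return self.parent_node

    def get_children(self) -> List["MyTree"]:
        return self.children

    def get_degree(self) -> int:
        """The degree of a node is its number of children."""
        return len(self.children)

    def is_leaf(self) -> bool:
        """A leaf has no children."""
        return len(self.children) == 0

    def is_root(self) -> bool:
        """A root has no parent."""
        return self.parent_node is None


# Wiring two nodes by hand for this demo, since add_child is not part of this sketch.
root, child = MyTree("A"), MyTree("B")
root.children.append(child)
child.parent_node = root

print(root.find_child("B") is child, root.find_child("Z"))  # True None
print(root.get_degree(), root.is_root(), child.is_leaf())    # 1 True True
```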

When we wish to add a child, we must first make sure we are able to add the child; for example, the child should be an instance of MyTree. We will return true if the child was successfully added and false otherwise, while raising the appropriate errors.

```
function ADDCHILD(CHILD)
    IF CHILD has PARENT
        throw exception
    IF CHILD is CHILD of PARENT
        return FALSE
    ELSE
        append CHILD to PARENT's children
        set CHILD's parent to PARENT
        return TRUE
end function
```

As an example, let's walk through the process of building the tree above:

Initialize trees: a with item ‘A’, b with item ‘B’, c with item ‘C’, d with item ‘D’, e with item ‘E’, f with item ‘F’, g with item ‘G’, h with item ‘H’, and i with item ‘I’.

Add children: g to tree d, h to tree d, i to tree d, e to tree b, f to tree b, and then b, c, and d to tree a.

Once we have completed that, visually, we would have the tree above, and in code we would have:

a with parent_node = None, item = ‘A’, children = {b,c,d}

b with parent_node = a, item = ‘B’, children = {e,f}

c with parent_node = a, item = ‘C’, children = { }

d with parent_node = a, item = ‘D’, children = {g,h,i}

e with parent_node = b, item = ‘E’, children = { }

f with parent_node = b, item = ‘F’, children = { }

g with parent_node = d, item = ‘G’, children = { }

h with parent_node = d, item = ‘H’, children = { }

i with parent_node = d, item = ‘I’, children = { }

Note: When adding a child, we must currently be at the node we want to be the parent. Much like when you want to add a file to a folder, you must specify exactly where you want it. If you don’t, this could result in a wayward child.
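Here is a Python sketch of ADDCHILD together with the construction walked through above. Two choices are our own assumptions: we check "already our child" before "already has a parent", so re-adding an existing child returns False instead of raising, and we use `TypeError`/`ValueError` as the "appropriate errors". To reach the listed state, b, c, and d are attached to a as well.

```python
from typing import List, Optional


class MyTree:
    def __init__(self, item: object) -> None:
        self.item = item
        self.parent_node: Optional["MyTree"] = None
        self.children: List["MyTree"] = []

    def add_child(self, child: "MyTree") -> bool:
        """Attach child under this node (a sketch of ADDCHILD)."""
        if not isinstance(child, MyTree):
            raise TypeError("child must be a MyTree")
        if child in self.children:
            return False                 # already our child: nothing to do
        if child.parent_node is not None:
            raise ValueError("child already has a parent")
        self.children.append(child)      # parent -> child link
        child.parent_node = self         # child -> parent link
        return True


# Initialize one single-node tree per letter: a..i holding 'A'..'I'.
nodes = {letter: MyTree(letter.upper()) for letter in "abcdefghi"}
a, b, d = nodes["a"], nodes["b"], nodes["d"]

# Attach children: g, h, i under d; e, f under b; then b, c, d under a.
for parent, kids in [(d, "ghi"), (b, "ef"), (a, "bcd")]:
    for kid in kids:
        parent.add_child(nodes[kid])

print([child.item for child in a.children])  # ['B', 'C', 'D']
print(nodes["g"].parent_node.item)           # D
```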

In the case of removing a child, we first need to check that the child we are attempting to remove is an instance of MyTree. We will return true if we successfully remove the child and false otherwise.
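A minimal Python sketch of that behavior, with hypothetical method names, is below. Following the description, it returns False for anything that is not a MyTree or not currently a child; the simplified `add_child` exists only to set up the example of removing d from a.

```python
from typing import List, Optional


class MyTree:
    def __init__(self, item: object) -> None:
        self.item = item
        self.parent_node: Optional["MyTree"] = None
        self.children: List["MyTree"] = []

    def add_child(self, child: "MyTree") -> None:
        # Simplified attach (no checks), used only to set up the example.
        self.children.append(child)
        child.parent_node = self

    def remove_child(self, child: object) -> bool:
        """Detach child from this node, returning True on success.

        Nothing is deleted: only the parent-to-child and child-to-parent
        links are cut, so the child survives as the root of its own tree.
        """
        if not isinstance(child, MyTree):
            return False          # not a tree node at all
        if child not in self.children:
            return False          # not one of our children
        self.children.remove(child)
        child.parent_node = None
        return True


a, d, g = MyTree("A"), MyTree("D"), MyTree("G")
a.add_child(d)
d.add_child(g)

print(a.remove_child(d))             # True
print(a.children, d.parent_node)     # [] None
print([c.item for c in d.children])  # ['G']
```

After the removal, d keeps its own subtree, which is why we end up with two disjoint trees.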

```
function REMOVECHILD(CHILD)
    IF CHILD in PARENT'S children
        REMOVE CHILD from PARENT's children
        SET CHILD's PARENT to NONE
        return TRUE
    ELSE
        return FALSE
end function
```

As with adding a child, we need to ensure that we are in the ‘right place’ when attempting to remove a child. When removing a child, we are not ‘erasing’ it; we are just cutting the tie from parent to child and from child to parent. Consider removing d from a. Visually, we would have two disjoint trees, shown below:

In code, we would have:

a with parent_node = None, item = ‘A’, children = {b,c}

b with parent_node = a, item = ‘B’, children = {e,f}

c with parent_node = a, item = ‘C’, children = { }

d with parent_node = None, item = ‘D’, children = {g,h,i}

e with parent_node = b, item = ‘E’, children = { }

f with parent_node = b, item = ‘F’, children = { }

g with parent_node = d, item = ‘G’, children = { }

h with parent_node = d, item = ‘H’, children = { }

i with parent_node = d, item = ‘I’, children = { }

In this module, we have introduced vocabulary related to trees and what makes a tree a tree. To recap, we have introduced the following:

Child - a node with an edge that connects to another node closer to the root.

Degree

Degree of a node - the number of children a node has. The degree of a leaf is zero.

Degree of a tree - the number of children the root of the tree has.

Edge - the connection between two nodes. In a tree, the edge will be pointing in a downward direction.

Leaf - a node with no children.

Node - the general term for a structure which contains an item, such as a character or even another data structure.

Parent - a node with an edge that connects to another node further from the root.

Root - the topmost node of the tree; a node with no parent.

Now we will work on creating our own implementation of a tree. These definitions will serve as a resource when we need a refresher on meanings; feel free to refer back to them as needed.

This page is the main page for Tree Traversal

In the last module, we covered the underlying vocabulary of trees and how we can implement our own tree. To recall, we covered: node, edge, root, leaf, parent, child, and degree.

For this module we will expand on trees and gain a better understanding of how powerful trees can be. As before, we will use the same tree throughout the module for a guiding visual example.

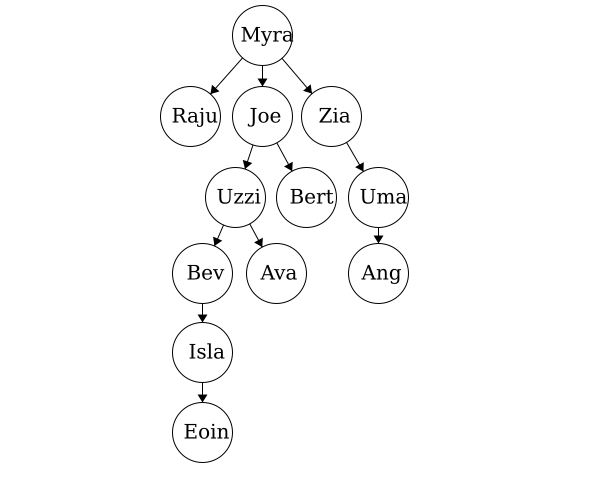

Many of the terms used in trees relate to terms used in family trees. Having this in mind can help us to better understand some of the terminology involved with abstract trees. Here we have a sample family tree.

Ancestor - The ancestors of a node are those reached from child to parent relationships. We can think of this as our parents and our parents’ parents, and so on.

Descendant - The descendants of a node are those reached from parent to child relationships. We can think of this as our children and our children’s children and so on.

Siblings - Nodes which share the same parent

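A recursive program is broken into two parts: a base case, which is simple enough to solve directly, and a recursive case, which calls the program again on a smaller version of the problem.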

In principle, the recursive case breaks the problem down into smaller portions until we reach the base case. Recursion presents itself in many ways when dealing with trees.

Trees are defined recursively with the base case being a single node. Then we recursively build the tree up. With this basis for our trees, we can define many properties using recursion rather effectively.

We can describe the sizes of trees and position of nodes using different terminology, like level, depth, and height.

Level - The level of a node characterizes the distance between the node and the root. The root of the tree is considered level 1. As you move away from the root, the level increases by one.

Depth - The depth of a node is its distance to the root. Thus, the root has depth zero. Level and depth are related in that: level = 1 + depth.

Height of a Node - The height of a node is the length of the longest path to a leaf descendant. The height of a leaf is zero.

Height of a Tree - The height of a tree is equal to the height of the root.

When working with multidimensional data structures, we also need to consider how they would be stored in a linear manner. Remember, pieces of data in computers are linear sequences of binary digits. As a result, we need a standard way of storing trees as a linear structure.

Path - a path is a sequence of nodes and edges which connects a node with its descendant. We can look at some paths in the tree above:

Q to O: QRO

Traversal is a general term we use to describe going through a tree. The following traversals are defined recursively.

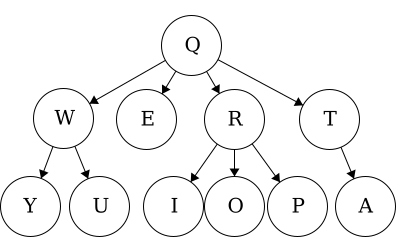

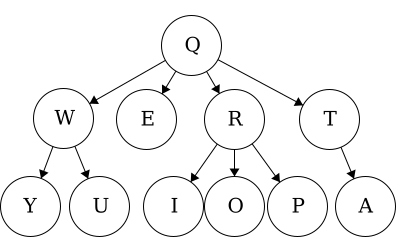

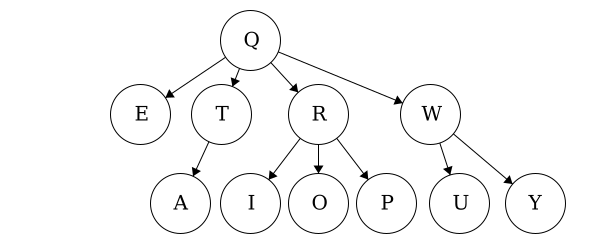

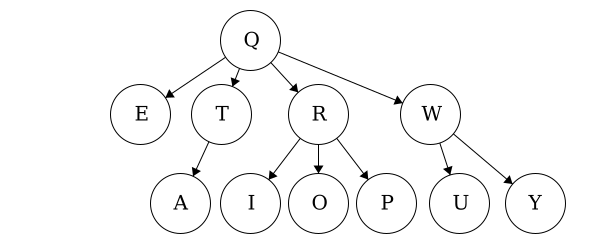

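Preorder - Pre refers to the root, meaning the root goes before the children. For our example tree, the preorder traversal could result in: QWYUERIOPTA

Postorder - Post refers to the root, meaning the root goes after the children. For our example tree, the postorder traversal could result in: YUWEIOPRATQ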

When we talk about traversals for general trees, we have used the phrase ‘the traversal could result in’. We would like to expand on why ‘could’ is used here. Each of these general trees is the same, but their traversals could be different. The key concept is that for a general tree, the children are an unordered set of nodes; they do not have a defined or fixed order. The relationships that are fixed are the parent/child relationships.

| Tree | Preorder | Postorder |
|---|---|---|
| Tree 1 | QWYUERIOPTA | YUWEIOPRATQ |
| Tree 2 | QETARIOPWUY | EATIOPRUYWQ |
| Tree 3 | QROPITAEWUY | OPIRATEUYWQ |
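To see why the traversals say "could", here is a small Python sketch that builds the same parent/child relationships with three different child orderings (read off the table) and runs preorder and postorder on each. The dictionary-based `build` helper is hypothetical, and `preorder`/`postorder` return the list for convenience.

```python
from typing import Dict, List, Optional


class MyTree:
    def __init__(self, item: str) -> None:
        self.item = item
        self.parent_node: Optional["MyTree"] = None
        self.children: List["MyTree"] = []

    def add_child(self, child: "MyTree") -> None:
        self.children.append(child)
        child.parent_node = self

    def preorder(self, result: List[str]) -> List[str]:
        result.append(self.item)        # root first ...
        for child in self.children:
            child.preorder(result)      # ... then each child's subtree
        return result

    def postorder(self, result: List[str]) -> List[str]:
        for child in self.children:
            child.postorder(result)     # each child's subtree first ...
        result.append(self.item)        # ... then the root
        return result


def build(shape: Dict[str, str], item: str = "Q") -> MyTree:
    """Hypothetical helper: shape maps each parent letter to its children, in order."""
    node = MyTree(item)
    for child_item in shape.get(item, ""):
        node.add_child(build(shape, child_item))
    return node


# Same parent/child pairs in all three; only the order of the children differs.
TREES = {
    "Tree 1": {"Q": "WERT", "W": "YU", "R": "IOP", "T": "A"},
    "Tree 2": {"Q": "ETRW", "T": "A", "R": "IOP", "W": "UY"},
    "Tree 3": {"Q": "RTEW", "R": "OPI", "T": "A", "W": "UY"},
}

for name, shape in TREES.items():
    root = build(shape)
    print(name, "".join(root.preorder([])), "".join(root.postorder([])))
```

Running this prints the three rows of the table: changing only the order of the children changes both traversals.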

Again, we want to be able to implement a working version of a tree. From the last module, we had functions to add children, remove children, get attributes, and instantiate MyTree. We will now build upon that implementation to create a true tree.

A recursive program is broken into two parts: a base case and a recursive case.

Recall that in the previous module, we were not yet able to enforce the no cycle rule. We will now enforce this and add other tree functionality.

Disclaimer: In the previous module we had a disclaimer that stated our implementation would not prevent cycles. The following functions and properties will implement recursion. Thus, we can maintain legal tree structures!

In the first module, we discussed how we can define trees recursively, meaning a tree consists of trees. We looked at the following example. Each red dashed line represented a distinct tree, thus we had five trees within the largest tree making six trees in total.

We will use our existing implementation from the first module. Now to make our tree recursive, we will include more getter functions as well as functions for traversals and defining node relationships.

We can define each of these recursively.

Depth - The depth of a node is its distance to the root. Thus, the root has depth zero. We can define the depth of a node recursively:

```
function GETDEPTH()
    if ROOT
        return 0
    else
        return 1 + PARENT.GETDEPTH()
end function
```

Height of a Node - The height of a node is the length of the longest path to a leaf descendant. The height of a leaf is zero. We can define the height of a node recursively:

```
function GETHEIGHT()
    if LEAF
        return 0
    else
        MAX = 0
        for CHILD in CHILDREN
            CURR_HEIGHT = CHILD.GETHEIGHT()
            if CURR_HEIGHT > MAX
                MAX = CURR_HEIGHT
        return 1 + MAX
end function
```

Root - the topmost node of the tree; a node with no parent. We can define returning the root recursively:

```
function GETROOT()
    if ISROOT()
        return this tree
    else
        return PARENT.GETROOT()
end function
```

We define the size of a tree as the total number of nodes it contains: the root plus all of its descendants.

```
function GETSIZE()
    SIZE = 1
    for CHILD in CHILDREN
        SIZE += CHILD.GETSIZE()
    return SIZE
end function
```

To find a value within our tree, we will traverse down a branch as far as we can, moving on to the next branch if needed, until we find the value. This will return the tree that has the value as its root.

```
function FIND(VALUE)
    if ITEM is VALUE
        return this node
    for CHILD in CHILDREN
        FOUND = CHILD.FIND(VALUE)
        if FOUND is not NONE
            return FOUND
    return NONE
end function
```

We can determine many relationships within the tree. For example, given a node, is it an ancestor of another node, a descendant, or a sibling?
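Before looking at those relationships, here is a minimal Python sketch of the recursive properties covered so far (GETDEPTH, GETHEIGHT, GETROOT, GETSIZE, and FIND). Method names are our own, `add_child` is simplified with no validation, and `max`/`sum` stand in for the explicit loops in the pseudocode. The example uses the a..i tree from earlier in this section.

```python
from typing import List, Optional


class MyTree:
    def __init__(self, item: object) -> None:
        self.item = item
        self.parent_node: Optional["MyTree"] = None
        self.children: List["MyTree"] = []

    def add_child(self, child: "MyTree") -> None:
        # Simplified attach (no validation) to keep the sketch short.
        self.children.append(child)
        child.parent_node = self

    def is_root(self) -> bool:
        return self.parent_node is None

    def is_leaf(self) -> bool:
        return not self.children

    def get_depth(self) -> int:
        """Number of edges between this node and the root (GETDEPTH)."""
        if self.is_root():
            return 0
        return 1 + self.parent_node.get_depth()

    def get_height(self) -> int:
        """Edges on the longest downward path to a leaf (GETHEIGHT)."""
        if self.is_leaf():
            return 0
        return 1 + max(child.get_height() for child in self.children)

    def get_root(self) -> "MyTree":
        """Follow parent links up to the node with no parent (GETROOT)."""
        if self.is_root():
            return self
        return self.parent_node.get_root()

    def get_size(self) -> int:
        """Number of nodes in this subtree, counting this node (GETSIZE)."""
        return 1 + sum(child.get_size() for child in self.children)

    def find(self, value: object) -> Optional["MyTree"]:
        """Depth-first search; returns the subtree rooted at value (FIND)."""
        if self.item == value:
            return self
        for child in self.children:
            found = child.find(value)
            if found is not None:
                return found
        return None


nodes = {letter: MyTree(letter.upper()) for letter in "abcdefghi"}
for parent, kids in [("a", "bcd"), ("b", "ef"), ("d", "ghi")]:
    for kid in kids:
        nodes[parent].add_child(nodes[kid])
a = nodes["a"]

print(nodes["g"].get_depth(), a.get_height(), a.get_size())  # 2 2 9
print(nodes["h"].get_root() is a, a.find("F") is nodes["f"])  # True True
```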

For this function, we are asking: is the current instance an ancestor of the input node? In this implementation, we will start at our instance and work down through the tree trying to find the node in question. With that in mind, we can define this process recursively:

```
function ISANCESTOR(TREE)
    if at TREE
        return true
    else if at LEAF
        return false
    else
        for CHILD in CHILDREN
            FOUND = CHILD.ISANCESTOR(TREE)
            if FOUND
                return true
        return false
end function
```

For this function, we are asking: is the current instance a descendant of the input node? In this implementation, we will start at our instance and work up through the tree trying to find the node in question. With that in mind, we can define this process recursively:

```
function ISDESCENDANT(TREE)
    if at TREE
        return true
    else if at ROOT
        return false
    else
        return PARENT.ISDESCENDANT(TREE)
end function
```

For this function, we are asking: is the input node a sibling of the current instance? To determine this, we can get the parent of the current instance and then get the parent's children. Finally, we check if the node in question is in that set of children.

```
function ISSIBLING(TREE)
    if ROOT
        return false
    if TREE in PARENT's CHILDREN
        return true
    else
        return false
end function
```

In any tree, we can say that the root is a common ancestor of all of the nodes. We would like to get more information about the common ancestry of two nodes. For this function, we are asking: which node is the first place where the ancestries of this instance and the input node meet? Similar to our ISDESCENDANT, we will work our way up the tree to find the point where they meet.

```
function LOWESTANCESTOR(TREE)
    if at TREE
        return TREE
    else if ISANCESTOR(TREE)
        return instance
    else if at ROOT
        return NONE
    else
        return PARENT.LOWESTANCESTOR(TREE)
end function
```

This function will generate the path that goes from the root to the current instance.

```
function PATHFROMROOT(PATH)
    if NOT ROOT
        PARENT.PATHFROMROOT(PATH)
    append ITEM to PATH
end function
```

In this module we have talked about two traversals: preorder and postorder. Both of these are defined recursively, and the prefix refers to when the root is visited.

In a preorder traversal, first we access the root and then run the preorder traversal on the children.

```
function PREORDER(RESULT)
    append ITEM to RESULT
    FOR CHILD in CHILDREN
        CHILD.PREORDER(RESULT)
end function
```

In a postorder traversal, first we run the postorder traversal on the children, then we access the root.

```
function POSTORDER(RESULT)
    FOR CHILD in CHILDREN
        CHILD.POSTORDER(RESULT)
    append ITEM to RESULT
end function
```

In this section, we discussed more terminology related to trees as well as tree traversals. To recap the new vocabulary:

Ancestor - The ancestors of a node are those reached from child to parent relationships. We can think of this as our parents and the parents of our parents, and so on.

Depth - The depth of a node is its distance to the root. Thus, the root has depth zero. Level and depth are related in that: level = 1 + depth.

Descendant - The descendants of a node are those reached from parent to child relationships. We can think of this as our children and our children’s children and so on.

Height of a Node - The height of a node is the length of the longest path to a leaf descendant. The height of a leaf is zero.

Height of a Tree - The height of a tree is equal to the height of the root.

Level - The level of a node characterizes the distance between the node and the root. The root of the tree is considered level 1. As you move away from the root, the level increases by one.

Path - a sequence of nodes and edges which connects a node with its descendant.

Siblings - Nodes which share the same parent.

Traversal - a general term we use to describe going through a tree. The preorder and postorder traversals are defined recursively.
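The relationship functions from this section (ISANCESTOR, ISDESCENDANT, ISSIBLING, LOWESTANCESTOR, and PATHFROMROOT) can be sketched in Python as below. Names are our own and `add_child` is simplified. As in the pseudocode, `x.is_ancestor(y)` is True when x is an ancestor of y, a node counts as its own ancestor and descendant, and a node counts as its own sibling; a root, which has no parent, returns False from `is_sibling` rather than failing.

```python
from typing import List, Optional


class MyTree:
    def __init__(self, item: object) -> None:
        self.item = item
        self.parent_node: Optional["MyTree"] = None
        self.children: List["MyTree"] = []

    def add_child(self, child: "MyTree") -> None:
        # Simplified attach (no validation) to keep the sketch short.
        self.children.append(child)
        child.parent_node = self

    def is_ancestor(self, tree: "MyTree") -> bool:
        """True if tree lies in this node's subtree (searches downward)."""
        if self is tree:
            return True
        # any() over no children is False, which covers the leaf case.
        return any(child.is_ancestor(tree) for child in self.children)

    def is_descendant(self, tree: "MyTree") -> bool:
        """True if tree lies on the path from this node up to the root."""
        if self is tree:
            return True
        if self.parent_node is None:
            return False
        return self.parent_node.is_descendant(tree)

    def is_sibling(self, tree: "MyTree") -> bool:
        """True if tree is among our parent's children."""
        if self.parent_node is None:
            return False
        return tree in self.parent_node.children

    def lowest_ancestor(self, tree: "MyTree") -> Optional["MyTree"]:
        """Walk upward until reaching a node whose subtree contains tree."""
        if self.is_ancestor(tree):       # also covers self is tree
            return self
        if self.parent_node is None:
            return None
        return self.parent_node.lowest_ancestor(tree)

    def path_from_root(self, path: List[object]) -> List[object]:
        """Append the items from the root down to this node onto path."""
        if self.parent_node is not None:
            self.parent_node.path_from_root(path)
        path.append(self.item)
        return path


nodes = {letter: MyTree(letter.upper()) for letter in "abcdefghi"}
for parent, kids in [("a", "bcd"), ("b", "ef"), ("d", "ghi")]:
    for kid in kids:
        nodes[parent].add_child(nodes[kid])
a, e, g, h = (nodes[k] for k in "aegh")

print(a.is_ancestor(g), g.is_descendant(a), g.is_sibling(h))  # True True True
print(e.lowest_ancestor(g).item, g.lowest_ancestor(h).item)    # A D
print(g.path_from_root([]))                                    # ['A', 'D', 'G']
```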

This page is the main page for Tries

Recall that in the beginning of our discussions about trees, we looked at a small tree which contained seven strings as motivation for trees. This was a small example of a trie (pronounced ‘try’), which is a type of tree that can represent sets of words.

Tries can be used for a variety of tasks ranging from leisurely games to accessibility applications. One example is ‘Boggle’ where players have a set of random letters and try to make as many words as possible. To code this game, we could create a vocabulary with a trie then traverse it to determine if players have played legal words. We can also use tries to provide better typing accessibility. Users could type a few letters of a word and our code could traverse the trie and suggest what letters or words they may be trying to enter.

A trie is a type of tree with some special characteristics. First, it must follow the guidelines of being a tree:

The special characteristics for tries are:

In this course, we will display nodes with two circles as a convention to show which nodes are the end of words. Looking at this small trie as an example, we can determine which words are contained in our trie.


We start at the root, which will typically be an empty string, and traverse to a double-lined node. For example, following "" -> a -> l -> l reaches a double-lined node, so the word ‘all’ is contained in our trie. Words within our tries do not have to end at leaves. Likewise, traversing "" -> a gives the word ‘a’. We say this trie ‘contains’ seven words: ‘a’, ‘an’, ‘and’, ‘ant’, ‘any’, ‘all’, and ‘alp’.

Let’s look at another example of a trie. Here we have a larger trie. Think about how many words are captured by the tree.

While ‘a’, ‘at’, and ‘bee’ are words in the English language, they are not recognized by our trie. Depending on what the user intended, this could be by design. When we build our tries, users will input the words that are valid for their vocabulary. Tries are not limited to the English language and can be created for any vocabulary.

To implement our own trie, we will build off of the MyTree class that we built recursively. We will add an attribute to record which nodes end a word and which do not.

We keep the existing attributes of MyTree: parent, children, and item. For MyTrie, we introduce the boolean attribute is_word to indicate whether a word ends at that node.

To add a word to our trie, we traverse through the trie letter by letter. We can define this recursively.
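Alongside the ADDWORD pseudocode in this section, here is a minimal Python sketch of the same recursion. It assumes a stripped-down trie node that keeps item, children, and is_word but omits the parent attribute; find_child is a hypothetical helper standing in for FINDCHILD.

```python
class MyTrie:
    """Minimal trie node sketch (no parent pointer; not the course's class)."""

    def __init__(self, item=""):
        self.item = item          # one character ("" for the root)
        self.children = []        # child MyTrie nodes
        self.is_word = False      # True if a word ends at this node

    def find_child(self, char):
        # Hypothetical stand-in for FINDCHILD: linear search over children.
        for child in self.children:
            if child.item == char:
                return child
        return None

    def add_word(self, word):
        if len(word) == 0:
            if self.is_word:
                return False      # the word was already present
            self.is_word = True
            return True
        first, remain = word[0], word[1:]
        child = self.find_child(first)
        if child is None:
            child = MyTrie(first)  # create the missing letter node
            self.children.append(child)
        return child.add_word(remain)


trie = MyTrie()
for w in ["a", "an", "and", "ant", "any", "all", "alp"]:
    trie.add_word(w)
print(trie.add_word("an"))   # False: already present
print(len(trie.children))    # 1: every word starts with 'a'
```

Returning a boolean lets the caller tell a new word apart from a duplicate without a separate lookup.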

```
function ADDWORD(WORD)
    if WORD length is 0
        if already a word
            return false
        else
            set is_word to true
            return true
    else
        FIRST = first character of WORD
        REMAIN = remainder of WORD
        CHILD = FINDCHILD(FIRST)
        if CHILD is NONE
            NODE = new MyTrie with item equal FIRST
            add NODE as a child of this node
            CHILD = NODE
        return CHILD.ADDWORD(REMAIN)
end function
```

Similar to adding a word, we traverse our trie letter by letter. Once we get to the end of the word, we set is_word to false. If the word ends at a leaf, we remove that leaf (then, if the node for the second-to-last character is now a leaf, we remove it too, and so on). If the word does not end at a leaf, meaning another word uses that node, we do not remove the node.
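Here is a minimal Python sketch of this removal rule. It assumes a stripped-down trie node with item, children, and is_word (no parent pointer), and find_child is a hypothetical stand-in for FINDCHILD. A small add_word is included only so the example can build a trie.

```python
class MyTrie:
    """Minimal trie node sketch (hypothetical; not the course's class)."""

    def __init__(self, item=""):
        self.item, self.children, self.is_word = item, [], False

    def find_child(self, char):
        return next((c for c in self.children if c.item == char), None)

    def add_word(self, word):
        if not word:
            added = not self.is_word
            self.is_word = True
            return added
        child = self.find_child(word[0])
        if child is None:
            child = MyTrie(word[0])
            self.children.append(child)
        return child.add_word(word[1:])

    def remove_word(self, word):
        if not word:
            if not self.is_word:
                return False          # nothing to remove
            self.is_word = False
            return True
        child = self.find_child(word[0])
        if child is None:
            return False              # the word's path does not exist
        removed = child.remove_word(word[1:])
        # Prune the child once it no longer ends a word and has no children.
        if not child.is_word and not child.children:
            self.children.remove(child)
        return removed


trie = MyTrie()
trie.add_word("an")
trie.add_word("ant")
trie.remove_word("ant")
n = trie.find_child("a").find_child("n")
print(n.is_word, n.children)  # True []
trie.remove_word("an")
print(trie.children)          # []
```

Pruning happens as the recursion unwinds, so one removal can clear a whole chain of nodes, but it stops at the first node that still ends a word or has other children.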

In short: when a node's is_word is false and it is a leaf, we remove the node.

```
function REMOVEWORD(WORD)
    if WORD length is 0
        if not already a word
            return false
        else
            set is_word to false
            return true
    else
        FIRST = first character of WORD
        REMAIN = remainder of WORD
        CHILD = FINDCHILD(FIRST)
        if CHILD is NONE
            return false
        else
            RET = CHILD.REMOVEWORD(REMAIN)
            if CHILD is not a word AND CHILD is a leaf
                REMOVECHILD(CHILD)
            return RET
end function
```

Again, we traverse the trie letter by letter. Once we get to the last letter, we return that node's is_word attribute. It is possible that, somewhere in our word, a letter is not a child of the previous node. If that is the case, we return false.
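A minimal Python sketch of this lookup follows. The trie node here is stripped down (item, children, is_word only), find_child is a hypothetical stand-in for FINDCHILD, and the iterative add_word exists only to build the example.

```python
class MyTrie:
    """Minimal trie node sketch (hypothetical; not the course's class)."""

    def __init__(self, item=""):
        self.item = item
        self.children = []
        self.is_word = False

    def find_child(self, char):
        for child in self.children:
            if child.item == char:
                return child
        return None

    def add_word(self, word):
        # Iterative insertion, included only so the example can build a trie.
        node = self
        for char in word:
            child = node.find_child(char)
            if child is None:
                child = MyTrie(char)
                node.children.append(child)
            node = child
        node.is_word = True

    def contains_word(self, word):
        if not word:
            return self.is_word       # reached the node for the last letter
        child = self.find_child(word[0])
        if child is None:
            return False              # some letter is missing along the path
        return child.contains_word(word[1:])


trie = MyTrie()
trie.add_word("and")
trie.add_word("ant")
print(trie.contains_word("and"))  # True
print(trie.contains_word("an"))   # False: a prefix, but not a stored word
```

Note that reaching a node is not enough: a prefix such as "an" has a path in the trie, yet it only counts as a word if is_word is set on its last node.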

```
function CONTAINSWORD(WORD)
    if WORD length is 0
        return is_word
    else
        FIRST = first character of WORD
        REMAIN = remainder of WORD
        CHILD = FINDCHILD(FIRST)
        if CHILD is NONE
            return false
        else
            return CHILD.CONTAINSWORD(REMAIN)
end function
```

For this function, we want to get the total number of words that are contained within our trie. We will fan out through all of the children and count all of the nodes that have their is_word attribute equal to true.
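The counting recursion can be sketched in Python as below. The node class is a stripped-down, hypothetical stand-in for MyTrie, and add_word is a simple iterative helper included only to build the example trie.

```python
class MyTrie:
    """Minimal trie node sketch (hypothetical; not the course's class)."""

    def __init__(self, item=""):
        self.item = item
        self.children = []
        self.is_word = False

    def add_word(self, word):
        # Iterative insertion, included only so the example can build a trie.
        node = self
        for char in word:
            child = next((c for c in node.children if c.item == char), None)
            if child is None:
                child = MyTrie(char)
                node.children.append(child)
            node = child
        node.is_word = True

    def word_count(self):
        # Count this node if a word ends here, then add every child's count.
        count = 1 if self.is_word else 0
        for child in self.children:
            count += child.word_count()
        return count


trie = MyTrie()
for w in ["a", "an", "and", "ant", "any", "all", "alp"]:
    trie.add_word(w)
print(trie.word_count())  # 7
```

Every node is visited exactly once, so the count takes time proportional to the number of nodes in the trie.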

```
function WORDCOUNT()
    COUNT = 0
    if is_word
        COUNT = 1
    for CHILD in CHILDREN
        COUNT += CHILD.WORDCOUNT()
    return COUNT
end function
```

Next, we want to get the length of the longest word contained in our trie. To do this, we recurse into each child, take the largest length that any child reports, and add one for the current node.
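A minimal Python sketch of this computation follows, using a stripped-down, hypothetical trie node. In the MAXWORD pseudocode, both branches return 0 for a leaf, so the sketch simply returns 0 for any leaf. It measures edges down to the deepest leaf, which equals the longest word's length when called on the root, assuming (as adding and removing words maintain) that every leaf ends a word.

```python
class MyTrie:
    """Minimal trie node sketch (hypothetical; not the course's class)."""

    def __init__(self, item=""):
        self.item = item
        self.children = []
        self.is_word = False

    def add_word(self, word):
        # Iterative insertion, included only so the example can build a trie.
        node = self
        for char in word:
            child = next((c for c in node.children if c.item == char), None)
            if child is None:
                child = MyTrie(char)
                node.children.append(child)
            node = child
        node.is_word = True

    def max_word(self):
        if not self.children:
            return 0                  # a leaf adds no further letters
        # One letter for the step down, plus the longest path below it.
        return 1 + max(child.max_word() for child in self.children)


trie = MyTrie()
for w in ["a", "an", "and", "ant", "any", "all", "alp"]:
    trie.add_word(w)
print(trie.max_word())  # 3
trie.add_word("alpine")
print(trie.max_word())  # 6
```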

```
function MAXWORD()
    if LEAF and is_word
        return 0
    else
        MAX = -1
        for CHILD in CHILDREN
            COUNT = CHILD.MAXWORD()
            if COUNT greater than MAX
                MAX = COUNT
        return MAX + 1
end function
```

This function will act as an auto-complete utility of sorts. A user will input a string of characters, and we will return all of the words in our trie that begin with that string. This happens in two phases. First, we traverse the trie to get to the end of the input string (lines 1-12). The second portion then gets all of the words that are contained after that point in our trie (lines 14-21).
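Here is a minimal Python sketch of the two phases, using a stripped-down, hypothetical trie node (find_child stands in for FINDCHILD, and add_word only builds the example). One design assumption matters: each node reports completions relative to itself, with "" meaning "a word ends right here", and the caller prepends the child's item. This way, each letter is added exactly once.

```python
class MyTrie:
    """Minimal trie node sketch (hypothetical; not the course's class)."""

    def __init__(self, item=""):
        self.item = item
        self.children = []
        self.is_word = False

    def find_child(self, char):
        for child in self.children:
            if child.item == char:
                return child
        return None

    def add_word(self, word):
        # Iterative insertion, included only so the example can build a trie.
        node = self
        for char in word:
            child = node.find_child(char)
            if child is None:
                child = MyTrie(char)
                node.children.append(child)
            node = child
        node.is_word = True

    def completions(self, prefix):
        # Phase 1: walk down the trie one character of the prefix at a time.
        if prefix:
            child = self.find_child(prefix[0])
            if child is None:
                return []
            return [child.item + rest for rest in child.completions(prefix[1:])]
        # Phase 2: collect every word ending at or below this node.
        # "" means "a word ends here"; the caller prepends this node's item.
        output = [""] if self.is_word else []
        for child in self.children:
            output += [child.item + rest for rest in child.completions("")]
        return output


trie = MyTrie()
for word in ["a", "an", "and", "ant", "any", "all", "alp"]:
    trie.add_word(word)
print(trie.completions("an"))   # ['an', 'and', 'ant', 'any']
print(trie.completions("b"))    # []
```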

function COMPLETIONS(WORD)

1. if WORD length greater than 0

2. FIRST = first character of WORD

3. REMAIN = remainder of WORD

4. CHILD = FINDCHILD(FIRST)

5. if CHILD is none

6. return []

7. else

8. COMPLETES = CHILD.COMPLETIONS(REMAIN)

9. OUTPUT = []

10. for COM in COMPLETES

11. append CHILD.item + COM to OUTPUT

12. return OUTPUT

13. else

14. OUTPUT = []

15. if is_word

16. append ITEM to OUTPUT

17. for CHILD in CHILDREN

18. COMPLETES = CHILD.COMPLETIONS("")

19. for COM in COMPLETES

20. append CHILD.item + COM to OUTPUT

21. reutrn OUTPUT

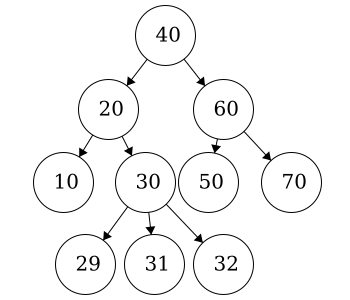

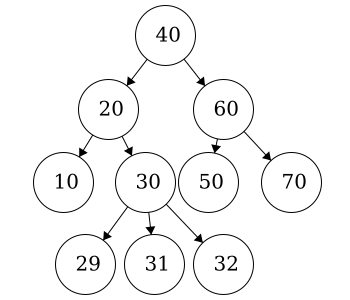

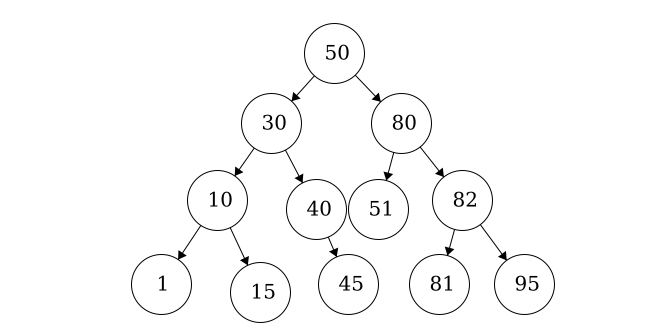

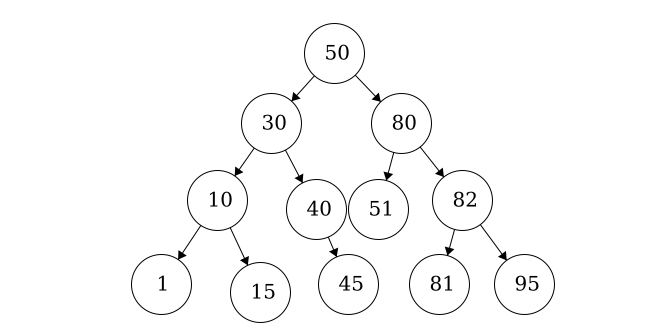

end functionThis page is the main page for Binary Trees

A binary tree is a type of tree with some special conditions. First, it must follow the guidelines of being a tree:

The special conditions that we impose on binary trees are the following:

To reinforce these concepts, we will look at examples of binary trees and examples that are not binary trees.


This is a valid binary tree. We have a single node, the root, with no children. As with general trees, binary trees are built recursively. Thus, each node together with its descendants is itself a binary tree.

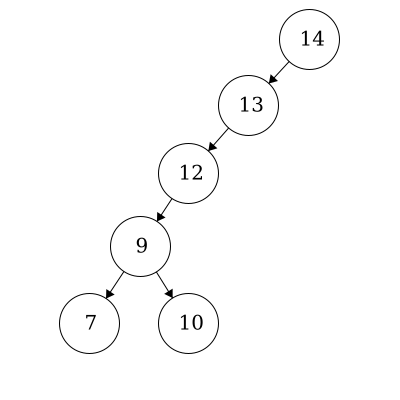

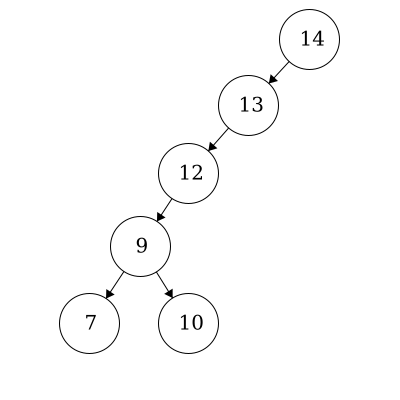

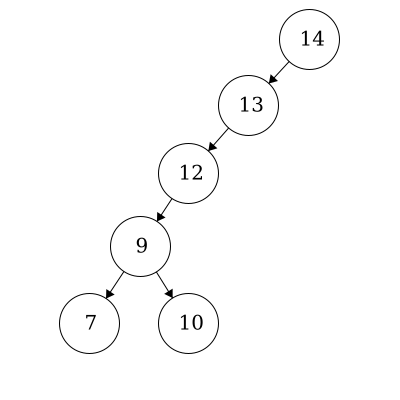

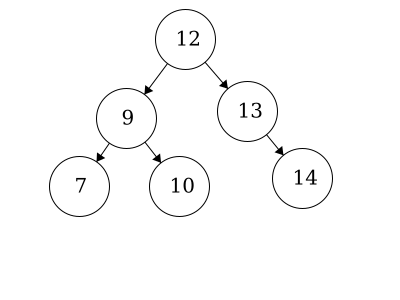

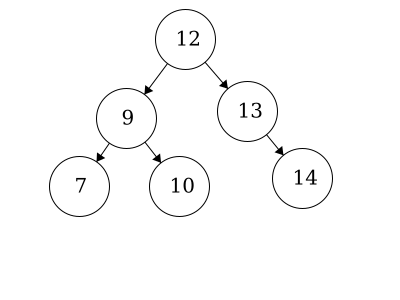


This is also a valid binary tree. All of the left children are less than their parent. The node with item ‘10’ is also in the correct position as it is less than 12, 13, and 14 but greater than 9.

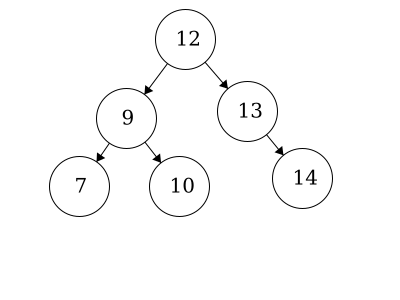

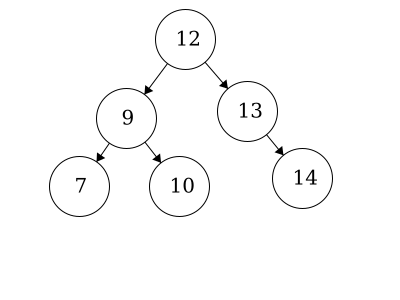

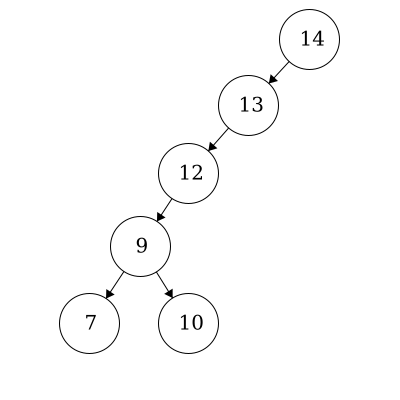


We have the same nodes but our root is now 12 whereas before it was 14. This is also a valid binary tree.


Here we have an example of a binary tree with alphabetical items. As long as we have items which have a predefined order, we can organize them using a binary tree.

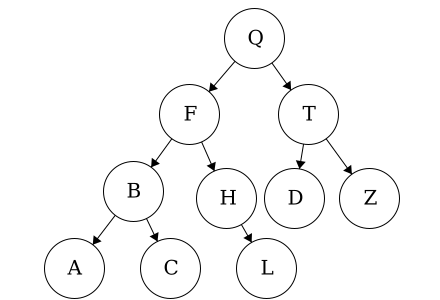

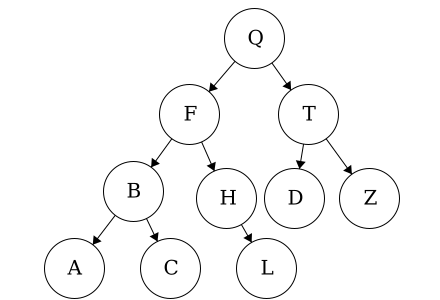


We may be inclined to say that this is a binary tree: each node has 0, 1, or 2 children, and for each parent and child, the left child is smaller than the parent and the right child is greater than the parent. However, in binary trees, all of the nodes in the left subtree must be smaller than the root and all of the nodes in the right subtree must be larger than the root, and this must hold at every node. In this tree, D is out of place. Node D is less than node T, but it is also less than node Q. Thus, node D must be on the left of node Q.
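One way to check the whole-subtree rule, rather than just each parent/child pair, is to pass down the allowed range of values. The sketch below assumes a hypothetical Node class and a hypothetical three-node tree shaped like the problem described (the figure itself is not reproduced). Equal items are rejected, consistent with insertion refusing duplicates.

```python
class Node:
    """Minimal binary tree node for this sketch (hypothetical, not MyBinaryTree)."""

    def __init__(self, item, left=None, right=None):
        self.item = item
        self.left = left
        self.right = right


def is_ordered(node, low=None, high=None):
    """Return True if every item lies strictly between low and high.

    Going left, the parent becomes the upper bound; going right, it becomes
    the lower bound. This enforces the rule for entire subtrees.
    """
    if node is None:
        return True
    if low is not None and node.item <= low:
        return False
    if high is not None and node.item >= high:
        return False
    return (is_ordered(node.left, low, node.item)
            and is_ordered(node.right, node.item, high))


# Every parent/child pair looks fine, yet D sits in Q's right subtree.
bad = Node("Q", right=Node("T", left=Node("D")))
good = Node("Q", left=Node("D"), right=Node("T"))
print(is_ordered(bad), is_ordered(good))  # False True
```

A check that only compares each node with its immediate children would wrongly accept the first tree.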


In this case, we do not have a binary tree. This does fit all of the criteria for being a tree but not the criteria for a binary tree. Nodes in binary trees can have at most 2 children. Node 30 has three children.

In the first module, we discussed two types of traversals: preorder and postorder. Within that discussion, we noted that for general trees, the preorder and postorder traversals may not be unique. This is because a node's children form an unordered set.

We are now working with binary trees, which have a defined child order. As a result, the preorder and postorder traversals will be unique! This means that when we do a preorder traversal of a binary tree, there is exactly one possible output string. The same applies to postorder traversals.

Recall that these were defined as such:

Now for binary trees, we can modify their definitions to be more explicit:

Let’s practice traversals on the following binary tree.

Since we have fixed order on the children, we can introduce another type of traversal: in-order traversal.

In-order Traversal:
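A minimal Python sketch of the three traversals on a binary tree follows. It assumes the standard definitions: preorder visits the node, then the left subtree, then the right subtree. In-order visits the left subtree, then the node, then the right subtree. Postorder visits the left subtree, then the right subtree, then the node. The Node class is hypothetical, and the example tree is an assumed arrangement of the items 9, 10, 12, 13, and 14, not a reproduction of any figure.

```python
class Node:
    """Minimal binary tree node for this sketch (hypothetical)."""

    def __init__(self, item, left=None, right=None):
        self.item = item
        self.left = left
        self.right = right


def preorder(node):
    # Node, then left subtree, then right subtree.
    if node is None:
        return []
    return [node.item] + preorder(node.left) + preorder(node.right)


def inorder(node):
    # Left subtree, then node, then right subtree.
    if node is None:
        return []
    return inorder(node.left) + [node.item] + inorder(node.right)


def postorder(node):
    # Left subtree, then right subtree, then node.
    if node is None:
        return []
    return postorder(node.left) + postorder(node.right) + [node.item]


tree = Node(12, Node(9, None, Node(10)), Node(14, Node(13)))
print(preorder(tree))   # [12, 9, 10, 14, 13]
print(inorder(tree))    # [9, 10, 12, 13, 14]
print(postorder(tree))  # [10, 9, 13, 14, 12]
```

Because this tree keeps smaller items on the left and larger items on the right, the in-order traversal comes out sorted.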

Our implementation of binary trees will inherit from our MyTree implementation as binary trees are types of trees. Thus, MyBinaryTree will have the functionality of MyTree in addition to the following.

The binary tree has two attributes:

- Left child - a MyBinaryTree whose item should be less than the item of the parent.
- Right child - a MyBinaryTree whose item should be greater than the item of the parent.

Get Size

This relies on the MyTree size function. If the tree is empty, we return zero. If the tree is not empty, we call the MyTree size function.

Is Empty

To Sorted List

```
function TOSORTEDLIST()
    LIST = []
    if there's a LEFTCHILD
        LIST = LIST + LEFTCHILD.TOSORTEDLIST()
    LIST = LIST + [ITEM]
    if there's a RIGHTCHILD
        LIST = LIST + RIGHTCHILD.TOSORTEDLIST()
    return LIST
end function
```

When inserting children into a binary tree, we must take some special considerations into account. All of the node items in the left subtree must be less than the parent node item, and all of the node items in the right subtree must be greater than the parent node item.
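The insertion rule, together with the To Sorted List procedure, can be sketched in Python as follows. This is a minimal sketch under stated assumptions: an empty tree is a node whose item is None, duplicates are rejected (as in the INSERT pseudocode in this section), and the left and right children are stored directly rather than also kept in a MyTree children list.

```python
class MyBinaryTree:
    """Sketch only: an empty tree is a node whose item is None."""

    def __init__(self, item=None):
        self.item = item
        self.left = None   # subtree of items smaller than self.item
        self.right = None  # subtree of items larger than self.item

    def insert(self, value):
        if self.item is None:          # empty tree: store the value here
            self.item = value
            return True
        if value == self.item:         # duplicates are rejected
            return False
        if value < self.item:
            if self.left is None:
                self.left = MyBinaryTree(value)
                return True
            return self.left.insert(value)
        if self.right is None:
            self.right = MyBinaryTree(value)
            return True
        return self.right.insert(value)

    def to_sorted_list(self):
        # In-order traversal: left subtree, this item, right subtree.
        result = []
        if self.left is not None:
            result += self.left.to_sorted_list()
        if self.item is not None:
            result.append(self.item)
        if self.right is not None:
            result += self.right.to_sorted_list()
        return result


tree = MyBinaryTree()
for value in [50, 30, 70, 20, 40, 85]:
    tree.insert(value)
print(tree.insert(40))         # False: already present
print(tree.to_sorted_list())   # [20, 30, 40, 50, 70, 85]
```

Each insertion follows a single path from the root, comparing against one node per level until it finds an empty slot on the correct side.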

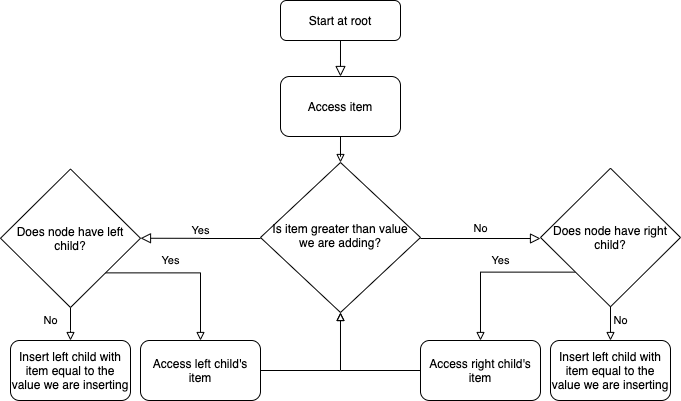

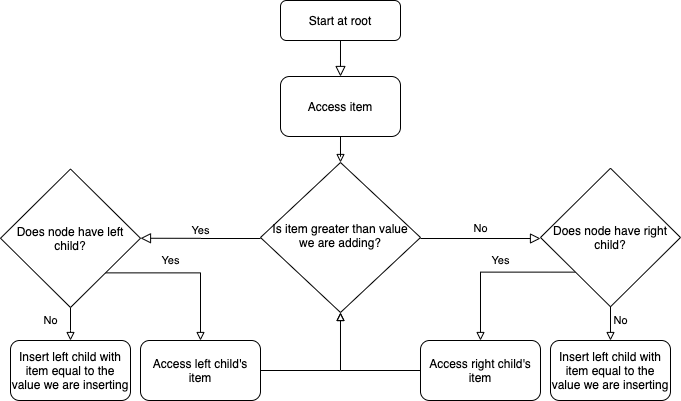

The general procedure for adding a child is the following:

Suppose that we have the following tree and we want to add a node with item ‘85’.

```
function INSERT(VALUE)
    if node is empty
        set node's ITEM to VALUE
        return true
    else
        if node.ITEM is VALUE
            return false
        else if node.ITEM > VALUE
            LC = node's left child
            if LC is NONE
                CHILD = new BINARYTREE with root.ITEM equal VALUE
                add CHILD to node's children
                set node.LEFTCHILD equal to CHILD
                return true
            else
                return LC.INSERT(VALUE)
        else
            RC = node's right child
            if RC is NONE
                CHILD = new BINARYTREE with root.ITEM equal VALUE
                add CHILD to node's children
                set node.RIGHTCHILD equal to CHILD
                return true
            else
                return RC.INSERT(VALUE)
end function
```

Removing children is not as straightforward as inserting them. The general procedure for removing a child is to replace that node's value with its smallest right descendant (the smallest value in its right subtree). First, we traverse the binary tree until we find the node with the value we are trying to remove (lines 18-32 below). Then we have three separate cases, discussed in detail below.

Removing a leaf is the most straightforward. We remove the value from the node and then sever the connection between parent and child. (lines 5-7 below)

Suppose we have this binary tree and we want to remove value 5. What do you think the resulting binary tree will look like?

When we remove a value from a node that does not have a right child, we cannot replace the value with the smallest value in its right subtree. Instead, we replace the value with the smallest value in its left subtree and then prune the tree to clean it up. After this replacement, every value remaining in the left subtree is larger than the node's new value, so we must move the node's left child to be its right child in order to maintain proper binary tree structure. (lines 8-13 below)

Suppose we have this binary tree and we want to remove value 4. What do you think the resulting binary tree will look like?

When we remove a value from a node that has a right child, we replace the value with the smallest value in the node's right subtree. (lines 14-17 below)

Suppose we have this binary tree and we want to remove value 10. What do you think the resulting binary tree will look like?
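The three removal cases can be sketched in Python as follows. This is a minimal sketch following the approach described in this section, under these assumptions: an emptied node keeps item None until its parent prunes it, left and right children are stored directly (no MyTree children list), and prune_left, prune_right, and remove_smallest are Python-style stand-ins for the PRUNELEFT, PRUNERIGHT, and REMOVESMALLEST helpers. insert and to_sorted_list are included only to build and inspect the example.

```python
class MyBinaryTree:
    """Sketch only: a node whose item is None is empty and is pruned by its parent."""

    def __init__(self, item=None):
        self.item = item
        self.left = None
        self.right = None

    def insert(self, value):
        # Included so the example can build a tree; rejects duplicates.
        if self.item is None:
            self.item = value
            return True
        if value == self.item:
            return False
        side = "left" if value < self.item else "right"
        child = getattr(self, side)
        if child is None:
            setattr(self, side, MyBinaryTree(value))
            return True
        return child.insert(value)

    def to_sorted_list(self):
        left = self.left.to_sorted_list() if self.left is not None else []
        right = self.right.to_sorted_list() if self.right is not None else []
        middle = [self.item] if self.item is not None else []
        return left + middle + right

    def prune_left(self):
        # Sever an emptied left child, keeping its right child (if any) in its place.
        if self.left is not None and self.left.item is None:
            self.left = self.left.right

    def prune_right(self):
        if self.right is not None and self.right.item is None:
            self.right = self.right.right

    def remove_smallest(self):
        # The smallest item is found by following left children.
        if self.left is not None:
            smallest = self.left.remove_smallest()
            self.prune_left()
            return smallest
        smallest = self.item
        if self.right is not None:
            self.item = self.right.remove_smallest()
            self.prune_right()
        else:
            self.item = None  # this node is now empty; the parent prunes it
        return smallest

    def remove(self, value):
        if self.item is None:
            raise ValueError("cannot remove from an empty tree")
        if value == self.item:
            if self.left is None and self.right is None:
                self.item = None                           # case 1: leaf
            elif self.right is None:
                self.item = self.left.remove_smallest()    # case 2: left subtree only
                self.prune_left()
                # Everything still in the left subtree is larger than the new item.
                self.right, self.left = self.left, None
            else:
                self.item = self.right.remove_smallest()   # case 3: right subtree exists
                self.prune_right()
            return True
        if value < self.item:
            if self.left is None:
                return False
            success = self.left.remove(value)
            self.prune_left()
            return success
        if self.right is None:
            return False
        success = self.right.remove(value)
        self.prune_right()
        return success


tree = MyBinaryTree()
for value in [50, 30, 70, 20, 40, 60, 80]:
    tree.insert(value)
tree.remove(50)                           # case 3: 60 moves up to the root
print(tree.item, tree.to_sorted_list())   # 60 [20, 30, 40, 60, 70, 80]
```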

```
1.  function REMOVE(VALUE)
2.      if node is empty
3.          error
4.      if node.ITEM is VALUE
5.          if node is a leaf
6.              set node.ITEM to NONE
7.              return TRUE
8.          else if node has no right child
9.              node.ITEM = LEFTCHILD.REMOVESMALLEST()
10.             prune left-side
11.             store left child in right child
12.             set left child to NONE
13.             return TRUE
14.         else
15.             node.ITEM = RIGHTCHILD.REMOVESMALLEST()
16.             prune right-side
17.             return TRUE
18.     else
19.         if node.ITEM > VALUE
20.             if node has LEFTCHILD
21.                 SUCCESS = LEFTCHILD.REMOVE(VALUE)
22.                 prune left-side
23.                 return SUCCESS
24.             else
25.                 return FALSE
26.         else
27.             if node has RIGHTCHILD
28.                 SUCCESS = RIGHTCHILD.REMOVE(VALUE)
29.                 prune right-side
30.                 return SUCCESS
31.             else
32.                 return FALSE
33. end function
```

We use the pruning functions to sever the tie between a parent and a child whose value has been removed.

```
function PRUNERIGHT()
    if RIGHTCHILD has no value
        REMOVECHILD(RIGHTCHILD)
        set this node's RIGHTCHILD to the former RIGHTCHILD's RIGHTCHILD
        if RIGHTCHILD is not NONE
            ADDCHILD(RIGHTCHILD)
end function

function PRUNELEFT()
    if LEFTCHILD has no value
        REMOVECHILD(LEFTCHILD)
        set this node's LEFTCHILD to the former LEFTCHILD's RIGHTCHILD
        if LEFTCHILD is not NONE
            ADDCHILD(LEFTCHILD)
end function
```

We use the remove smallest function to retrieve the smallest value in a subtree, which then replaces the value we are removing.
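The remove smallest procedure can be exercised on its own: calling it repeatedly on the root should hand back the items in ascending order. The Node class below is a hypothetical, minimal stand-in that assumes distinct values, marks an emptied node with item None, and prunes inline in the same way as the prune functions above.

```python
class Node:
    """Sketch only: assumes distinct values; item None marks a node to prune."""

    def __init__(self, item):
        self.item = item
        self.left = None
        self.right = None

    def insert(self, value):
        side = "left" if value < self.item else "right"
        child = getattr(self, side)
        if child is None:
            setattr(self, side, Node(value))
        else:
            child.insert(value)

    def remove_smallest(self):
        if self.left is not None:
            smallest = self.left.remove_smallest()
            if self.left.item is None:        # prune left-side
                self.left = self.left.right
            return smallest
        smallest = self.item
        if self.right is not None:
            # Pull up the smallest item of the right subtree into this node.
            self.item = self.right.remove_smallest()
            if self.right.item is None:       # prune right-side
                self.right = self.right.right
        else:
            self.item = None                  # this node is now empty
        return smallest


root = Node(40)
for value in [20, 60, 10, 30, 50, 70]:
    root.insert(value)

drained = []
while root.item is not None:
    drained.append(root.remove_smallest())
print(drained)   # [10, 20, 30, 40, 50, 60, 70]
```

Draining the tree this way is a quick sanity check that each call really removes the current minimum and leaves a valid tree behind.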

```
function REMOVESMALLEST()
    if node has left child
        REPLACEMENT = LEFTCHILD.REMOVESMALLEST()
        prune left-side
        return REPLACEMENT
    else
        REPLACEMENT = node.ITEM
        if node has right child
            node.ITEM = RIGHTCHILD.REMOVESMALLEST()
            prune right-side
        else
            node.ITEM = NONE
        return REPLACEMENT
end function
```


While this is a valid binary tree, it is not balanced. Let’s look at the following tree.

We have the same nodes but our root is now 12 whereas before it was 14. This is a valid binary tree. We call this a