CC 520 Syllabus - Spring 2026

Content in this syllabus is subject to change

CC 520 Spring 2026

Info

The preferred method of contact for help will be through the Edstem Discussion board, linked in the course navigation menu. Any questions or feedback can be posted there. More detail on using this platform can be found below and in Canvas.

All emails for the course should be sent to cc520-help@KSUemailProd.onmicrosoft.com (sorry I know it’s a long address). This will contact the professors and ALL the TAs for the course and guarantee the fastest response time if contacting via email (please allow one full business day for response). You are welcome to send emails that may contain more sensitive information directly to intended recipients.

Communication can also be done through Microsoft Teams.

Professor: Josh Weese – weeser@ksu.edu

- Office: 2214 Engineering Hall

- Office Hours: See my calendar. Office hours are always available online and in-person. For online help during office hours, please send a direct message in MS Teams (busy times will utilize https://officehours.cs.ksu.edu/).

Prerequisites

- CC 315 - Data Structures & Algorithms II

- CC 410 - Advanced Programming (Prerequisite or Concurrent Enrollment)

- Optional: MATH 312 - Finite Applications of Mathematics or MATH 510 - Discrete Mathematics

Recommended Texts

These books are not required. I will be providing notes, videos, and walk through examples during the course. If you are looking for more traditional text-book material, I have found these books to be helpful.

- T-SQL Fundamentals (Third Edition) by Itzik Ben-Gan

- Database Systems: The Complete Book (Second Edition) by Hector Garcia-Molina, Jeffrey D. Ullman, Jennifer Widom

- SQL Success - Database Programming Proficiency by Stephane Faroult

Required Software

We will be utilizing MS SQL Server for this course. For information about accessing SQL Server for the course, see SQL Server Access.

How to Get Help in this Course

CC 520 Database Essentials is not like your standard programming course. We will be doing some programming, but our focus will be primarily on data: how to store it, retrieve it, manipulate it, and use it with an application. Some of the topics we will cover are easier than others, but some can be fairly tricky. Because of that, and because this is an online course, you are encouraged to seek help whenever you feel overwhelmed or don’t understand a topic. You are not alone! We will always be willing to help with any questions you may have about the class or other issues related to Computing Science. So please, don’t be afraid to ask questions. Get help early and often!

Here are the six recommended ways to get help in this course:

- Review the course materials posted on K-State Canvas and the course website

- Check the Edstem Discussion board to see if a similar question has been asked; otherwise, post a new question.

- Visit your professor’s office hours, or the office hours for your TA if available

- Send a message to the CC 520 Help email (cc520-help@KSUemailProd.onmicrosoft.com).

- Ask your teammates for help or advice on assignments or projects (be mindful of the honor code!)

- Schedule a one-on-one meeting with your professor/TA

This semester, we will be using edstem.org, specifically their Ed Discussion platform. Ed Discussion is a Reddit/forum-style web app that allows students to post and ask questions. This will be our preferred way of communicating when it comes to questions in the course. Please adhere to the following guidelines:

- Before creating a new thread, please make sure there isn’t a similar one already made.

- If you are asking a question in Ed Discussion, please correctly mark it as such along with the correct tags.

- Please make your thread public when possible in case others have the same questions.

- Threads can be made anonymous when needed. Course staff may anonymize private threads and make them public if they find it to be beneficial for the class.

- When posting code, please do not post solutions or part of solutions to homework. If you need to share your code with us, please make your thread private.

- If you would like a new category or tag made, please let us know!

If you need help getting started with the platform, please go through the following links:

Course Overview

Introduction to concepts and techniques in database management. Overview of relational databases, NoSQL databases, and related topics. Database programming and use of databases in applications. Theory and architecture of database management systems (DBMS).

Course Description

The purpose of this course is to introduce concepts, approaches, and techniques in database management. This includes exploring the representation of information as data, data storage techniques, foundations of data models, data retrieval, database design, transaction management, integrity and security.

Student Learning Outcomes

After completing this course, a successful student will be able to:

- Read and write SQL, including queries, relations, database modifications, constraints, triggers, transactions, and views.

- Recognize how NoSQL and its philosophy differ from SQL.

- Design and create databases utilizing entity relationship models, functional dependencies, and normalization.

- Design queries and databases that are optimized in storage, retrieval, and processing of data.

- Create an application that utilizes a database.

Major Course Topics

- SQL Language

- NoSQL & its relation to SQL

- DBMS design

- Programming with databases

- Database system architecture

- Database efficiency

- Practical applications of databases

Course Structure

This course is being taught 100% online. There may be some bumps in the road as we refine the overall course structure. Students will work through each set of modules, with weekly or bi-weekly due dates. Material will be provided in the form of recorded videos, links to online resources, and discussion prompts. Each module will include a hands-on assignment, which will be graded interactively by the instructor. Assignments may also include written portions or presentations, which will be submitted online.

Grading

There will be three exams for the course. Students will be evaluated based on exams, homework assignments, and a term project. Assignments are to be completed without any collaboration with classmates or other outside help unless otherwise stated. Any unauthorized aid may result in a 0 for the assignment or an XF for the course and a report submitted to the Academic Honor Council. All assignments will be submitted through Canvas. The specific grading scheme is shown below:

- Exams/Quizzes: 25%

- Homework assignments: 30%

- Final Project: 45%
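As a rough illustration of how these weights combine, here is a minimal sketch. It assumes each category is first reduced to a percentage from 0 to 100 and that no rounding, curving, or dropped scores apply; the names `WEIGHTS` and `course_grade` are hypothetical and not part of any official gradebook.

```python
# Category weights from the grading scheme above, in percent.
WEIGHTS = {"exams": 25, "homework": 30, "project": 45}


def course_grade(scores: dict[str, float]) -> float:
    """Combine per-category percentages (0-100) into an overall percentage.

    Assumption: a plain weighted average with no curve or rounding.
    """
    return sum(WEIGHTS[name] * scores[name] for name in WEIGHTS) / 100


# Example: 80% on exams, 90% on homework, 100% on the final project.
example = course_grade({"exams": 80, "homework": 90, "project": 100})
print(example)  # 92.0
```

Because the final project carries the largest weight, a strong or weak project moves the overall grade more than either of the other two categories.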

Assignments

Warning

All work is expected to be done individually unless otherwise stated. Work submitted to Canvas must be 100% your own work (without unauthorized assistance, including AI). A violation of the Honor Code Policy (see below) will result in an automatic 0 for the assignment and the violation will be reported to the Honor System. A second violation will result in an XF in the course. The sanctions will apply to ALL parties involved in the violation.

Note that depending on the severity of the first violation, the sanction may be worse than just a 0 for the assignment.

There will be some programming and written assignments (majority will be SQL-based, but may involve some coding like the final project). It is acceptable to communicate with other students about the concepts in the assignment if you do not understand it, but you should not discuss the details of how the assignment should be completed (never share your code/work with another student!). Your submission should be your own work, or the work of your small group if allowed by the instructor. *When in doubt, ask!*

To avoid turning in code or SQL that does not work (or maybe even the wrong file), please double-check your solution after you have submitted it to Canvas. Redownload what you submitted from Canvas and run it again to ensure that the program you submitted works as intended.

Late Work

Warning

Poor planning/procrastination on your part does not constitute an emergency on ours.

Every student should strive to turn in work on time. Late work will receive a penalty of 10% of the possible points for each day it is late. Some assignments will NOT be accepted late! Others will be accepted only up to a maximum number of days late (usually three, but not always). Note that this penalty is applied on a per-hour basis (e.g., if your assignment is 12 hours late, it will receive a ~5% deduction).
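The per-hour penalty can be sketched as follows. This is only an illustration under stated assumptions: the penalty is assumed to accrue linearly at 10% per 24 hours, the three-day limit is used as a default even though the limit varies by assignment, and the function name `late_penalty_percent` is hypothetical.

```python
from typing import Optional


def late_penalty_percent(hours_late: float, max_days_late: float = 3.0) -> Optional[float]:
    """Return the deduction as a percent of possible points.

    Returns None when the submission is past the late limit and would
    not be accepted. Assumes linear proration of 10% per 24 hours.
    """
    if hours_late <= 0:
        return 0.0  # on time: no penalty
    if hours_late > max_days_late * 24:
        return None  # past the limit: not accepted
    return 10.0 * hours_late / 24.0


print(late_penalty_percent(12))  # 5.0
print(late_penalty_percent(36))  # 15.0
```

For an assignment that is not accepted late at all, the same sketch applies with `max_days_late=0`.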

Artificial Intelligence Usage Policy

This course uses a stoplight approach regarding the use of generative artificial intelligence (GenAI) tools, such as ChatGPT, Claude, Copilot, and others. Most assignments or groups of assignments will be clearly labelled with one of three labels, indicating what level of GenAI usage is allowed. If an assignment does not have a label, it should be assumed that GenAI usage is prohibited. The three labels are described below:

RED: GenAI Prohibited

You may not use any GenAI tools to complete this assignment. This assignment's main goal is to develop your own skills related to a particular task or topic, or to assess your own understanding of the concepts and skills required for this course. GenAI tools are therefore prohibited for these assignments, as they do not reflect or enhance your own learning journey.

(Stoplight CSS generated by ChatGPT-4.1.)

Policy Violations: Any usage of GenAI for this assignment will be treated as a violation of the K-State Honor Pledge and may result in a grade of 0 for the assignment and other sanctions approved through the K-State Honor Council.

YELLOW: Limited GenAI Usage Allowed

You may use GenAI tools in a limited way to complete this assignment. The assignment description may provide additional information about what tools are allowed and how they can be used. The goal of this assignment is to allow you to work with GenAI to complete a task or achieve a goal, but the completed work should still be a majority your own effort.

Citations Required: Any usage of GenAI must be noted and cited directly in the work, either in source code comments or text citations in written work. Citations should include the tool used, the prompt(s) given, context provided to the tool (e.g. existing code), and a discussion of how the results were used to complete the assignment.

No Direct AI Results: For this assignment, you may not include the GenAI results directly in your submission - it must be used to inform and adapted to fit your own work. For example, you may not prompt GenAI tools to just write your code and submit that directly; instead, you should ask for help performing specific tasks and then use the results within your own work.

Understand Your Work: To ensure compliance with this policy, the instructor reserves the right to request additional discussion or explanation of any work submitted by a student. The student should understand and be able to clearly explain all submitted work and code, even materials directly or indirectly produced by GenAI. A student who is unable to explain a submission to the satisfaction of the instructor may be considered to be in violation of this policy.

Policy Violations: Any usage of GenAI that involves direct submission of the GenAI outputs without additional work done by the student, or use of GenAI without proper citation, may be treated as a violation of the K-State Honor Pledge and may result in a grade of 0 for the assignment and other sanctions approved through the K-State Honor Council.

GREEN: GenAI Encouraged

You may make unlimited use of GenAI tools to complete this assignment. The goal of this assignment is to ensure you are comfortable with using GenAI tools to specifically meet a need or achieve a goal.

Citations Required: Any usage of GenAI must be noted and cited directly in the work, either in source code comments or text citations in written work. Citations should include the tool used, the prompt(s) given, context provided to the tool (e.g. existing code), and a discussion of how the results were used to complete the assignment.

Direct AI Results Allowed: For this assignment, you may include the GenAI results directly in your submission. It is still your responsibility to ensure the submission meets the assignment’s goals and is correct and factual - remember that GenAI is not infallible and may produce incorrect results. You are still solely responsible for ensuring the submission meets the stated assignment goals, and assignments in this category may receive additional scrutiny for correctness and accuracy.

Understand Your Work: To ensure compliance with this policy, the instructor reserves the right to request additional discussion or explanation of any work submitted by a student. The student should understand and be able to clearly explain all submitted work and code, even materials directly or indirectly produced by GenAI. A student who is unable to explain a submission to the satisfaction of the instructor may be considered to be in violation of this policy.

Policy Violations: Any usage of GenAI without proper citation may be treated as a violation of the K-State Honor Pledge and may result in a grade of 0 for the assignment and other sanctions approved through the K-State Honor Council.

Please contact the instructor if you have any questions about this GenAI policy. It is your responsibility to understand it and proactively ask questions if you are unsure; ignorance of this policy is not an excuse for violating it.

Artificial Intelligence Disclosure

In keeping with the expectation for transparency and citation regarding the use of generative artificial intelligence (GenAI), the instructors of this course will clearly denote any usage of GenAI tools in the process of teaching this class. Specific policies for the usage of GenAI by the instructors and TAs of this course are given below:

- Grading and Feedback (RED: GenAI Prohibited) - GenAI will never be used to review student submissions or produce grading feedback. All grades and feedback will be provided by the instructors and TAs without any GenAI assistance.

- Student Communication (RED: GenAI Prohibited) - GenAI will never be used when communicating with students. We believe it is important for students to receive real, authentic communication from instructors and TAs.

- Lesson & Learning Content (YELLOW: Limited GenAI Usage Allowed) - GenAI may be used in a limited way to construct lessons and learning content, such as homework scenarios or simple graphics. All usage of GenAI will be clearly marked and cited. (As of January 2026, no GenAI content exists in the course).

Subject to Change

The details in this syllabus are not set in stone. Due to the flexible nature of this class, adjustments may need to be made as the semester progresses, though they will be kept to a minimum. If any changes occur, the changes will be posted on the K-State Canvas page for this course and emailed to all students.

Safe Zone Statement

I am part of the SafeZone community network of trained K-State faculty/staff/students who are available to listen and support you. As a SafeZone Ally, I can help you connect with resources on campus to address problems you face that interfere with your academic success, particularly issues of sexual violence, hateful acts, or concerns faced by individuals due to sexual orientation/gender identity. My goal is to help you be successful and to maintain a safe and equitable campus.

Standard Syllabus Statements

Info

The statements below are standard syllabus statements from K-State and our program. The latest versions are available online here.

Academic Honesty

Kansas State University has an Honor and Integrity System based on personal integrity, which is presumed to be sufficient assurance that, in academic matters, one’s work is performed honestly and without unauthorized assistance. Undergraduate and graduate students, by registration, acknowledge the jurisdiction of the Honor and Integrity System. The policies and procedures of the Honor and Integrity System apply to all full and part-time students enrolled in undergraduate and graduate courses on-campus, off-campus, and via distance learning. A component vital to the Honor and Integrity System is the inclusion of the Honor Pledge which applies to all assignments, examinations, or other course work undertaken by students. The Honor Pledge is implied, whether or not it is stated: “On my honor, as a student, I have neither given nor received unauthorized aid on this academic work.” A grade of XF can result from a breach of academic honesty. The F indicates failure in the course; the X indicates the reason is an Honor Pledge violation.

For this course, a violation of the Honor Pledge will result in sanctions such as a 0 on the assignment or an XF in the course, depending on severity. Actively seeking unauthorized aid, such as posting lab assignments on sites such as Chegg or StackOverflow, or asking another person to complete your work, even if unsuccessful, will result in an immediate XF in the course.

This course assumes that all your course work will be done by you. Use of AI text and code generators such as ChatGPT and GitHub Copilot in any submission for this course is strictly forbidden unless explicitly allowed by your instructor. Any unauthorized use of these tools without proper attribution is a violation of the K-State Honor Pledge.

We reserve the right to use various platforms that can perform automatic plagiarism detection by tracking changes made to files and comparing submitted projects against other students’ submissions and known solutions. That information may be used to determine if plagiarism has taken place.

Students with Disabilities

At K-State it is important that every student has access to course content and the means to demonstrate course mastery. Students with disabilities may benefit from services including accommodations provided by the Student Access Center. Disabilities can include physical, learning, executive functions, and mental health. You may register at the Student Access Center or to learn more contact:

- Manhattan/Olathe/Global Campus – Student Access Center

- K-State Salina Campus – Julie Rowe; Student Success Coordinator

Students already registered with the Student Access Center please request your Letters of Accommodation early in the semester to provide adequate time to arrange your approved academic accommodations. Once SAC approves your Letter of Accommodation it will be e-mailed to you, and your instructor(s) for this course. Please follow up with your instructor to discuss how best to implement the approved accommodations.

Expectations for Conduct

All student activities in the University, including this course, are governed by the Student Judicial Conduct Code as outlined in the Student Governing Association By Laws, Article V, Section 3, number 2. Students who engage in behavior that disrupts the learning environment may be asked to leave the class.

Mutual Respect and Inclusion in K-State Teaching & Learning Spaces

At K-State, faculty and staff are committed to creating and maintaining an inclusive and supportive learning environment for students from diverse backgrounds and perspectives. K-State courses, labs, and other virtual and physical learning spaces promote equitable opportunity to learn, participate, contribute, and succeed, regardless of age, race, color, ethnicity, nationality, genetic information, ancestry, disability, socioeconomic status, military or veteran status, immigration status, Indigenous identity, gender identity, gender expression, sexuality, religion, culture, as well as other social identities.

Faculty and staff are committed to promoting equity and believe the success of an inclusive learning environment relies on the participation, support, and understanding of all students. Students are encouraged to share their views and lived experiences as they relate to the course or their course experience, while recognizing they are doing so in a learning environment in which all are expected to engage with respect to honor the rights, safety, and dignity of others in keeping with the K-State Principles of Community.

If you feel uncomfortable because of comments or behavior encountered in this class, you may bring it to the attention of your instructor, advisors, and/or mentors. If you have questions about how to proceed with a confidential process to resolve concerns, please contact the Student Ombudsperson Office. Violations of the student code of conduct can be reported using the Code of Conduct Reporting Form. You can also report discrimination, harassment or sexual harassment, if needed.

Netiquette

Info

This is our personal policy and not a required syllabus statement from K-State. It has been adapted from this statement from K-State Online, and the Recurse Center Manual. We have adapted their ideas to fit this course.

Online communication is inherently different than in-person communication. When speaking in person, many times we can take advantage of the context and body language of the person speaking to better understand what the speaker means, not just what is said. This information is not present when communicating online, so we must be much more careful about what we say and how we say it in order to get our meaning across.

Here are a few general rules to help us all communicate online in this course, especially while using tools such as Canvas or Discord:

- Use a clear and meaningful subject line to announce your topic. Subject lines such as “Question” or “Problem” are not helpful. Subjects such as “Logic Question in Project 5, Part 1 in Java” or “Unexpected Exception when Opening Text File in Python” give plenty of information about your topic.

- Use only one topic per message. If you have multiple topics, post multiple messages so each one can be discussed independently.

- Be thorough, concise, and to the point. Ideally, each message should be a page or less.

- Include exact error messages, code snippets, or screenshots, as well as any previous steps taken to fix the problem. It is much easier to solve a problem when the exact error message or screenshot is provided. If we know what you’ve tried so far, we can get to the root cause of the issue more quickly.

- Consider carefully what you write before you post it. Once a message is posted, it becomes part of the permanent record of the course and can easily be found by others.

- If you are lost, don’t know an answer, or don’t understand something, speak up! Email and Canvas both allow you to send a message privately to the instructors, so other students won’t see that you asked a question. Don’t be afraid to ask questions anytime, as you can choose to do so without any fear of being identified by your fellow students.

- Class discussions are confidential. Do not share information from the course with anyone outside of the course without explicit permission.

- Do not quote entire message chains; only include the relevant parts. When replying to a previous message, only quote the relevant lines in your response.

- Do not use all caps. It makes it look like you are shouting. Use appropriate text markup (bold, italics, etc.) to highlight a point if needed.

- No feigning surprise. If someone asks a question, saying things like “I can’t believe you don’t know that!” is not helpful, and only serves to make that person feel bad.

- No “well-actually’s.” If someone makes a statement that is not entirely correct, resist the urge to offer a “well, actually…” correction, especially if it is not relevant to the discussion. If you can help solve their problem, feel free to provide correct information, but don’t post a correction just for the sake of being correct.

- Do not correct someone’s grammar or spelling. Again, it is not helpful, and only serves to make that person feel bad. If there is a genuine mistake that may affect the meaning of the post, please contact the person privately or let the instructors know privately so it can be resolved.

- Avoid subtle -isms and microaggressions. Avoid comments that could make others feel uncomfortable based on their personal identity. See the syllabus section on Mutual Respect and Inclusion above for more information on this topic. If a comment makes you uncomfortable, please contact the instructor.

- Avoid sarcasm, flaming, advertisements, lingo, trolling, doxxing, and other bad online habits. They have no place in an academic environment. Tasteful humor is fine, but sarcasm can be misunderstood.

As a participant in course discussions, you should also strive to honor the diversity of your classmates by adhering to the K-State Principles of Community.

Discrimination, Harassment, and Sexual Harassment

Kansas State University is committed to maintaining academic, housing, and work environments that are free of discrimination, harassment, and sexual harassment. Instructors support the University’s commitment by creating a safe learning environment during this course, free of conduct that would interfere with your academic opportunities. Instructors also have a duty to report any behavior they become aware of that potentially violates the University’s policy prohibiting discrimination, harassment, and sexual harassment, as outlined by PPM 3010.

If a student is subjected to discrimination, harassment, or sexual harassment, they are encouraged to make a non-confidential report to the University’s Office of Civil Rights and Title IX (OCR & TIX) using the online reporting form. Incident disclosure is not required to receive resources at K-State. Reports that include domestic and dating violence, sexual assault, or stalking, should be considered for reporting by the complainant to the Kansas State University Police Department or the Riley County Police Department. Reports made to law enforcement are separate from reports made to OCR & TIX. A complainant can choose to report to one or both entities. Confidential support and advocacy can be found with the K-State Center for Advocacy, Response, and Education (CARE). Confidential mental health services can be found with Lafene Counseling and Psychological Services (CAPS). Academic support can be found with the Student Support and Accountability (SSA) office. SSA is a non-confidential resource. OCR & TIX also provides a comprehensive list of resources on their website. If you have questions about non-confidential and confidential resources, please contact OCR & TIX at civilrights@k-state.edu or (785) 532–6220.

Academic Freedom Statement

Kansas State University is a community of students, faculty, and staff who work together to discover new knowledge, create new ideas, and share the results of their scholarly inquiry with the wider public. Although new ideas or research results may be controversial or challenge established views, the health and growth of any society requires frank intellectual exchange. Academic freedom protects this type of free exchange and is thus essential to any university’s mission.

Moreover, academic freedom supports collaborative work in the pursuit of truth and the dissemination of knowledge in an environment of inquiry, respectful debate, and professionalism. Academic freedom is not limited to the classroom or to scientific and scholarly research, but extends to the life of the university as well as to larger social and political questions. It is the right and responsibility of the university community to engage with such issues.

Campus Safety

Kansas State University is committed to providing a safe teaching and learning environment for students and faculty members. In order to enhance your safety in the unlikely case of a campus emergency, make sure that you know where and how to quickly exit your classroom and how to follow any emergency directives. Current Campus Emergency Information is available at the University’s Advisory webpage.

Student Resources

K-State has many resources to help contribute to student success. These resources include accommodations for academics, paying for college, student life, health and safety, and others. Check out the Student Guide to Help and Resources: One Stop Shop for more information.

Student Academic Creations

Student academic creations are subject to Kansas State University and Kansas Board of Regents Intellectual Property Policies. For courses in which students will be creating intellectual property, the K-State policy can be found at University Handbook, Appendix R: Intellectual Property Policy and Institutional Procedures (part I.E.). These policies address ownership and use of student academic creations.

Mental Health

Your mental health and good relationships are vital to your overall well-being. Symptoms of mental health issues may include excessive sadness or worry, thoughts of death or self-harm, inability to concentrate, lack of motivation, or substance abuse. Although problems can occur anytime for anyone, you should pay extra attention to your mental health if you are feeling academic or financial stress, discrimination, or have experienced a traumatic event, such as loss of a friend or family member, sexual assault or other physical or emotional abuse.

If you are struggling with these issues, do not wait to seek assistance.

For Kansas State Salina Campus:

For Global Campus/K-State Online:

University Excused Absences

K-State has a University Excused Absence policy (Section F62). Class absence(s) will be handled between the instructor and the student unless there are other university offices involved. For university excused absences, instructors shall provide the student the opportunity to make up missed assignments, activities, and/or attendance specific points that contribute to the course grade, unless they decide to excuse those missed assignments from the student’s course grade. Please see the policy for a complete list of university excused absences and how to obtain one. Students are encouraged to contact their instructor regarding their absences.

Face Coverings

Kansas State University strongly encourages, but does not require, that everyone wear masks while indoors on university property, including while attending in-person classes. For additional information and the latest updates, see K-State’s face covering policy.

Copyright Notification

Copyright 2026 (Joshua L. Weese) as to this syllabus and all lectures. During this course students are prohibited from selling notes to or being paid for taking notes by any person or commercial firm without the express written permission of the professor teaching this course. In addition, students in this class are not authorized to provide class notes or other class-related materials to any other person or entity, other than sharing them directly with another student taking the class for purposes of studying, without prior written permission from the professor teaching this course. The digital materials included here come with the legal permissions and releases of the copyright holders. These course materials should be used for educational purposes only; the contents should not be distributed electronically or otherwise beyond the confines of this online course. The URLs listed here do not suggest endorsement of either the site owners or the contents found at the sites. Likewise, mentioned brands (products and services) do not suggest endorsement. Students own copyright to what they create.

MS SQL Server Access

Info

With the new policies regarding the K-State VPN, I am working on an alternative for those of you who cannot install MS SQL Server on your own machine. I will post updates as soon as possible!

In order to complete homework assignments and practice on your own, you will need access to SQL Server. Your access requires a client tool and a server.

For this course, you will need to use a SQL client tool to access a SQL Server instance. Here are a few client tools that are available for use; however, we will be focusing our use on VS Code this semester:

Database Server

A database server is needed to host a database in addition to client tools which are used to access the database and run queries. In this course, we will use Microsoft SQL Server.

Option 1: Docker/GitHub Codespace

If you are unable to install SQL Server on your own machine, you can use a GitHub Codespace or Docker that has SQL Server already installed. This is provided via a GitHub classroom link in Canvas, along with instructions.

Option 2: Install Your Own

Installing your own copy of Microsoft SQL Server is highly encouraged. Most versions of SQL Server will work for this course…but I recommend the one below for a lightweight option (the full version of MS SQL Server is not needed for this course and can take up quite a bit of resources when installed).

Info

Note that since SQL Server Express typically runs under its own user in Windows, you may run into some file permission issues (Access Denied errors) when trying to run the above commands. If this is the case, move the .bak file to the Data folder inside your SQL Server install location. In most cases, this would be where that folder is located (version might be different): C:\Program Files\Microsoft SQL Server\MSSQL15.SQLEXPRESS\MSSQL\DATA. Try running the commands above again, but now with the .bak file at this location.

Subsections of Introduction to Tables and Constraints

Introduction to Tables and Constraints

YouTube Video

Video Transcription

Welcome everyone. In this video, we’re going to take our first look at some SQL, specifically T-SQL, which is Microsoft’s implementation of the SQL standard. So to start off, we’ll talk a little bit about basic table structure. We’ve already talked in a previous lecture about general table structure, but not necessarily how we actually create that in our database. We’ll also do a basic introduction to constraints today, which includes the NOT NULL constraint, primary keys, unique keys, foreign keys, check constraints, and default constraints. These are by no means all of the constraints that are possible in SQL, or in SQL Server, which is the database system that we’ll be using, but this should give you a good introduction to them. And we’ll be covering and using these throughout the course.

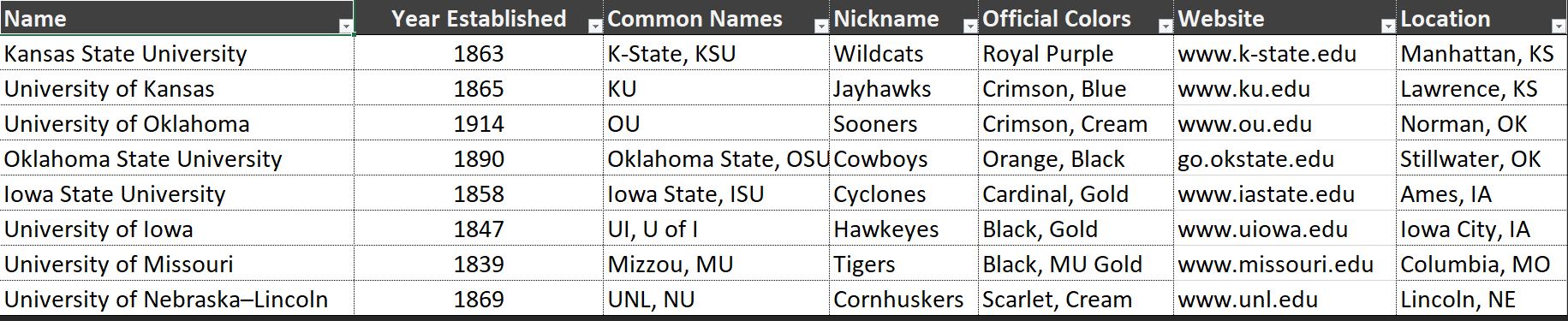

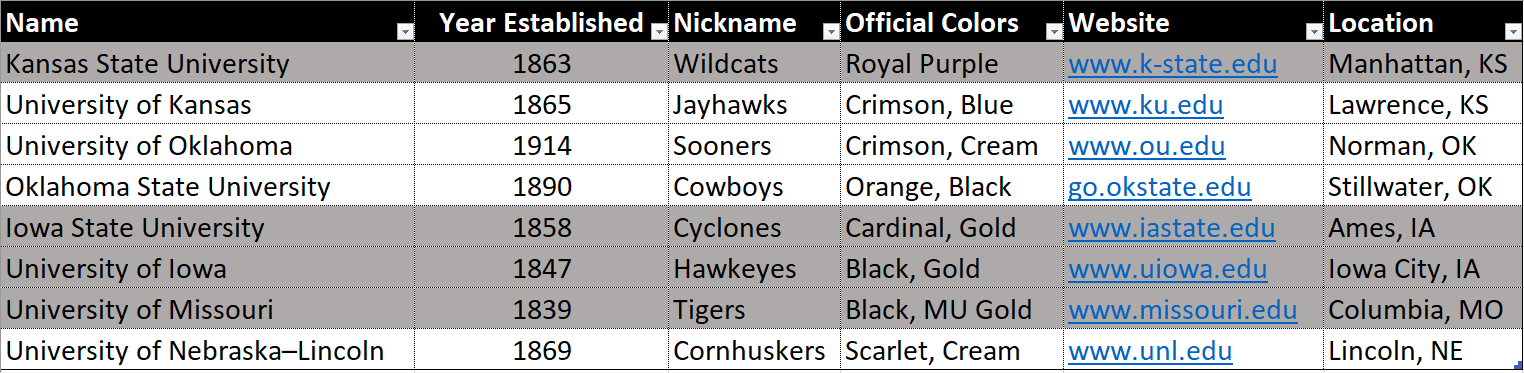

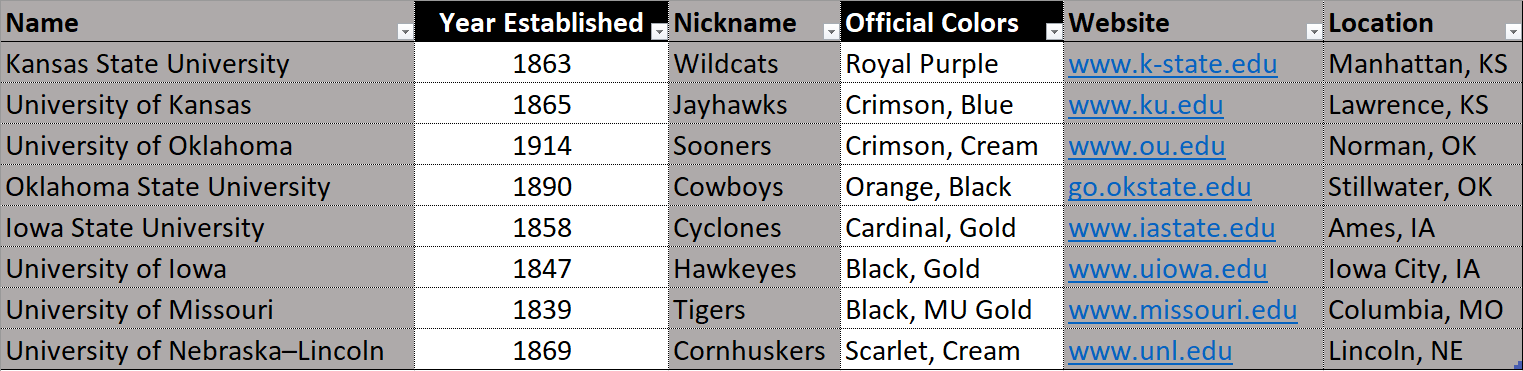

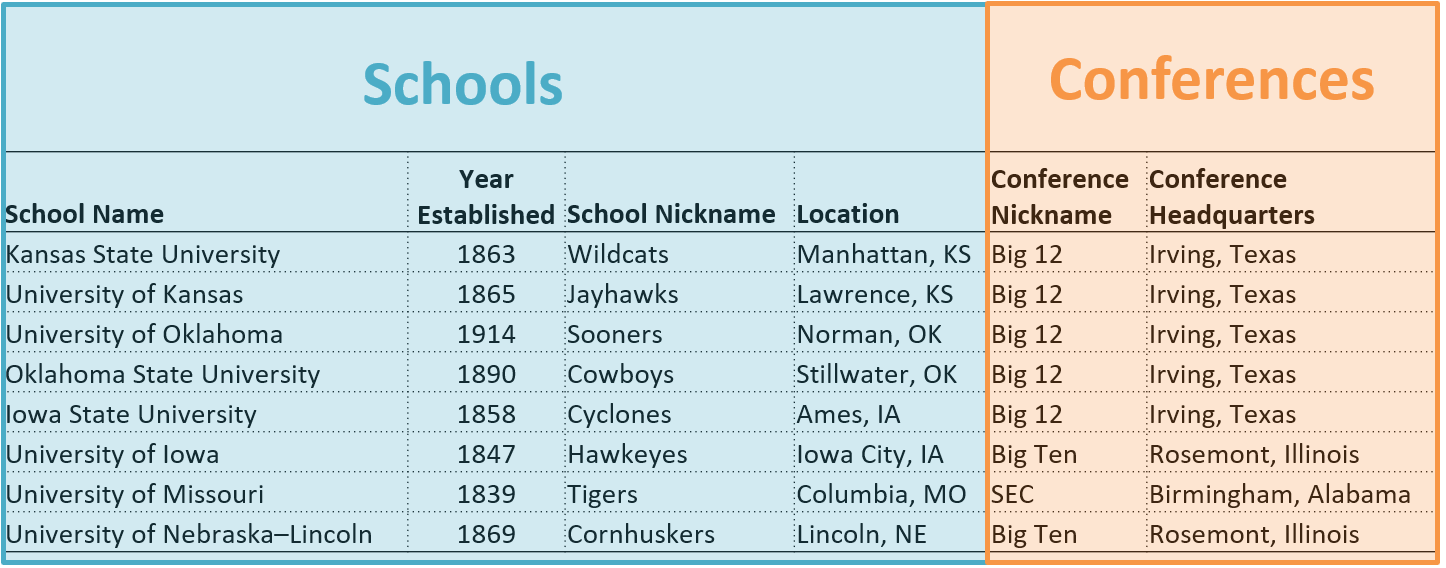

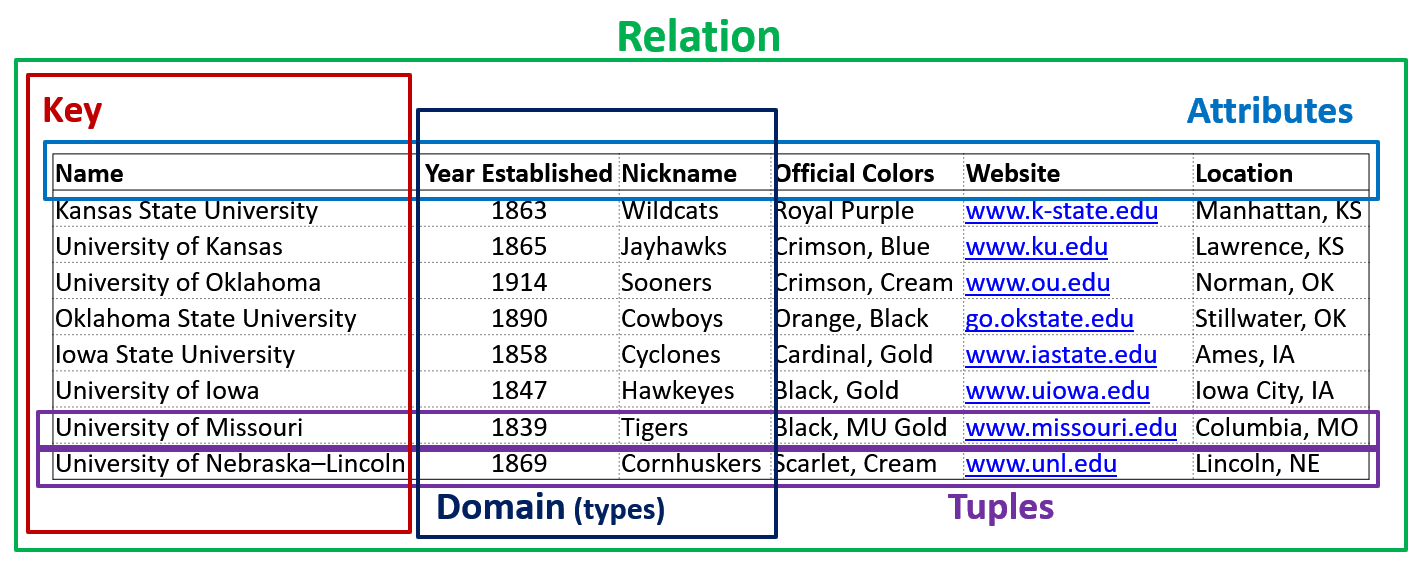

So as we noted before, tables are a physical form of a relation. Remember, relations are collections of attributes and tuples, basically what we saw with the university data, so the table is the physical manifestation of that. Now, a table inside of your database is going to contain one or more columns; it cannot contain zero columns. It must contain at least one column, but it does not have to have rows. Columns are a physical form of attributes, right? Remember, a relation is made up of attributes and tuples, and those attributes take the physical form of a column. Columns have a lot of different properties to them. Again, they are a set, not a list, so all columns inside of a table must be uniquely named. In this particular example, it would be impossible for us to have two columns called Nickname. We have to have a unique name for each column.

Besides a name, all columns must also have a data type and nullability, so whether that column can be left without data or whether that data is actually required. A lot of records have inconsistent data, and some data is optional, right? When you fill out a form on the internet, or even in general, not all fields are typically required. Depending on the situation, the non-required fields are allowed to be null. This diagram that I have here on my slide is an entity relationship diagram. This will look very familiar, since you’ve encountered UML before; this is just the database version of that typical style of diagram. On the left-hand side, we have the indication of whether or not a column is a key, then the individual columns listed here, each column’s data type on the right, and then of course the table name at the top. Also notice that we typically include the schema that the table belongs to. Remember, schemas act kind of like a namespace inside of a database, so all tables for a particular database are contained inside of a schema.

We’ll talk in a variety of different lectures about the individual data types here, and I’ll talk a little bit more about them in this particular lecture as well, but we won’t get into a lot of the details just yet. For now, let’s take a look at some examples of how to actually create our first table inside of our database.

So by this point, you should have watched the video and read the material on how to get Microsoft SQL Server installed, as well as Azure Data Studio, or how to use the remote connection in order to access an existing installation. We’ll be using this throughout the class to do our exercises and to follow the notes that I showcase in lecture or in these videos, and we’ll also be utilizing this for our projects and for our homework. But for now, let’s create our first database. If this is your first time watching this video and going through these examples, you will have to create your first database, and the way we actually do that is using the CREATE statement followed by the name of the database that we want to create. In this case, I’m going to create a database called CC520. We also need to make sure that we are connected to our local installation of our database, so in this case I’m just going to connect to my local installation of SQL Server Express that is running. If you already have other databases created in your local installation, you’ll see those pop up here.

But if you’re on your master connection here, you can change, down here towards the bottom, whichever database you’re actually connecting to. Just as a refresher, if you ever want to change that connection, you can change it up here where it says “attached to”. But for now, let’s go ahead and say CREATE DATABASE CC520. So CREATE DATABASE is the statement, followed by the name of our actual database. Remember, just like what we do with programming, each SQL statement, each query that we execute against our database, needs to end with a semicolon. Now, as you’ll soon find out, not every line of SQL code will actually have a semicolon following it. But let’s go ahead and create our database here.
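As a minimal sketch of the step just described, the statement could look like the following. The name CC520 is an assumed spelling of the database name heard in the video, and the DB_ID guard is an addition that is not shown in the video; it simply skips creation when the database already exists.

```sql
-- Create the course database only if it is not already there.
-- CC520 is an assumed spelling of the name used in the video.
IF DB_ID(N'CC520') IS NULL
    CREATE DATABASE CC520;
```

Without the guard, running CREATE DATABASE a second time produces the "already exists" error mentioned in the video.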

So, commands completed successfully. Now, keep in mind as well, if you’ve already created this database on your system, you will get an error that says this database CC520 already exists, so you don’t have to run this more than once. We also need to create our first schema as part of our database. Just like what we did with our database above, we say CREATE SCHEMA, and then we’re going to create the demo schema, and then we’re going to set AUTHORIZATION to be dbo. We’ll kind of hand-wave over the authorization part here. This deals with the permissions set for the schema, and we’ll talk about that later in the course. For now, we just want to create this schema called demo. Note that the square brackets are T-SQL’s, or SQL Server’s, syntax for denoting names of database objects. You can also use quotation marks here, and quotation marks will work just the same, but the Microsoft SQL Server way of doing it is the square brackets. Also note that before you actually run this CREATE SCHEMA, we need to make sure that we are using the correct database. Otherwise, your schema will be created in whatever database your connection is actually attached to. Since my connection is not directly connected to the database that I just created, I need to use the USE statement and then say GO.

So what GO is going to do is first execute this USE CC520 and then execute the CREATE SCHEMA. If I run this, both of those commands complete successfully: this command was executed first, and then this command was executed second. Now, what we can also do to fix this, at least as far as Azure Data Studio goes, is change our connection here. Instead of connecting to master, we click on master first to load up all of this, and then down here for our database, we can say CC520 instead and press Connect. Now our connection is set to CC520, and we don’t actually have to use the USE statement to make sure we’re on the correct database.
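The USE, GO, and CREATE SCHEMA sequence described above might be written roughly as follows. This is a sketch that assumes the database is spelled CC520 and already exists; the schema and owner names (demo, dbo) are the ones mentioned in the video.

```sql
-- Point the connection at the course database (assumed to be named CC520).
USE CC520;
GO

-- CREATE SCHEMA must be the first statement in its batch,
-- which is why the GO batch separator appears above it.
CREATE SCHEMA [demo] AUTHORIZATION [dbo];
GO
```

GO is not a T-SQL statement; it is a batch separator recognized by client tools, which send everything before it to the server as one batch.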

But now that we have our basic schema and our basic database, let’s go ahead and add our first table. In the notes, you’ll find that I have this DROP TABLE IF EXISTS command. It is only there in case you’ve already run through these notes: if the table exists already, you’ll get an error when you try to create it. So as far as this example goes, you’ll want to drop the table first, which deletes the table from the database, and then you can run that CREATE TABLE statement again. So let’s go ahead and do that. We’ll do this with a couple of GOs. So drop it if it exists, and then we’re going to say CREATE TABLE demo.School.

So when we create a table, the schema name needs to be included as part of the name. That way, if you have more than one schema, you know which schema that table actually belongs to. That is good practice. So it’s schema dot table, just like what we had in our diagram, and we can see that our table name is demo.School. Then we have each of our individual columns here. This is the column name. Now note, I do have to put the square brackets around Name here, because if I take those out, you notice that the color changes to blue. That means that it’s being recognized as a keyword in T-SQL. So we put either double quotes or square brackets around it, so it’s clear that I’m intending to use it as the actual name of the column and not as whatever that word represents in T-SQL. Right next to that, we have the data type for the column, and then we’ll also have any constraints associated with it. We’ll add those in just a few minutes. Each of the columns is separated by a comma, as you see here, all of those columns are wrapped inside of parentheses, and then of course we end our SQL statement with a semicolon.
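A statement along the lines described here could look like the sketch below. The column names follow the ones mentioned in the video, but their exact spelling and all of the data types and lengths are assumptions made for illustration; the actual course notes may differ.

```sql
-- Remove any earlier copy of the table so the script can be rerun.
DROP TABLE IF EXISTS demo.School;
GO

-- No nullability is given, so every column defaults to allowing NULL.
-- Data types and lengths are assumed for illustration.
CREATE TABLE demo.School
(
    [Name]          NVARCHAR(64),  -- brackets mark Name as an identifier
    YearEstablished INT,
    Nickname        NVARCHAR(32),
    Colors          NVARCHAR(64),
    City            NVARCHAR(64),
    StateCode       NCHAR(2)
);
```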

If we run this, we see that our command ran successfully. Now I can browse over here into my databases, and if I refresh, go to my CC520, and expand my tables, here is my demo.School. I can see all of the individual columns here, along with the data types associated with them. Notice as well that, by default, if it’s not specified, the columns are set to be NULL, meaning that I don’t actually have to store any data for those particular columns. They’re nullable. We’ll talk about the NOT NULL constraint in just a minute, but let’s go ahead and also add some basic data. Let me go ahead and add it to the cell here. So we have INSERT, which is the SQL statement to add data to a particular table. We’ll talk about the INSERT statement later in the semester, as we get closer to data modification. For now, just know that the INSERT statement takes a list of columns for a table, and then all of the values associated with them. Each of these sets of parentheses is a tuple, right? So this is one tuple, comma, another tuple. Remember, each tuple represents a row in our relation or table.

And each of these values is in order, corresponding to each of the columns that I have up above. Now, if we run this: eight rows affected. That means all eight of my rows of data got inserted into my table. Let’s do a little bit more before we move on. I’m going to hide this real quick, and then let’s go ahead and make sure our data got inserted. So I’m going to say SELECT * FROM demo.School. Notice that my IntelliSense thinks that demo.School does not exist. You can safely ignore that. I’m connected to the right database and that table is actually created, but some caching occurs here, so Azure Data Studio doesn’t always refresh right away. If you just created a table during this session, Azure Data Studio may not recognize that the table exists yet.

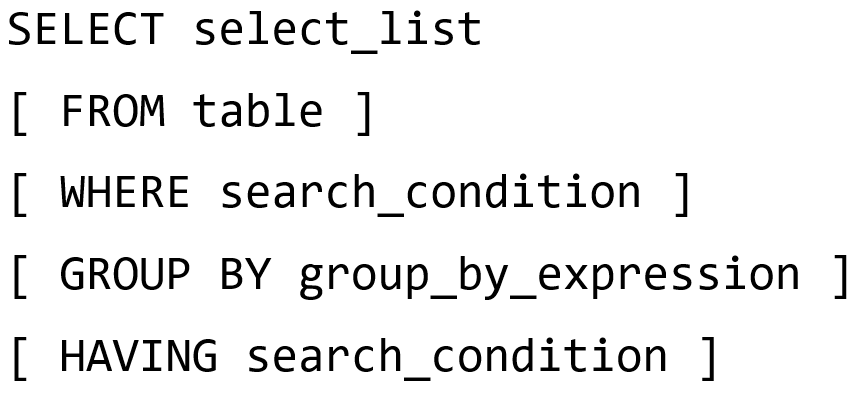

But once you run that SELECT statement, it works. So SELECT *: remember, SELECT is the projection, so this picks which columns we actually want, and the star is a wildcard, meaning I want all of the columns. Then FROM picks which tables I want to pull data from, in this case demo.School. Again, we’ll be covering a lot of these SQL statements in much greater detail in future talks. But for now, we can see that all of our data exists in our table, just as we inserted it.
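To make the INSERT and SELECT steps concrete, here is a small self-contained sketch. The column types are assumed, and the two rows are illustrative stand-ins rather than the eight rows used in the video.

```sql
-- Recreate the table so this script stands on its own (types are assumed).
DROP TABLE IF EXISTS demo.School;
GO
CREATE TABLE demo.School
(
    [Name]          NVARCHAR(64),
    YearEstablished INT,
    Nickname        NVARCHAR(32),
    Colors          NVARCHAR(64),
    City            NVARCHAR(64),
    StateCode       NCHAR(2)
);
GO

-- Each parenthesized group is one tuple (row); values follow the column list order.
INSERT INTO demo.School ([Name], YearEstablished, Nickname, Colors, City, StateCode)
VALUES
    (N'Kansas State University', 1863, N'Wildcats', N'Purple', N'Manhattan', N'KS'),
    (N'University of Kansas', 1865, N'Jayhawks', N'Crimson and Blue', N'Lawrence', N'KS');

-- SELECT picks the columns (* means all of them); FROM names the table.
SELECT *
FROM demo.School;
```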

So let’s take a look at another example. Let me move my screen just a little bit higher here. Here’s another big example, and if you want to type this out, now would be a really good time to pause the video and get this SQL code into your Azure Data Studio. You can copy this from the SQL notes as well, but I encourage you to start typing these out to build that muscle memory. Just like when you first started to learn how to code, typing out SQL statements is going to be the best practice for getting familiar with the syntax.

But let’s take a look at what happens here. I added a new column called Website, just to explore what would happen in my table. This was not included in my original table that I have up here, but it is included in this CREATE TABLE statement, and my INSERT has no Website column. If I run this, and then pull my SELECT statement from up there and run SELECT *, you’ll notice that my Website column contains all null values. That’s because I did not say whether this Website column could or could not be null. So by default, it is nullable, meaning that I don’t actually have to insert data for that particular column when I run an INSERT statement. I can just pick and choose which columns I want.
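The behavior described above can be reproduced with a trimmed-down sketch like this one. Only a few columns are kept to keep it short, the types are assumed (apart from Website, which the video later describes as a variable-length string of up to 128 characters), and the row is illustrative.

```sql
DROP TABLE IF EXISTS demo.School;
GO

-- Website has no nullability specified, so it defaults to allowing NULL.
CREATE TABLE demo.School
(
    [Name]    NVARCHAR(64),
    City      NVARCHAR(64),
    StateCode NCHAR(2),
    Website   NVARCHAR(128)
);
GO

-- Website is left out of the column list, so it is stored as NULL.
INSERT INTO demo.School ([Name], City, StateCode)
VALUES (N'Kansas State University', N'Manhattan', N'KS');

SELECT [Name], Website
FROM demo.School;
```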

But the vast majority of the time, most columns in a database are required, meaning they cannot be null. That’s really important, because if we have a lot of attributes that are nullable in our table, we’re really not being very efficient with our database design, and likewise not being very efficient with our data storage. So it’s really important that we be very careful about allowing nullable columns. Let’s take a look at what our basic constraints can start to do about that.

Let’s take a look at some constraints that we can use to prevent null values from being inserted into our database. Constraints are essentially declarative rules. Just like we have rules in logic, we can have rules in our database as well. Our database management system, or DBMS, will check these constraints every time new data is added, data is modified, and in some cases when data is deleted, and any operation that violates a constraint will fail and return an error. Typically, this would be an exception that gets thrown. So SQL Server, our database management system, will manage these constraints for us. When we work with our data, adding new data, deleting, or modifying data, those constraints are checked to verify that our data maintains its integrity. And that is one of the biggest reasons why we are using a database over something like Microsoft Excel.

Let’s take a look at our first constraint here. As we talked about just a little bit ago, we want to make sure that we don’t have much null data in our database, right? Nullable data is okay, it’s perfectly acceptable, but if we have a lot of it, our database design and storage are not that efficient. The nullability constraint allows us to indicate which values are mandatory and which ones are not. A value is really anything that fits the domain of that column and is not null.

So in terms of an nvarchar, a variable-length string, that is 64 characters in length, anything that is text or a string will fit into that domain unless it’s null, meaning nonexistent. It’s a similar thing for integers or nchars, which are fixed-length strings. Again, we’ll talk about these different domain types, or data types, in another class period.

Now, take a look at our ER diagram here. We have changed the structure just a little bit, but pay really close attention to the fact that Name, YearEstablished, Nickname, Colors, City, and StateCode are now italic. In an ER diagram, if your column name is italicized, it means that it is nullable, meaning that it is not required. If the text is not italicized, that column is required. So now, in this diagram, we’re making the Website field required.

So let’s take a look at what that would look like in SQL. If we recreate our table here, let’s go ahead and copy this in. I know this is a lot of SQL code, but these videos would be extremely long if I sat here and typed out all of the SQL by hand for every single example. So please bear with me. If you want to take some time to read through these SQL statements very carefully, please do pause the video for a moment so you can read what’s going on here.

So we’re going to remove our old table, and now we’re going to create the demo.School table again and insert the same values. Then we’re going to select everything to see what is in it. Keep in mind, the change that I made is in the CREATE statement: I added a constraint. We have our column name here, followed by our domain or column type, so this is a string that is variable length up to 128 characters. Now I am specifying that this column is NOT NULL. By default, if you do not include the nullability constraint, a column has NULL in its place, which allows that column to be optional. But if we say NOT NULL, that column all of a sudden becomes required. So let’s go ahead and try to run this code again.

Ah, so: cannot insert the value NULL into column Website, table CC520.demo.School; column does not allow nulls. INSERT fails. The statement has been terminated. So when we do our SELECT, nothing comes back, right? Nothing is actually there inside of our table, because no data got inserted to begin with. We have essentially restricted this. Our database management system, SQL Server, prevents us from inserting a record into the School table without the school’s website associated with it.
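A trimmed-down sketch of the failing case might look like this. The table keeps only a few columns, the types other than Website are assumed, and the row is illustrative.

```sql
DROP TABLE IF EXISTS demo.School;
GO

-- Website is now required; the other columns still default to nullable.
CREATE TABLE demo.School
(
    [Name]    NVARCHAR(64),
    City      NVARCHAR(64),
    StateCode NCHAR(2),
    Website   NVARCHAR(128) NOT NULL
);
GO

-- Expected to fail: Website is omitted, so SQL Server would have to store NULL
-- in a NOT NULL column. The statement is terminated and no row is added.
INSERT INTO demo.School ([Name], City, StateCode)
VALUES (N'Kansas State University', N'Manhattan', N'KS');

-- Returns no rows, because the INSERT above was rejected.
SELECT *
FROM demo.School;
```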

Now, let’s go ahead and also make the rest of our columns NOT NULL. So here we go. Notice as well that I went ahead and took out the Website column here, so this should now work, right? But note, if I ever happen to take out, let’s say, one value here and try to run this again, the INSERT fails, because the number of values in each row has to match the columns listed up here. That is unlike what we had before, where we had a nullable column that we simply left out. So we have name, year, nickname, colors, city, and state, but Website is not included as a column in my INSERT because I’m not actually inserting that value. Just keep in mind that this is also a restriction that we have for insertion.

But if we actually try to say NULL here, ah, the statement has been terminated because of the error: cannot insert NULL into the column StateCode, because that column does not allow nulls. So we want to take this back. If I run this now, everything is all good again.

All right. These sorts of constraints may seem very simplistic at first, but they matter, especially when you have a database that is connected to some sort of form or anything like that. What’s really important, as far as the database goes, is to help ensure that your data is valid and that your data integrity actually holds up really well. A lot of times applications don’t have consistency with data, so the database can help us enforce that consistency. And again, that is one of the big benefits that we are trying to leverage by moving away from a file like an Excel sheet or a txt file to something that is managed, like a database.

Key, Check, and Default Constraints

YouTube Video

Video Transcription

Welcome back, everyone. This is part two of our introduction to tables and constraints. Now let’s start talking about key constraints. Key constraints are really important as part of our database design, because they enforce relationships and uniqueness of data throughout our individual tables and across the relationships between those tables. Our first one here is the primary key constraint. Primary key constraints make values for a column mandatory, or required, because a primary key uniquely identifies a row in a table. The value that identifies a particular record inside of a table must be unique. We can’t have two identifiers, or two values in a primary key, that are the same; otherwise, we wouldn’t be able to uniquely identify a record or row in a table. In our previous discussion, that was one of the major requirements for a table in a relational database: we must be able to uniquely identify a single tuple or row inside of a table, so it is really important for that primary key to be unique. Also, notice here that I’ve changed a few things in my ER diagram. The Website column is gone, and none of my columns are italicized anymore, so everything is going to be required. And then we have our first key constraint added to our ER diagram, PK for primary key. Now, more than one column can be part of a primary key.

So we can have a composite primary key. But for now, we’re going to just do a primary key of SchoolID, which is of type int. Let’s take a look at a few examples. Here’s another example of us creating the demo.School table. This is exactly what I have up here in my slide as well. The new addition is that we have a new column called SchoolID of type int that cannot be null, and it is a primary key. So this is our primary key constraint and our NOT NULL constraint all together in one. Also, a quick note on the naming convention that we’re using: you’ll notice that all my column names are capitalized. Personally, I do capital I, capital D, but you may also see a lowercase d. We have our typical INSERT statement down here. Notice that we do have SchoolID added as part of this now as well, and SchoolID values are listed throughout. But if we run this, our SQL query fails: invalid column name SchoolID. If you are continuing on the same database that we had from our previous video, you may have to add a GO statement in between these two, or just execute them separately, because we’re adding a new column here and our INSERT statement would otherwise fail, since these statements are all run on the database management system in one big batch. Again, we will talk about how SQL Server runs queries in batches in a future video. But for now, you can run the DROP and CREATE in one cell in Azure Data Studio, or add the GO statement in between these two.

But now if we run this, we can see that there is a violation of primary key constraint, PK School: cannot insert duplicate key in object demo.School; the duplicate key value is 8. Note that all of our key constraints, or all of our constraints inside of our SQL Server instance for our database objects, will be automatically named by SQL Server. We will talk in a future video about how we could name those constraints to be a little bit more user friendly, but for now, we will use the auto-generated names. The reason why we have an issue here is that on line 25 and line 26, both of these tuples have the same SchoolID. So University of Nebraska and University of Nebraska have the same name and the same ID, but one is UNL, so University of Nebraska, Lincoln, and one is University of Nebraska, Omaha. What we can do to fix that issue is just say nine here. If we run this again, ah, there we go: nine rows affected. And then if we go down a little bit, add a SELECT * FROM demo.School, and run that, we get our nine records out of it. Alright, so that is the basic implementation of how we would work with a primary key. Like I said, we’ll get into more complicated keys in the future, but this gets your feet wet with some of the basics: how primary keys actually work, and how they are enforced when we insert data into our tables.
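The duplicate-key situation described above can be sketched as follows. The table is trimmed to a few columns with assumed types, and the two rows are illustrative stand-ins for the rows on the instructor’s screen.

```sql
DROP TABLE IF EXISTS demo.School;
GO

-- SchoolID is both required and the primary key.
CREATE TABLE demo.School
(
    SchoolID  INT          NOT NULL PRIMARY KEY,
    [Name]    NVARCHAR(64) NOT NULL,
    City      NVARCHAR(64) NOT NULL,
    StateCode NCHAR(2)     NOT NULL
);
GO

-- Expected to fail: both rows use SchoolID 8, which violates the primary key.
-- The whole statement is rejected, so neither row is stored.
INSERT INTO demo.School (SchoolID, [Name], City, StateCode)
VALUES
    (8, N'University of Nebraska', N'Lincoln', N'NE'),
    (8, N'University of Nebraska', N'Omaha',   N'NE');

-- Works: giving the second row its own ID (9) removes the conflict.
INSERT INTO demo.School (SchoolID, [Name], City, StateCode)
VALUES
    (8, N'University of Nebraska', N'Lincoln', N'NE'),
    (9, N'University of Nebraska', N'Omaha',   N'NE');
```

The GO after CREATE TABLE keeps the INSERT in a separate batch, which avoids the "invalid column name" error when an older version of the table without SchoolID exists at the time the script is compiled.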

Now, let’s look at our next constraint. Unique constraints are very similar to how primary key constraints work. Unique constraints enforce uniqueness within that column or within those columns, so it can be a composite unique key. But unlike a primary key, you can have more than one unique constraint. A unique constraint will also allow NULLs, where a primary key cannot be null. Those are some of the main differences between a unique key and a primary key. We can have a composite primary key, meaning that more than one column together can be the primary key, but there is only one primary key, while we can have several unique keys. For example, we could have Name be a unique key, and then we could also have, let’s say, Colors or Nickname be a unique key, and they would be two entirely different keys, separate from each other. Again, when we start talking more about database design and consistency of data, we’ll talk a little bit more about the more complicated key constraints that we can have as part of our database.

But for now, let’s just take a look at an example. If you notice, in the ER diagram on my slide, we have UK in the key area of the diagram next to Name, so we’re trying to enforce unique names in our university table. Let’s go ahead and do a new CREATE statement. Again, if you get an error, you might have to add a GO statement in between these. We’re going to run the CREATE TABLE for School again, with the primary key on SchoolID, but now we’ve added the unique constraint to our Name. If we run this, ah, notice that my unique key constraint was violated: cannot insert duplicate key in object demo.School, which is our table; the duplicate key is University of Nebraska. So even though our primary key is not violated, the unique constraint on our Name column is, because we still have the same name twice: University of Nebraska and University of Nebraska.

What we could do is add something a little bit more unique as part of the name: University of Nebraska Lincoln and University of Nebraska Omaha. If we run this now, all nine rows are affected. And if we run the SELECT, we can see that all of my records are added, and I correctly get University of Nebraska Lincoln and Omaha down here at the bottom. Keep in mind, though, that while unique keys operate similarly to primary keys, they don’t necessarily do the exact same thing, as noted up there in the corner of our slide. Once we start talking about database design, and specifically normalizing our tables and the information that we store in our database, we’ll get more into the finer details of why there is a big difference between these two types of key constraints.
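A sketch of the unique-constraint behavior, again with a trimmed table, assumed types, and illustrative rows:

```sql
DROP TABLE IF EXISTS demo.School;
GO

-- SchoolID is the primary key; Name carries a separate UNIQUE constraint.
CREATE TABLE demo.School
(
    SchoolID INT          NOT NULL PRIMARY KEY,
    [Name]   NVARCHAR(64) NOT NULL UNIQUE
);
GO

-- Expected to fail: the IDs differ, but the names are identical,
-- so the UNIQUE constraint on Name is violated.
INSERT INTO demo.School (SchoolID, [Name])
VALUES
    (8, N'University of Nebraska'),
    (9, N'University of Nebraska');

-- Works: the names are now distinct.
INSERT INTO demo.School (SchoolID, [Name])
VALUES
    (8, N'University of Nebraska Lincoln'),
    (9, N'University of Nebraska Omaha');
```

One detail worth knowing for SQL Server specifically: a UNIQUE constraint on a nullable column treats NULLs as equal to each other, so at most one row may hold NULL in that column.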

Alright, so then let’s take a look at our next constraint, which is the check constraint. Check constraints are a really cool feature of relational database languages. They allow us to enforce certain kinds of information for particular columns. You may have noticed earlier, when I’ve been doing my INSERT statements, that the last row had zero for the year established. Check constraints can enforce a particular range of data, or they can enforce a discrete set. So if we had a column, we could enforce yes, no, or maybe, or yes/no, true/false. We can enforce a lot of different things here: a specific range of data, discrete values, or a comparison. For example, we could say that the end date has to be greater than or equal to the start date.

So essentially, any predicate that compares columns of the table can be a valid check constraint. We can use any of the columns of the table, and we can do pretty much any Boolean operation on those columns. That’s what we’re referring to as a predicate here. So we can do less than, greater than, not equal to, AND, and so on. This is a really powerful tool to verify that the data being inserted into or modified in a column meets certain conditions. But for now, let’s take a look at how this looks in our SQL code. You’ll notice that there’s no ER diagram here. Some ER diagrams, and I’ll draw some later in the class, will include check constraints, but it’s not very common. You may see them in some database design documents. Let’s see what we can do with check constraints. This is a similar kind of statement to what we just had before: we’re doing a CREATE TABLE demo.School, and we still have the unique constraint and the primary key constraint, but now what I’m adding is a check constraint.
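The three kinds of predicates mentioned above (a range, a discrete set, and a comparison between columns) could each be written roughly as shown below. The demo.Event table and all of its columns are hypothetical, invented only to illustrate the syntax; they are not part of the course database.

```sql
DROP TABLE IF EXISTS demo.Event;
GO

-- Hypothetical table used only to show three styles of CHECK constraint.
CREATE TABLE demo.Event
(
    EventID   INT         NOT NULL PRIMARY KEY,
    -- Range of values
    Capacity  INT         NOT NULL CHECK (Capacity BETWEEN 1 AND 500),
    -- Discrete set of allowed values
    Response  NVARCHAR(5) NOT NULL CHECK (Response IN (N'Yes', N'No', N'Maybe')),
    StartDate DATE        NOT NULL,
    EndDate   DATE        NOT NULL,
    -- Comparison between two columns; written at table level
    -- because it references more than one column
    CHECK (EndDate >= StartDate)
);
GO

-- Satisfies all three checks.
INSERT INTO demo.Event (EventID, Capacity, Response, StartDate, EndDate)
VALUES (1, 50, N'Yes', '2026-01-20', '2026-01-21');

-- Expected to fail: EndDate is earlier than StartDate.
INSERT INTO demo.Event (EventID, Capacity, Response, StartDate, EndDate)
VALUES (2, 50, N'No', '2026-01-20', '2026-01-19');
```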

So this is for YearEstablished, because we have a zero, a pesky zero, down here in our data, and we don’t want to get an invalid year or some really weird years. Even with this, I’m maxing out at year 9999, but just as an example, we can show this here. What we’re doing is checking that the YearEstablished column, so the data that is contained inside of this column, is between 1000 and 9999. Of course, we could put more realistic values here. But let’s go ahead and run this as an example. You can see that an error is generated that says we have violated our check constraint for this particular column. So what we can do is improve our data accuracy here. UNO was founded in 1908. If we change that value and run it, our table gets created, and all of our data gets inserted just fine. And there we have it: University of Nebraska, Omaha, with the correct date. Check constraints are a very, very useful tool to enforce data integrity and data consistency. Now, in practice, not all data can be cleaned this way through the database. There is some data cleaning that must be done at the application level. So the tool that’s being used to create data and insert data into the database, that tool or application or program, will need to do some of that data cleaning before the data gets to the database. But the database management system can do some of that for you, which is a really cool thing.
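The year check described above might look like the following sketch. The table is trimmed to three columns with assumed types, and the 1000 to 9999 range follows the bounds mentioned in the video.

```sql
DROP TABLE IF EXISTS demo.School;
GO

CREATE TABLE demo.School
(
    SchoolID        INT          NOT NULL PRIMARY KEY,
    [Name]          NVARCHAR(64) NOT NULL UNIQUE,
    -- Reject obviously invalid years such as 0.
    YearEstablished INT          NOT NULL
        CHECK (YearEstablished BETWEEN 1000 AND 9999)
);
GO

-- Expected to fail: 0 is outside the allowed range.
INSERT INTO demo.School (SchoolID, [Name], YearEstablished)
VALUES (9, N'University of Nebraska Omaha', 0);

-- Works: 1908 satisfies the check.
INSERT INTO demo.School (SchoolID, [Name], YearEstablished)
VALUES (9, N'University of Nebraska Omaha', 1908);
```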

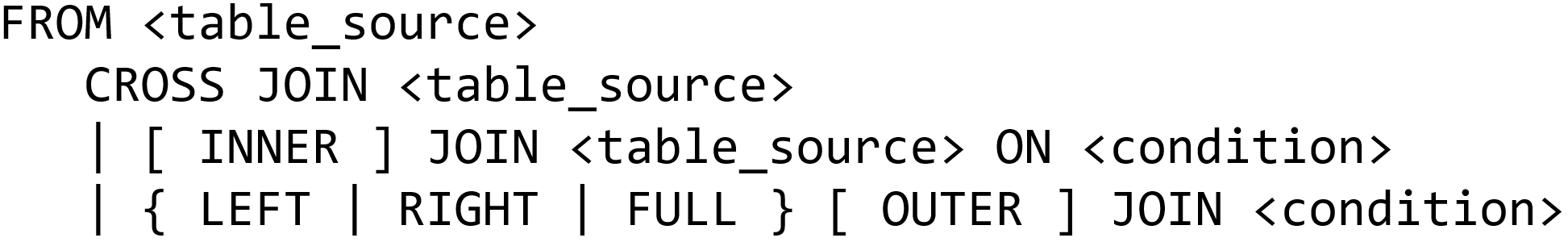

Let’s take a look at another key constraint, which is the foreign key constraint. Again, we will be talking about foreign keys throughout this class; this is just a gentle introduction. Foreign key constraints enforce what we call referential integrity, and there are a few rules that go along with them. Foreign keys are just as they sound: foreign. Let’s say table B has a foreign key. That foreign key is going to reference a column in another table, table A. The columns that are referenced must be either a primary key or a unique key. So it can’t be just some random column; it has to be unique inside of that particular table. That means unique keys, of course, and primary keys, which are forced to be unique. The referencing column, the actual foreign key itself, must match the type of the referenced column. So the column in table B must be the same type as the column in table A. The constraint also works in both directions, so it’s a bidirectional enforcement. The referencing table is checked when a foreign key value is inserted or updated, and the referenced table is checked when a value is deleted. So if a new record is inserted, the referencing table is checked, and vice versa for when a record is deleted. This will make a lot more sense when we start looking into relationships, and we’ll talk more about foreign keys then.

So this may be a little bit much initially. But again, we’ll be explaining this in much greater detail in the near future. This is what a foreign key constraint might look like inside of a database, and this is the example that I’m going to show here. We have Demo.School, but we now have a new table called Demo.Conference. Notice that we now have a foreign key in our School table: Conference, which is marked as a foreign key, FK. Then we have the Conference table, and a relationship drawn between the two. This is crow’s foot notation for the relationship between these two tables. A school can be in one and only one conference, and a conference can have zero or more schools. Again, we’ll talk more about crow’s foot notation in a future lecture. Just note that this is how a foreign key is shown in the ER diagram. But let’s go ahead and take a look at an example, because things make a little more sense when you actually see them in practice. I’ll leave my diagram up there in the top corner for you, and let’s take a look at some new code.

So this is a lot of code. But all I’m doing here is creating my Demo.Conference table and my Demo.School table, and I have a bunch of data being inserted. Notice that we now have a foreign key constraint in our School table. The Conference column is a foreign key that references the Nickname column of Demo.Conference, so it references that table up there. If we run this, we get an error: invalid column name Conference. Again, that’s because we have batch processing issues. The Conference table must be created first. So if you’re using Azure Data Studio with a Jupyter Notebook, you’ll need to make sure that the Conference table is run first, and I’ll try to make sure that this is set up properly in your SQL notes as well. But let’s go ahead and run this again. Ah, there we go. Five rows affected, then eight rows affected. If we select from Demo.School, all of that is there, and we can see the conference name up here now. And if we change this to Demo.Conference, we can see that all of our conferences were inserted as well.
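A hedged sketch of the two-table setup described above, with each CREATE TABLE in its own batch so the referenced table exists before the referencing one. Column names, sizes, constraint names, and the sample rows are assumptions; the lecture inserts more rows than are shown here.

```sql
-- Sketch only: names, sizes, and sample rows are assumptions.
IF SCHEMA_ID(N'Demo') IS NULL EXEC (N'CREATE SCHEMA Demo');
GO

-- Drop the referencing table first, then the table it references.
DROP TABLE IF EXISTS Demo.School;
DROP TABLE IF EXISTS Demo.Conference;
GO

-- The referenced table must exist before the foreign key is declared.
CREATE TABLE Demo.Conference
(
    Nickname NVARCHAR(32)  NOT NULL,
    Name     NVARCHAR(128) NOT NULL,
    CONSTRAINT PK_Conference PRIMARY KEY (Nickname),
    CONSTRAINT UQ_Conference_Name UNIQUE (Name)
);
GO

CREATE TABLE Demo.School
(
    Name            NVARCHAR(128) NOT NULL,
    YearEstablished INT           NOT NULL,
    Conference      NVARCHAR(32)  NOT NULL,  -- same type as Conference.Nickname
    CONSTRAINT PK_School PRIMARY KEY (Name),
    CONSTRAINT FK_School_Conference
        FOREIGN KEY (Conference) REFERENCES Demo.Conference (Nickname)
);
GO

INSERT INTO Demo.Conference (Nickname, Name)
VALUES (N'Big 12', N'Big 12 Conference'),
       (N'Big Ten', N'Big Ten Conference');

INSERT INTO Demo.School (Name, YearEstablished, Conference)
VALUES (N'Kansas State University', 1863, N'Big 12'),
       (N'University of Nebraska-Lincoln', 1869, N'Big Ten');
GO

SELECT * FROM Demo.School;
SELECT * FROM Demo.Conference;
```

In a notebook, the same ordering applies across cells: the cell that creates the Conference table needs to run before the cell that creates the School table.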

So cool, everything looks good so far. Let’s go ahead and look at how we can insert some more values, inserting into School and inserting into Conference. Here I am trying to insert University of Nebraska Omaha, which isn’t here yet; I took that out. We have University of Missouri and University of Nebraska-Lincoln, and both of those are in here. UNO is going to be in the Summit conference, and I’m also inserting Summit into the Conference table. But if I try to run this, haha, alright: the INSERT statement conflicted with the FOREIGN KEY constraint for the School table’s Conference column. The conflict occurred in database CC520, table Demo.Conference, column Nickname. So, foreign key constraint, right. The issue here is that Summit does not actually exist yet. We have UNO here with 1908, Mavericks, black, crimson, Omaha, and everything here is the same as before. But this line here had not actually been run yet. And it’s Summit rather than Summit League, because if we scroll up here to the Conference table, the primary key is Nickname.

And so, since Summit did not exist when I ran this line of code, this one failed because of our foreign key constraint. We were still able to insert into our Conference table, because that statement executed; that’s the one row affected down here. That one worked, but our School insert did not, because Summit did not exist yet. Now that it does exist, we can execute this again and our value gets inserted just fine. Now when we select from Demo.School, University of Nebraska Omaha is there. We can also pull from our Conference table and see that Summit is there. Those are the basics of how foreign key constraints work. Let’s go ahead and look at our next constraint.
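The ordering problem in this demo can be reproduced with a small sketch like the following. It recreates minimal versions of both tables so it can run on its own; the table layouts and constraint names are assumptions. The final DELETE illustrates the other direction of the check mentioned earlier, where the referenced table is protected while rows still point at it.

```sql
-- Sketch only: minimal, assumed table layouts.
IF SCHEMA_ID(N'Demo') IS NULL EXEC (N'CREATE SCHEMA Demo');
GO
DROP TABLE IF EXISTS Demo.School;
DROP TABLE IF EXISTS Demo.Conference;
GO
CREATE TABLE Demo.Conference
(
    Nickname NVARCHAR(32)  NOT NULL CONSTRAINT PK_Conference PRIMARY KEY,
    Name     NVARCHAR(128) NOT NULL
);
GO
CREATE TABLE Demo.School
(
    Name            NVARCHAR(128) NOT NULL CONSTRAINT PK_School PRIMARY KEY,
    YearEstablished INT           NOT NULL,
    Conference      NVARCHAR(32)  NOT NULL
        CONSTRAINT FK_School_Conference REFERENCES Demo.Conference (Nickname)
);
GO

-- 1. Fails: no conference with the nickname 'Summit' exists yet.
INSERT INTO Demo.School (Name, YearEstablished, Conference)
VALUES (N'University of Nebraska Omaha', 1908, N'Summit');
GO

-- 2. Succeeds: the referenced row is created.
INSERT INTO Demo.Conference (Nickname, Name)
VALUES (N'Summit', N'Summit League');
GO

-- 3. Succeeds now that 'Summit' exists in Demo.Conference.
INSERT INTO Demo.School (Name, YearEstablished, Conference)
VALUES (N'University of Nebraska Omaha', 1908, N'Summit');
GO

-- 4. Fails: a school still references 'Summit', so the referenced
--    row cannot be deleted (the default action is NO ACTION).
DELETE FROM Demo.Conference WHERE Nickname = N'Summit';
GO
```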

Our next one is the default constraint. Default constraints are extremely useful for columns that are nullable. If a column is nullable, but you want data to actually be there, you can use a default value, which is really handy. Just as it sounds, a default constraint assigns a default value for a column. This is used on inserts when a value is not provided for that particular column. So if a column is nullable and it is not provided as part of an insert, then the value from the default constraint is used. You can still override that default value. Just because you have a default constraint does not mean that you can’t insert a value into that column; it just means that if you don’t insert a value, the default value is used. We can also utilize what we call an identity property, which provides similar behavior, although for an identity column you’re not supposed to override those values. So that’s a little bit different, and identity columns are a little bit special. Again, we will be talking more about what the identity column does in a future lecture, but I’ll showcase the basics here for this demo. For now, let’s take a look at what this looks like in code. So let’s go ahead and recreate our table here.

It’s a similar kind of thing. This is the same School table that we had before, and we still have our foreign key to Demo.Conference. That still exists; I haven’t taken it out. But I’ve added two new things here. First, I’ve added an identity column. An identity column auto-increments, so every record that gets inserted gets a value one more than the previous record. In this case, I’m telling it to start at one and then count up by one each time. The other new thing that I added is the default constraint down here. So this is the identity, and this is the default constraint. In this case, I’m using SYSDATETIMEOFFSET. What this does is get the current time on the SQL Server instance when the record is inserted, and that’s the value that will be defaulted there. Created-on or updated-on columns like this are very useful for providing extra information for debugging and for tracking when records are inserted into your database. Notice that my insert statement down here doesn’t have school ID or created on. Those columns are left out, because school ID is taken care of for us by the identity column.

That will count up by one. And for my created on column, I don’t need to add that manually. It takes the timestamp of when the record is inserted and adds it to our column by default. If we scroll down here, we can see our results. Notice that my school ID is auto-incrementing. K-State was the first record, UNL was the last one that I added, and the value incremented by one each time a row was inserted. Then we also have my created on column, which recorded a timestamp when these records were inserted. It looks like they were all inserted at the same time. That’s just because all of these records were inserted nearly instantaneously, since there aren’t very many. But that does change as we add more information. For example, let’s add a new record. Notice that UNO is missing again. If I add UNO, I can provide a timestamp if I want to. So let’s put in a date and run that. Notice that here is University of Nebraska Omaha with the date I provided, and my identity column value is there. We could also leave that off if we wanted to, just like we did up here. Now, what you’re not supposed to do is put a value in for an identity column. By default, you cannot explicitly insert a value for an identity column. But you can override that behavior.
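As a rough sketch of the table just described, the following uses an identity column and a default constraint together. The foreign key is left out to keep the example short, and the column names, constraint names, and the supplied timestamp are assumptions for illustration.

```sql
-- Sketch only: names and the sample timestamp are assumptions.
IF SCHEMA_ID(N'Demo') IS NULL EXEC (N'CREATE SCHEMA Demo');
GO
DROP TABLE IF EXISTS Demo.School;
GO

CREATE TABLE Demo.School
(
    SchoolId  INT IDENTITY(1, 1) NOT NULL,   -- start at 1, count up by 1
    Name      NVARCHAR(128)      NOT NULL,
    CreatedOn DATETIMEOFFSET     NOT NULL
        CONSTRAINT DF_School_CreatedOn DEFAULT (SYSDATETIMEOFFSET()),
    CONSTRAINT PK_School PRIMARY KEY (SchoolId),
    CONSTRAINT UQ_School_Name UNIQUE (Name)
);
GO

-- SchoolId and CreatedOn are omitted, so both are filled in automatically.
INSERT INTO Demo.School (Name)
VALUES (N'Kansas State University');

INSERT INTO Demo.School (Name)
VALUES (N'University of Nebraska-Lincoln');

-- The default can be overridden by supplying a value explicitly.
INSERT INTO Demo.School (Name, CreatedOn)
VALUES (N'University of Nebraska Omaha', '2021-01-01T00:00:00-06:00');
GO

SELECT SchoolId, Name, CreatedOn FROM Demo.School ORDER BY SchoolId;
GO

-- Fails by default: explicit values are not allowed for an identity column.
INSERT INTO Demo.School (SchoolId, Name)
VALUES (10, N'University of Missouri');
GO
```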

In SQL Server, there is a setting, IDENTITY_INSERT, that lets you turn this protective feature off for a table if you want to. I do not recommend this. That’s the whole point of an identity column. If you find yourself needing to override the value in an identity column, that probably means you’re using an identity column in the wrong spot. Rather than using an identity column, what we can do is use a sequence object. Again, we can cover this in more detail in the future. A sequence object is very similar to an identity column in the sense that it auto-increments and is used as a default value, but we have more control over that value. This is my sequence object up here: CREATE SEQUENCE Demo.SchoolId, then AS INT, which is the data type. Then this is the value that it starts at, so the min value is zero, it increments by two every time, and then we have NO CYCLE.

So our sequence doesn’t wrap around. You can have a pattern that way too, if you want your sequence to wrap, which is something that an identity column cannot do. To use this as part of our table, we use a default constraint, and inside of that we say NEXT VALUE FOR and then our sequence object. That’s the syntax here. Now if we run this, notice down here that school ID starts at zero and goes up by two every time, because that is where our sequence object up here starts. But if I wanted to make it just like what I had up above with my identity column, I can say one and one here. Aha, there we go. Now it’s 1, 2, 3, 4, 5, 6, 7, 8. So these are just two different ways to do the same, or a very similar, idea. Again, this can also be done programmatically in an application that’s using this database. But that is going to conclude our introduction to database constraints for our tables. Next time we’ll take a deeper dive into some SQL code and look at how all of this works in action.
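A sketch of the sequence-based version might look like the following. The start value of zero and the increment of two follow the results described in the demo; the sequence, table, column, and constraint names are assumptions, as is writing START WITH explicitly.

```sql
-- Sketch only: names are assumptions; values follow the demo's described output.
IF SCHEMA_ID(N'Demo') IS NULL EXEC (N'CREATE SCHEMA Demo');
GO

-- Drop the table first, because its default depends on the sequence.
DROP TABLE IF EXISTS Demo.School;
DROP SEQUENCE IF EXISTS Demo.SchoolId;
GO

CREATE SEQUENCE Demo.SchoolId
    AS INT
    START WITH 0
    INCREMENT BY 2
    MINVALUE 0
    NO CYCLE;      -- raise an error instead of wrapping when values run out
GO

CREATE TABLE Demo.School
(
    SchoolId INT NOT NULL
        CONSTRAINT DF_School_SchoolId DEFAULT (NEXT VALUE FOR Demo.SchoolId),
    Name     NVARCHAR(128) NOT NULL,
    CONSTRAINT PK_School PRIMARY KEY (SchoolId)
);
GO

-- SchoolId is omitted, so each insert takes the next sequence value: 0, 2, 4.
INSERT INTO Demo.School (Name) VALUES (N'Kansas State University');
INSERT INTO Demo.School (Name) VALUES (N'University of Missouri');
INSERT INTO Demo.School (Name) VALUES (N'University of Nebraska-Lincoln');
GO

SELECT SchoolId, Name FROM Demo.School ORDER BY SchoolId;
```

Changing the sequence to START WITH 1, INCREMENT BY 1, and MINVALUE 1 would give the same 1, 2, 3 numbering as the identity column. Because the value comes from an ordinary default constraint, an insert can still supply its own SchoolId when that is genuinely needed.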

Subsections of Joins

Introduction to Joins

YouTube Video

Video Transcription