Subsections of C# Syntax

Reference Types and Value Types

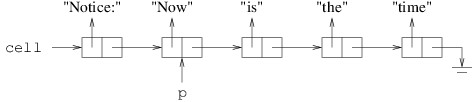

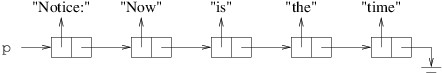

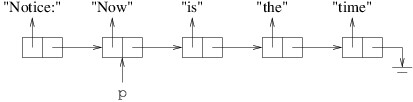

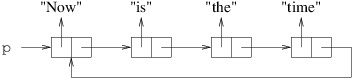

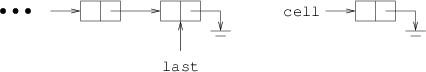

Data types in C# come in two distinct flavors: value types and reference types. In order to understand the distinction, it helps to consider how space is allocated in C#. Whenever a method is called, the space needed to execute that method is allocated from a data structure known as the call stack. The space for a method includes its local variables, including its parameters (except for out or ref parameters). The organization of the call stack is shown in the following figure:

When the currently-running method makes a method call, space for that method is taken from the beginning of the unused stack space. When the currently-running method returns, its space is returned to the unused space. Thus, the call stack works like the array-based implementation of a stack, and this storage allocation is quite efficient.

What is stored in the space allocated for a variable depends on whether the variable is for a value type or a reference type. For a value type, the value of the variable is stored directly in the space allocated for it. There are two kinds of value types: structures and enumerations. Examples of structures include numeric types such as int, double, and char. An example of an enumeration is DialogResult (see “MessageBoxes” and “File Dialogs”).

Because value types are stored directly in variables, whenever a value is assigned to a variable of a value type, the entire value must be written to the variable. For performance reasons, value types therefore should be fairly small.

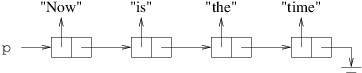

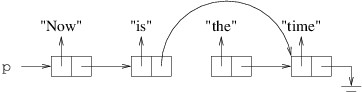

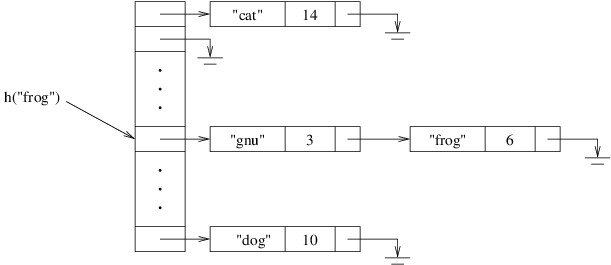

For reference types, the values are not stored directly into the space allocated for the variable. Instead, the variable stores a reference, which is like an address where the value of the variable can actually be found. When a reference type is constructed with a new expression, space for that instance is allocated from a large data structure called the heap (which is unrelated to a heap used to implement a priority queue). Essentially, the heap is a large pool of available memory from which space of different sizes may be allocated at any time. We will not go into detail about how the heap is implemented, but suffice it to say that it is more complicated and less efficient than the stack. When space for a reference type is allocated from the heap, a reference to that space is stored in the variable. Larger data types are more efficiently implemented as reference types because an assignment to a variable of a reference type only needs to write a reference, not the entire data value.

There are four kinds of reference types: classes, interfaces, records, and delegates. Records and delegates are beyond the scope of this course.

Variables of a reference type do not need to refer to any data value. In this case, they store a value of null (variables of a value type cannot store null). Any attempt to access a method, property, or other member of a null or to apply an index to it will result in a NullReferenceException.

The fields of classes or structures are stored in a similar way, depending on whether the field is a value type or a reference type. If it is a value type, the value is stored directly in the field, regardless of whether that field belongs to an object allocated from the stack or the heap. If it is a reference type, it stores either null or a reference to an object allocated from the heap.

The difference between value types and reference types can be illustrated with the following code example:

private int[] DoSomething(int i, int j)
{
    Point a = new(i, j);
    Point b = a;
    a.X = i + j;
    int[] c = new int[10];
    int[] d = c;
    c[0] = b.X;
    return d;
}

Suppose this method is called as follows:

int[] values = DoSomething(1, 2);

The method contains six local variables: i, j, a, b, c, and d. int is a structure, and hence a value type. Point is a structure (and hence a value type) containing public int properties X and Y, each of which can be read or modified. int[ ], however, is a reference type. Space for all six of these variables is allocated from the stack, and the space for the two Points includes space to store two int fields for each. The values 1 and 2 passed for i and j, respectively, are stored directly in these variables.

The constructor in the first line of the method above sets the X property of a to 1 and the Y property of a to 2. The next statement simply copies the value of a - i.e., the point (1, 2) - to b. Thus, when the X property of a is then changed to 3, b is unchanged - it still contains the point (1, 2).

On the other hand, consider what happens when something similar is done with array variables. When c is constructed, it is assigned a new array allocated from the heap and containing 10 locations. These 10 locations are automatically initialized to 0. However, because an array is a reference type, the variable c contains a reference to the actual array object, not the array itself. Thus, when c is copied to d, the array itself is not copied - the reference to the array is copied. Consequently, d and c now refer to the same array object, not two different arrays that look the same. Hence, after we assign c[0] a value of 1, d[0] will also contain a value of 1 because c and d refer to the same array object. (If we want c and d to refer to different array objects, we need to construct a new array for each variable and make sure each location of each array contains the value we want.) The array returned therefore resides on the heap, and contains 1 at index 0, and 0 at each of its other nine locations. The six local variables are returned to unused stack space; however, because the array was allocated from the heap, the calling code may continue to use it.
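The behavior traced above can be checked with a short console program. The following is a minimal sketch, assuming a .NET 6 or later console project that uses top-level statements and assuming that Point is System.Drawing.Point; the variable names mirror those in DoSomething.

```csharp
using System;
using System.Drawing;

int i = 1, j = 2;

Point a = new(i, j);   // a stores the point (1, 2) directly
Point b = a;           // copies the entire value into b
a.X = i + j;           // changes a only

int[] c = new int[10]; // c stores a reference to a new array on the heap
int[] d = c;           // copies the reference, not the array
c[0] = b.X;            // visible through d as well

Console.WriteLine(a.X);                          // 3
Console.WriteLine(b.X);                          // 1
Console.WriteLine(d[0]);                         // 1
Console.WriteLine(object.ReferenceEquals(c, d)); // True
```

The last line confirms that c and d refer to one array object rather than to two arrays with equal contents.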

It is sometimes convenient to be able to store a null in a variable of a value type. For example, we may want to indicate that an int variable contains no meaningful value. In some cases, we can reserve a specific int value for this purpose, but in other cases, there may be no int value that does not have some other meaning within the context. In such cases, we can use the ? operator to define a nullable version of a value type; e.g.,

int? x;

We can do this with any value type. Nullable value types such as int? are the only value types that can store null.
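As a brief illustration of how a nullable value type might be used, consider the sketch below. It assumes a console program with top-level statements; the HasValue and Value properties and the ?? operator are standard parts of C#, but they are not otherwise covered in this section.

```csharp
using System;

int? x = null;                   // x contains no meaningful value yet
Console.WriteLine(x.HasValue);   // False
Console.WriteLine(x ?? -1);      // -1 (the fallback is used because x is null)

x = 42;
Console.WriteLine(x.HasValue);   // True
Console.WriteLine(x.Value + 1);  // 43
```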

Beginning with C# version 8.0, similar annotations using the ? operator are allowed for reference types. In contrast to its use with value types, this operator has no effect on the code execution when it is used with a reference type. Instead, such annotations are used to help programmers to avoid NullReferenceExceptions. For example, the type string is used for variables that should never be null, but string? is used for variables that might be null. Assigning null to a string variable will not throw an exception (though it might lead to a NullReferenceException later); however, starting with .NET 6, the compiler will generate a warning whenever it cannot determine that a value assigned to a non-nullable variable is not null. One way to avoid this warning is to use the nullable version of the type; e.g.,

string? s;

The compiler uses a technique called static analysis to try to determine whether a value assigned to a variable of a non-nullable reference type is non-null. This technique is limited, resulting in many cases in which the value assigned cannot be null, but the compiler gives a warning anyway. (This technique is especially limited in its ability to analyze arrays.) In such cases, the null-forgiving operator ! can be used to remove the warning. Whenever you use this operator, the CIS 300 style requirements specify that you must include a comment explaining why the value assigned cannot be null (see “Comments”).

For example, a StreamReader’s ReadLine method returns null when there are no more lines left in the stream, but otherwise returns a non-null string (see “Advanced Text File I/O”). We can use the StreamReader’s EndOfStream property to determine whether all lines have been read; for example, if input is a StreamReader:

while (!input.EndOfStream)
{
    string line = input.ReadLine();
    // Process the line
}

However, because ReadLine has a return type of string? and the type of line is string, the compiler generates a warning - even though ReadLine will never return null in this context. We can eliminate the warning as follows:

while (!input.EndOfStream)
{
    // Because input is not at the end of the stream, ReadLine won't return null.
    string line = input.ReadLine()!;
    // Process the line
}

Because classes are reference types, it is possible for the definition of a class C to contain one or more fields of type C or, more typically, type C?; for example:

public class C
{
    private C? _nextC;
    . . .
}

Such circularity would be impossible for a value type because there would not be room for anything else if we tried to include a value of type C? within a value of type C. However, because C is a class, and hence a reference type, _nextC simply contains either null or a reference to some object of type C. When the runtime system constructs an instance of type C, it just needs to make it large enough to hold a reference, along with any other fields defined within C. Such recursive definitions are a powerful way to link together many instances of a type. See “Linked Lists” and “Trees” for more information.

Because all types in C# are subtypes of object, which is a reference type, every value type is a subtype of at least one reference type (however, value types cannot themselves have subtypes). It is therefore possible to assign an instance of a value type to a variable of a reference type; for example:

object x = 3;

When this is done, a boxed version of the value type is constructed, and the value is copied to it. The boxed version of the value type is just like the original value type, except that it is allocated from the heap and accessed by reference, not by value. A reference to this boxed version is then assigned to the variable of the reference type. Note that multiple variables of the reference type may refer to the same boxed instance of the value type.
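The following sketch illustrates boxing under the assumption of a console program with top-level statements. The variable names are illustrative only, and the cast in the last line performs unboxing, which this section does not otherwise discuss.

```csharp
using System;

int n = 3;
object x = n;   // boxes a copy of n on the heap
object y = x;   // copies the reference; x and y share one boxed int
n = 4;          // does not affect the boxed copy

Console.WriteLine(x);                            // 3
Console.WriteLine(object.ReferenceEquals(x, y)); // True
Console.WriteLine((int)x + 1);                   // 4 (the cast unboxes the value)
```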

Note also that boxing may also occur when passing parameters. For example, suppose we have a method:

private object F(object x)
{
}

If we call F with a parameter of 3, then 3 will need to be copied to a boxed int, and a reference to this boxed int will be assigned to x within F.

Enumerations

An enumeration is a value type containing a set of named constants. An example of an enumeration is DialogResult (see “MessageBoxes” and “File Dialogs”). The DialogResult type contains the following members:

- DialogResult.Abort

- DialogResult.Cancel

- DialogResult.Ignore

- DialogResult.No

- DialogResult.None

- DialogResult.OK

- DialogResult.Retry

- DialogResult.Yes

Each of the above members has a different constant value. In many cases, we are not interested in the specific value of a given member. Instead, we are often only interested in whether two expressions of this type have the same value. For example, the following code fragment is given in the “MessageBoxes” section:

DialogResult result = MessageBox.Show("The file is not saved. Really quit?", "Confirm Quit", MessageBoxButtons.YesNo);
if (result == DialogResult.Yes)
{
    Application.Exit();
}

In the if-statement above, we are only interested in whether the user closed the MessageBox with the “Yes” button; i.e., we want to know whether the Show method returned the same value as DialogResult.Yes. For this purpose, we don’t need to know anything about the value of DialogResult.Yes or any of the other DialogResult members.

However, there are times when it is useful to know that the values in an enumeration are always integers. Using a cast, we can assign a member of an enumeration to an int variable or otherwise use it as we would an int; for example, after the code fragment above, we can write:

int i = (int)result;

As a more involved example, we can loop through the values of an enumeration:

for (DialogResult r = 0; (int)r < 8; r++)
{
    MessageBox.Show(r.ToString());
}

The above loop will display 8 MessageBoxes in sequence, each displaying the name of a member of the enumeration (i.e., “None”, “OK”, etc.).

Variables of an enumeration type may be assigned any value of the enumeration’s underlying type (usually int, as we will discuss below). For example, if we had used the condition (int)r < 10 in the above for statement, the loop would continue two more iterations, showing 8 and 9 in the last two MessageBoxes.
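Because DialogResult requires Windows Forms, the sketch below substitutes System.DayOfWeek (whose members Sunday through Saturday have the values 0 through 6) to show the same two ideas in a plain console program: casting a member to int, and storing a value that names no member. Enum.IsDefined is a standard .NET method that is not otherwise covered here.

```csharp
using System;

DayOfWeek day = DayOfWeek.Tuesday;
int i = (int)day;
Console.WriteLine(i);       // 2

DayOfWeek other = (DayOfWeek)9;  // compiles, although 9 names no member
Console.WriteLine(other);   // 9
Console.WriteLine(Enum.IsDefined(typeof(DayOfWeek), other)); // False
```

A check such as Enum.IsDefined is one way to guard against values outside the named members when that matters.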

An enumeration is defined using an enum statement, which is similar to a class statement except that in the simplest case, the body of an enum is simply a listing of the members of the enumeration. For example, the DialogResult enumeration is defined as follows:

public enum DialogResult
{
    None, OK, Cancel, Abort, Retry, Ignore, Yes, No
}

This definition defines DialogResult.None as having the value 0, DialogResult.OK as having the value 1, etc.

As mentioned above, each enumeration has an underlying type. By default, this type is int, but an enum statement may specify another underlying type, as follows:

public enum Beatles : byte
{
    John, Paul, George, Ringo
}

The above construct defines the underlying type for the enumeration Beatles to be byte; thus, a variable of type Beatles may be assigned any byte value. The following integer types may be used as underlying types for enumerations:

- byte (0 through 255)

- sbyte (-128 through 127)

- short (-32,768 through 32,767)

- ushort (0 through 65,535)

- int (-2,147,483,648 through 2,147,483,647)

- uint (0 through 4,294,967,295)

- long (-9,223,372,036,854,775,808 through 9,223,372,036,854,775,807)

- ulong (0 through 18,446,744,073,709,551,615)

It is also possible to define members of an enumeration so that they are not simply the values 0, 1, etc. For example, we might alter the Beatles enumeration as follows:

public enum Beatles : byte
{
    John = 1, Paul, George = 5, Ringo
}

This defines the following values for the members:

- Beatles.John: 1

- Beatles.Paul: 2

- Beatles.George: 5

- Beatles.Ringo: 6

Thus, if a value is explicitly assigned to a member, that member takes on that value; otherwise, that member takes on the value one greater than that of the previous member listed, or 0 if that member is the first listed. Note that using this technique, it is possible to define two members with the same value, although this is usually undesirable. If assigning values in this way would lead to a value outside the range of the underlying type, a compile-time error results (for example, if George were assigned 255 in the above definition, thus causing Ringo to have a value outside the range of a byte).
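The values listed above can be confirmed with a short program. This is a sketch that assumes .NET 5 or later (for the generic Enum.GetValues method) and a console program with top-level statements.

```csharp
using System;

// Prints each member's name followed by its underlying byte value.
foreach (Beatles b in Enum.GetValues<Beatles>())
{
    Console.WriteLine($"{b} = {(byte)b}");
}
// Output, one per line: John = 1, Paul = 2, George = 5, Ringo = 6

public enum Beatles : byte
{
    John = 1, Paul, George = 5, Ringo
}
```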

One reason we might want to define explicit values for members of an enumeration is if we want to use the members as flags. For example, one of the MessageBox.Show methods takes as one of its parameters a MessageBoxOptions, which is an enumeration containing the following members:

- MessageBoxOptions.DefaultDesktopOnly

- MessageBoxOptions.RightAlign

- MessageBoxOptions.RtlReading

- MessageBoxOptions.ServiceNotification

The meaning of each of these members is unimportant for the purposes of this discussion. The point is that the values of these members are chosen in such a way that more than one of them can be combined into a single value. The way this is done is to define each member as a different power of 2. The binary representation of a power of 2 contains exactly one bit with a value of 1. Thus, these values can be combined using a logical OR operator, and the original values can be retrieved using a logical AND operator.

For example, suppose the MessageBoxOptions enumeration is defined as follows:

public enum MessageBoxOptions
{
    DefaultDesktopOnly = 1,
    RightAlign = 2,
    RtlReading = 4,
    ServiceNotification = 8
}

Note

The definition in .NET 6 uses different powers of 2, but the principle is the same.

Now suppose we want to create a MessageBox that will be displayed on the default desktop with right-aligned text. We can combine these options using the expression

MessageBoxOptions.DefaultDesktopOnly | MessageBoxOptions.RightAlign

This expression combines corresponding bits of the two operands using a logical OR. Recall that the logical OR of two bits is 1 if at least one of the two bits is 1. If both bits are 0, the result is 0. In this example, the operands have a 1 in different bit locations. When we combine them using logical OR, both of these bit positions will contain a 1:

0000 0000 0000 0000 0000 0000 0000 0001

0000 0000 0000 0000 0000 0000 0000 0010

---------------------------------------

0000 0000 0000 0000 0000 0000 0000 0011

We can therefore specify both of these options to the Show method as follows:

MessageBox.Show("Hello\nworld!", "Hello", MessageBoxButtons.OK,
    MessageBoxIcon.Information, MessageBoxDefaultButton.Button1,
    MessageBoxOptions.DefaultDesktopOnly |
    MessageBoxOptions.RightAlign);

The \n in the above example specifies the end of a line; hence, “Hello” and “world!” will be displayed on separate lines, aligned on the right:

The Show method determines which bits are 1 in the MessageBoxOptions parameter using a logical AND. Recall that a logical AND of two bits is 1 only if both bits are 1. In all other cases, the result is 0. Suppose, then, that options is a MessageBoxOptions variable with an unknown value. Because each named member of the MessageBoxOptions enumeration (e.g., MessageBoxOptions.RightAlign) has exactly one bit with a value of 1, an expression like

options & MessageBoxOptions.RightAlign

can have only two possible values:

- If the bit position containing the 1 in MessageBoxOptions.RightAlign also contains a 1 in options, then the expression’s value is MessageBoxOptions.RightAlign.

- Otherwise, the expression’s value is 0.

Thus, the Show method can use code like:

if ((options & MessageBoxOptions.RightAlign) == MessageBoxOptions.RightAlign)
{
    // Code to right-align the text
}
else
{
    // Code to left-align the text
}
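The combine-then-test pattern described above can be tried without Windows Forms. The sketch below uses a hypothetical enumeration, TextOptions, invented purely for illustration; it assumes a console program with top-level statements.

```csharp
using System;

// Combine two flags with OR; the result has two bits set (binary 101).
TextOptions options = TextOptions.Bold | TextOptions.Underline;
Console.WriteLine((int)options); // 5

// Test individual flags with AND.
Console.WriteLine((options & TextOptions.Underline) == TextOptions.Underline); // True
Console.WriteLine((options & TextOptions.Italic) == TextOptions.Italic);       // False

// Hypothetical enumeration: each member is a different power of 2.
public enum TextOptions
{
    Bold = 1,
    Italic = 2,
    Underline = 4
}
```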

Defining enumerations to be used as flags in this way can be made easier by writing the powers of 2 in hexadecimal, or base 16. Each hex digit contains one of 16 possible values: the ten digits 0-9 or the six letters a-f (in either lower or upper case). A hex digit is exactly four bits; hence, the hex values containing one occurrence of either 1, 2, 4, or 8, with all other digits 0, are exactly the powers of 2. To write a number in hex in a C# program, start with 0x, then give the hex digits. For example, we can define the following enumeration to represent the positions a baseball player is capable of playing:

public enum Positions
{
    Pitcher = 0x1,
    Catcher = 0x2,
    FirstBase = 0x4,
    SecondBase = 0x8,
    ThirdBase = 0x10,
    Shortstop = 0x20,
    LeftField = 0x40,
    CenterField = 0x80,
    RightField = 0x100
}

We can then encode that a player is capable of playing 1st base, left field, center field, or right field with the expression:

Positions.FirstBase | Positions.LeftField | Positions.CenterField | Positions.RightField

This expression would give a value having four bit positions containing 1:

0000 0000 0000 0000 0000 0001 1100 0100
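The bit pattern above can be reproduced with the following sketch, which assumes a console program with top-level statements. Convert.ToString with a base of 2 omits leading zeros, so only the nine low-order bits are printed.

```csharp
using System;

Positions p = Positions.FirstBase | Positions.LeftField
    | Positions.CenterField | Positions.RightField;

Console.WriteLine(Convert.ToString((int)p, 2));    // 111000100
Console.WriteLine((p & Positions.LeftField) != 0); // True
Console.WriteLine((p & Positions.Catcher) != 0);   // False

public enum Positions
{
    Pitcher = 0x1,
    Catcher = 0x2,
    FirstBase = 0x4,
    SecondBase = 0x8,
    ThirdBase = 0x10,
    Shortstop = 0x20,
    LeftField = 0x40,
    CenterField = 0x80,
    RightField = 0x100
}
```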

For more information on enumerations, see the section “Enumeration Types” in the C# Reference.

Structures

A structure is similar to a class, except that it is a value type, whereas a class is a reference type. A structure definition looks a lot like a class definition; for example, the following defines a structure for storing information associated with a name:

/// <summary>
/// Stores a frequency and a rank.
/// </summary>
public readonly struct FrequencyAndRank
{
    /// <summary>
    /// Gets the Frequency.
    /// </summary>
    public float Frequency { get; }

    /// <summary>
    /// Gets the Rank.
    /// </summary>
    public int Rank { get; }

    /// <summary>
    /// Initializes a FrequencyAndRank with the given values.
    /// </summary>
    /// <param name="freq">The frequency.</param>
    /// <param name="rank">The rank.</param>
    public FrequencyAndRank(float freq, int rank)
    {
        Frequency = freq;
        Rank = rank;
    }

    /// <summary>
    /// Obtains a string representation of the frequency and rank.
    /// </summary>
    /// <returns>The string representation.</returns>
    public override string ToString()
    {
        return Frequency + ", " + Rank;
    }
}

Note that the above definition looks just like a class definition, except that the keyword struct is used instead of the keyword class, and the readonly modifier is used. The readonly modifier cannot be used with a class definition, but is often used with a structure definition to indicate that the structure is immutable. The compiler then verifies that the structure definition does not allow any fields to be changed; for example, it verifies that no property has a set accessor.

A structure can be defined anywhere a class can be defined. However, one important restriction on a structure definition is that no field can be of the same type as the structure itself. For example, the following definition is not allowed:

public struct S
{
    private S _nextS;
}

The reason for this restriction is that because a structure is a value type, each instance would need to contain enough space for another instance of the same type, and this instance would need enough space for another instance, and so on forever. This type of circular definition is prohibited even if it is indirect; for example, the following is also illegal:

public struct S
{
    public T NextT { get; }
}

public struct T
{
    public S? NextS { get; }
}

Because the NextT property uses the default implementation, each instance of S contains a hidden field of type T. Because T is a value type, each instance of S needs enough space to store an instance of T. Likewise, because the NextS property uses the default implementation, each instance of T contains a hidden field of type S?. Because S is a value type, each instance of T - and hence each instance of S - needs enough space to store an instance of S?, which in turn needs enough space to store an instance of S. Again, this results in circularity that is impossible to satisfy.

Any structure must have a constructor that takes no parameters. If one is not explicitly provided, a default constructor containing no statements is included. If one is explicitly provided, it must be public. Thus, an instance of a structure can always be constructed using a no-parameter constructor. If no code for such a constructor is provided, each field that does not contain an initializer is set to its default value.

If a variable of a structure type is assigned its default value, each of its fields is set to its default value, regardless of any initializers in the structure definition. For example, if FrequencyAndRank is defined as above, then the following statement will set both x.Frequency and x.Rank to 0:

FrequencyAndRank x = default;
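To see the difference between constructing a structure and assigning its default value, consider the sketch below. Counter is a hypothetical structure introduced only for this illustration, and the code assumes C# 10 or later (which permits explicit parameterless constructors and initializers in structures) in a console program with top-level statements.

```csharp
using System;

Counter a = new();      // runs the parameterless constructor and the initializer
Counter b = default;    // bypasses both; every field gets its default value

Console.WriteLine(a.Start); // 10
Console.WriteLine(b.Start); // 0

// Hypothetical structure used only for illustration.
public struct Counter
{
    public int Start { get; } = 10;

    public Counter()
    {
    }
}
```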

Warning

Because the default value of a type can always be assigned to a variable of that type, care should be taken when including fields of reference types within a structure definition. Because the default instance of this structure will contain null values for all fields of reference types, these fields should be defined to be nullable. The compiler provides no warnings about this.

For more information on structures, see the section, “Structure types” in the C# Language Reference.

The decimal Type

A decimal is a structure representing a floating-point decimal number. The main difference between a decimal and a float or a double is that a decimal can store any value that can be written using no more than 28 decimal digits, a decimal point, and optionally a ‘-’, without rounding. For example, the value 0.1 cannot be stored exactly in either a float or a double because its binary representation is infinite (0.000110011…); however, it can be stored exactly in a decimal.
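The practical effect of this difference shows up in simple arithmetic. The sketch below assumes a console program with top-level statements; the m suffix, which marks a literal as a decimal, is standard C# but is not otherwise introduced in this section.

```csharp
using System;

double dSum = 0.1 + 0.2;
decimal mSum = 0.1m + 0.2m;

Console.WriteLine(dSum == 0.3);  // False: 0.1 and 0.2 are rounded when stored in binary
Console.WriteLine(mSum == 0.3m); // True: each value is stored exactly
```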

Various types, such as int, double, or string, may be converted to a decimal using a Convert.ToDecimal method; for example, if i is an int, we can convert it to a decimal with:

decimal d = Convert.ToDecimal(i);

A decimal is represented internally with the following three components:

- A 96-bit value v storing the digits (0 ≤ v ≤ 79,228,162,514,264,337,593,543,950,335).

- A sign bit s, where 1 denotes a negative value.

- A scale factor d to indicate where the decimal point is (0 ≤ d ≤ 28).

The value represented is then $(-1)^s \cdot v / 10^d$.

For example, 123.456 can be represented by setting v to 123,456, s to 0, and d to 3.
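These three components can be inspected with the standard decimal.GetBits method, which returns four int values: the low, middle, and high 32 bits of v, followed by a flags word holding d in bits 16 through 23 and s in bit 31. The following is a sketch for a console program with top-level statements; the local variable names are illustrative.

```csharp
using System;

int[] bits = decimal.GetBits(123.456m);

int low = bits[0];                    // low 32 bits of v (the rest are 0 here)
int scale = (bits[3] >> 16) & 0xFF;   // the scale factor d
bool negative = bits[3] < 0;          // the sign bit s is the top bit of the flags word

Console.WriteLine(low);      // 123456
Console.WriteLine(scale);    // 3
Console.WriteLine(negative); // False
```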

Read-Only and Constant Fields

Field declarations may contain one of the keywords readonly or const to indicate that these fields will always contain the same values. Such declarations are useful for defining a value that is to be used throughout a class or structure definition, or throughout an entire program. For example, we might define:

public class ConstantsExample
{
    public readonly int VerticalPadding = 12;
    private const string _humanPlayer = "X";
    . . .
}

Subsequently throughout the above class, the identifier _humanPlayer will refer to the string, “X”. Because VerticalPadding is public, the VerticalPadding field of any instance of this ConstantsExample will contain the value 12 throughout the program. Such definitions are useful for various reasons, but perhaps the most important is that they make the program more maintainable. For example, VerticalPadding may represent some distance within a graphical layout. At some point in the lifetime of the software, it may be decided that this distance should be changed to 10 in order to give a more compact layout. Because we are using a readonly field rather than a literal 12 everywhere this distance is needed, we can make this change by simply changing the 12 in the above definition to 10.

When defining a const field, an initializer is required. The value assigned by the initializer must be a value that can be computed at compile time. For this reason, a constant field of a reference type can only be a string or null. The assigned value may be an expression, and this expression may contain other const fields, provided these definitions don’t mutually depend on each other. Thus, for example, we could add the following to the above definition:

private const string _paddedHumanPlayer = " " + _humanPlayer + " ";

const fields may not be declared as static, as they are already implicitly static.

A readonly field differs from a const field mainly in that it is initialized at runtime, whereas a const field is initialized at compile time. This difference has several ramifications. First, a readonly field may be initialized in a constructor as an alternative to using an initializer. Second, a readonly field may be either static or non-static. These differences imply that in different instances of the same class or structure, a readonly field may have different values.
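As an illustration of these differences, the sketch below initializes a readonly field in a constructor so that two instances hold different values. Layout and its members are hypothetical names chosen for this example, and the code assumes a console program with top-level statements.

```csharp
using System;

Layout compact = new(10);
Layout roomy = new(12);

Console.WriteLine(compact.VerticalPadding); // 10
Console.WriteLine(roomy.VerticalPadding);   // 12
Console.WriteLine(Layout.Columns);          // 3 (accessed through the type, not an instance)

// Hypothetical class used only for illustration.
public class Layout
{
    public const int Columns = 3;         // fixed at compile time; implicitly static
    public readonly int VerticalPadding;  // fixed once per instance at run time

    public Layout(int verticalPadding)
    {
        VerticalPadding = verticalPadding;
    }
}
```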

One final difference between a readonly field and a const field is that a readonly field may be of any type and contain any value. Care must be taken, however, when defining a readonly reference type. For example, suppose we define the following:

private readonly string[] _names = { "Peter", "Paul", "Mary" };

Defining _names to be readonly guarantees that this field will always refer to the same array after its containing instance is constructed. However, it does not guarantee that the three array locations will always contain the same values. For this reason, the use of readonly for public fields of mutable reference types is discouraged.
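The limitation just described can be demonstrated with a small sketch. Trio, First, and Rename are hypothetical names used only here; the code assumes a console program with top-level statements.

```csharp
using System;

Trio t = new();
Console.WriteLine(t.First); // Peter
t.Rename();
Console.WriteLine(t.First); // Pete

// Hypothetical class used only for illustration.
public class Trio
{
    private readonly string[] _names = { "Peter", "Paul", "Mary" };

    public string First => _names[0];

    public void Rename()
    {
        _names[0] = "Pete";         // allowed: changes the array's contents
        // _names = new string[3];  // compile-time error: _names is readonly
    }
}
```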

readonly is preferred over const for a public field whose value may change later in the software lifecycle. If the value of a public const field is changed by a code revision, any code using that field will need to be recompiled to incorporate that change.

Properties

A property is used syntactically like a field of a class or structure, but provides greater flexibility in implementation. For example, the string class contains a public property called Length. This property is accessed in code much as if it were a public int field; i.e., if s is a string variable, we can access its Length property with the expression s.Length, which evaluates to an int. If Length were a public int field, we would access it in just the same way. However, it turns out that we cannot assign a value to this property, as we can to a public field; i.e., the statement,

s.Length = 0;

is not allowed. The reason for this limitation is that properties can be defined to restrict whether they can be read from or written to. The Length property is defined so that it can be read from, but not written to. This flexibility is one of the two main differences between a field and a property. The other main difference has to do with maintainability and is therefore easier to understand once we see how to define a property.

Suppose we wish to provide full read/write access to a double value. Rather than defining a public double field, we can define a simple double property as follows:

public double X { get; set; }

This property then functions just like a public field: the get keyword allows code to read from the property, and the set keyword allows code to write to the property. A property definition requires at least one of these keywords, but one of them may be omitted to define a read-only property (if set is omitted) or a write-only property (if get is omitted). For example, the following defines X to be a read-only property:

public double X { get; }

Although this property is read-only, the constructor for the class or structure containing this definition is allowed to initialize it. Sometimes, however, we want certain methods of the containing class or structure to be able to modify the property’s value without allowing user code to do so. To accomplish this, we can define X in this way:

public double X { get; private set; }

The above examples are the simplest ways to define properties. They all rely on the default implementation of the property. Unlike a field, the name of the property is not actually a variable; instead, there is a hidden variable that is automatically defined. The only way this hidden variable can be accessed is through the property.

Warning

Don’t define a private property using the default implementation. Use a private field instead.

The distinction between a property and its hidden variable may seem artificial at first. However, the real flexibility of a property is revealed by the fact that we can define our own implementation, rather than relying on the default implementation. For example, suppose a certain data structure stores a StringBuilder called _word, and we want to provide read-only access to its length. We can facilitate this by defining the following property:

public int WordLength
{
    get => _word.Length;
}

In fact, we can abbreviate this definition as follows:

public int WordLength => _word.Length;

In this case, the get keyword is implied. In either case, the code to the right of the “=>” must be an expression whose type is the same as the property’s type. Note that when we provide such an expression, there is no longer a hidden variable, as we have provided explicit code indicating how the value of the property is to be computed.

We can also provide an explicit implementation for the set accessor. Suppose, for example, that we want to allow the user read/write access to the length of _word. In order to be able to provide write access, we must be able to acquire the value that the user wishes to assign to the length. C# provides a keyword value for this purpose - its type is the same as the type of the property, and it stores the value that user code assigns to the property. Hence, we can define the property as follows:

public int WordLength

{

get => _word.Length;

set => _word.Length = value;

}
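As a minimal sketch of how user code sees such a property, the hypothetical class WordHolder below wraps a StringBuilder and exposes the WordLength property defined above. The class name, its constructor, and its ToString override are assumptions made only for illustration.

```csharp
using System;
using System.Text;

// Hypothetical class; only the WordLength property comes from the text above.
public class WordHolder
{
    private readonly StringBuilder _word;

    public WordHolder(string initial)
    {
        _word = new StringBuilder(initial);
    }

    // No hidden variable is created: both accessors delegate to _word.
    public int WordLength
    {
        get => _word.Length;
        set => _word.Length = value;
    }

    public override string ToString() => _word.ToString();
}

public static class WordLengthDemo
{
    public static void Main()
    {
        WordHolder h = new WordHolder("property");
        Console.WriteLine(h.WordLength);   // get accessor: prints 8
        h.WordLength = 4;                  // set accessor runs with value = 4
        Console.WriteLine(h);              // the StringBuilder was truncated: prints prop
    }
}
```

User code reads and assigns WordLength exactly as it would a field, even though no field of that name exists.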

It is this flexibility in defining the implementation of a property that makes public properties more maintainable than public fields. Returning to the example at the beginning of this section, suppose we had simply defined X as a public double field. As we pointed out above, such a field could be used by user code in the same way as the first definition of the property X. However, a field is part of the implementation of a class or structure. By making it public, we have exposed part of the implementation to user code. This means that if we later change this part of the implementation, we will potentially break user code that relies on it. If, instead, we were to use a property, we can then change the implementation by modifying the get and/or set accessors. As long as we don’t remove either accessor (or make it private), such a change is invisible to user code. Due to this maintainability, good programmers will never use public fields (unless they are constants); instead, they will use public properties.

In some cases, we need more than a single expression to define a get or set accessor. For example, suppose a data structure stores an int[ ] _elements, and we wish to provide read-only access to this array. In order to ensure read-only access, we don’t want to give user code a reference to the array, as the code would then be able to modify its contents. We therefore wish to make a copy of the array, and return that copy to the user code (though a better solution might be to define an indexer). We can accomplish this as follows:

public int[ ] Elements

{

get

{

int[] temp = new int[_elements.Length];

_elements.CopyTo(temp, 0);

return temp;

}

}

Thus, arbitrary code may be included within the get accessor, provided it returns a value of the appropriate type; however, it is good programming practice to avoid changing the fields of a class or structure within the get accessor of one of its properties. In a similar way, arbitrary code may be used to implement a set accessor. As we can see from this most general way of defining properties, they are really more like methods than fields.
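The effect of returning a copy can be checked with a short sketch. The class name IntBag and its initial contents are hypothetical; only the Elements property is taken from the text above.

```csharp
using System;

// Hypothetical container; only the Elements property comes from the text above.
public class IntBag
{
    private readonly int[] _elements = { 1, 2, 3 };

    public int[] Elements
    {
        get
        {
            int[] temp = new int[_elements.Length];
            _elements.CopyTo(temp, 0);
            return temp;
        }
    }
}

public static class ElementsDemo
{
    public static void Main()
    {
        IntBag bag = new IntBag();
        int[] copy = bag.Elements;
        copy[0] = 99;                         // changes only the copy
        Console.WriteLine(bag.Elements[0]);   // prints 1: the private array is unchanged
    }
}
```

Note that every use of Elements allocates a new array, which is one reason an indexer may be the better design.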

Given how similar accessors are to methods, we might also wonder why we don’t just use methods instead of properties. In fact, we can do just that - properties don’t give any functional advantage over methods, and in fact, some object-oriented languages don’t have properties. The advantage is stylistic. Methods are meant to perform actions, whereas properties are meant to represent entities. Thus, we could define methods GetX and SetX to provide access to the private field _x; however, it is stylistically cleaner to define a property called X.

Indexers


Recall that the System.Collections.Generic.Dictionary<TKey, TValue> class (see “The Dictionary<TKey, TValue> Class”) allows keys to be used as indices for the purpose of adding new keys and values, changing the value associated with a key, and retrieving the value associated with a key in the table. In this section, we will discuss how to implement this functionality.

An indexer in C# is defined using the following syntax:

public TValue this[TKey k]

{

get

{

// Code to retrieve the value with key k

}

set

{

// Code to associate the given value with key k

}

}

Note the resemblance of the above code to the definition of a property. The biggest differences are:

- In place of a property name, an indexer uses the keyword this.

- The keyword this is followed by a nonempty parameter list enclosed in square brackets.

Thus, an indexer is like a property with parameters. The parameters are the indices themselves; i.e., if d is a Dictionary<TKey, TValue> and key is a TKey, d[key] invokes the indexer with parameter key. In general, either the get accessor or the set accessor may be omitted, but at least one of them must be included. As in a property definition, the set accessor can use the keyword value for the value being assigned - in this case, the value to be associated with the given key. The value keyword and the return type of the get accessor will both be of type TValue, the type given prior to the keyword this in the above code.

We want to implement the indexer to behave in the same way as the indexer for System.Collections.Generic.Dictionary<TKey, TValue>. Thus, the get accessor is similar to the TryGetValue method, as outlined in “A Simple Hash Table Implementation”, with a few important differences. First, the get accessor has no out parameter. Instead, it returns the value that TryGetValue assigns to its out parameter when the key is found. When the key is not found, because it can’t return a bool to indicate this, it instead throws a KeyNotFoundException.

Likewise, the set accessor is similar to the Add method, as outlined in “A Simple Hash Table Implementation”. However, whereas the Add method has a TValue parameter through which to pass the value to be associated with the given key, the set accessor gets this value from the value keyword. Furthermore, we don’t want the set accessor to throw an exception if the key is found. Instead, we want it to replace the Data of the cell containing this key with a new KeyValuePair containing the key with the new value.
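The behavior described above can be sketched as follows. This is not the hash table implementation the text refers to: as a simplifying assumption, the hypothetical class SimpleDictionary keeps its pairs in a List and searches it linearly with a hypothetical helper Find, so that the example stays self-contained.

```csharp
using System;
using System.Collections.Generic;

// Simplified stand-in for the hash table in the text: pairs are kept in a
// List and searched linearly. The class name is hypothetical.
public class SimpleDictionary<TKey, TValue> where TKey : notnull
{
    private readonly List<KeyValuePair<TKey, TValue>> _pairs = new();

    // Returns the location of k in _pairs, or -1 if k is not present.
    private int Find(TKey k)
    {
        for (int i = 0; i < _pairs.Count; i++)
        {
            if (_pairs[i].Key.Equals(k))
            {
                return i;
            }
        }
        return -1;
    }

    public TValue this[TKey k]
    {
        get
        {
            int i = Find(k);
            if (i < 0)
            {
                throw new KeyNotFoundException();   // no bool to return, so throw
            }
            return _pairs[i].Value;
        }
        set
        {
            KeyValuePair<TKey, TValue> p = new(k, value);
            int i = Find(k);
            if (i < 0)
            {
                _pairs.Add(p);   // new key: behaves like Add
            }
            else
            {
                _pairs[i] = p;   // existing key: replace instead of throwing
            }
        }
    }
}

public static class IndexerDemo
{
    public static void Main()
    {
        SimpleDictionary<string, int> d = new();
        d["one"] = 1;                  // set accessor adds the key
        d["one"] = 100;                // set accessor replaces the value
        Console.WriteLine(d["one"]);   // get accessor: prints 100
    }
}
```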

The Keywords static and this


Object-oriented programming languages such as C# are centered on the concept of an object. Class and structure definitions give instructions for constructing individual objects of various types, normally by using the new keyword. When an object is constructed, it has its own fields in which values may be stored. Specifically, if type T has an int field called _length, then each object of type T will have such a field, and each of these fields may store a different int. Thus, for example, if x and y are instances of type T, then x._length may contain 7, while y._length may contain 12.

Likewise, we can think of each object as having its own methods and properties, because when any of these methods or properties use the fields of the containing class or structure, they access the fields belonging to a specific object. For example, if type T contains an Add method that changes the value stored in the _length field, then a call x.Add will potentially change the value stored in x._length.

However, there are times when we want to define a field, method, or property, but we don’t want it associated with any specific object. For example, suppose we want to define a unique long value for each instance of some class C. We can define a private long field _id within this class and give it a value within its constructor. But how do we get this value in a way that ensures that it is unique? One way is to define a private static long field _nextId, as in the following code:

public class C

{

private static long _nextId = 0;

private long _id;

public C()

{

_id = _nextId;

_nextId++;

}

// Other members could also be defined.

}

By defining _nextId to be static, we are specifying that each instance of C will not contain a _nextId field, but instead, there is a single _nextId field belonging to the entire class. As a result, code belonging to any instance of C can access this one field. Thus, each time an instance of C is constructed, this one field is incremented. This field therefore acts as a counter that keeps track of how many instances of C have been constructed. On the other hand, because _id is not static, each instance of C contains an _id field. Thus, when the assignment,

_id = _nextId;

is done, the value in the single _nextId field is copied to the value of the _id field belonging to the instance being constructed. Because the single _nextId field is incremented every time a new instance of C is constructed, each instance receives a different value for _id.
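To observe this behavior, the sketch below repeats the class C and adds two hypothetical read-only properties, Id and InstancesConstructed, which are assumptions made only so that the private fields can be inspected.

```csharp
using System;

public class C
{
    private static long _nextId = 0;   // one field shared by the whole class
    private long _id;                  // one field per instance

    public C()
    {
        _id = _nextId;
        _nextId++;
    }

    // Hypothetical read-only properties, added so the fields can be observed.
    public long Id => _id;
    public static long InstancesConstructed => _nextId;
}

public static class StaticFieldDemo
{
    public static void Main()
    {
        C a = new C();
        C b = new C();
        C c = new C();
        Console.WriteLine($"{a.Id} {b.Id} {c.Id}");   // prints 0 1 2
        // A static member is accessed through the class, not an instance.
        Console.WriteLine(C.InstancesConstructed);    // prints 3
    }
}
```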

We can also define static methods or properties. For example, the MessageBox.Show(string text) method is static. Because it is static, we don’t need a MessageBox object in order to call this method - we simply call something like:

MessageBox.Show("Hello world!");

static methods can also be useful for avoiding NullReferenceExceptions. For example, there are times when we want to determine whether a variable x contains null, but x is of an unknown type (perhaps its type is defined by some type parameter T). In such a case, we cannot use == to make the comparison because == is not defined for all types. Furthermore, the following will never work:

if (x.Equals(null))

{

}

Such code will compile, but if x is null, then calling its Equals method will throw a NullReferenceException. In all other cases, the if-condition will evaluate to false. Fortunately, a static Equals method is available to handle this situation:

if (Equals(x, null))

{

}

Because this method is defined within the object class, which is a supertype of every other type in C#, we can refer to this method without specifying the containing class, just as if we had defined it in the class or structure we are writing. Because this method does not belong to individual objects, we don’t need any specific object available in order to call it. It therefore avoids a NullReferenceException.
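A minimal sketch of this null test inside generic code is shown below. The method name IsNull and the class NullCheckDemo are hypothetical.

```csharp
using System;

public static class NullCheckDemo
{
    // T is unconstrained, so x may be of a reference type or a value type.
    public static bool IsNull<T>(T x)
    {
        // The static object.Equals method checks for null before it calls
        // any instance method, so a null x cannot cause an exception here.
        return Equals(x, null);
    }

    public static void Main()
    {
        string? s = null;
        Console.WriteLine(IsNull(s));         // True
        Console.WriteLine(IsNull("hello"));   // False
        Console.WriteLine(IsNull(0));         // False: a boxed int is never null
    }
}
```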

Because a static method or property does not belong to any instance of its type, it cannot access any non-static members directly, as they all belong to specific instances of the type. If, however, the code has access to a specific instance of the type (for example, this instance might be passed as a parameter), the code may reference non-static members of that instance. For example, suppose we were to add to the class C above a method such as:

public static int DoSomething(C x)

{

}

Code inside this method would be able to access _nextId, but not _id. Furthermore, it would be able to access any static methods or properties contained in the class definition, as well as all constructors, but no non-static methods or properties. However, it may access x._id, as well as any other members of x.

Code within a constructor or a non-static method or property can also access the object that contains it by using the keyword this. Thus, in the constructor code above, we could have written the line

_id = _nextId;

as

this._id = _nextId;

In fact, the way we originally wrote the code is simply an abbreviation of the above line. Another way of thinking of the restrictions on code within a static method or property is that this code cannot use this, either explicitly or implicitly.

out and ref Parameters


Normally, when a method is called, the call-by-value mechanism is used. Suppose, for example, we have a method:

private void DoSomething(int k)

{

}

We can call this method with a statement like:

DoSomething(n);

provided n is an initialized variable consistent with the int type. For example, suppose n is an int variable containing a value of 28. The call-by-value mechanism works by copying the value of n (i.e., 28) to k. Whatever the DoSomething method may do to k has no effect on n — they are different variables. The same can be said if we had instead passed a variable k — the k in the calling code is still a different variable from the k in the DoSomething method. Finally, if we call DoSomething with an expression like 9 + n, the mechanism is the same.

If a parameter is of a reference type, the same mechanism is used, but it is worth considering that case separately to see exactly what happens. Suppose, for example, that we have the following method:

private void DoSomethingElse(int[] a)

{

a[0] = 1;

a = new int[10];

a[1] = 2;

}

Further suppose that we call this method with

int[] b = new int[5];

DoSomethingElse(b);

The initialization of b above assigns to b a reference to an array containing five 0s. The call to DoSomethingElse copies the value of b to a. Note, however, that the value of b is a reference; hence, after this value is copied, a and b refer to the same five-element array. Therefore, when a[0] is assigned 1, b[0] also becomes 1. When a is assigned a new array, however, this does not affect b, as b is a different variable — b still refers to the same five-element array. Furthermore, when a[1] is assigned a value of 2, because a and b now refer to different arrays, the contents of b are unchanged. Thus, when DoSomethingElse completes, b will refer to a five-element array whose element at location 0 is 1, and whose other elements are 0.
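The outcome described above can be confirmed with a short program. The wrapper class CallByValueDemo and the helper Run are hypothetical; DoSomethingElse is the method from the text, made static so that it can be called without an instance.

```csharp
using System;

public static class CallByValueDemo
{
    private static void DoSomethingElse(int[] a)
    {
        a[0] = 1;           // a and b refer to the same array, so b[0] changes
        a = new int[10];    // a now refers to a different array; b is unaffected
        a[1] = 2;           // modifies only the new array
    }

    // Hypothetical helper that performs the call described above and returns b.
    public static int[] Run()
    {
        int[] b = new int[5];
        DoSomethingElse(b);
        return b;
    }

    public static void Main()
    {
        int[] b = Run();
        Console.WriteLine(b.Length);               // 5
        Console.WriteLine(string.Join(", ", b));   // 1, 0, 0, 0, 0
    }
}
```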

While the call-by-value mechanism is used by default, another mechanism, known as the call-by-reference mechanism, can be specified. When call-by-reference is used, the parameter passed in the calling code must be a variable, not a property or expression. Instead of copying the value of this variable into the corresponding parameter within the method, this mechanism causes the variable within the method to be an alias for the variable being passed. In other words, the two variables are simply different names for the same underlying variable (consequently, the types of the two variables must be identical). Thus, whatever changes are made to the parameter within the method are reflected in the variable passed to the method in the calling code as well.

One case in which this mechanism is useful is when we would like to have a method return more than one value. Suppose, for example, that we would like to find both the maximum and minimum values in a given int[ ]. A return statement can return only one value. Although there are ways of packaging more than one value together in one object, a cleaner way is to use two parameters that use the call-by-reference mechanism. The method can then change the values of these variables to the maximum and minimum values, and these values would be available to the calling code.

Specifically, we can define the method using out parameters:

private void MinimumAndMaximum(int[] array, out int min, out int max)

{

min = array[0];

max = array[0];

for (int i = 1; i < array.Length; i++)

{

if (array[i] < min)

{

min = array[i];

}

if (array[i] > max)

{

max = array[i];

}

}

}

The out keyword in the first line above specifies the call-by-reference mechanism for min and max. We could then call this code as follows, assuming a is an int[ ] containing at least one element:

int minimum;

int maximum;

MinimumAndMaximum(a, out minimum, out maximum);

When this code completes, minimum will contain the minimum element in a and maximum will contain the maximum element in a.

Warning

When using out parameters, it is important that the keyword out is placed prior to the variable name in both the method call and the method definition. If you omit this keyword in one of these places, then the parameter lists won’t match, and you’ll get a syntax error to this effect.

Tip

As a shorthand, you can declare an out parameter in the parameter list of the method call. Thus, the above example could be shortened to the following single line of code:

MinimumAndMaximum(a, out int minimum, out int maximum);

Note that out parameters do not need to be initialized prior to the method call in which they are used. However, they need to be assigned a value within the method to which they are passed. Another way of using the call-by-reference mechanism places a slightly different requirement on where the variables need to be initialized. This other way is to use ref parameters. The only difference between ref parameters and out parameters is that ref parameters must be initialized prior to being passed to the method. Thus, we would typically use an out parameter when we expect the method to assign it its first value, but we would use a ref parameter when we expect the method to change a value that the variable already has (the method may, in fact, use this value prior to changing it).

For example, suppose we want to define a method to swap the contents of two int variables. We use ref parameters to accomplish this:

private void Swap(ref int i, ref int j)

{

int temp = i;

i = j;

j = temp;

}

We could then call this method as follows:

int m = 10;

int n = 12;

Swap(ref m, ref n);

After this code is executed, m will contain 12 and n will contain 10.
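The difference in initialization requirements between out and ref parameters can be seen side by side in the following sketch. The methods Divide and AddTo are hypothetical examples, not part of the code above.

```csharp
using System;

public static class RefOutDemo
{
    // out: the method must assign quotient and remainder before returning.
    public static void Divide(int m, int n, out int quotient, out int remainder)
    {
        quotient = m / n;
        remainder = m % n;
    }

    // ref: the method reads the caller's current value and then changes it.
    public static void AddTo(ref int total, int amount)
    {
        total = total + amount;
    }

    public static void Main()
    {
        Divide(17, 5, out int q, out int r);   // q and r need no initial values
        Console.WriteLine($"{q} {r}");         // 3 2

        int sum = 10;                          // must be initialized before the call
        AddTo(ref sum, 5);
        Console.WriteLine(sum);                // 15
    }
}
```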

The foreach Statement


C# provides a foreach statement that is often useful for iterating through the elements of certain data structures. A foreach can be used when all of the following conditions hold:

- The data structure is a subtype of either IEnumerable or IEnumerable<T> for some type T.

- You do not need to know the locations in the data structure of the individual elements.

- You do not need to modify the data structure with this loop.

Warning

Many of the data structures provided to you in CIS 300, as well as many that you are to write yourself for this class, are not subtypes of either of the types mentioned in the first condition above. Consequently, we cannot use a foreach loop to iterate through any of these data structures. However, most of the data structures provided in the .NET Framework, as well as all arrays, are subtypes of one of these types.

For example, the string class is a subtype of both IEnumerable and IEnumerable<Char>. To see that this is the case, look in the documentation for the string class. In the “Implements” section, we see all of the interfaces implemented by string. Because string implements both of these interfaces, it is a subtype of each. We can therefore iterate through the elements (i.e., the characters) of a string using a foreach statement, provided we don’t need to know the location of each character in the string (because a string is immutable, we can’t change its contents).

Suppose, for example, that we want to find out how many times the letter ‘i’ occurs in a string s. Because we don’t need to know the locations of these occurrences, we can iterate through the characters with a foreach loop, as follows:

int count = 0;

foreach (char c in s)

{

if (c == 'i')

{

count++;

}

}

The foreach statement requires three pieces of information:

- The type of the elements in the data structure (char in the above example).

- The name of a new variable (c in the above example). The type of this variable will be the type of the elements in the data structure (i.e., char in the above example). It will take on the values of the elements as the loop iterates.

- Following the keyword in, the data structure being traversed (s in the above example).

The loop then iterates once for each element in the data structure (unless a statement like return or break causes it to terminate prematurely). On each iteration, the variable defined in the foreach statement stores one of the elements in the data structure. Thus, in the above example, c takes the value of a different character in s on each iteration. Note, however, that we have no access to the location containing c on any particular iteration - this is why we don’t use a foreach loop when we need to know the locations of the elements. Because c takes on the value of each character in s, we are able to count how many of them equal ‘i’.

Occasionally, it may not be obvious what type to use for the foreach loop variable. In such cases, if the data structure is a subtype of IEnumerable<T>, then the type should be whatever type is used for T. Otherwise, it is safe to use object. Note, however, that if the data structure is not a subtype of IEnumerable<T>, but you know that the elements are some specific subtype of object, you can use that type for the loop variable - the type will not be checked until the code is executed. For example, ListBox is a class that implements a GUI control displaying a list of elements. The elements in the ListBox are accessed via its Items property, which gets a data structure of type ListBox.ObjectCollection. Any object can be added to this data structure, but we often just add strings. ListBox.ObjectCollection is a subtype of IEnumerable; however, it is permissible to set up a foreach loop as follows:

foreach (string s in uxList.Items)

{

}

where uxList is a ListBox variable. As long as all of the elements in uxList.Items are strings, no exception will be thrown.
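The run-time type check can be demonstrated without a GUI. As an assumption made for this sketch, an ArrayList stands in for ListBox.ObjectCollection, since both implement only the non-generic IEnumerable; the method JoinAll is hypothetical.

```csharp
using System;
using System.Collections;

public static class ForeachCastDemo
{
    // Concatenates the elements, treating each one as a string.
    public static string JoinAll(IEnumerable items)
    {
        string result = "";
        foreach (string s in items)   // each element is cast to string at run time
        {
            result += s;
        }
        return result;
    }

    public static void Main()
    {
        ArrayList list = new ArrayList { "a", "b", "c" };
        Console.WriteLine(JoinAll(list));   // abc

        list.Add(42);   // not a string
        try
        {
            JoinAll(list);
        }
        catch (InvalidCastException)
        {
            Console.WriteLine("An element was not a string.");
        }
    }
}
```

The second call compiles without complaint; the mismatch is detected only when the loop reaches the element that is not a string.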

While the foreach statement provides a clean way to iterate through a data structure, it is important to keep in mind its limitations. First, it can’t even be used on data structures that aren’t subtypes of IEnumerable or IEnumerable<T>. Second, there are many cases, especially when iterating through arrays, where the processing we need to do on the elements depends on the locations of the elements. For example, consider the problem of determining whether two arrays contain the same elements in the same order. For each element of one array, we need to know if the element at the same location in the other array is the same. Because the locations are important, a foreach loop isn’t appropriate - we should use a for loop instead. Finally, a foreach should never be used to modify a data structure, as this causes unpredictable results.
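The array comparison mentioned above might be written with a for loop along the following lines. The method name SameElements is hypothetical, and int elements are assumed for simplicity.

```csharp
using System;

public static class ArrayCompareDemo
{
    // Returns whether a and b contain the same elements in the same order.
    public static bool SameElements(int[] a, int[] b)
    {
        if (a.Length != b.Length)
        {
            return false;
        }
        for (int i = 0; i < a.Length; i++)
        {
            if (a[i] != b[i])   // the location i is needed to index both arrays
            {
                return false;
            }
        }
        return true;
    }

    public static void Main()
    {
        Console.WriteLine(SameElements(new[] { 1, 2, 3 }, new[] { 1, 2, 3 }));   // True
        Console.WriteLine(SameElements(new[] { 1, 2, 3 }, new[] { 3, 2, 1 }));   // False
    }
}
```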

Even when a foreach would work, it is not always the best choice. For example, in order to determine whether a data structure contains a given element, we could iterate through the structure with a foreach loop and compare each element to the given element. While this would work, most data structures provide methods for determining whether they contain a given element. These methods are often far more efficient than using a foreach loop.

Enumerators


As we saw in the previous section, in order for a data structure to support a foreach loop, it must be a subtype of either IEnumerable or IEnumerable<T>, where T is the type of the elements in the data structure. Thus, because Dictionary<TKey, TValue> is a subtype of IEnumerable<KeyValuePair<TKey, TValue>>, we can use a foreach loop to iterate through the key-value pairs that it stores. Likewise, because its Keys and Values properties get objects that are subtypes of IEnumerable<TKey> and IEnumerable<TValue>, respectively, foreach loops may be used to iterate through these objects as well, in order to process all the keys or all the values stored in the dictionary. IEnumerable and IEnumerable<T> are interfaces; hence, we must define any subtypes so that they implement these interfaces. In this section, we will show how to implement the IEnumerable<T> interface to support a foreach loop.

The IEnumerable<T> interface requires two methods:

- public IEnumerator<T> GetEnumerator()

- IEnumerator IEnumerable.GetEnumerator()

The latter method is required only because IEnumerable<T> is a subtype of IEnumerable, and that interface requires a GetEnumerator method that returns a non-generic IEnumerator. Both of these methods should return the same object; hence, because IEnumerator<T> is also a subtype of IEnumerator, this method can simply call the first method:

IEnumerator IEnumerable.GetEnumerator()

{

return GetEnumerator();

}

The public GetEnumerator method returns an IEnumerator<T>. In order to get instances of this interface, we could define a class that implements it; however, C# provides a simpler way to define a subtype of this interface, or, when needed, the IEnumerable<T> interface.

Defining such an enumerator is as simple as writing code to iterate through the elements of the data structure. As each element is reached, it is enumerated via a yield return statement. For example, suppose a dictionary implementation uses a List<KeyValuePair<TKey, TValue>> called _elements to store its key-value pairs. We can then define its GetEnumerator method as follows:

public IEnumerator<KeyValuePair<TKey, TValue>> GetEnumerator()

{

foreach (KeyValuePair<TKey, TValue> p in _elements)

{

yield return p;

}

}

Suppose user code contains a Dictionary<string, int> called d and a foreach loop structured as follows:

foreach (KeyValuePair<string, int> x in d)

{

}

Then the GetEnumerator method is executed until the yield return is reached. The state of this method is then saved, and the value p is used as the value for x in the first iteration of the foreach in the user code. When this loop reaches its second iteration, the GetEnumerator method resumes its execution until it reaches the yield return a second time, and again, the current value of p is used as the value of x in the second iteration of the loop in user code. This continues until the GetEnumerator method finishes; at this point, the loop in user code terminates.
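The interleaving of the enumerator code and the loop body can be made visible by recording the order in which statements run. In the sketch below, the method Numbers is a hypothetical enumerator that returns an IEnumerable<int> rather than an IEnumerator<T>, so that it can be used directly in a foreach; each step is added to a log.

```csharp
using System;
using System.Collections.Generic;

public static class YieldDemo
{
    // Hypothetical enumerator method that records when its code runs.
    public static IEnumerable<int> Numbers(List<string> log)
    {
        for (int i = 1; i <= 2; i++)
        {
            log.Add($"yielding {i}");
            yield return i;   // state is saved here until the next iteration
        }
        log.Add("finished");
    }

    // Runs a foreach over Numbers and returns the recorded order of events.
    public static List<string> Trace()
    {
        List<string> log = new();
        foreach (int x in Numbers(log))
        {
            log.Add($"loop body {x}");
        }
        return log;
    }

    public static void Main()
    {
        foreach (string line in Trace())
        {
            Console.WriteLine(line);
        }
    }
}
```

The log alternates between the two pieces of code: yielding 1, loop body 1, yielding 2, loop body 2, finished.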

Before continuing, we should mention that there is a simpler way of implementing the public GetEnumerator method in the above example. Because List<T> implements IEnumerable<T>, we can simply use its enumerator:

public IEnumerator<KeyValuePair<TKey, TValue>> GetEnumerator()

{

return _elements.GetEnumerator();

}

However, the first solution illustrates a more general technique that can be used when we don’t have the desired enumerator already available. For instance, continuing the above example, suppose we wish to define a Keys property to get an IEnumerable<TKey> that iterates through the keys in the dictionary. Because the dictionary now supports a foreach loop, we can define this code to iterate through the key-value pairs in the dictionary, rather than the key-value pairs stored in the List<KeyValuePair<TKey, TValue>>:

public IEnumerable<TKey> Keys

{

get

{

foreach (KeyValuePair<TKey, TValue> p in this)

{

yield return p.Key;

}

}

}

The above code is more maintainable than iterating through the List<KeyValuePair<TKey, TValue>> as it doesn’t depend on the specific implementation of the dictionary.

While this technique usually works best with iterative code, it can also be used with recursion, although the translation usually ends up being less direct and less efficient. Suppose, for example, our dictionary were implemented as in “Binary Search Trees”, where a binary search tree is used. The idea is to adapt the inorder traversal algorithm. However, we can’t use this directly to implement a recursive version of the GetEnumerator method because this method does not take any parameters; hence, we can’t apply it to arbitrary subtrees. Instead, we need a separate recursive method that takes a BinaryTreeNode<KeyValuePair<TKey, TValue>> as its parameter and returns the enumerator we need. Another problem, though, is that the recursive calls will no longer do the processing that needs to be done on the children - they will simply return enumerators. We therefore need to iterate through each of these enumerators to include their elements in the enumerator we are returning:

private static IEnumerable<KeyValuePair<TKey, TValue>>

GetEnumerable(BinaryTreeNode<KeyValuePair<TKey, TValue>>? t)

{

if (t != null)

{

foreach (KeyValuePair<TKey, TValue> p in GetEnumerable(t.LeftChild))

{

yield return p;

}

yield return t.Data;

foreach (KeyValuePair<TKey, TValue> p in GetEnumerable(t.RightChild))

{

yield return p;

}

}

}

Note that we’ve made the return type of this method IEnumerable<KeyValuePair<TKey, TValue>> because we need to use a foreach loop on the result of the recursive calls. Then because any instance of this type must have a GetEnumerator method, we can implement the GetEnumerator method for the dictionary as follows:

public IEnumerator<KeyValuePair<TKey, TValue>> GetEnumerator()

{

return GetEnumerable(_elements).GetEnumerator();

}

In transforming the inorder traversal into the above code, we have introduced some extra loops. These loops lead to less efficient code. Specifically, if the binary search tree is an AVL tree or other balanced binary tree, the time to iterate through this enumerator is in $ O(n \lg n) $, where $ n $ is the number of nodes in the tree. The inorder traversal, by contrast, runs in $ O(n) $ time. In order to achieve this running time with an enumerator, we need to translate the inorder traversal to iterative code using a stack. However, this code isn’t easy to understand:

public IEnumerator<KeyValuePair<TKey, TValue>> GetEnumerator()

{

Stack<BinaryTreeNode<KeyValuePair<TKey, TValue>>> s = new();

BinaryTreeNode<KeyValuePair<TKey, TValue>>? t = _elements;

while (t != null || s.Count > 0)

{

while (t != null)

{

s.Push(t);

t = t.LeftChild;

}

t = s.Pop();

yield return t.Data;

t = t.RightChild;

}

}

The switch Statement


The switch statement provides an alternative to the if statement for certain contexts. It is used when different cases must be handled based on the value of an expression that can have only a few possible results.

For example, suppose we want to display a MessageBox containing “Abort”, “Retry”, and “Ignore” buttons. The user can respond in only three ways, and we need different code in each case. Assuming message and caption are strings, we can use the following code:

switch (MessageBox.Show(message, caption, MessageBoxButtons.AbortRetryIgnore))

{

case DialogResult.Abort:

// Code for the "Abort" button

break;

case DialogResult.Retry:

// Code for the "Retry" button

break;

case DialogResult.Ignore:

// Code for the "Ignore" button

break;

}

The expression to determine the case (in this example, the call to MessageBox.Show) is placed within the parentheses following the keyword switch. Because the value returned by this method is of the enumeration type DialogResult, it will be one of a small set of values; in fact, given the buttons placed on the MessageBox, this value must be one of three possibilities. These three possible results are listed in three case labels. Each of these case labels must begin with the keyword case, followed by a constant expression (i.e., one that can be fully evaluated by the compiler, as explained in the section, “Constant Fields”), followed by a colon (:). When the expression in the switch statement is evaluated, control jumps to the code following the case label containing the resulting value. For example, if the result of the call to MessageBox.Show is DialogResult.Retry, control jumps to the code following the second case label. If there is no case label containing the value of the expression, control jumps to the code following the switch statement. The code following each case label must be terminated by a statement like break or return, which causes control to jump elsewhere. (This arcane syntax is a holdover from C, except that C allows control to continue into the next case.) A break statement within a switch statement causes control to jump to the code following the switch statement.

The last case in a switch statement may optionally have the case label:

default:

This case label is analogous to an else on an if statement in that if the value of the switch expression is not found, control will jump to the code following the default case label. While this case is not required in a switch statement, there are many instances when it is useful to include one, even if you can explicitly enumerate all of the cases you expect. For example, if each case ends by returning a value, but no default case is included, the compiler will detect that not all paths return a value, as any case that is not enumerated will cause control to jump past the entire switch statement. There are various ways to avoid this problem:

- Make the last case you are handling the default case.

- Add a default case that explicitly throws an exception to handle any cases that you don’t expect.

- Add either a return or a throw following the switch statement (the first two options are preferable to this one).
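The second option in the list above can be sketched as follows. The enumeration Choice is a hypothetical stand-in for DialogResult, and the method Describe and its return strings are assumptions made for illustration.

```csharp
using System;

public static class SwitchDemo
{
    // Hypothetical enumeration standing in for DialogResult.
    public enum Choice { Abort, Retry, Ignore }

    public static string Describe(Choice c)
    {
        switch (c)
        {
            case Choice.Abort:
                return "stop";
            case Choice.Retry:
                return "try again";
            case Choice.Ignore:
                return "continue";
            default:
                // Without this case, the compiler reports that not all
                // code paths return a value.
                throw new ArgumentOutOfRangeException(nameof(c));
        }
    }

    public static void Main()
    {
        Console.WriteLine(Describe(Choice.Retry));   // try again
    }
}
```

The default case is reachable even though all three named values are handled, because any int can be cast to an enumeration type.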

It is legal to have more than one case label on the same code block. For example, if i is an int variable, we can use the following code:

switch (i)

{

case 1:

// Code for i = 1

break;

case 2:

case 3:

case 5:

case 7:

// Code for i = 2, 3, 5, or 7

break;

case 4:

case 6:

case 8:

// Code for i = 4, 6, or 8

break;

default:

// Code for all other values of i

break;

}

If the value of the switch expression matches any one of the case labels on a block, control jumps to that block. The same case label may not appear more than once.

The Remainder Operator


The remainder operator % computes the remainder that results when one number is divided by another. Specifically, suppose m and n are of some numeric type, where n ≠ 0. We can then define a quotient q and a remainder r as the unique values such that:

- qn + r = m;

- q is an integer;

- |qn| ≤ |m|; and

- |r| < |n|.

Then m % n gives r, and we can compute q by:

q = (m - r) / n

Another way to think about m % n is through the following algorithm to compute it:

- Compute |m| / |n|, and remove any fractional part.

- If m and n have the same sign, let q be the above result; otherwise, let q be the negative of the above result.

- m % n is m - qn.

Examples:

- 7 % 3 = 1

- -9 % 5 = -4

- 8 % -3 = 2

- -10 % -4 = -2

- 6.4 % 1.3 = 1.2
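The three-step algorithm above can be sketched in C# for int operands as shown below. The class and method names (RemainderDemo, RemainderBySteps) are hypothetical, and the sketch assumes that n is nonzero and that neither operand is int.MinValue (whose absolute value cannot be represented as an int).

```csharp
using System;

public static class RemainderDemo
{
    // Computes m % n by following the three steps of the algorithm.
    // Assumes n != 0 and that neither m nor n is int.MinValue.
    public static int RemainderBySteps(int m, int n)
    {
        // Step 1: compute |m| / |n|; integer division removes any fractional part.
        int magnitude = Math.Abs(m) / Math.Abs(n);

        // Step 2: q is positive if m and n have the same sign, negative otherwise.
        bool sameSign = (m < 0) == (n < 0);
        int q = sameSign ? magnitude : -magnitude;

        // Step 3: the remainder is m - qn.
        return m - q * n;
    }

    public static void Main()
    {
        Console.WriteLine(RemainderBySteps(7, 3));    // prints 1
        Console.WriteLine(RemainderBySteps(-9, 5));   // prints -4
        Console.WriteLine(RemainderBySteps(8, -3));   // prints 2
        Console.WriteLine(RemainderBySteps(-10, -4)); // prints -2
    }
}
```

In each of these cases the result agrees with the built-in % operator. Note that the sign of a nonzero result matches the sign of m, not the sign of n.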

Subsections of Visual Studio

Installing Visual Studio

Visual Studio is available on the machines we use for CIS 300 labs, as well as on machines in other lab classrooms. This edition of Visual Studio is also freely available for installation on your own PC for your personal and classroom use. This section provides instructions for obtaining this software from Microsoft and installing it on your PC. The labs currently use Visual Studio 2022, though I encourage you to install the latest version available on your own machine (currently Visual Studio 2026).

Tip

Visual Studio can also be accessed via a remote desktop server. However, this server can only be accessed either from on-campus or through the campus VPN. See https://www.k-state.edu/it/cybersecurity/vpn// for details on the campus VPN. For more details on the remote desktop server, see the CS Department Support Wiki (this page can be accessed only from on-campus or through the campus VPN).

The enterprise edition of Visual Studio is also available through the Azure Dev Tools for Teaching program. See the CS Department Support Wiki (this page can be accessed only from on-campus or through the campus VPN) for details. A direct link to the Azure Dev Tools for Teaching portal is https://azureforeducation.microsoft.com/devtools.

While Microsoft also produces a version of Visual Studio for Mac, we recommend the Windows version. If you don’t have a Microsoft operating system, you can obtain one for free from the Azure Portal — see the CS Department Support Wiki (accessible only from on-campus or through the campus VPN) for details.

You will need to install the operating system either on a separate bootable partition or using an emulator such as VMware Fusion. VMware Fusion is also available for free through the VMware Academic Program; see the CS Department Support Wiki for details.

To download Visual Studio, go to Microsoft’s Visual Studio Site, and click the “Download Visual Studio” button. This should normally begin downloading an installation file; if not, click the “click here to retry” link near the top of the page. When the download has completed, run the file you downloaded. This will start the installation process.

As the installation is beginning, you will be shown a window asking for the components to be installed. Click the “Workloads” tab in this window, and select “.NET desktop development” (under “Desktop & Mobile”). You can select other workloads or components if you wish, but this workload will install all you need for CIS 300.

The first time you run Visual Studio, you will be asked to sign in to your Microsoft account. You can either do this or skip it by clicking, “Not now, maybe later.” You will then be shown a window resembling the following:

Next to “Development Settings:”, select “Visual C#”. You can select whichever color scheme you prefer. At this point, Visual Studio should be fully installed and ready to use.

Git Repositories

In CIS 300, start code for each assignment will be distributed via a Git repository. Git is a source control system integrated into Visual Studio 2022. Source control systems are powerful mechanisms for teams of programmers and other collaborators to manage multiple copies of various source files and other documents that all collaborators may be modifying. While CIS 300 does not involve teamwork, source control provides a convenient mechanism for distribution of code and submission of assignment solutions. In addition, as we will discuss later, source control provides mechanisms for accessing your code on multiple machines and for “checkpointing” your code.

At the heart of Git is the concept of a Git repository. A Git repository is essentially a folder on your local machine. As you make changes within this folder, Git tracks these changes. From time to time, you will commit these changes. If you see that you have gone down a wrong path, you can revert to an earlier commit. Git repositories may be hosted on a server such as GitHub. Various users may have copies of a Git repository on their own local machines. Git provides tools for synchronizing local repositories with the repository hosted on the server in a consistent way.

Note

The above description is a bit of an oversimplification, as the folder comprising a local copy of a repository typically contains some files and/or folders that are not part of the repository. One example of such “extra” files might be executables that are generated whenever source code within the repository is compiled. However, when Visual Studio is managing a Git repository, it does a good job of including within the repository any files the user places within the folder comprising the repository.

For each lab and homework assignment in CIS 300, you will be provided a URL that will create your own private Git repository on GitHub. The only people who will have access to your GitHub repositories are you, the CIS 300 instructors, and the CIS 300 lab assistants. These repositories will initially contain start code and perhaps data files for the respective assignments. You will copy the repository to your local machine by cloning it. When you are finished with the assignment, you will push the repository back to GitHub and submit its URL for grading. In what follows, we will explain how to create and clone a GitHub repository. Later in this chapter, we will explain how to commit changes, push a repository, and use some of the other features of Git.

Before you can access GitHub, you will need a GitHub account. If you don’t already have one, you can sign up for one at github.com. At some point after you have completed the sign-up process, GitHub will send you an email asking you to verify the email address you provided during the sign-up process. After you have done this, you will be able to set up GitHub repositories.

For each assignment in CIS 300, you will be given an invitation URL, such as:

Over the next few sections, we will be working through a simple example based on the above invitation link. If you wish to work through this example, click on the above link. You may be asked to sign in to GitHub, but once you are signed in, you will be shown a page asking you to accept the assignment. Clicking the “Accept this assignment” button will create a GitHub repository for you. You will be given a link that will take you to that repository. From that page you will be able to view all of the files in the repository.

In order to be able to use this repository, you will need to clone it to your local machine. To do this, first open Visual Studio 2022, and click on the “Clone a Repository” button on the right. In your web browser, navigate to the GitHub repository that you wish to clone, and click on the “Code” button. This will display a URL; click on the button to its right to copy this URL to your clipboard. Then go back to Visual Studio and paste this URL into the text box labeled, “Repository location”. In the text box below that, fill in a new folder you want to use for this repository on your machine, then click the “Clone” button (if you are asked to sign in to GitHub, click the link to sign in through your web browser). This will copy the Git repository from GitHub into the folder you selected, and open the solution it contains.

The following sections give an overview of how to use Visual Studio to edit and debug an application, as well as how to use Git within Visual Studio to maintain the local Git repository and synchronize it with the GitHub repository.

Visual Studio Solutions

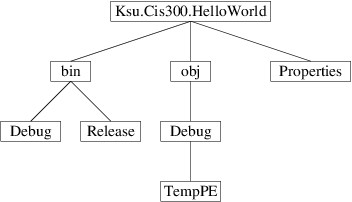

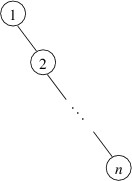

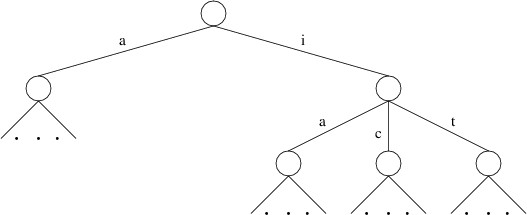

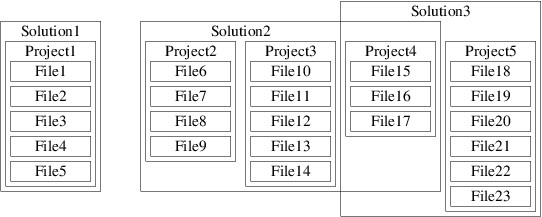

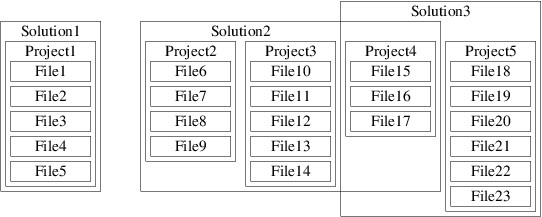

All code developed within Visual Studio 2022 must belong to one or more solutions. When you are using Visual Studio to develop a program, you will be working with a single solution. A solution will contain one or more projects. Each of these projects may belong to more than one solution. Each project typically contains several files, including source code files. Each file will typically belong to only one project. The following figure illustrates some of the possible relationships between solutions, projects, and files.

Note that in the above figure, Project4 is contained in both Solution2 and Solution3. In this section, we will focus on solutions that contain exactly one project, which in turn belongs to no other solutions (e.g., Solution1 in the above figure).

Whenever you open a solution in Visual Studio 2022, the Solution Explorer (which you can always find on the “View” menu) will give you a view of the structure of your solution; for example, opening the solution in the repository given in the previous section may result in the following being shown in the Solution Explorer:

If you see the above, you will need to change to the Solution view, which you can get by double-clicking the line that ends in “.sln”. This will give you the following view:

Warning

You ordinarily will not want to use Folder view, as this will cause files to be edited without any syntax or consistency checking. As a result, you can end up with a solution that is unusable. If your Solution Explorer ever looks like this:

(note the indication “Folder View” at the top and the absence of any boldface line), then it is in Folder view. To return to Solution view, click the icon indicated by the arrow in the above figure. This will return the Solution Explorer to the initial view shown above, where you can double-click the solution to select Solution view.

If you click on the small triangle to the left of

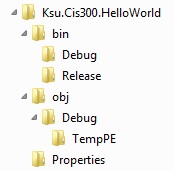

“Ksu.Cis300.HelloWorld”, you will get a more-detailed view:

Near the top, just under the search box, is the name of the solution with an indication of how many projects it contains. Listed under the name of the solution is each project, together with the various components of the project. One of the projects is always shown in boldface. The boldface indicates that this project is the startup project; i.e., it is the project that the debugger will attempt to execute whenever it is invoked (for more details, see the section, “The Debugger”).

The project components having a suffix of “.cs” are C# source code files. When a Windows Forms App is created, its project will contain the following three source code files:

Form1.cs: This file contains code that you will write in order to implement the main GUI for the application. It will be discussed in more detail in “The Code Window”.

Form1.Designer.cs: You will need to click the triangle to the left of “Form1.cs” in the Solution Explorer in order to reveal this file name. This contains automatically-generated code that completes the definition of the main GUI. You will build this code indirectly by laying out the graphical components of the GUI in the design window (see the section, “The Design Window” for more details). Ordinarily, you will not need to look at the contents of this file.

Program.cs: This file will contain something like the following:

namespace Ksu.Cis300.HelloWorld

{

internal static class Program

{

/// <summary>

/// The main entry point for the application.

/// </summary>

[STAThread]

static void Main()

{

// To customize application configuration such as set high DPI settings or default font,

// see https://aka.ms/applicationconfiguration.

ApplicationConfiguration.Initialize();

Application.Run(new Form1());

}

}

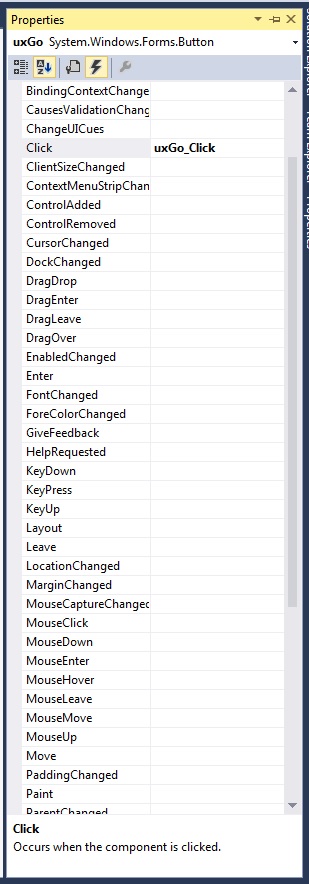

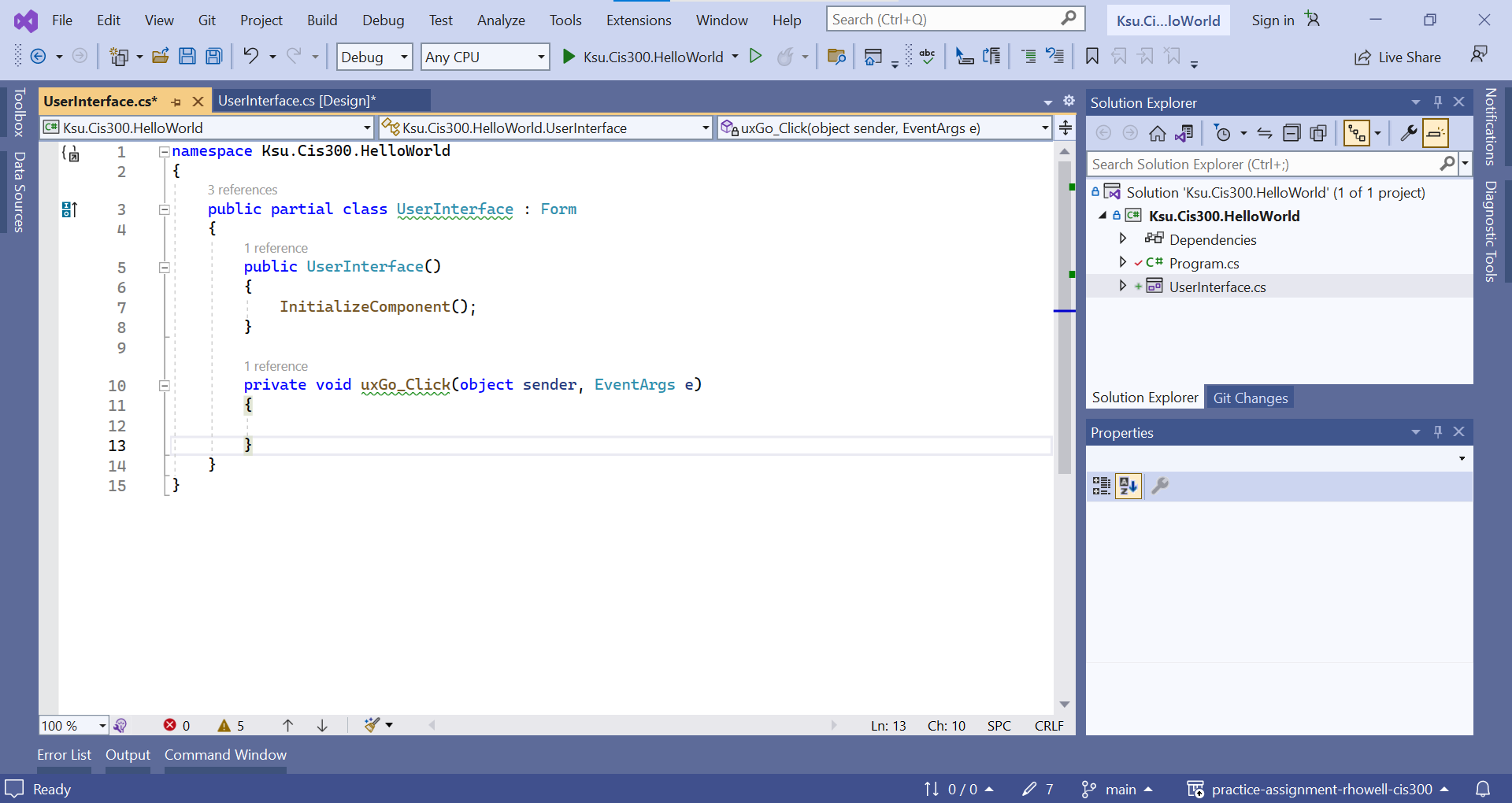

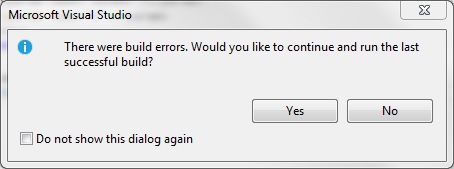

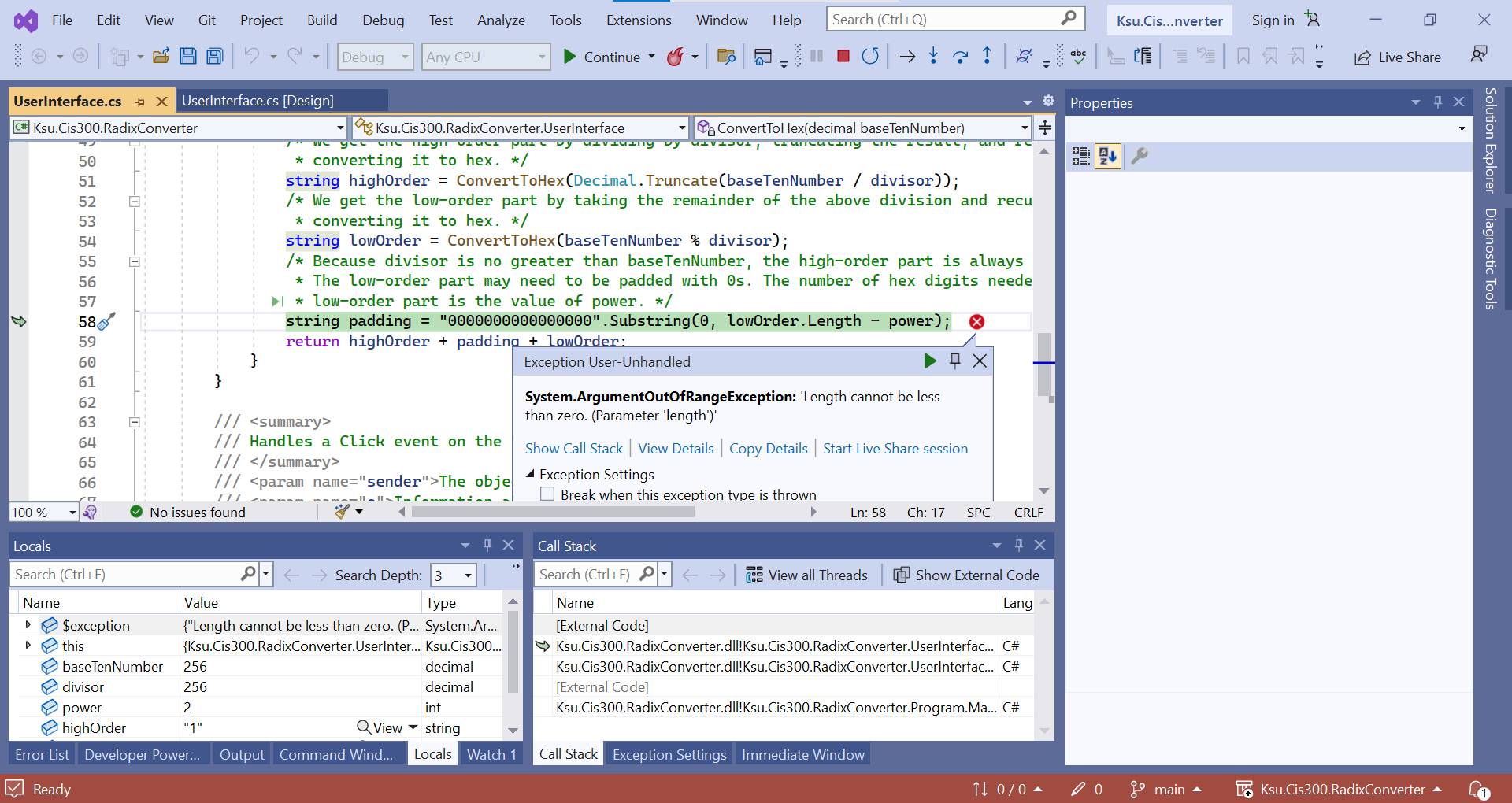

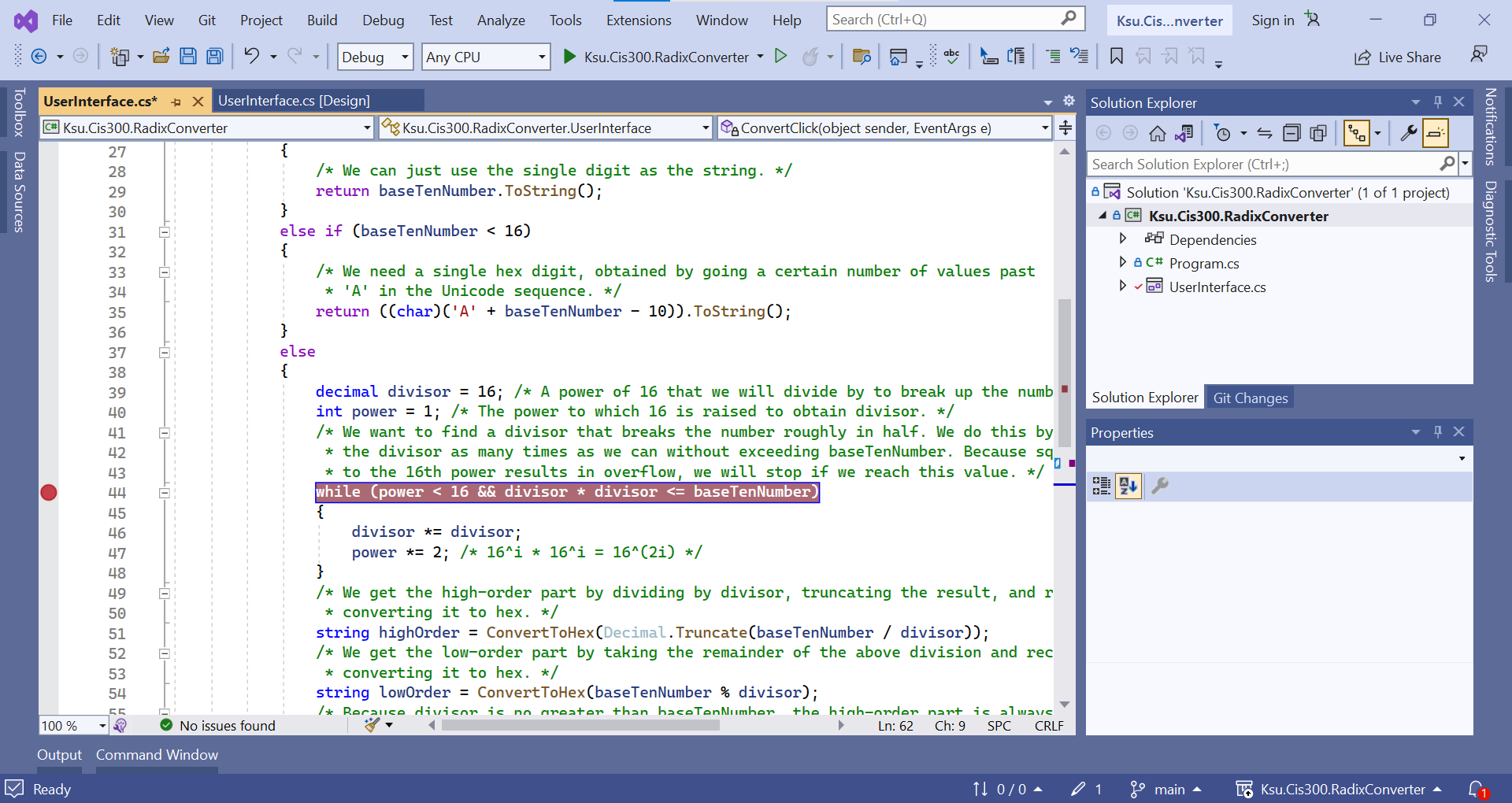

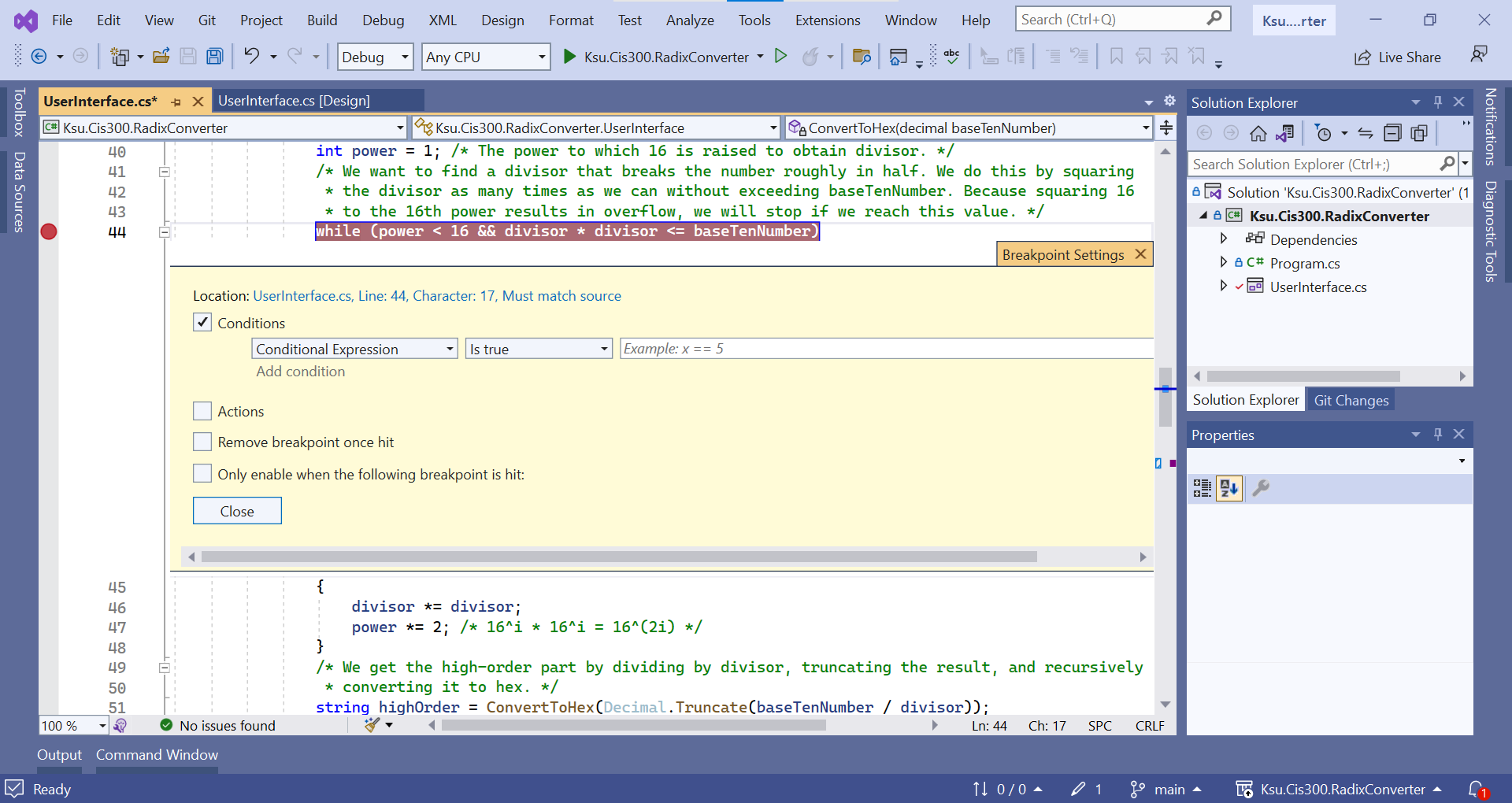

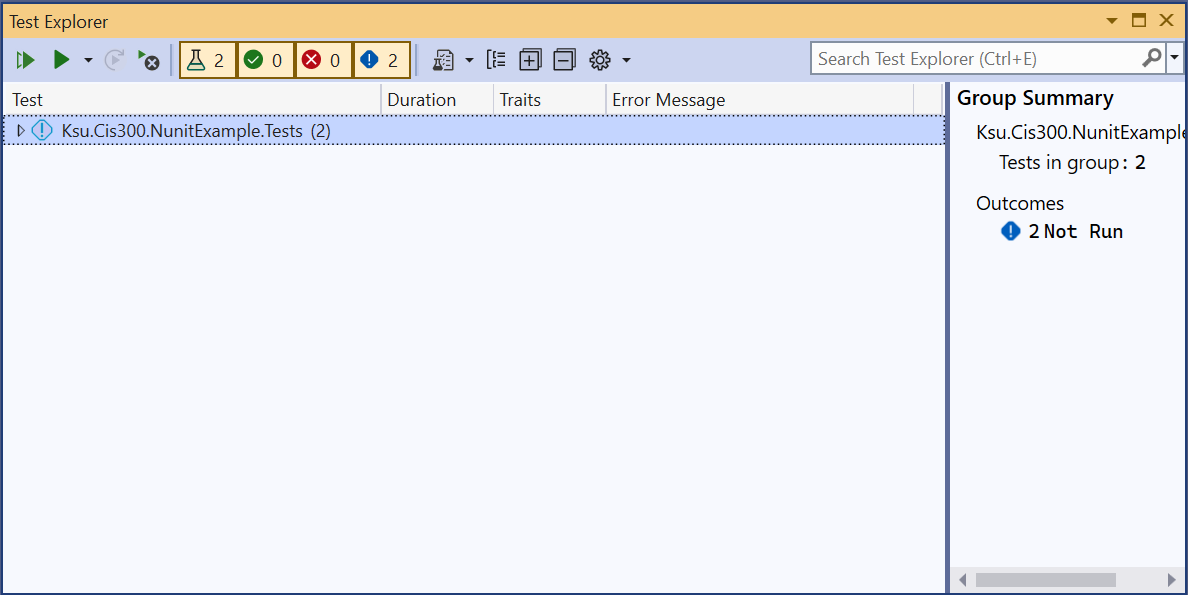

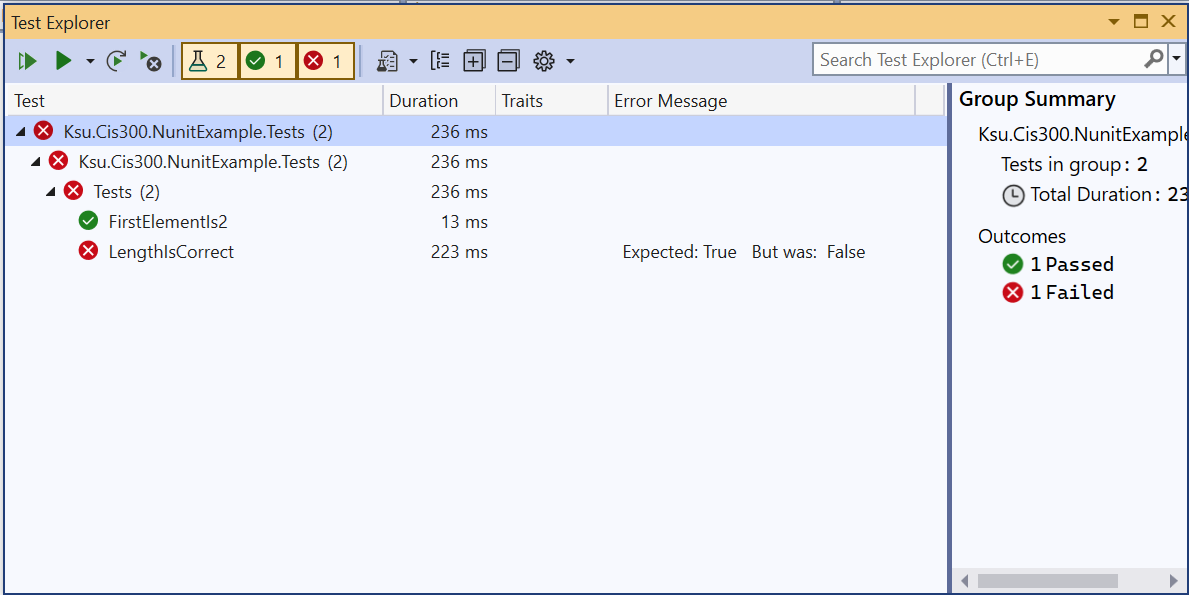

}