Subsections of Getting Started

GitHub account

First, you will need to create a GitHub account here. If you already have one, you can use your existing account.

Sireum Logika

In CIS 301, we will use a tool called Logika, which is a verifier and a proof checker for propositional, predicate, and programming logic. You will need to install the VS Code-based Sireum IVE (Integrated Verification Environment), which contains Logika.

Getting Installer Script

Go here under “For CIS 301 Spring 2026” to install Logika.

Select either “Windows” or “macOS/Linux”. The instructions below should update based on your selection. Copy the command listed for your operating system.

Installing and Running Sireum Logika - Windows

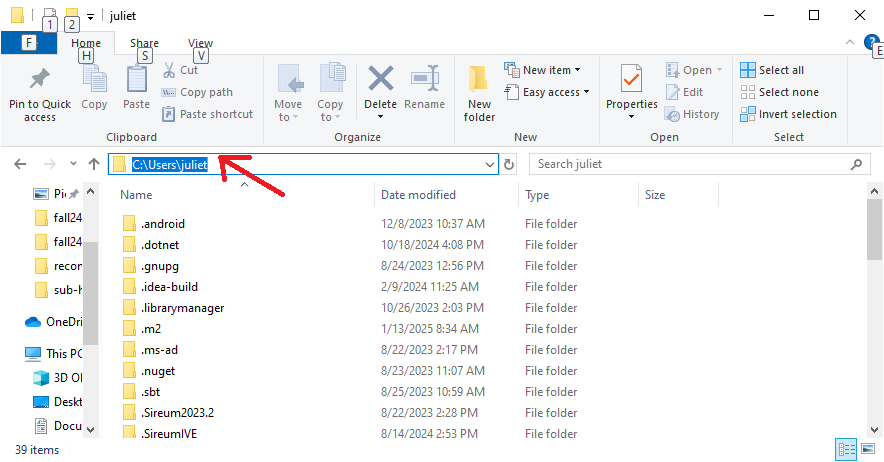

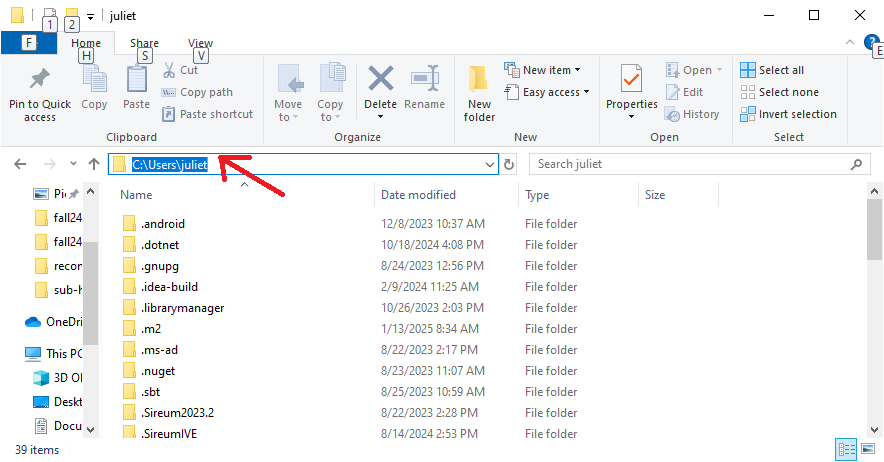

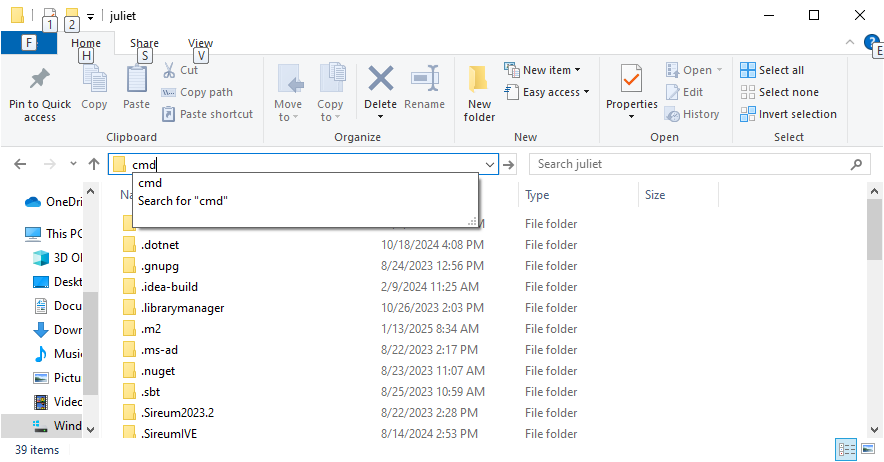

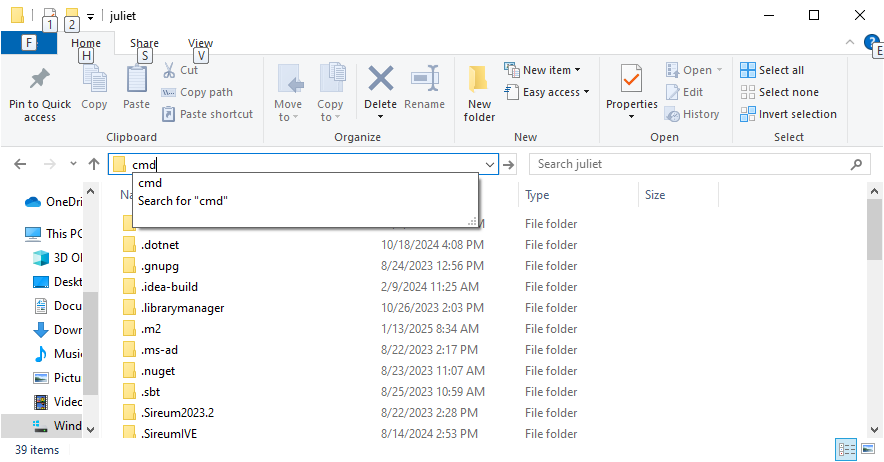

For Windows users, open a File Explorer and navigate to C:\Users\<yourAccountName>\Applications. Select the text in the address bar like this:

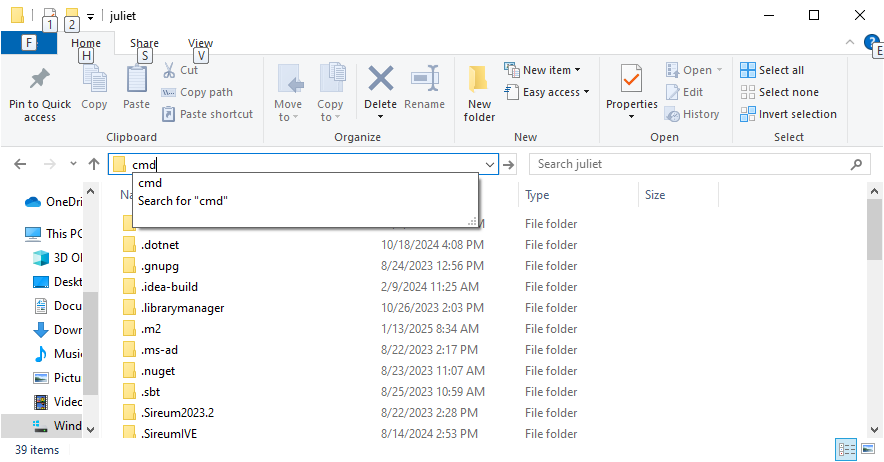

Type “cmd” to overwrite the selected text:

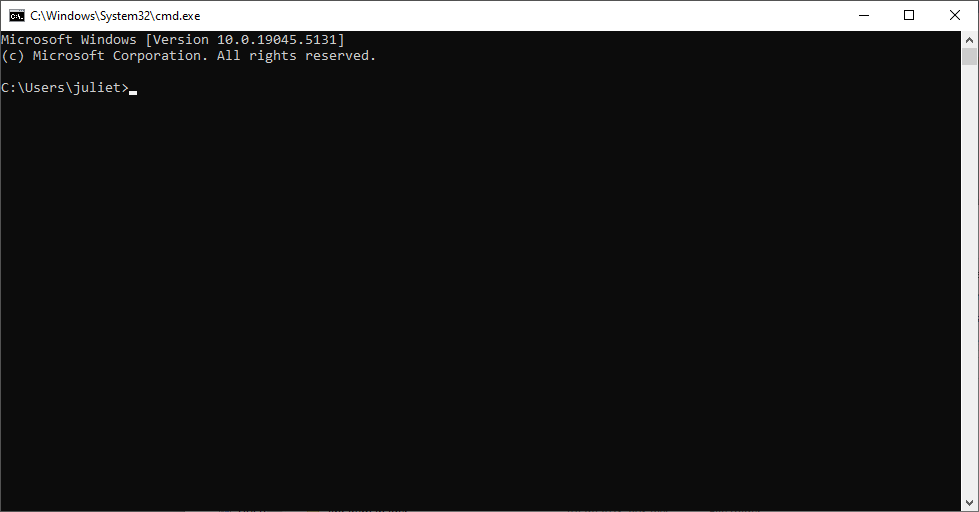

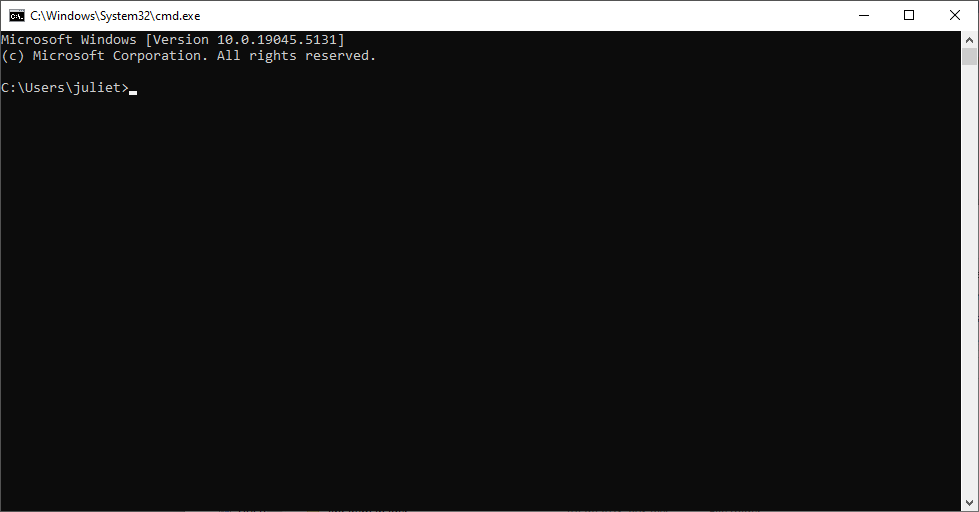

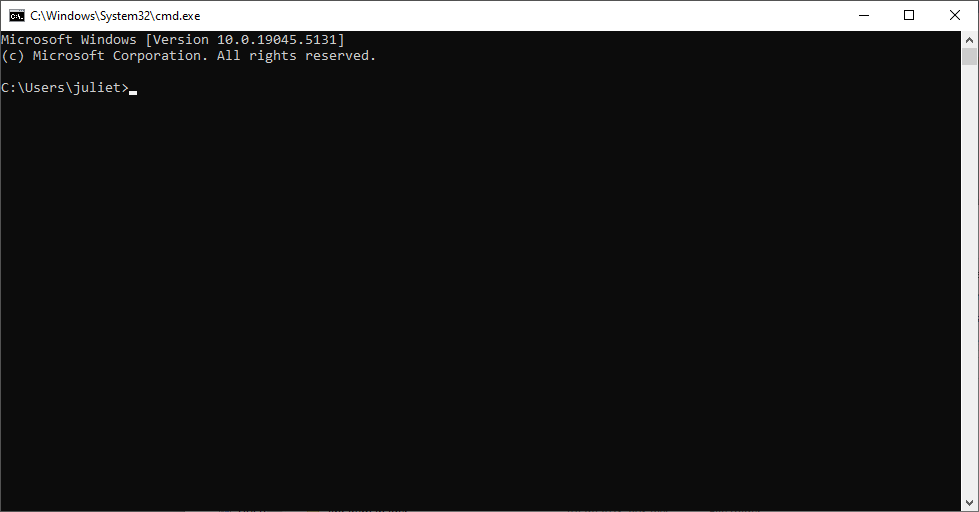

And hit Enter. It should open up a command prompt that is already in your C:\Users\<yourAccountName>\Applications folder:

In the command prompt, paste the installer command you copied in the previous step. You should immediately see feedback that your computer is fetching Sireum Logika.

Once the installation is finished, open a file explorer and navigate to C:\Users\<yourAccountName>\Applications\Sireum\bin\win\vscodium. In that folder, you should see the application “CodeIVE.exe”. Double-click that application to run it (if you see a popup that asks if you want to allow access to your computer, go ahead and choose that you want to do so).

You may find it handy to pin the “CodeIVE.exe” application to your taskbar. You can do so by right-clicking the “CodeIVE.exe” file and selecting “Pin to taskbar”.

Installing and Running Sireum Logika - Mac

For Mac users, open a terminal window and paste the command you copied in the previous step. You should immediately see feedback that your computer is fetching Sireum Logika.

Once the installation is finished, go to the finder and search for “CodeIVE”. You should see the application “CodeIVE.exe” - if you run it, it will open Sireum Logika.

How to Start Homework Assignments

To start a homework assignment (or to clone any existing repository, including homework solutions and lecture examples), first:

- Click to “accept the assignment” from the link in Canvas (if you are cloning a homework assignment or solution).

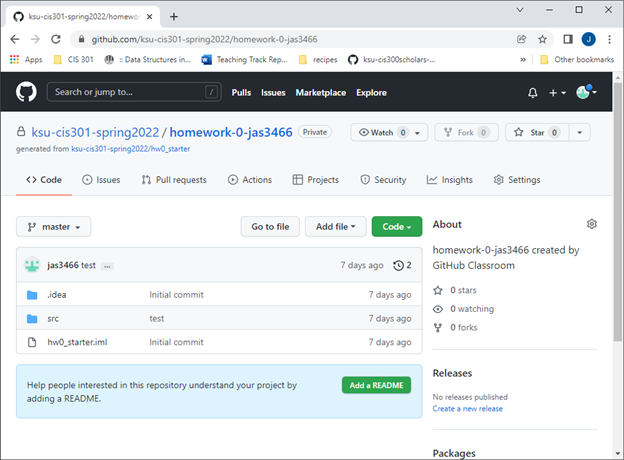

- Go to the URL created for your assignment (or to the URL of the repository you want to clone).

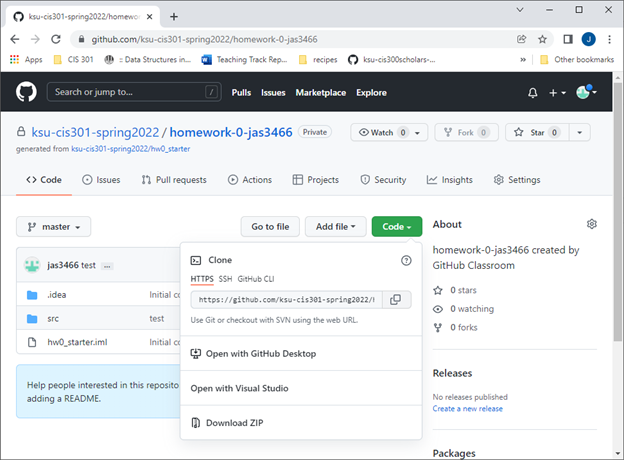

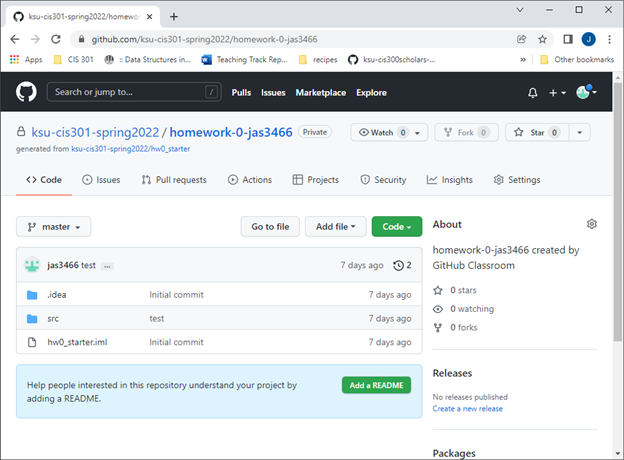

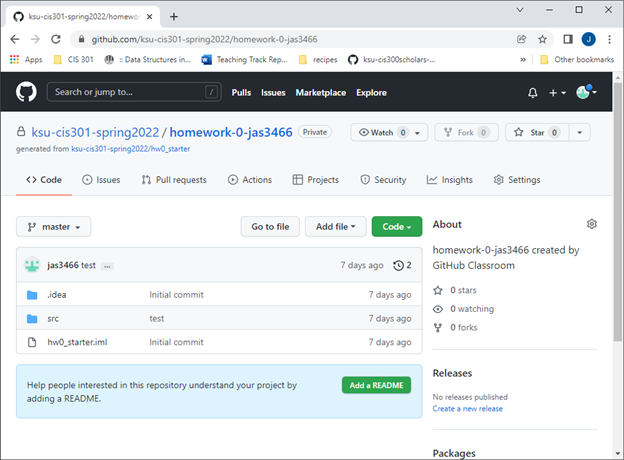

- You should be looking at a website that looks something like this:

Get repo URL

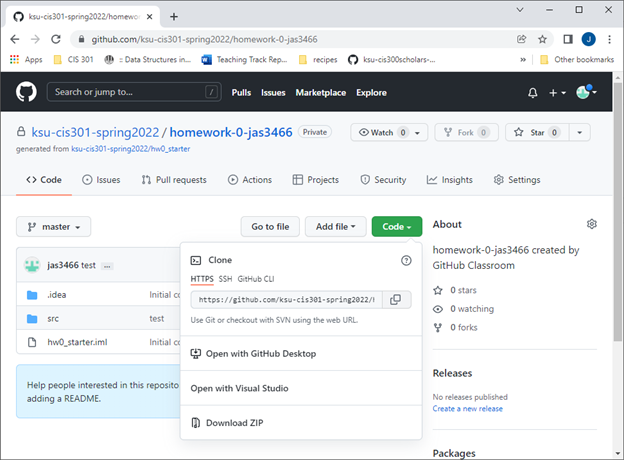

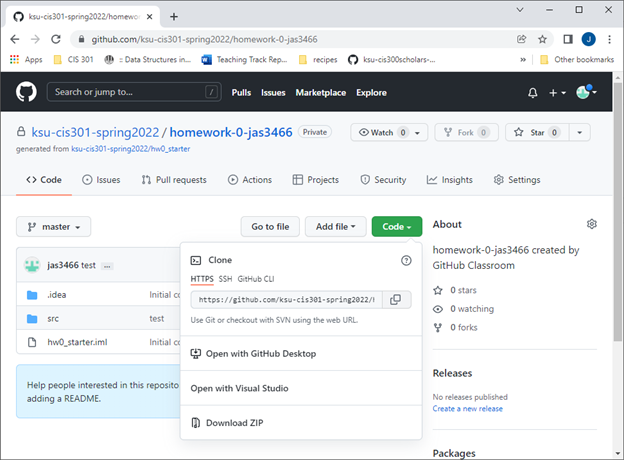

Click the Green Code button, so that you see something like this:

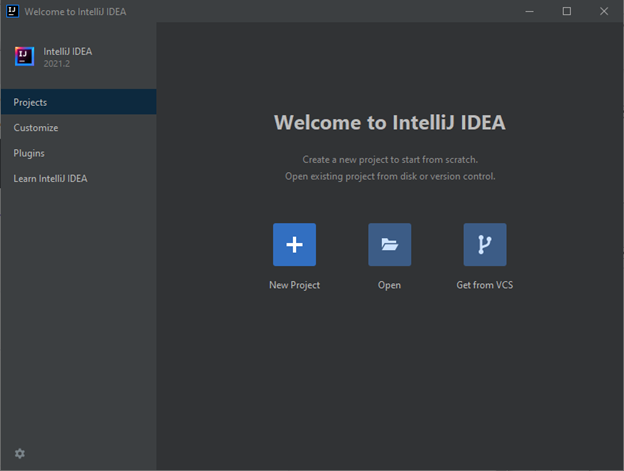

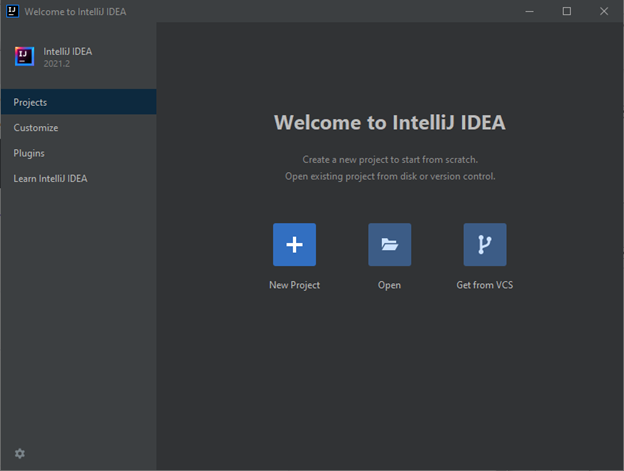

Next, open Sireum VS Codium (by running the CodeIVE application). You should see:

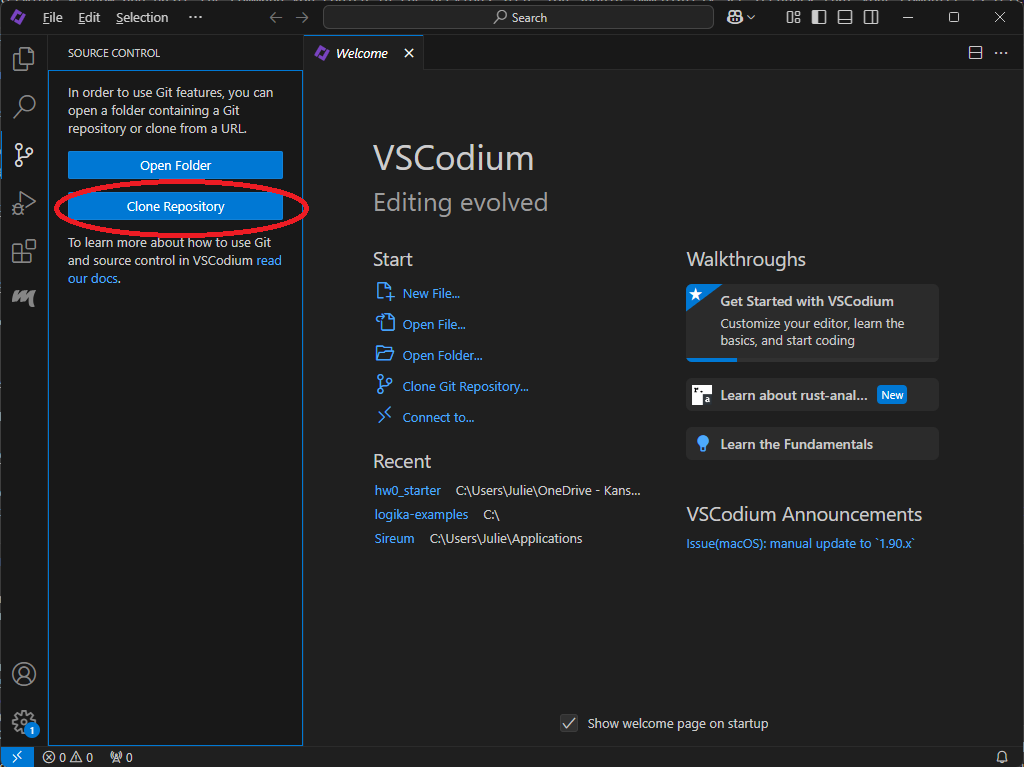

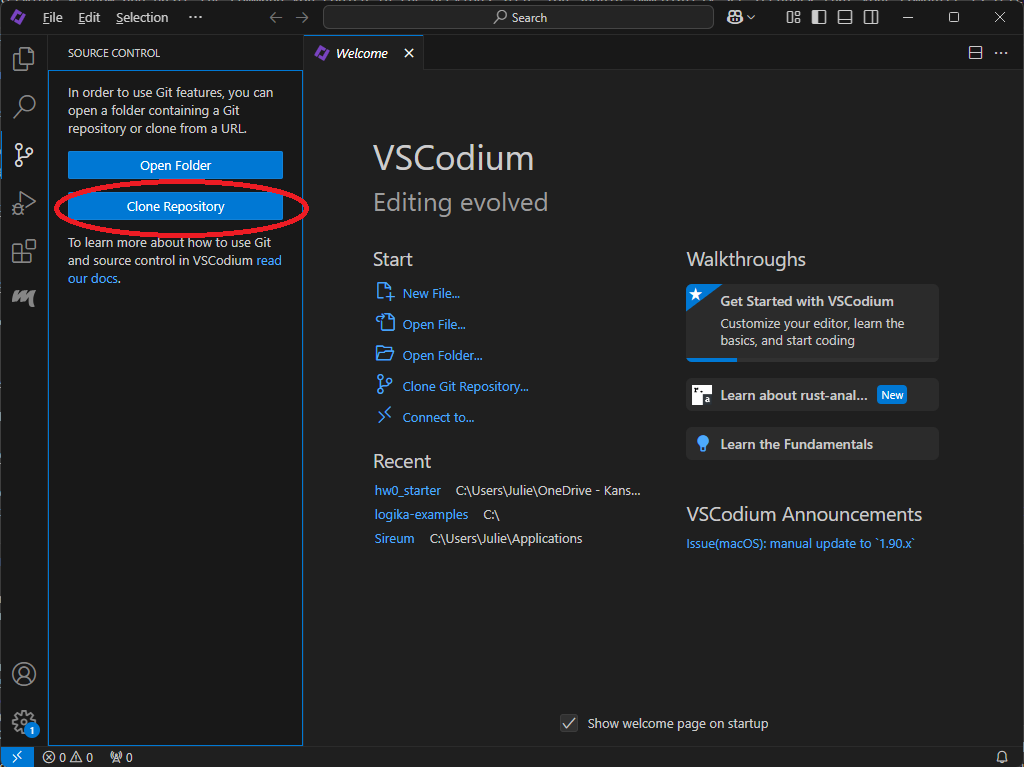

Next, click the third icon in the column on the left side (the one that looks like a circuit and says “Source Control” when you hover over it). You should see:

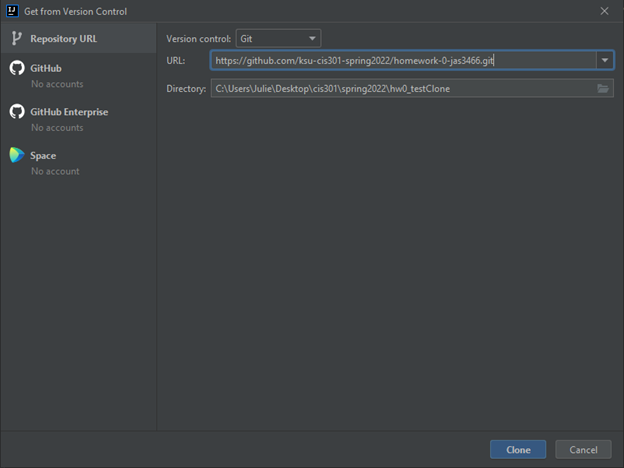

Click Clone Repository. Now you should see something like:

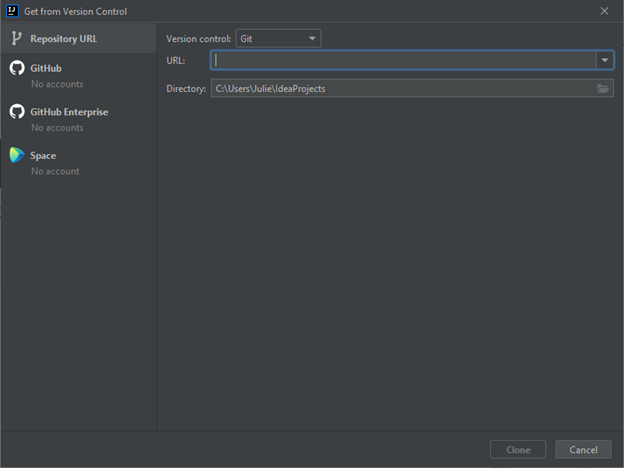

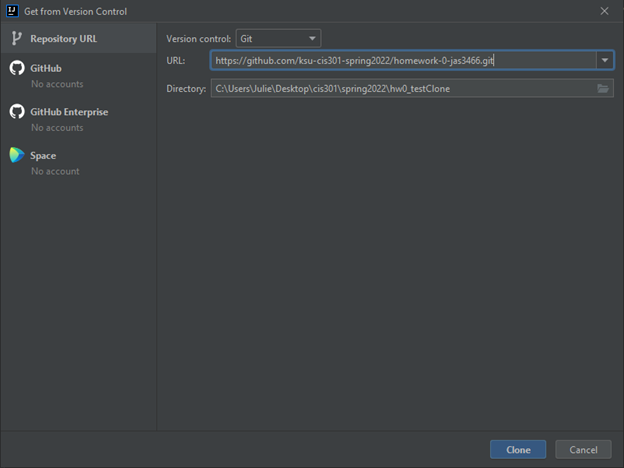

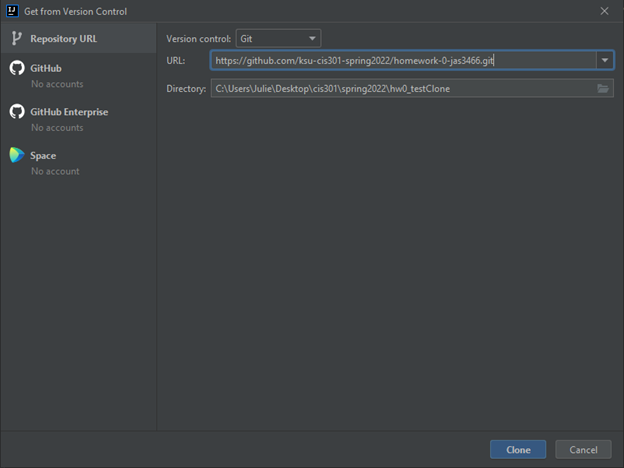

Paste the URL you copied from GitHub in the textbox indicated above. Click “Clone from URL”.

You will then be prompted to choose a folder to clone your repository into. Navigate to a folder on your computer specifically for CIS 301 (create one if it doesn’t exist). Create a new empty folder within that CIS 301 folder to hold this new project. Select that new folder in the selection dialog, so that you now have something like this:

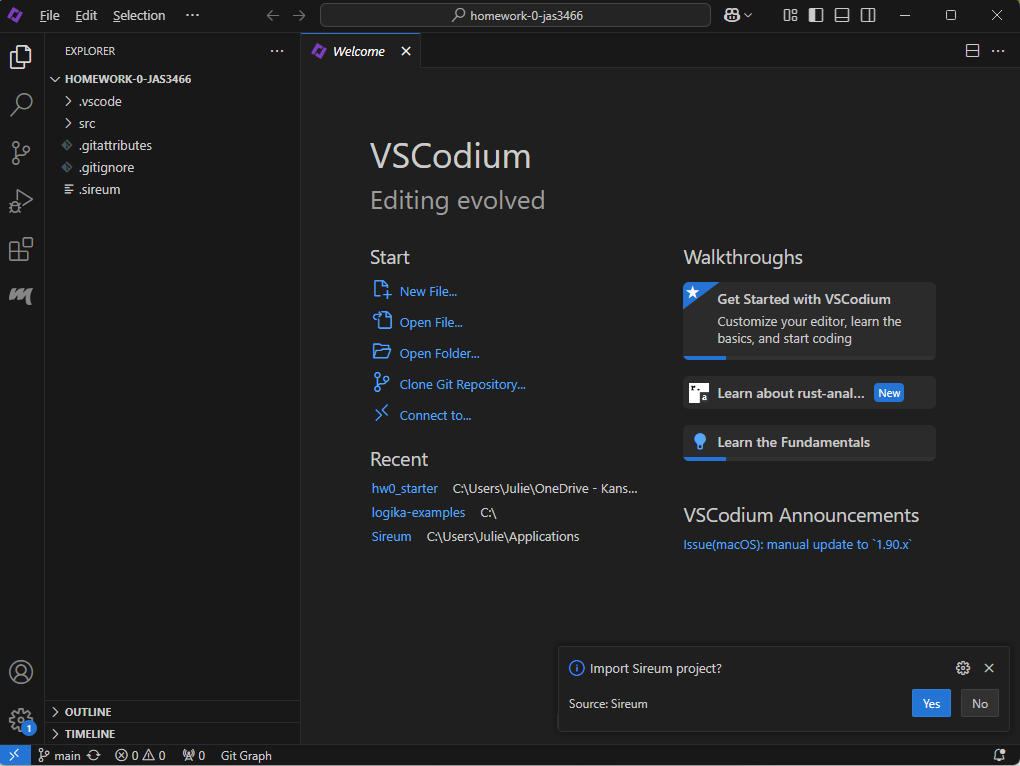

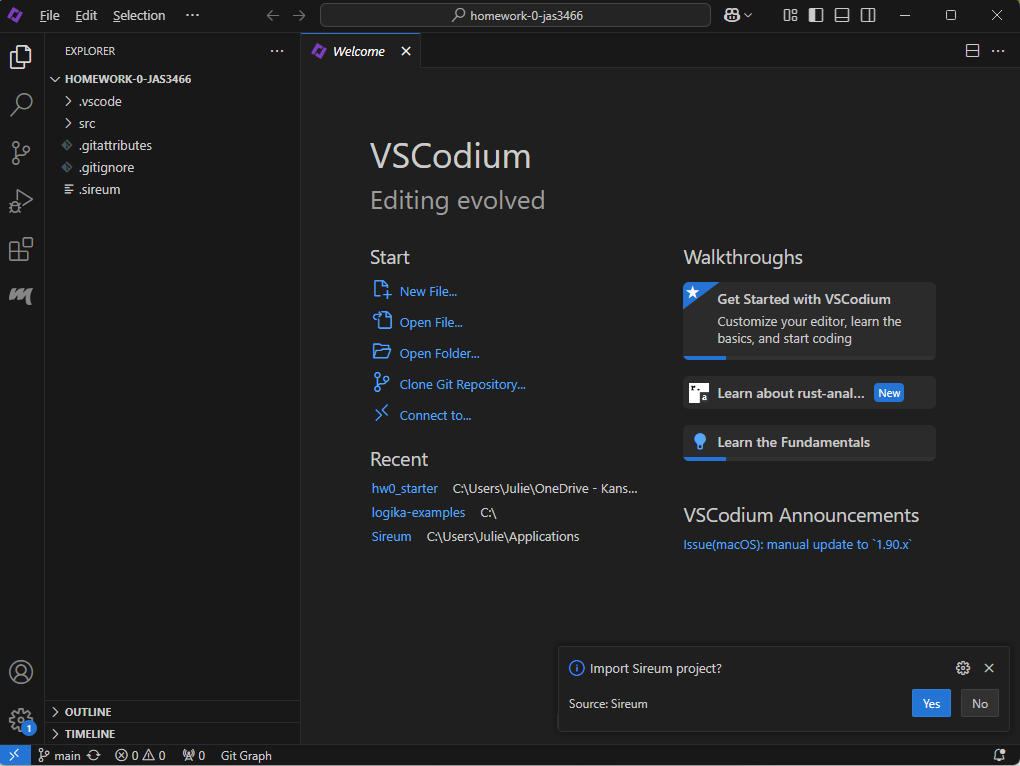

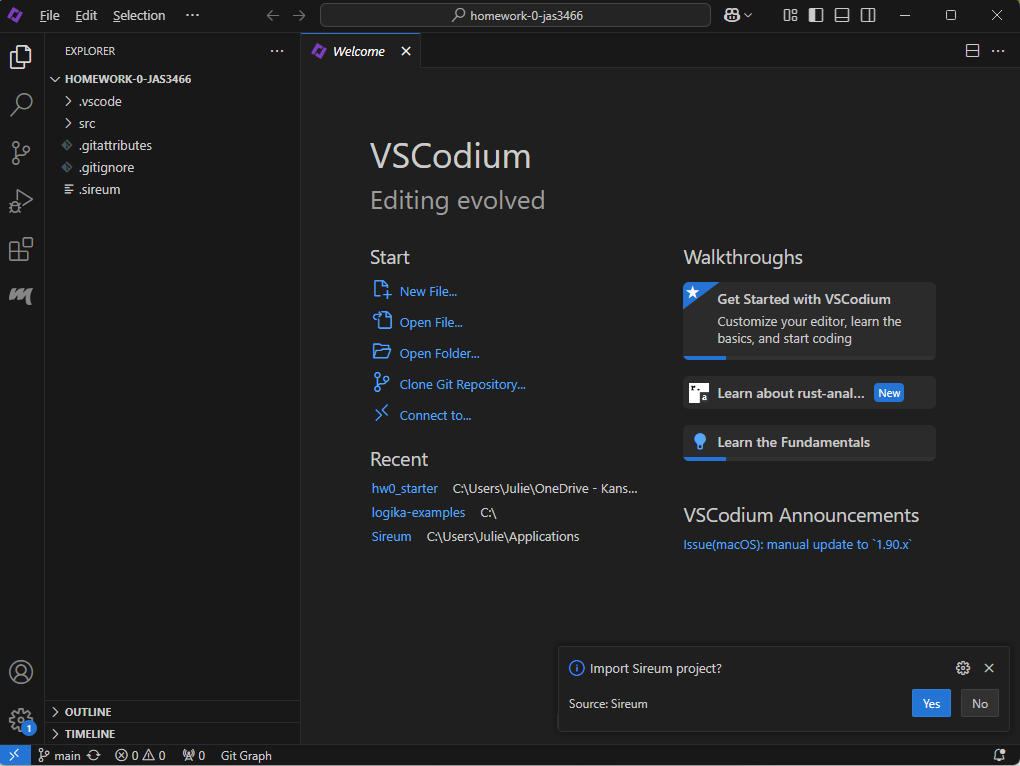

Click Select as Repository Destination. You should see a popup that asks if you want to open the cloned repository. Select “Open”. Next you should see something like:

If you see a popup in the bottom right that asks if you want to import a Sireum project, then click Yes.

Installing Sireum Fonts

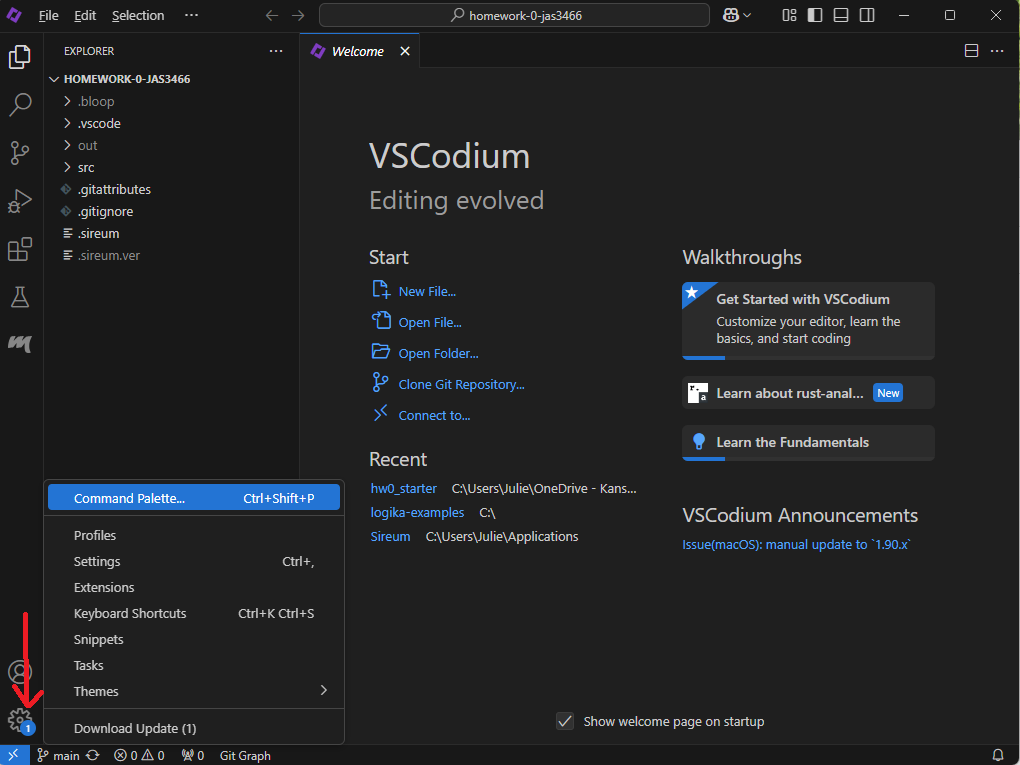

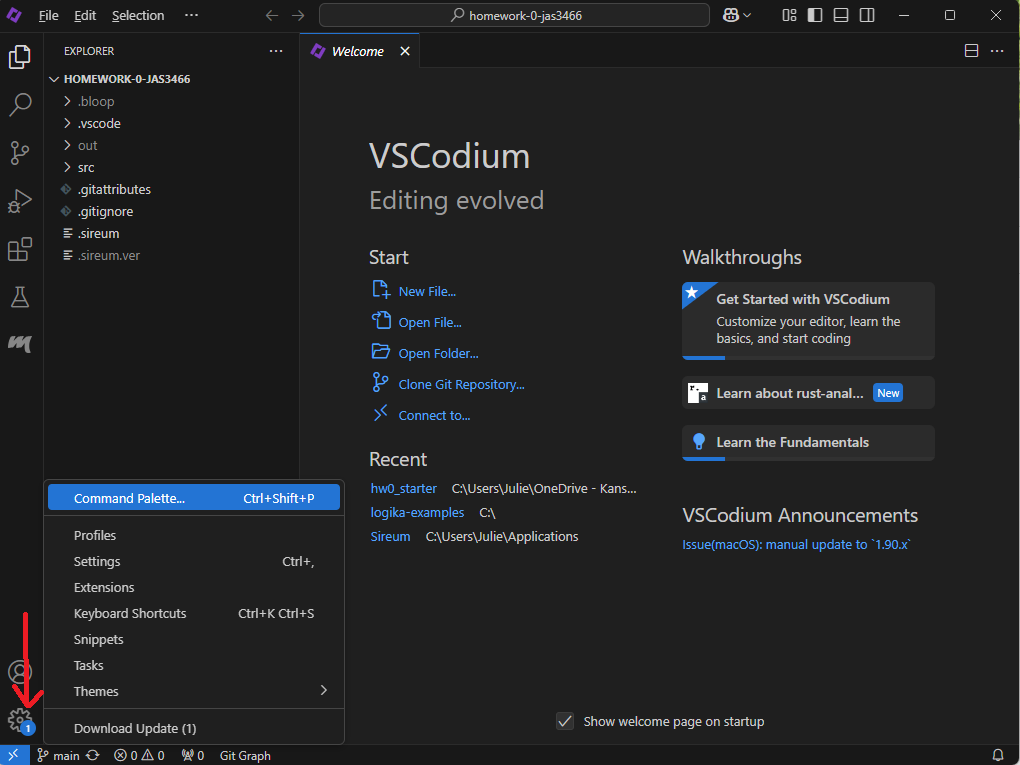

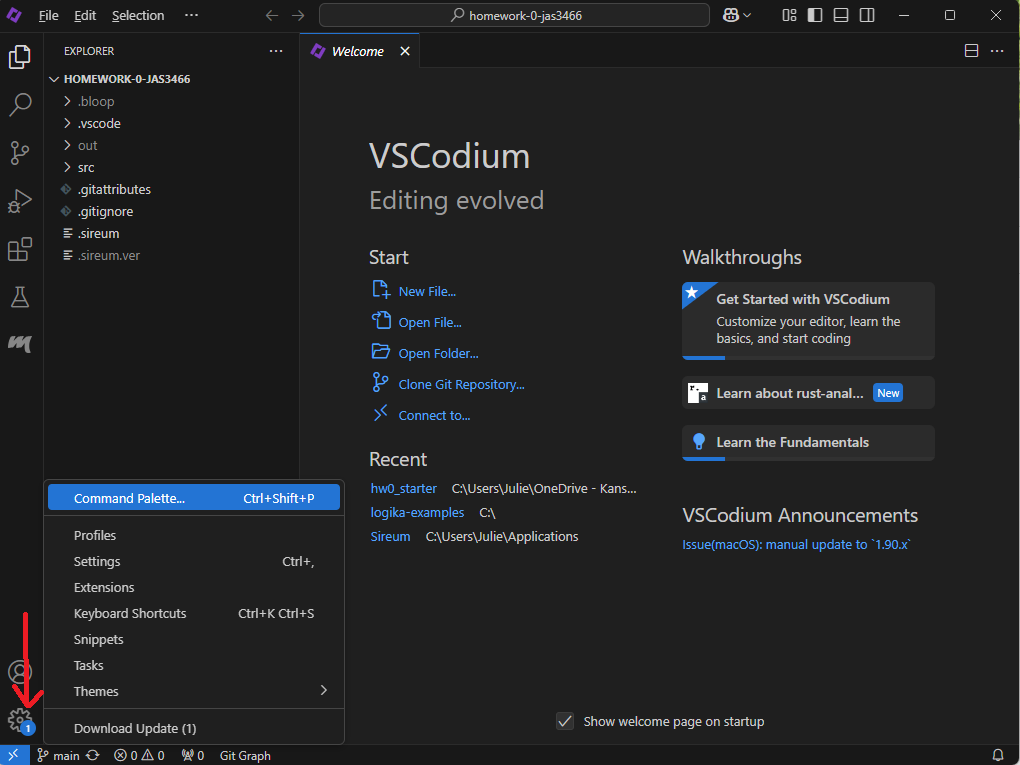

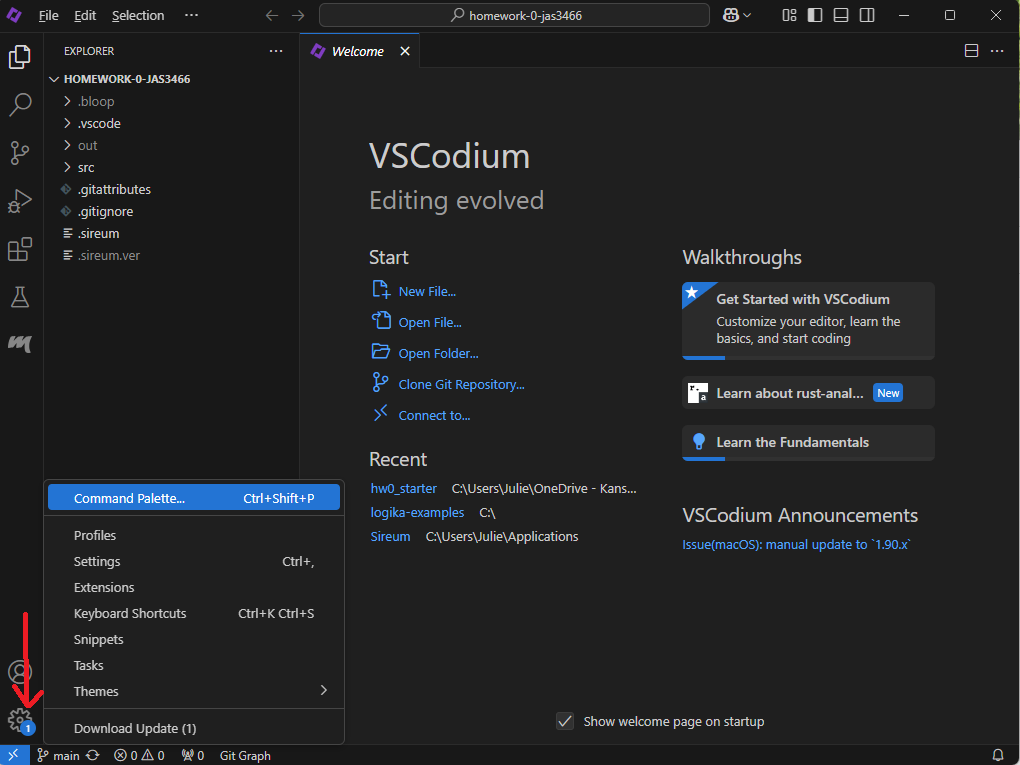

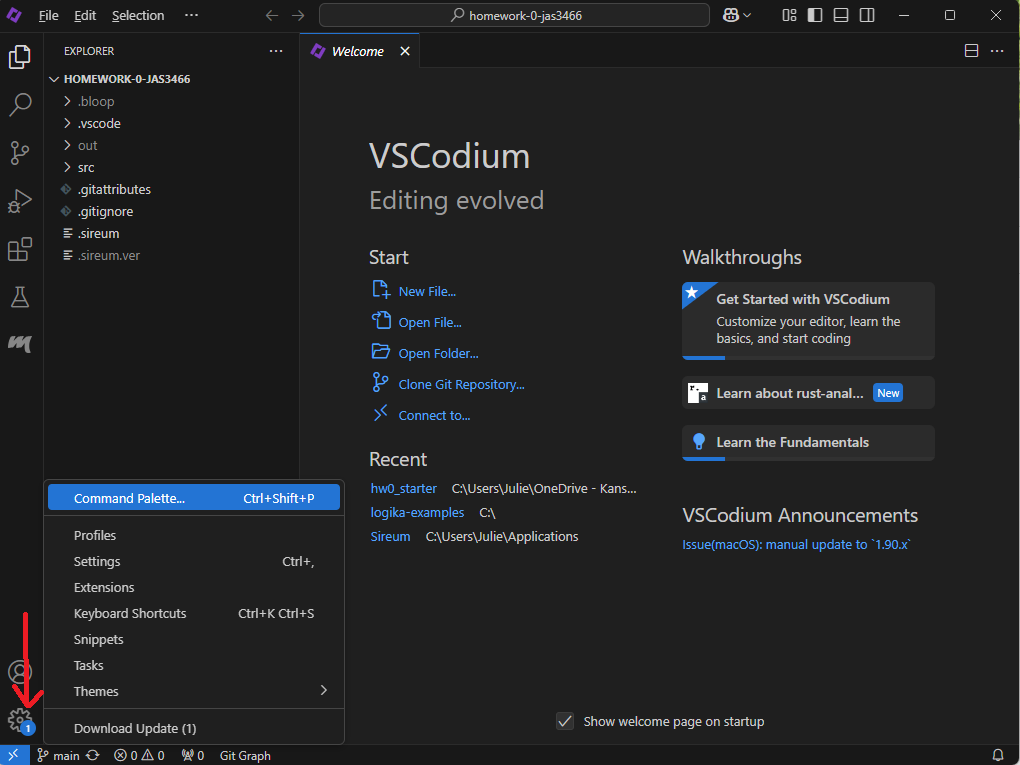

When you have Sireum VS Codium open, click the gear icon in the lower-right corner. Click the option that says “Command Palette”:

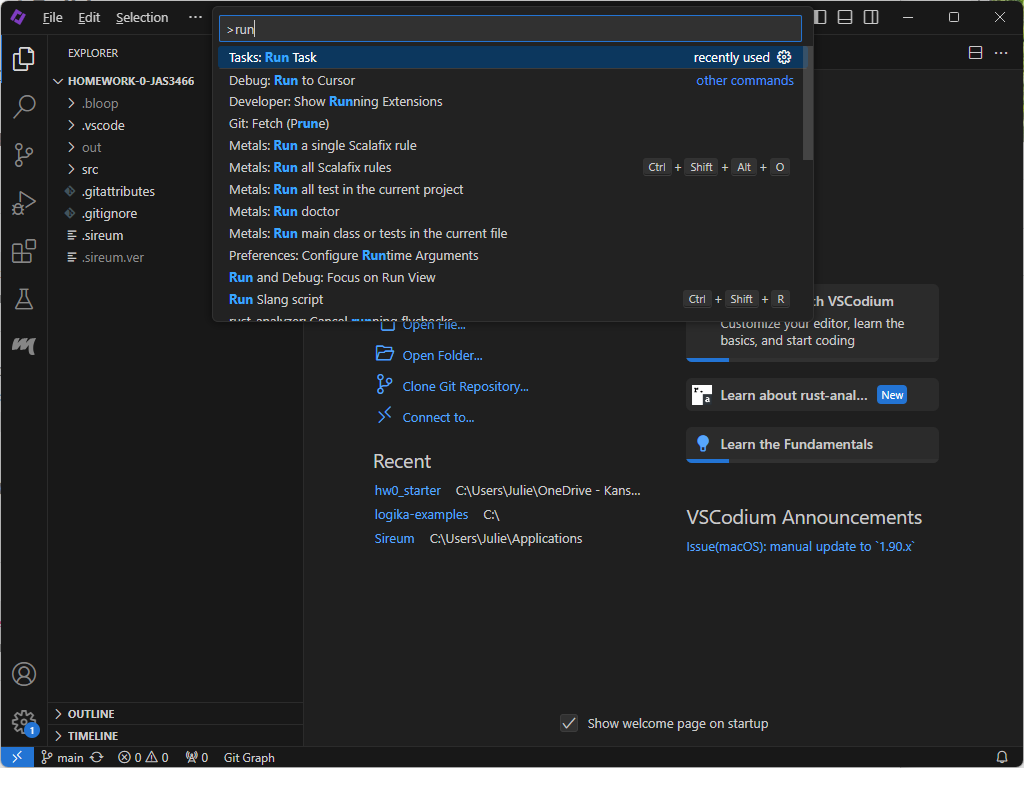

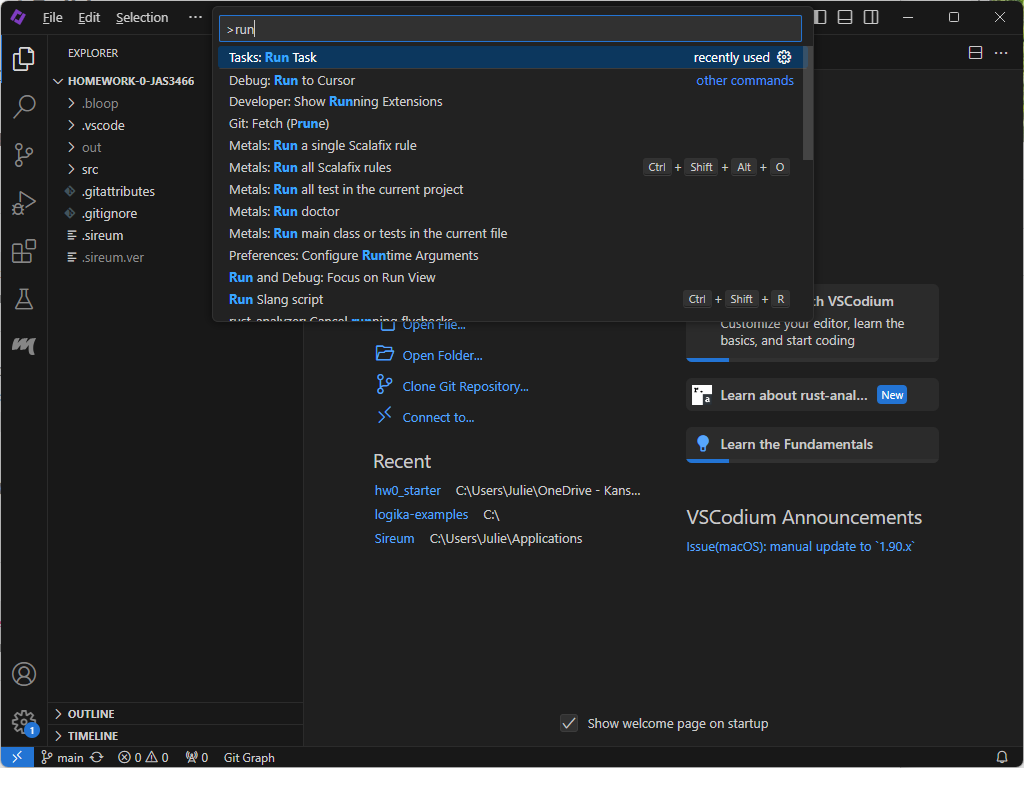

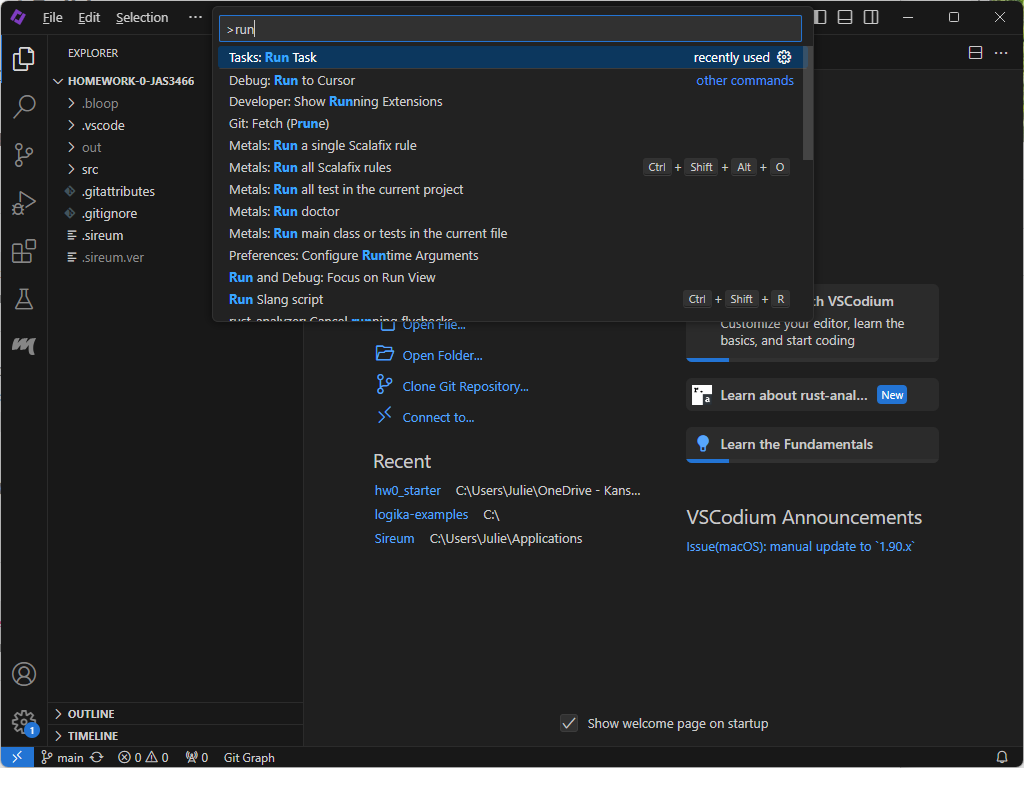

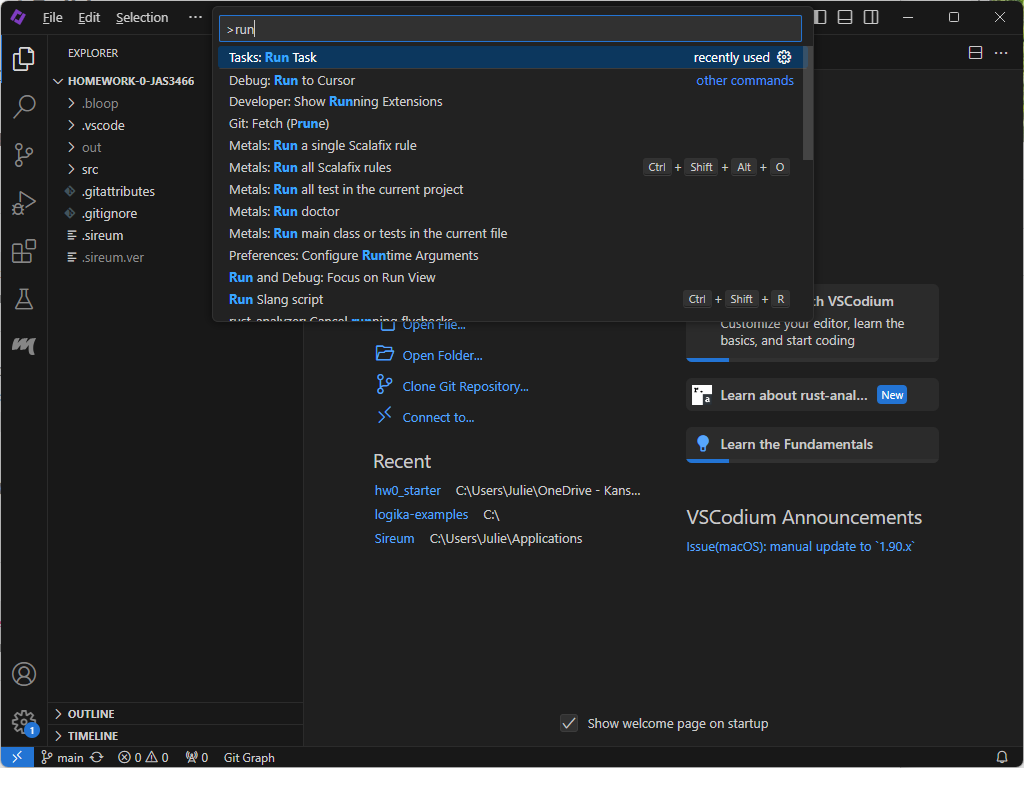

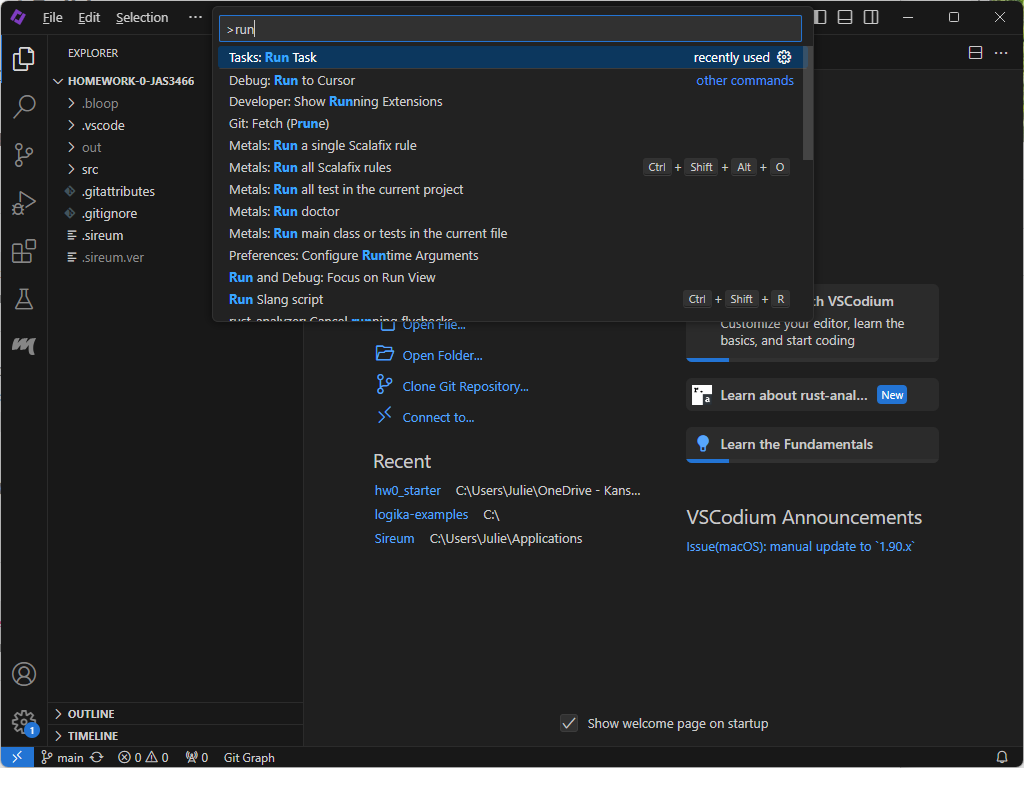

Type “run” in the resulting textbox. Click the option that says: “Tasks: Run Task”.

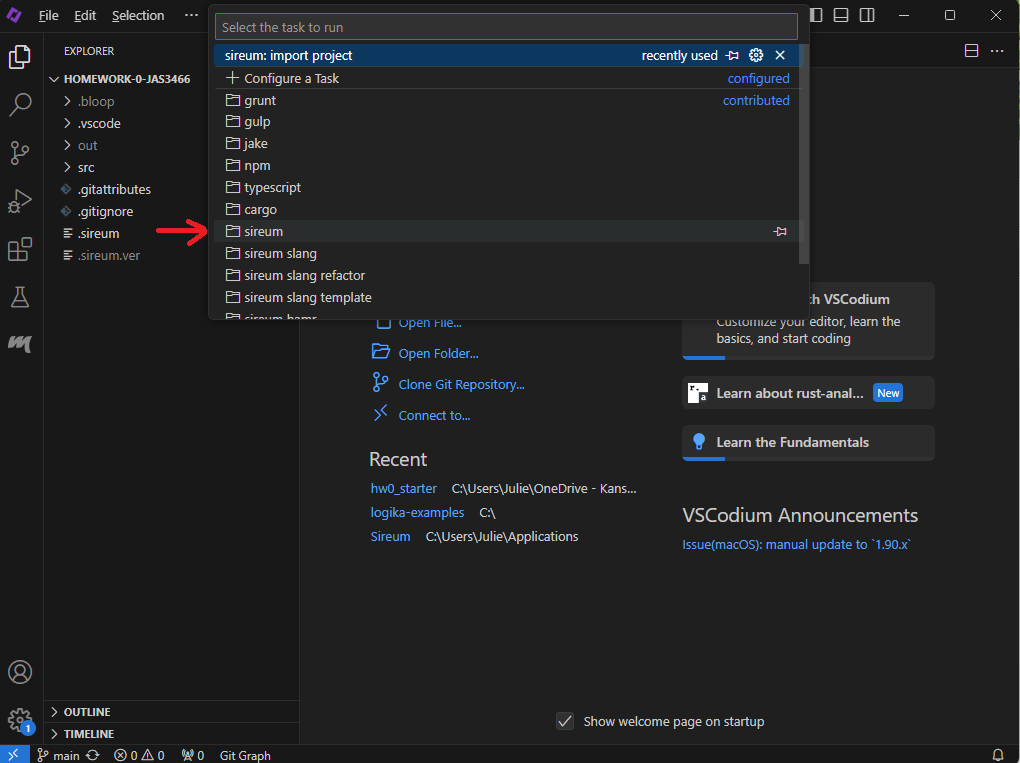

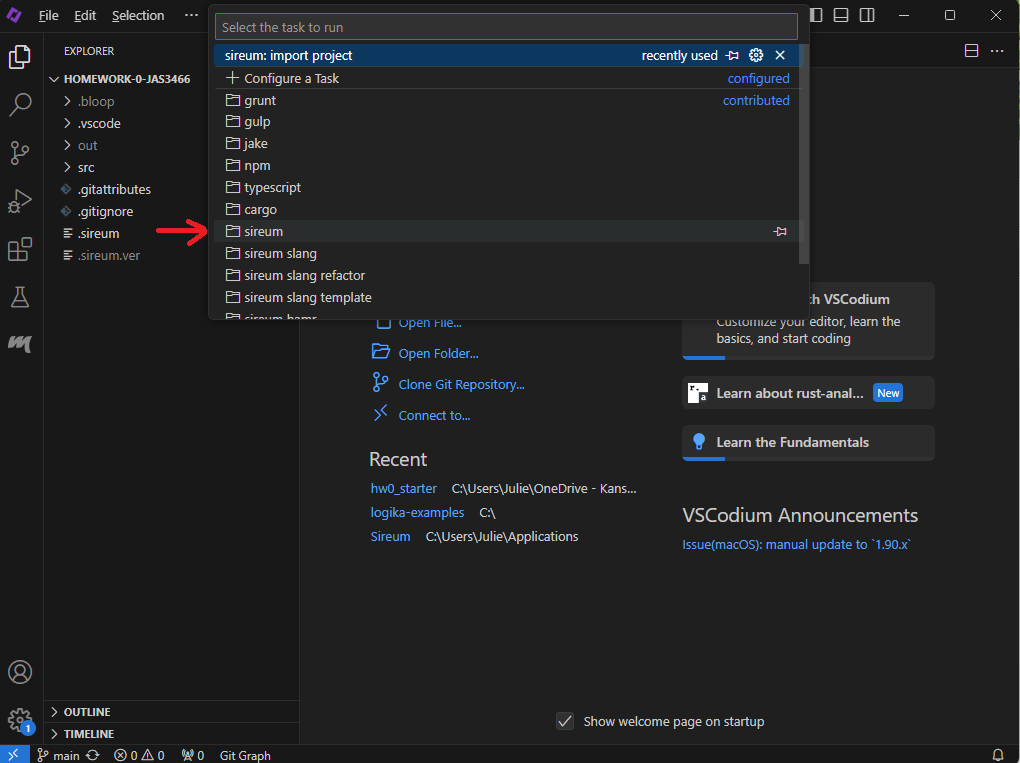

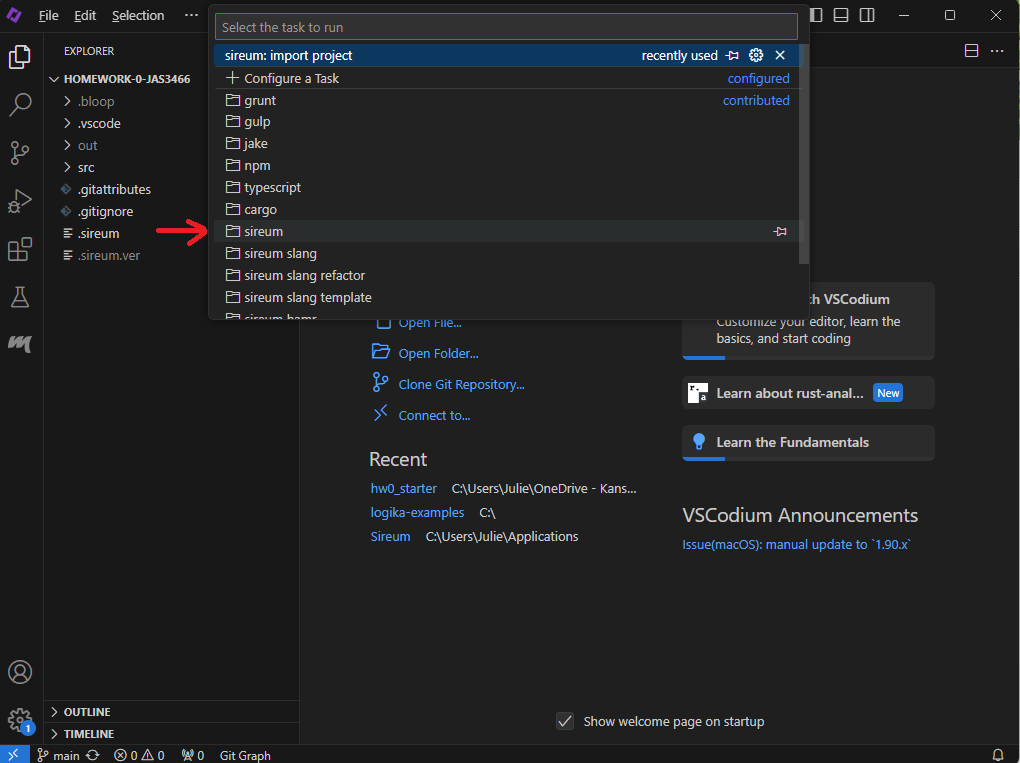

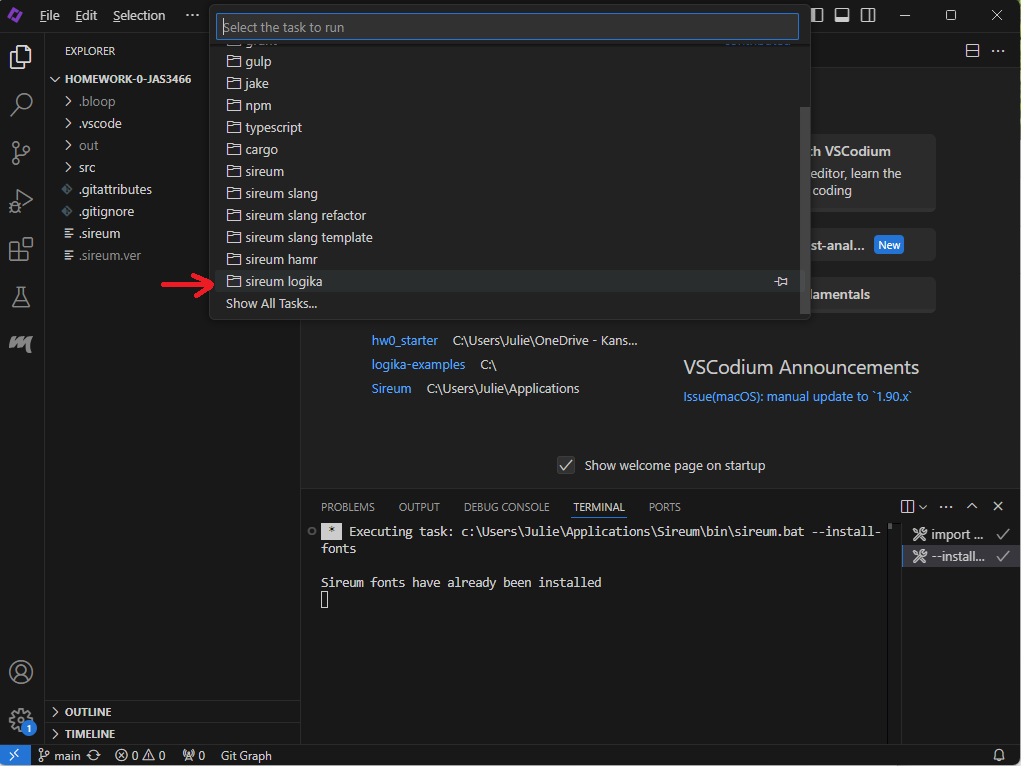

Next, click the “sireum” folder:

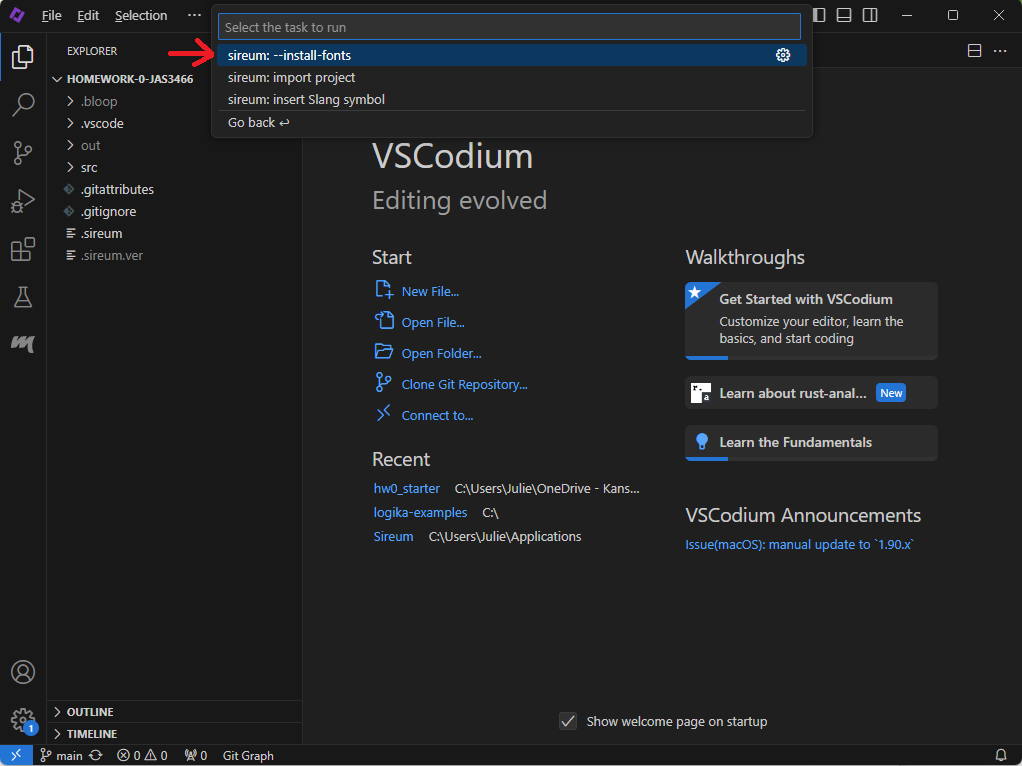

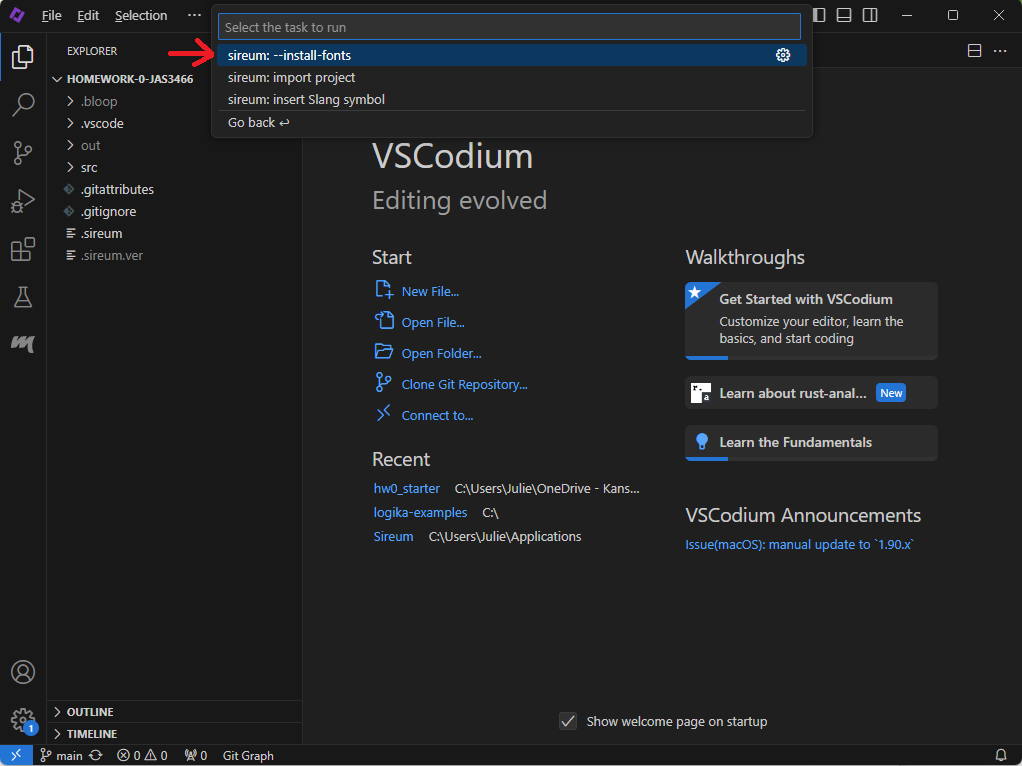

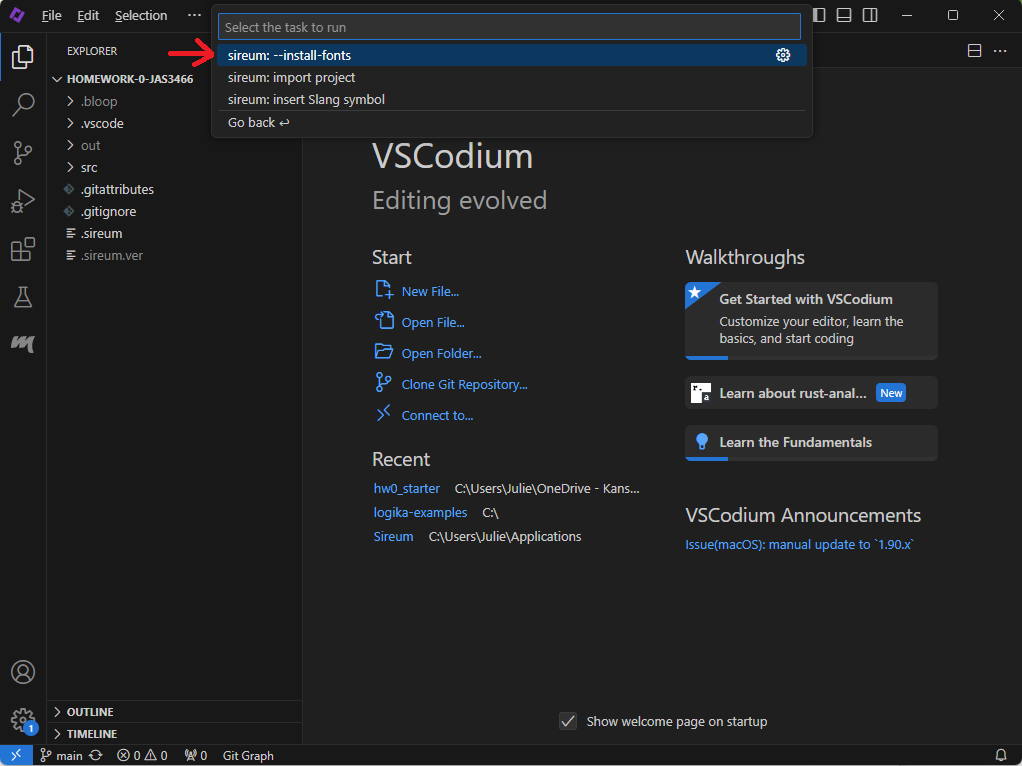

Finally, click “sireum: --install-fonts”:

You might be asked for the installation location of Sireum at this point – if so, it is C:\Users\<yourAccountName>\Applications\Sireum for Windows and /Users/<yourAccountName>/Applications/Sireum for Mac. Windows users might need to type the location directly into the address bar if you can’t find the Applications folder in the file explorer.

If the new fonts (which show logic symbols instead of characters like & and |) don’t immediately appear, try restarting Sireum VS Codium.

Running Logika Checker

When you are ready to verify a Logika truth table or proof, click the gear icon in the lower-right corner and select “Command Palette”:

Type “run” in the resulting textbox. Click the option that says: “Tasks: Run Task”.

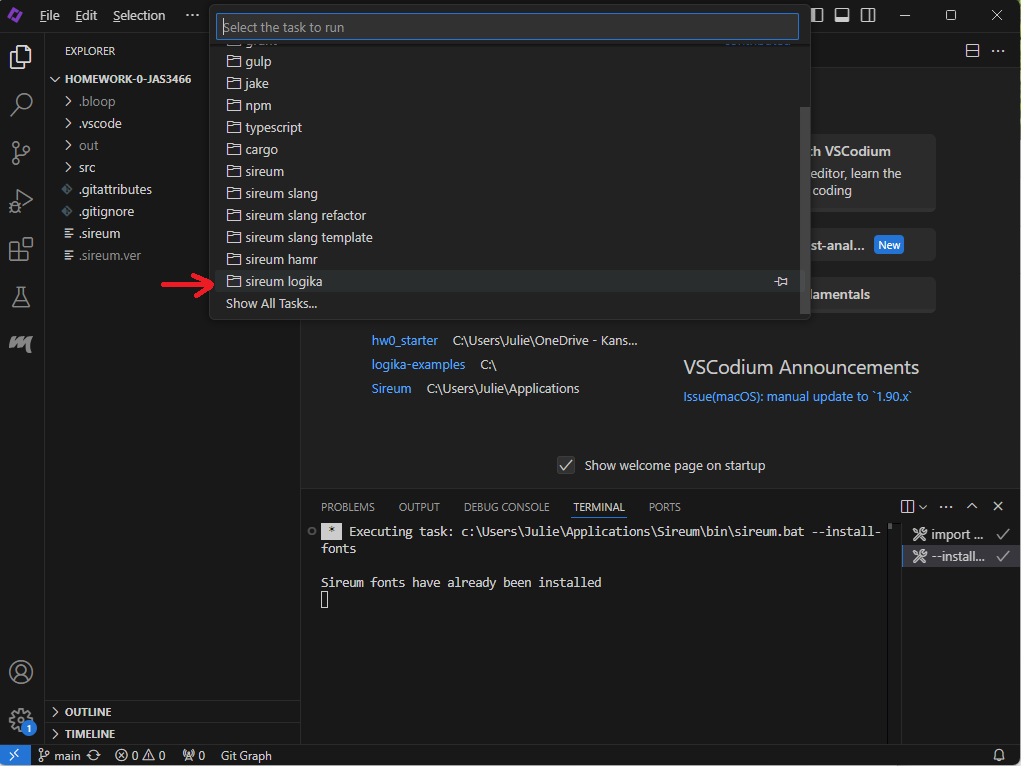

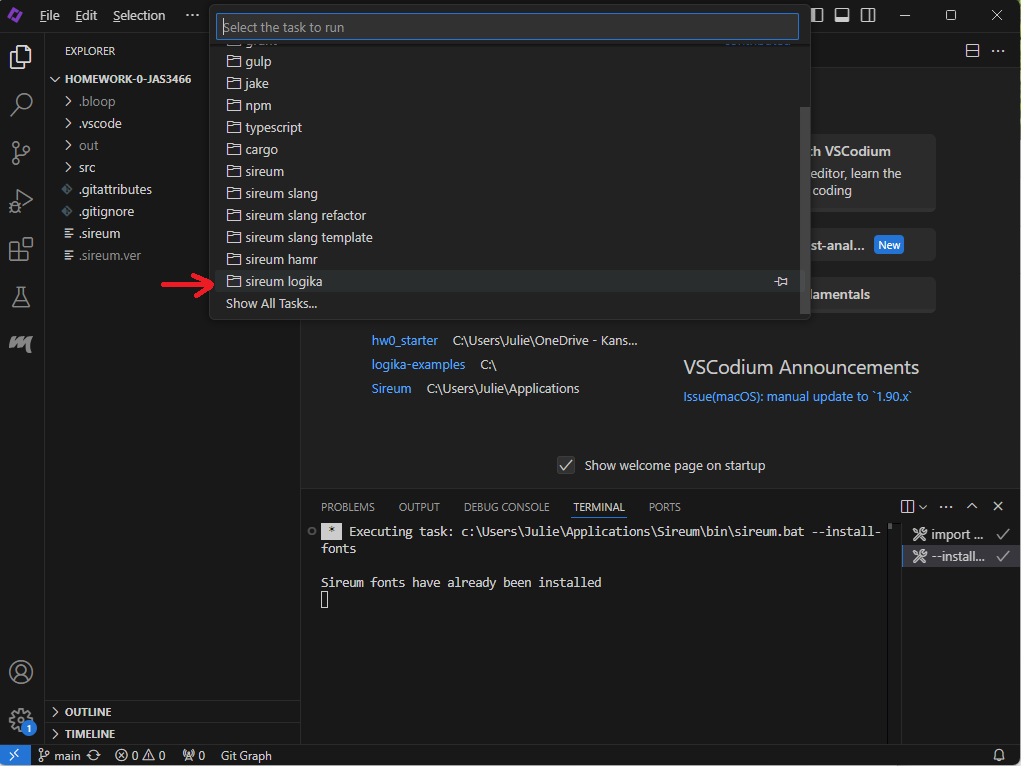

Next, click the “sireum logika” folder:

Finally, choose the option that says “sireum logika: verifier (file)”. You should see either a popup that says “Logika verified”, or you should see red error markings in your file indicating where the logic doesn’t hold up. You can hover over these red error markings to get popups with more information on the error.

As a shortcut to verify a proof or truth table, you can do: Ctrl-Shift-W on Windows or Command-Shift-W on Mac.

NOTE: You might be asked to choose the installation folder of Sireum the first time you run the Logika checker. If you are, the installation folder is C:\Users\<yourAccountName>\Applications\Sireum for Windows and /Users/<yourAccountName>/Applications/Sireum for Mac. Windows users might need to type the location directly into the address bar if you can’t find the Applications folder in the file explorer.

Committing and Pushing Changes

When you are finished working, commit and push your changes to GitHub. (I recommend doing this anytime you are at a stopping point, as well as when you are completely done.)

To do this, first save all your files (File-Save or Ctrl-S). Make sure none of the open file tabs have a solid circle next to the file name – this is an indication that they are unsaved. When everything is saved, open the integrated terminal in Code IVE by choosing View->Terminal. From there, type:

git add .

This will add all changes to the current commit. Then type:

git commit -m "descriptive message"

to create a local commit, where “descriptive message” is replaced with a message describing your changes (you DO need to include the quotations). Finally, push your local commit to your remote repository:

git push

If you go to your GitHub repository URL and refresh the page, you should see the latest changes.

If you have any errors using a git command, refer to the next section (“git Install”) for instructions on getting the command-line version of git.

Homework 0 will help you test the GitHub process.

Using Sireum in the Lab Classrooms

Sireum IVE (with Logika) is available in the CS computer lab classrooms (DUE 1114, 1116, and 1117). To find it, open a File Explorer, then open the C:\ drive. From there, navigate to “C:\Sireum\bin\win\vscodium” and run the “CodeIVE.exe” file. This will launch Sireum as usual.

If you want to complete your assignment using multiple computers, you can:

- Clone the repo using the process above (if it is your first time working on the current assignment on this computer)

- Commit/push your changes when you are ready to leave this computer

- Do Git->Pull to get your latest changes if you come back to a different computer

Remote Access

If you have any problems installing Sireum on your own computer, you can access it remotely from the CS department. See here for information on remote access. When you connect to the department Windows server, you can follow the instructions above to find Sireum as you would on a lab computer.

git Install

Verify a git install

You will likely already have a command-line version of git installed on your machine. To check, open a folder in VS Code, display the integrated terminal, and type:

git --version

You should see a version number printing out. If you see that, git is already installed.

If you see an error that git is unrecognized, then you will need to install it. Go here to download and install the latest version.

Windows users may need to add the git.exe location to the system Path environment variable. Most likely, git.exe will be installed to C:\Program Files\Git\bin. Check this location, copy its address, and type “Environment variables” in the Windows search. Click “Environment Variables” and find “Path” under System variables. Click “Edit…”. Verify that C:\Program Files\Git\bin (or whatever your git location is) appears in the list. If it doesn’t, add a new entry for C:\Program Files\Git\bin.

Subsections of Basics and Logic Puzzles

Basic Logical Reasoning

What is logical reasoning?

Logical reasoning is an analysis of an argument according to a set of rules. In this course, we will be learning several sets of rules for more formal analysis, but for now we will informally analyze English sentences and logic puzzles. This will help us practice the careful and rigorous thinking that we will need in formal proofs and in computer science in general.

Premises and conclusions

A premise is a piece of information that we are given in a logical argument. In our reasoning, we assume premises are true – even if they make no sense!

A conclusion in a logical argument is a statement whose validity we are checking. Sometimes we are given a conclusion, and we are trying to see whether that conclusion makes sense when we assume our premises are true. Other times, we are asked to come up with our own (valid) conclusion that we can deduce from our premises.

Example

Suppose we are given the following premises:

- Premise 1: If a person wears a red shirt, then they don’t like pizza.

- Premise 2: Fred is wearing a red shirt.

Given those pieces of information, can we conclude the following?

Fred doesn’t like pizza.

Yes! We take the premises at face value and assume them to be true (even though it is kind of ridiculous that shirt color has anything to do with a dislike of pizza). The first premise PROMISES that any time we have a person with a red shirt, then that person does not like pizza. Since Fred is such a person, we can conclude that Fred doesn’t like pizza.

Logical arguments with “OR”

Interpreting English sentences that use the word “or” can be tricky – the or can either be an inclusive or or an exclusive or. In programming, we are used to using an inclusive or – a statement like p || q is true as long as at least one of p or q is true, even if both are true. The only time a statement like p || q is false is if both p and q are false.

In English, however, the word “or” often implies an exclusive or. If a restaurant advertises that customers can choose “chips or fries” as the side for their meal, they are certainly not intending that a customer demand both sides.

However, since this course is focused on formal logic and analyzing computer programs and not so much on resolving language ambiguity, we will adopt the stance that the word “or” always means inclusive or unless otherwise specified.
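The two readings of “or” differ in exactly one case: when both sides are true. Here is a minimal Python sketch of the difference. The function names are made up for illustration, and Python writes the || operator as or.

```python
from itertools import product

def inclusive_or(p: bool, q: bool) -> bool:
    """True when at least one of p, q is true (this course's default 'or')."""
    return p or q

def exclusive_or(p: bool, q: bool) -> bool:
    """True when exactly one of p, q is true ('either p or q, but not both')."""
    return p != q

# Compare the two readings on every combination of inputs.
for p, q in product([True, False], repeat=2):
    print(p, q, inclusive_or(p, q), exclusive_or(p, q))
```

Only the row where p and q are both True gives different answers for the two functions.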

Or example #1

With that in mind, suppose we have the following premises:

- I have a dog or I have a cat.

- I do not have a cat.

What can we conclude?

The only time an “or” statement is true is when at least one of its parts is true. Since we already know that the right side of the or (“I have a cat”) is false, then we can conclude that the left side MUST be true. So we conclude:

I have a dog.

In general, if you have an or statement as a premise and you also know that one side of the or is NOT true, then you can always conclude that the other side of the or IS true.

Or example #2

Suppose we have the following premises:

- I have a bike or I have a car.

- I have a bike.

Can we conclude anything new?

First of all, I acknowledge that the most natural interpretation of the first premise is an exclusive or – that I have EITHER a bike OR a car, but not both. I think that is how most people would naturally interpret that sentence as well. However, in this course we will always consider “or” to be an inclusive or, unless we specifically use words like “but not both”.

With that in mind, the second premise is already sufficient to make the first premise true. Since I have a bike, the statement “I have a bike or I have a car” is already true, whether or not I have a car. Because of this, we can’t draw any further conclusions beyond our premises.

Or example #3

Suppose we have the following premises:

- I either have a bike or a car, but not both.

- I have a bike.

What can we conclude?

This is the sentence structure I will use if I mean an exclusive or – “either p or q but not both”.

In this setup, we CAN conclude that I do not have a car. This is because an exclusive or is FALSE when both sides are true, and I already know that one side is true (I have a bike). The only way for the first premise to be true is when I do not also have a car.

Logical arguments with if/then (aka implies, →)

Statements of the form if p, then q make a promise – if p is true, then q will also be true. We will later see this structure using the logical implies operator.

If/then example 1

Suppose we have the following premises:

- If it is raining, then I will get wet.

- It is raining.

What can I conclude?

The first premise PROMISES that if it is raining, then I will get wet. Since we assume this premise is true, then we must keep the promise. Since the second premise tells us that it IS raining, then we can conclude:

I will get wet.

If/then example 2

Suppose we have the following premises:

- If I don’t hear my alarm, then I will be late for class.

- I am late for class.

Can we conclude anything new?

The first premise promises that if I don’t hear my alarm, then I will be late for class. And if we knew that I didn’t hear my alarm, then we would be able to conclude that I will be late for class (in order to keep the promise).

However, we do NOT know that I don’t hear my alarm. All we are told is that I am late for class. I might be late for class for many reasons – maybe I got stuck in traffic, or my car broke down, or I got caught up playing a video game. We don’t have enough information to conclude WHY I’m late for class, and in fact we can’t conclude anything new at all.

If/then example 3

Suppose we have the following premises:

- If I don’t hear my alarm, then I will be late for class.

- I’m not late for class.

What can we conclude?

This is a trickier example. We saw previously that the first premise promised that anytime I didn’t hear my alarm, then I would be late for class. But we can interpret this another way – since I’m NOT late for class, then I must have heard my alarm. After all, if I DIDN’T hear my alarm, then I would have been late. But I’m not late, so the opposite must be true. So we can conclude that:

I hear my alarm.

Reframing an if/then statement like that is called writing its contrapositive. Any time we have a statement of the form if p, then q then we can write the equivalent statement if not q, then not p.
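One way to convince yourself of this is to try every combination of truth values. The sketch below assumes the “promise” reading used above, where if p, then q is broken only when p is true and q is false. The helper name implies is made up for this example. It also checks the reversed statement if q, then p, which is the mistaken step from if/then example 2.

```python
from itertools import product

def implies(p: bool, q: bool) -> bool:
    """'if p, then q': the promise is broken only when p is true and q is false."""
    return (not p) or q

rows = list(product([True, False], repeat=2))

# "if p, then q" versus its contrapositive "if not q, then not p"
contrapositive_matches = all(implies(p, q) == implies(not q, not p) for p, q in rows)

# "if p, then q" versus the reversed statement "if q, then p"
reversed_matches = all(implies(p, q) == implies(q, p) for p, q in rows)

print(contrapositive_matches)  # True
print(reversed_matches)        # False
```

The contrapositive agrees with the original statement in every case, while the reversed statement does not (for example, when p is true and q is false).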

Knights and Knaves

We will now move to solving several kinds of logic puzzles. While these puzzles aren’t strictly necessary to understand the remaining course content, they require the same rigorous analysis that we will use when doing more formal truth tables and proofs. Plus, they’re fun!

The puzzles in this section and the rest of this chapter are all either from or inspired by: What is the Name of This Book?, by Raymond Smullyan.

Island of Knights and Knaves

This section will involve knights and knaves puzzles, where we meet different inhabitants of the mythical island of Knights and Knaves. Each inhabitant of this island is either a knight or a knave.

Knights ALWAYS tell the truth, and knaves ALWAYS lie.

Example 1

Can any inhabitant of the island of Knights and Knaves say, “I’m a knave”?

Solution:

No! A knight couldn’t make that statement, as knights always tell the truth. And a knave couldn’t make that statement either, since it would be true – and knaves always lie.

Example 2

You see two inhabitants of the island of Knights and Knaves – Ava and Bob.

- Ava says that Bob is a knave.

- Bob says, “Neither Ava nor I are knaves.”

What types are Ava and Bob?

Solution:

Suppose Ava is a knight. Then her statement must be true, so Bob must be a knave. In this case, Bob’s statement would be a lie (since he is a knave), which is what we want.

Let’s make sure there aren’t any other answers that work.

Suppose instead that Ava is a knave. Then her statement must be a lie, so Bob must be a knight. This would mean that Bob’s statement should be true, but it’s not – Ava is a knave.

We can conclude that Ava is a knight and Bob is a knave.
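The case analysis above can also be done by brute force: try every assignment of knight/knave and keep the ones where each speaker’s statement is true exactly when the speaker is a knight. This is only a sketch, and the function and variable names are made up for illustration.

```python
from itertools import product

def consistent(ava_is_knight: bool, bob_is_knight: bool) -> bool:
    ava_statement = not bob_is_knight                # "Bob is a knave."
    bob_statement = ava_is_knight and bob_is_knight  # "Neither Ava nor I are knaves."
    # A knight's statement must be true; a knave's statement must be false.
    return ava_statement == ava_is_knight and bob_statement == bob_is_knight

solutions = [
    (ava, bob)
    for ava, bob in product([True, False], repeat=2)
    if consistent(ava, bob)
]
print(solutions)  # [(True, False)]
```

Here True stands for “knight” and False for “knave”, so the single surviving assignment says Ava is a knight and Bob is a knave.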

Example 3

If you see an “or” statement in a knights and knaves puzzle, assume that it means an inclusive or. This will match the or logical operator in our later truth tables and proofs, and will also match the or operator in programming.

You see two different inhabitants – Eve and Fred.

- Eve says, “I am a knave or Fred is a knight.”

What types are Eve and Fred?

Solution:

Suppose first that Eve is a knight. Then her statement must be true. Since she isn’t a knave, the only way for her statement to be true is if Fred is a knight.

Let’s make sure there aren’t any other answers that work.

Suppose instead that Eve is a knave. Already we are in trouble – the “I am a knave” part of her statement would be true, which makes the whole or statement true no matter what type Fred is. Since a knave cannot say something true, we know she must not be a knave.

We can conclude that Eve and Fred are both knights.

Example 4

You see three new inhabitants – Sarah, Bill, and Mae.

- Sarah tells you that only a knave would say that Bill is a knave.

- Bill claims that it’s false that Mae is a knave.

- Mae tells you, “Bill would tell you that I am a knave.”

What types are Sarah, Bill, and Mae?

Solution:

Before starting on this puzzle, it might help to rephrase Sarah’s and Bill’s statements. Sarah’s statement that only a knave would say that Bill is a knave is really saying that it is FALSE that Bill is a knave (since knaves lie). Another way to say it’s false that Bill is a knave is to say that Bill is a knight. Similarly, we can rewrite Bill’s statement to say that Mae is a knight.

Now we have the following statements:

- Sarah tells you that Bill is a knight.

- Bill claims that Mae is a knight.

- Mae tells you, “Bill would tell you that I am a knave.”

Suppose Sarah is a knight. Then her statement is true, so Bill must also be a knight. This would mean Bill’s statement would also be true, so Mae is a knight as well. But Mae says that Bill would say she’s a knave, and that’s not true – Bill would truthfully say that Mae is a knight.

Suppose instead that Sarah is a knave. Then her statement is false, so Bill must be a knave. This would make Bill’s claim false as well, so Mae must be a knave. Mae knows that Bill would say she was a knight (since Bill is a knave, and would lie), and if Mae was a knave then she would indeed lie and say that Bill would say she was a knave.

We can conclude that all three are knaves.
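Mae’s statement is about what another inhabitant would say, which is the trickiest part to model. One possible sketch, using the rephrased statements above and a made-up helper would_say, is:

```python
from itertools import product

def would_say(speaker_is_knight: bool, statement: bool) -> bool:
    """A knight asserts only true statements; a knave asserts only false ones."""
    return statement == speaker_is_knight

def consistent(sarah: bool, bill: bool, mae: bool) -> bool:
    """Each argument is True for a knight and False for a knave."""
    sarah_claim = bill                    # "Bill is a knight."
    bill_claim = mae                      # "Mae is a knight."
    mae_claim = would_say(bill, not mae)  # "Bill would tell you that I am a knave."
    return sarah_claim == sarah and bill_claim == bill and mae_claim == mae

solutions = [w for w in product([True, False], repeat=3) if consistent(*w)]
print(solutions)  # [(False, False, False)]
```

The only consistent assignment is False for all three, which matches the conclusion that Sarah, Bill, and Mae are all knaves.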

Other Puzzles

We will look at a variety of other logic puzzles, each of which involves some statements being false and some statements being true.

Lion and Unicorn

The setup for a Lion and Unicorn puzzle can vary, but the idea is that both Lion and Unicorn have specific days that they tell only lies, and other specific days that they only tell the truth.

Here is one example:

Lion always lies on Mondays, Tuesdays, and Wednesdays.

Lion always tells the truth on other days.

Unicorn always lies on Thursdays, Fridays, and Saturdays, and always tells the truth on other days.

On Sunday, everyone tells the truth.

Lion says: “Yesterday was one of my lying days.”

Unicorn says: “Yesterday was one of my lying days, too.”

What day is it?

Solution:

To solve this puzzle, we consider what Lion’s and Unicorn’s statements would mean on each different day of the week.

Suppose it is Sunday. Then Lion’s statement would be a lie (Lion does not lie on Saturday), and yet Lion is supposed to be telling the truth on Sunday.

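Suppose it is Monday. Then both Lion’s and Unicorn’s statements would be lies, since they both told the truth yesterday (Sunday). That is fine for Lion, who lies on Mondays, but Unicorn is supposed to be telling the truth on Mondays.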

Suppose it is either Tuesday or Wednesday. Then Lion’s statement would be true – but Lion is supposed to lie on both Tuesday and Wednesday.

Suppose it is Thursday. Then Lion’s statement would be true (Wednesday was one of their lying days), which is good since Lion is supposed to be telling the truth on Thursdays. Similarly, Unicorn’s statement would be false (Unicorn does not lie on Wednesdays), which works out since Unicorn DOES lie on Thursdays.

Suppose it is either Friday or Saturday. Then Lion’s statement would be a lie (Lion doesn’t lie on either Thursday or Friday), but Lion should be telling the truth on Friday and Saturday.

We can conclude that it must be Thursday.
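The day-by-day check above is mechanical enough to hand to a short program. The following sketch (all names are made up for illustration) tests, for each day, whether the statement “Yesterday was one of my lying days” fits each speaker’s schedule:

```python
DAYS = ["Sunday", "Monday", "Tuesday", "Wednesday", "Thursday", "Friday", "Saturday"]
LION_LIES = {"Monday", "Tuesday", "Wednesday"}
UNICORN_LIES = {"Thursday", "Friday", "Saturday"}

def statement_fits(today: str, lying_days: set) -> bool:
    """Check 'Yesterday was one of my lying days' against the speaker's schedule."""
    yesterday = DAYS[(DAYS.index(today) - 1) % 7]
    claim_is_true = yesterday in lying_days
    speaker_is_lying = today in lying_days
    # A truth-teller's claim must be true; a liar's claim must be false.
    return claim_is_true != speaker_is_lying

possible_days = [
    day for day in DAYS
    if statement_fits(day, LION_LIES) and statement_fits(day, UNICORN_LIES)
]
print(possible_days)  # ['Thursday']
```

Taken separately, Lion’s statement fits Monday and Thursday, and Unicorn’s fits Sunday and Thursday. Thursday is the only day that works for both.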

Tweedledee and Tweedledum

The Tweedledee and Tweedledum puzzles originate from Through the Looking-glass and What Alice Found There, by Lewis Carroll. There are different versions of these puzzles as well, but all of them involve the identical twin creatures, Tweedledee and Tweedledum. Like with Lion and Unicorn, there are different days on which Tweedledee and Tweedledum either only lie or only tell the truth (and often one creature is lying while the other is telling the truth).

Example 1

Consider this puzzle:

Tweedledee and Tweedledum are identical. You know that one of them lies Mon/Tues/Wed, and that the other lies Thurs/Fri/Sat. (They tell the truth on non-lying days.)

You don’t know which is which.

You see both of them together.

The first one says: “I’m Tweedledum.”

The second one says: “I’m Tweedledee.”

Which is which? What day is it?

Solution:

Since the two creatures gave different answers, we can conclude that they must both be lying or both telling the truth. (Otherwise, both creatures would give you the same name.) Their lying days never overlap, so they can’t both be lying, and Sunday is the only day on which both tell the truth.

Each is telling the truth, so the first twin is Tweedledum and the second is Tweedledee.

Example 2

Consider a second puzzle, with the same setup as to which days each twin lies and tells the truth.

You know that either Tweedledum or Tweedledee has lost a rattle. You find it, and want to return it to the correct one. You don’t know what day it is, but are sure that it isn’t Sunday (so one must be lying and one must be telling the truth).

The first one says: “Tweedledee owns the rattle.”

The second one says: “I’m Tweedledee.”

Who gets the rattle?

Solution:

To solve this puzzle, we can explore the possibilities for each twin lying or telling the truth.

Suppose the first twin is telling the truth. Since it isn’t Sunday, we know the second twin must be lying. If the second twin’s statement is a lie, then the second twin is Tweedledum, which makes the first twin Tweedledee. Since the first twin’s statement is true, Tweedledee owns the rattle, and that is the first twin.

Suppose instead that the first twin is lying. Again, since it isn’t Sunday, we know the second twin must be telling the truth. This would make the second twin Tweedledee, and the first twin Tweedledum. It would also mean that TweedleDUM owns the rattle (since the first statement is a lie), which is the first twin.

We don’t have enough information to determine which twin is which, but it doesn’t matter – in both cases, the first twin is the owner of the rattle.
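The same conclusion falls out of a small enumeration. This sketch does not model the days of the week. It relies only on the fact given in the puzzle that exactly one twin is lying, and all variable names are made up for illustration.

```python
from itertools import product

owners = set()
for first_is_dee, owner_is_dee, first_truthful in product([True, False], repeat=3):
    second_truthful = not first_truthful  # not Sunday: exactly one twin lies
    first_claim = owner_is_dee            # "Tweedledee owns the rattle."
    second_claim = not first_is_dee       # "I'm Tweedledee."
    # Keep only the scenarios where each claim matches the speaker's honesty.
    if first_claim == first_truthful and second_claim == second_truthful:
        first_owns = owner_is_dee == first_is_dee
        owners.add("first twin" if first_owns else "second twin")

print(owners)  # {'first twin'}
```

Two scenarios survive the check (one for each choice of which twin is lying), and the first twin owns the rattle in both.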

Portia’s Caskets

This type of puzzle originates from The Merchant of Venice, by William Shakespeare. In the play, Portia’s father devised riddles to test potential suitors for his daughter.

Here is one such puzzle:

There are three caskets – one gold, one silver, and one lead. One of the caskets contains a portrait (of Portia). Each casket has a message on it, and you know that at most one of the messages is true.

Gold casket message: “The portrait is in this casket.”

Silver casket message: “The portrait is not in this casket.”

Lead casket message: “The portrait is not in the gold casket.”

Where is the portrait?

Solution:

To solve this puzzle, we recognize that there are only three possibilities – the portrait must be in either the gold casket, the silver casket, or the lead casket. We consider the implications of each:

Suppose the portrait is in the gold casket. Then the messages on both the gold and silver caskets would be true. This isn’t possible, as we know that at most one of the messages is true.

Suppose instead that the portrait is in the silver casket. Then the messages on the gold and silver caskets would be false, and the message on the lead casket would be true. Only one message is true, so this is a possibility.

Finally, suppose the portrait is in the lead casket. Then the messages on both the silver and lead caskets would be true, so this isn’t possible.

We conclude that the portrait must be in the silver casket.
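With only three possible locations, the puzzle is easy to check exhaustively. In this sketch (names are made up for illustration), each message is written as a condition on where the portrait is, and a location is kept only if at most one message is true:

```python
CASKETS = ["gold", "silver", "lead"]

def true_message_count(portrait: str) -> int:
    """Count how many casket messages are true if the portrait is in `portrait`."""
    messages = [
        portrait == "gold",    # gold: "The portrait is in this casket."
        portrait != "silver",  # silver: "The portrait is not in this casket."
        portrait != "gold",    # lead: "The portrait is not in the gold casket."
    ]
    return sum(messages)

possible = [c for c in CASKETS if true_message_count(c) <= 1]
print(possible)  # ['silver']
```

The gold and lead locations each make two messages true, so only the silver casket satisfies the “at most one true message” condition.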

Subsections of Truth Tables

Operators and Circuits

In this chapter, we review basic notions about gates and learn the relationship between circuits and assignment-based computer programs. This sets the stage for analyzing modern programs.

Logical operators

There are four basic logic gates, with corresponding logical operators:

| Meaning | Logical Operator | Logic Gate |
|---|---|---|
| p AND q | p ∧ q | |
| p OR q | p ∨ q | |
| NOT p | ¬p | |
| p IMPLIES q | p → q | |

In the above drawings, the input wires are labelled with the names P and Q. The output that is computed is emitted from the rightmost wire which exits the gate. For these simple gates, it is possible to exhaustively test every permutation of potential inputs and summarize results in a table, called a truth table.

Let’s examine the AND gate. The AND gate emits a high voltage (1) exactly when high voltages are sensed at input wires P and Q; otherwise low voltage (0) is emitted. The gate’s physical behavior is summarized in the following table:

AND: P Q |

-------------

1 1 | 1

1 0 | 0

0 1 | 0

0 0 | 0

Truth tables

For the remainder of this course, we will use T (read “true”) for 1 and F (read “false”) for 0. This is because we will examine applications that go far beyond circuit theory and base-two arithmetic. Here are the truth tables for the AND, OR, NOT and IMPLIES gates:

AND: P Q |

-------------

T T | T

T F | F

F T | F

F F | F

OR: P Q |

-------------

T T | T

T F | T

F T | T

F F | F

NOT: P |

-------------

T | F

F | T

IMPLIES: P Q |

-----------------

T T | T

T F | F

F T | T

F F | T

A few comments:

The OR gate is inclusive – as long as one of its inputs is true, then its output is true.

You might be confused by the IMPLIES gate. We’ll cover it in detail below.

In the next section, we will learn to write our truth tables in a slightly different format so they can be automatically checked by Sireum Logika.
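The four gate tables above can be regenerated with a few lines of Python. This is only a sketch: the names GATES, tf, and table are made up for illustration, and IMPLIES is written as (not p) or q, which is false only in the T F row.

```python
from itertools import product

# Each two-input gate as a small Python function (names chosen for this sketch).
GATES = {
    "AND": lambda p, q: p and q,
    "OR": lambda p, q: p or q,
    "IMPLIES": lambda p, q: (not p) or q,
}

def tf(value: bool) -> str:
    """Render a Python bool with the T/F notation used in the tables."""
    return "T" if value else "F"

def table(gate_name: str) -> list:
    """Return the four rows of a two-input gate's truth table as strings."""
    gate = GATES[gate_name]
    return [
        f"{tf(p)} {tf(q)} | {tf(gate(p, q))}"
        for p, q in product([True, False], repeat=2)
    ]

for name in GATES:
    print(name)
    print("\n".join(table(name)))

# NOT takes a single input, so it is handled separately.
print("NOT")
for p in [True, False]:
    print(f"{tf(p)} | {tf(not p)}")
```

The rows come out in the same T T, T F, F T, F F order used in the tables above.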

Implies operator

The implies operator can be difficult to understand. It helps to think of it as a promise: we write P → Q, but we mean If P is true, then I promise that Q will also be true. If we BREAK our promise (i.e., if P is true but Q is false), then the output of an implies gate is false. In every other situation, the output of the implies gate is true.

As a reminder, here is the truth table for the implies operator, → :

P Q | P → Q

-----------------

T T | T

T F | F

F T | T

F F | T

It is likely clear why P → Q is true when both P and Q are true – in this situation, we have kept our promise.

It is also easy to understand why P → Q is false when P is true and Q is false. Here, we have broken our promise – P happened, but Q did not.

In the other two cases for P → Q we have that P is false (and Q is either true or false). Here, P → Q is true simply because we haven’t broken our promise. In these cases, the implication is said to be vacuously true because we have no evidence to prove that it is false.

Circuits

We can also compose the gates to define new operations.

For example, this circuit:

Written ¬(P ∧ Q), defines this computation of outputs:

P Q | ¬(P ∧ Q)

-----------------

T T | F

T F | T

F T | T

F F | T

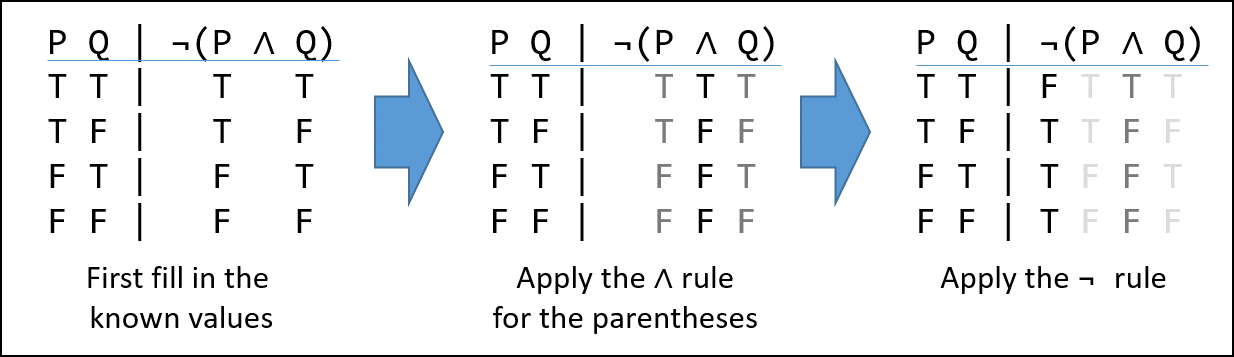

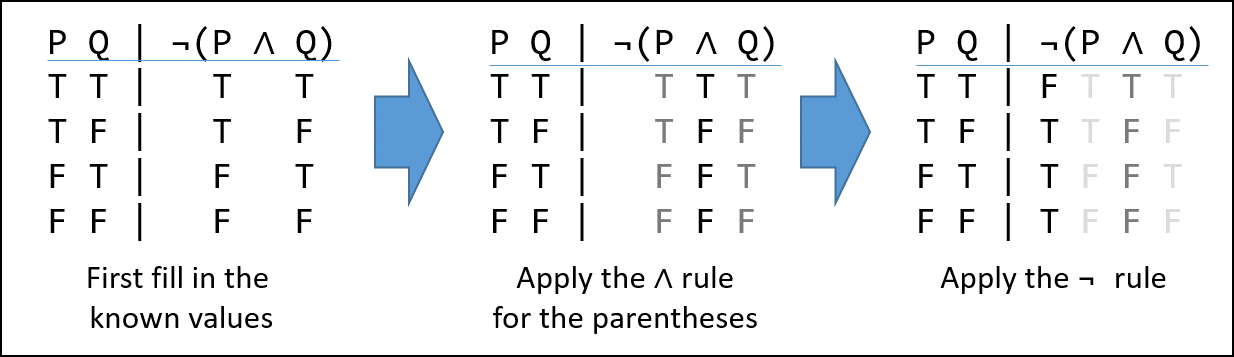

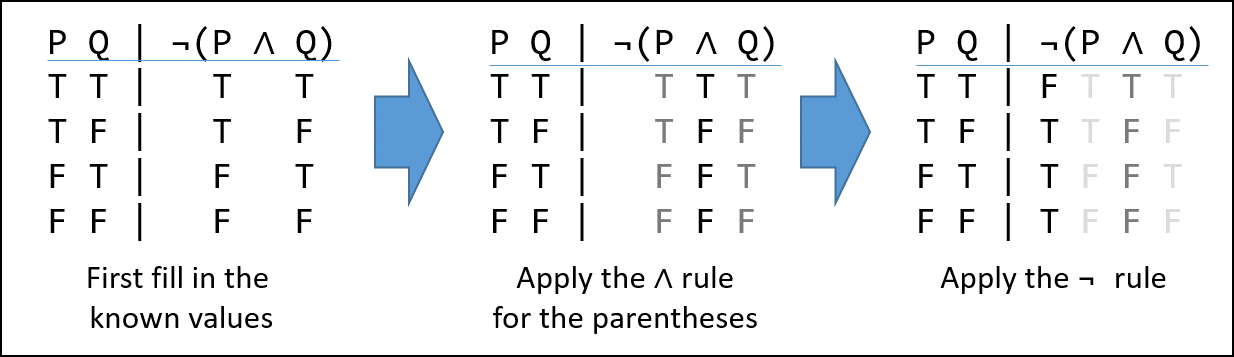

We can work out the outputs in stages, like this:

We begin by writing the value of each set of inputs on the left, under their corresponding symbol on the right. Next we apply the operator (gate) with the highest precedence (covered in Operator Precedence in the next section). In our case the () make the AND ( ∧ ) symbol the highest.

A truth assignment is a unique permutation of the possible inputs for a system. For the ∧-gate, it is a 2-variable sequence. Considering the first row we see we have T ∧ T. Looking that up in the ∧-gate truth table we see the result is also “T”, and we record that under the ∧ symbol. We do the same thing for all the other truth assignments.

After the initial transcribing of the truth values under their respective variables, we look up the truth-values in the gate tables, not the variables. Also observe that while ∧ is symmetric – i.e. T ∧ F and F ∧ T are both false – the IMPLIES gate is not.

Now we look up the value under the ∧ symbol in the ¬ gate table. In the first row, the value under the ∧ symbol is “T”. The ¬ table turns “T” into “F”, so we record “F” under the ¬ symbol. Do this for every row and we are done.
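The two stages described above can be written out explicitly. In this sketch (the function names are made up for illustration), the AND gate is computed first and its output is then fed into the NOT gate:

```python
from itertools import product

def tf(value: bool) -> str:
    """Render a Python bool with the T/F notation used in the tables."""
    return "T" if value else "F"

def stages(p: bool, q: bool) -> tuple:
    """Evaluate ¬(P ∧ Q) in stages and return (value under ∧, value under ¬)."""
    inner = p and q    # stage 1: the AND gate inside the parentheses
    outer = not inner  # stage 2: the NOT gate applied to stage 1's output
    return inner, outer

print("P Q | ∧ | ¬")
for p, q in product([True, False], repeat=2):
    inner, outer = stages(p, q)
    print(tf(p), tf(q), "|", tf(inner), "|", tf(outer))
```

The last column matches the ¬(P ∧ Q) table shown earlier: F in the first row and T in the other three.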

Truth Tables in Logika

Now that we’ve seen the four basic logic gates and truth tables, we can put them together to build bigger truth tables for longer logical formulae.

Operator precedence

Logical operators have a defined precedence (order of operations) just as arithmetic operators do. In arithmetic, parentheses have the highest precedence, followed by exponents, then multiplication and division, and finally addition and subtraction.

Here is the precedence of the logical operators, from most important (do first) to least important (do last):

- Parentheses

- Not operator, ¬

- And operator, ∧

- Or operator, ∨

- Implies operator, →

For example, in the statement (p ∨ q) ∧ ¬p, we would evaluate the operators in the following order:

- The parentheses (which would resolve the (p ∨ q) expression)

- The not, ¬

- The and, ∧

Sometimes we have more than one of the same operator in a single statement. For example: p ∨ q ∨ r. Different operators have different rules for resolving multiple occurrences:

- Multiple parentheses - the innermost parentheses are resolved first, working from inside out.

- Multiple not (¬) operators – the rightmost ¬ is resolved first, working from right to left. For example, ¬¬p is equivalent to ¬(¬p).

- Multiple and (∧) operators – the leftmost ∧ is resolved first, working from left to right. For example, p ∧ q ∧ r is equivalent to (p ∧ q) ∧ r.

- Multiple or (∨) operators – the leftmost ∨ is resolved first, working from left to right. For example, p ∨ q ∨ r is equivalent to (p ∨ q) ∨ r.

- Multiple implies (→) operators – the rightmost → is resolved first, working from right to left. For example, p → q → r is equivalent to p → (q → r).
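The grouping rule matters most for →, because grouping it the wrong way can change the result. As a rough illustration (a Python sketch, not Logika syntax; the helper name `implies` is our own), the snippet below collects the truth assignments on which the two possible groupings of p → q → r disagree:

```python
from itertools import product

def implies(a: bool, b: bool) -> bool:
    """Material implication: false only when a is true and b is false."""
    return (not a) or b

# Collect the assignments where the right-to-left grouping, p -> (q -> r),
# disagrees with the left-to-right grouping, (p -> q) -> r.
differing = [
    (p, q, r)
    for p, q, r in product([True, False], repeat=3)
    if implies(p, implies(q, r)) != implies(implies(p, q), r)
]

for p, q, r in differing:
    print("p =", p, " q =", q, " r =", r)
```

The two groupings disagree on the assignments [F T F] and [F F F], so the right-to-left rule for → is not just a convention we can ignore.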

Top-level operator

In a logical statement, the top-level operator is the operator that is applied last (after following the precedence rules above).

For example, in the statement:

p ∨ q → ¬p ∧ r

We would evaluate first the ¬, then the ∧, then the ∨, and lastly the →. Thus the → is the top-level operator.

Classifying truth tables

In our study of logic, it will be convenient to characterize logical formulas with a description of their truth tables. We will classify each logical formula in one of three ways:

- Tautology - when all truth assignments for a logical formula are true

- Contradictory - when all truth assignments for a logical formula are false

- Contingent - when some truth assignments for a logical formula are true and some are false.

For example, p ∨ ¬ p is a tautology. Whether p is true or false, p ∨ ¬ p is always true.

On the other hand, p ∧ ¬ p is contradictory. Whether p is true or false, p ∧ ¬ p is always false.

Finally, something like p ∨ q is contingent. When p and q are both false, then p ∨ q is false. However, p ∨ q is true in every other case.

If all truth assignments for a logical formula are True, the formula is said to be a tautology.
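The three classifications can be computed mechanically by evaluating a formula on every truth assignment. Here is a minimal Python sketch of that idea (this is not how Logika works internally; the function name `classify` and the use of Python lambdas to stand in for formulas are our own assumptions):

```python
from itertools import product

def classify(formula, num_vars: int) -> str:
    """Classify a formula by evaluating it on every truth assignment."""
    results = [
        formula(*values)
        for values in product([True, False], repeat=num_vars)
    ]
    if all(results):
        return "Tautology"
    if not any(results):
        return "Contradictory"
    return "Contingent"

print(classify(lambda p: p or not p, 1))    # p ∨ ¬p
print(classify(lambda p: p and not p, 1))   # p ∧ ¬p
print(classify(lambda p, q: p or q, 2))     # p ∨ q
```

The three calls match the three examples above: a tautology, a contradictory formula, and a contingent one.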

Logika syntax

From this point forward, the course will expect you to use Logika formatted truth tables. The Logika truth table for the formula ¬(p ∧ q) is:

      *
-----------------
p q # !(p & q)
-----------------
T T # F T T T
T F # T T F F
F T # T F F T
F F # T F F F
-----------------
Contingent
T: [T F] [F T] [F F]
F: [T T]

Logika truth tables have standard format (syntax) and semantic meanings. All elements of the truth table must be included to be considered correct.

The first line should have a single asterisk (*) over the top-level operator in the formula.

Next is a line of - (minus sign) characters, which must be at least as long as the third line to avoid getting errors.

The third line contains the variables, then the # separator, then the formula (variables # formula). As Logika uses some capital letters as reserved words, you should use lower-case letters as variable names. Additionally, variables should be listed alphabetically.

The fourth line is another row of -, which is the same length as the second line.

Next come the truth assignments. Under the variables, list all possible combinations of T and F. Start with all T and progress linearly to all F. (T and F must be capitalized.)

To the right of the # on each row, fill in a truth value (T or F) under each operator in the formula, working in order of precedence (with the top-level operator applied last). Optionally, you can also fill in the values for each variable under the formula (as in the example above). However, it is only required that you fill in the truth values under each operator. Be careful to line up the truth values DIRECTLY below each operator, as Logika will reject truth tables that aren’t carefully lined up.

Under the truth assignments, put another line of - (minus sign) characters, which should be the same length as the second line.

Finally, classify the formula as either Tautology (if everything under the top-level operator is T), Contradictory (if everything under the top-level operator is F), or Contingent (if there is a mix of T and F under the top-level operator). If the formula is contingent, you must also list which truth assignments made the formula true (i.e., which truth assignments made the top-level operator T) and which truth assignments made the formula false. Follow the example above for the syntax of how to list the truth assignments for contingent examples.

Logical operators in Logika

You may notice that the example above appears to use the ! operator for NOT and the & operator for AND. However, what is shown above demonstrates what we TYPE into Logika, and not what is actually displayed. If we copy and paste the example into a new Logika file, it will look like:

      *
-----------------
p q # ¬(p ∧ q)
-----------------
T T # F T T T
T F # T T F F
F T # T F F T
F F # T F F F
-----------------
Contingent
T: [T F] [F T] [F F]
F: [T T]

Here is a summary of what keys to type in Logika for each traditional logical operator:

| Logical operator | What to TYPE in Logika | What you will SEE in Logika |
|---|---|---|
| NOT | ! | ¬ |
| OR | \| | ∨ |
| AND | & | ∧ |
| IMPLIES | ->: | →: |

In the remainder of this book, my examples will be of what you will SEE in Logika.

Logika mishandling of implies operator

While the correct order of operations for logical operations is NOT, AND, OR, IMPLIES (from highest precedence to lowest precedence), the Logika tool does not handle the precedence of the implies operator correctly. The characters used for the implies operator in Logika are ->: – however, since Logika takes advantage of Scala’s operator precedence, this means that it interprets an implies operation as having the same (higher) precedence as a minus operation. In this class, we will always use parentheses to force the implies to have the correct precedence.

This incorrect precedence will only be present in our unit on truth tables – other proofs in Logika will use a different operator for implies (__>:) to avoid the issue of being interpreted as a minus operation. (We cannot use __>: in truth tables as the rendering makes the column alignment unclear.)

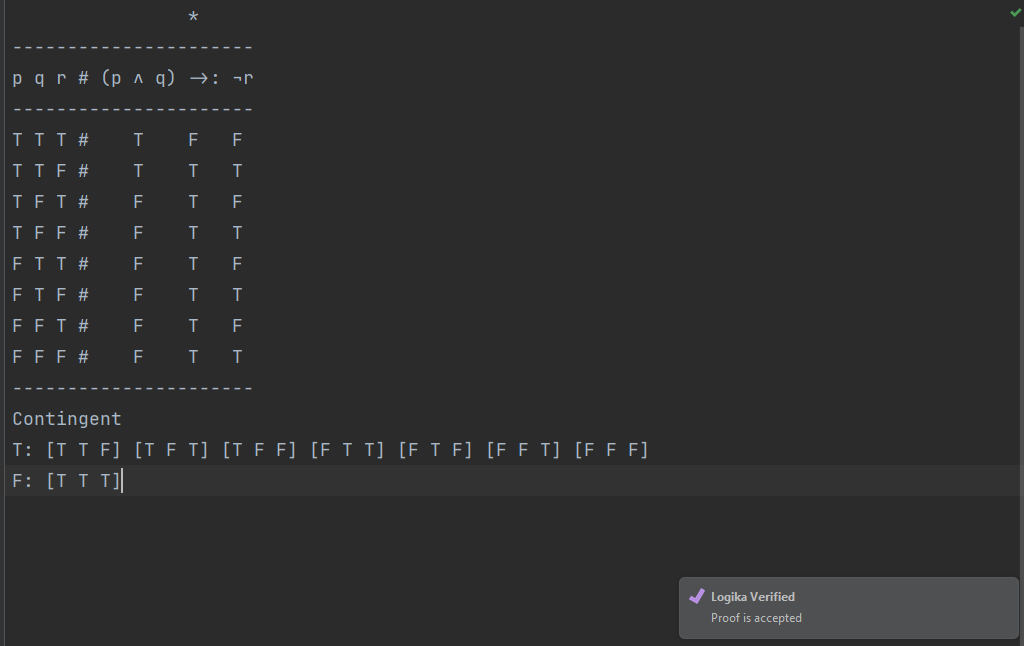

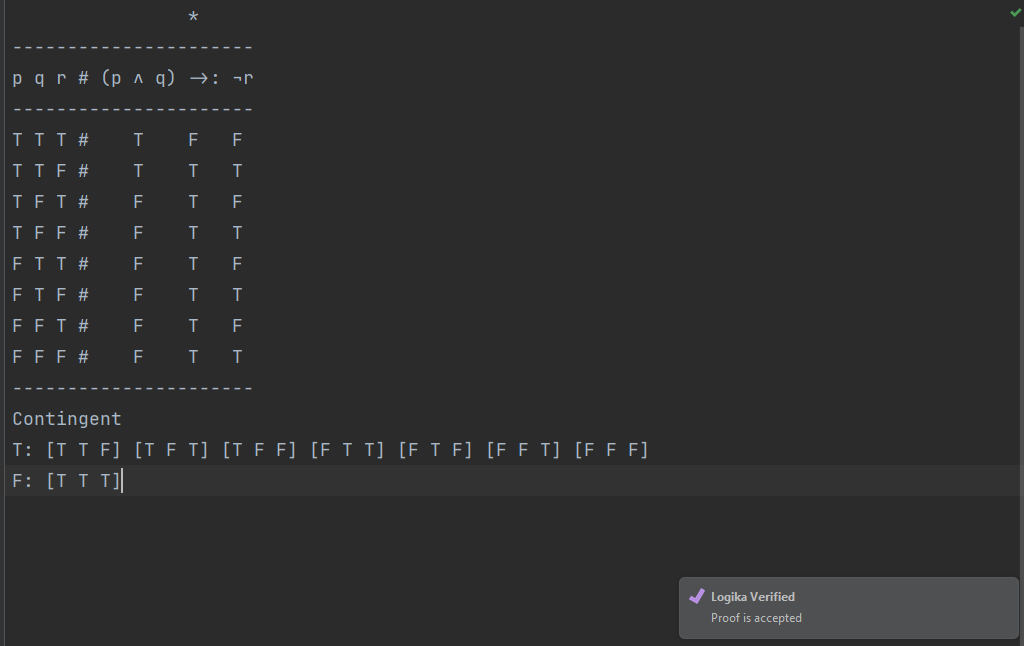

Example

Suppose we want to write a Logika truth table for:

(p ∧ q) → ¬r

First, we make sure we have a new file in Sireum with the .logika extension. Then, we construct this truth table shell:

                *
----------------------
p q r # (p ∧ q) →: ¬r
----------------------
T T T #
T T F #
T F T #
T F F #
F T T #
F T F #
F F T #
F F F #
----------------------

In the table above, we noticed that the → operator was the top-level operator according to our operator precedence rules.

Next, we fill in the output for the corresponding truth assignment under each operator, from highest precedence to lowest precedence. First, we evaluate the parentheses, which have the highest precedence. For example, we put a T under the ∧ in the first row, as p and q are both T in that row, and T ∧ T is T:

                *
----------------------
p q r # (p ∧ q) →: ¬r
----------------------
T T T #    T
T T F #    T
T F T #    F
T F F #    F
F T T #    F
F T F #    F
F F T #    F
F F F #    F
----------------------

In this example, we are only filling in under each operator (instead of also transcribing over each variable value), but either approach is acceptable.

Next, we fill in under the ¬ operator, which has the next-highest precedence:

                *
----------------------
p q r # (p ∧ q) →: ¬r
----------------------
T T T #    T       F
T T F #    T       T
T F T #    F       F
T F F #    F       T
F T T #    F       F
F T F #    F       T
F F T #    F       F
F F F #    F       T
----------------------

Then, we fill in under our top-level operator, the →. Notice that we must line up the T/F values under the → in the →: symbol. For example, we put an F under the →: on the first row, as (p ∧ q) is T there and ¬r is F, and we know that T→F is F because it describes a broken promise.

                *
----------------------
p q r # (p ∧ q) →: ¬r
----------------------
T T T #    T    F  F
T T F #    T    T  T
T F T #    F    T  F
T F F #    F    T  T
F T T #    F    T  F
F T F #    F    T  T
F F T #    F    T  F
F F F #    F    T  T
----------------------

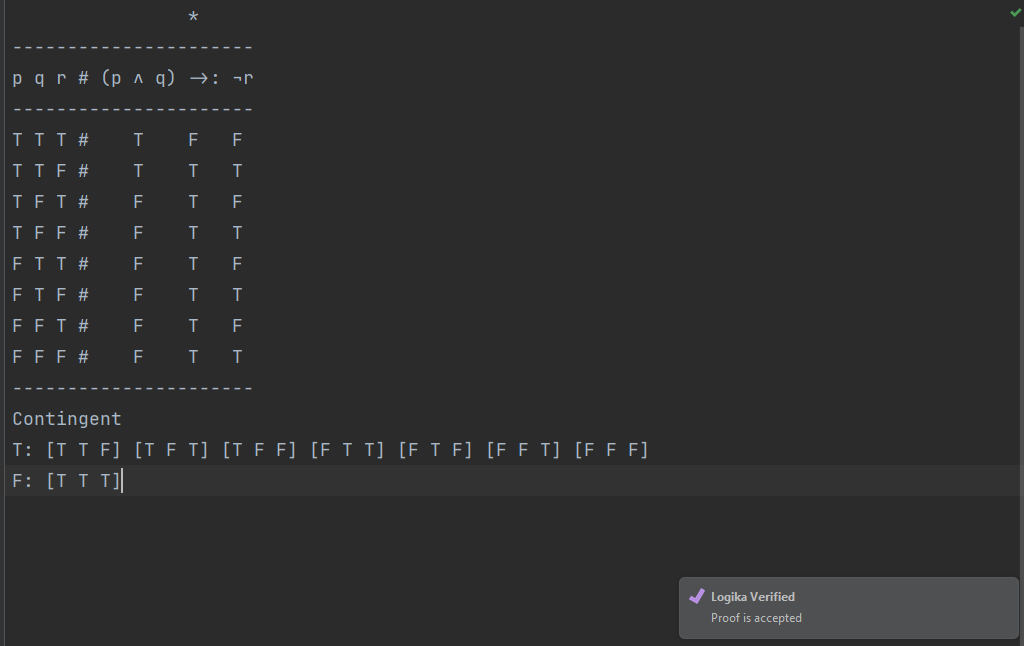

Lastly, we examine the list of outputs under the top-level operator. We see that some truth assignments made the formula true, and that others (one) made the formula false. Thus, the formula is contingent. We label it as such, and list which truth assignments made the formula true and which made it false:

                *
----------------------
p q r # (p ∧ q) →: ¬r
----------------------
T T T #    T    F  F
T T F #    T    T  T
T F T #    F    T  F
T F F #    F    T  T
F T T #    F    T  F
F T F #    F    T  T
F F T #    F    T  F
F F F #    F    T  T
----------------------
Contingent
T: [T T F] [T F T] [T F F] [F T T] [F T F] [F F T] [F F F]
F: [T T T]

If you typed everything correctly and run a Logika check (Ctrl-Shift-W on Windows and Command-Shift-W on Mac), you should see a popup in Sireum Logika that says: “Logika Verified” (yours will not have the purple checkmark, but will have the same text).

If you instead see red error markings, hover over them and read the explanations – it means there are errors in your truth table.
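If you want to double-check the T: and F: lists before typing them into Logika, you can enumerate the truth assignments with a short program. The following is a Python sketch for the example formula (p ∧ q) → ¬r (it is not Logika code, and the helper names `implies`, `formula`, and `label` are our own):

```python
from itertools import product

def implies(a: bool, b: bool) -> bool:
    """Material implication: false only when a is true and b is false."""
    return (not a) or b

def formula(p: bool, q: bool, r: bool) -> bool:
    # (p ∧ q) → ¬r
    return implies(p and q, not r)

def label(values) -> str:
    """Render one truth assignment in the bracketed style, e.g. [T T F]."""
    return "[" + " ".join("T" if v else "F" for v in values) + "]"

true_rows, false_rows = [], []
# product([True, False], ...) starts at all T and ends at all F,
# which is the same row order used in the truth tables above.
for values in product([True, False], repeat=3):
    (true_rows if formula(*values) else false_rows).append(label(values))

print("T:", " ".join(true_rows))
print("F:", " ".join(false_rows))
```

The printed lists agree with the table: only [T T T] makes the formula false.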

Satisfiability

We say that a logical statement is satisfiable when there exists at least one truth assignment that makes the overall statement true.

In our Logika truth tables, this corresponds to statements that are either contingent or a tautology. (Contradictory statements are NOT satisfiable.)

For example, consider the following truth tables:

          *
-------------------------
p q r # p →: (q ∨ ¬r ∧ p)
-------------------------
T T T #   T     T F  F
T T F #   T     T T  T
T F T #   F     F F  F
T F F #   T     T T  T
F T T #   T     T F  F
F T F #   T     T T  F
F F T #   T     F F  F
F F F #   T     F T  F
-------------------------
Contingent
T: [T T T] [T T F] [T F F] [F T T] [F T F] [F F T] [F F F]
F: [T F T]

And

      *
------------
p # p ∨ ¬p
------------
T #   T F
F #   T T
------------
Tautology

Both of these statements are satisfiable, as each has at least one truth assignment that makes the overall statement true.
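Checking satisfiability amounts to asking whether any row of the truth table comes out true. As a small Python sketch of that check (not Logika code; `is_satisfiable` and `implies` are names we made up for illustration):

```python
from itertools import product

def implies(a: bool, b: bool) -> bool:
    """Material implication: false only when a is true and b is false."""
    return (not a) or b

def is_satisfiable(formula, num_vars: int) -> bool:
    """True if at least one truth assignment makes the formula true."""
    return any(
        formula(*values)
        for values in product([True, False], repeat=num_vars)
    )

# p → (q ∨ ¬r ∧ p), the contingent example
first = is_satisfiable(lambda p, q, r: implies(p, q or (not r and p)), 3)
# p ∨ ¬p, the tautology
second = is_satisfiable(lambda p: p or not p, 1)
# p ∧ ¬p, a contradictory statement
third = is_satisfiable(lambda p: p and not p, 1)

print(first, second, third)
```

The contingent statement and the tautology are both satisfiable, while the contradictory statement p ∧ ¬p is not.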

Logical Equivalence

Two (or more) logical statements are said to be logically equivalent IFF (if and only if, ↔) they have the same truth value for every truth assignment; i.e., their truth tables evaluate exactly the same. (We sometimes refer to this as semantic equivalence.)

The statements p ∧ q and p ∧ (q ∧ p) are an example of logically equivalent statements:

         *
--------------
p q # (p ∧ q)
--------------
T T #    T
T F #    F
F T #    F
F F #    F
--------------
Contingent
T: [T T]
F: [F F] [F T] [T F]

        *
-------------------
p q # p ∧ (q ∧ p)
-------------------
T T #   T    T
T F #   F    F
F T #   F    F
F F #   F    F
-------------------
Contingent
T: [T T]
F: [F F] [F T] [T F]

In these examples, notice that exactly the same set of truth assignments makes both statements true, and that exactly the same set of truth assignments makes both statements false.
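Comparing two truth tables row by row is easy to automate. Here is a minimal Python sketch (not Logika code; the function name `equivalent` is our own) that tests whether two formulas agree on every truth assignment:

```python
from itertools import product

def equivalent(f, g, num_vars: int) -> bool:
    """True if f and g have the same truth value for every truth assignment."""
    return all(
        f(*values) == g(*values)
        for values in product([True, False], repeat=num_vars)
    )

# p ∧ q compared with p ∧ (q ∧ p)
same = equivalent(lambda p, q: p and q, lambda p, q: p and (q and p), 2)
# p ∧ q compared with p ∨ q, which differ on [T F] and [F T]
different = equivalent(lambda p, q: p and q, lambda p, q: p or q, 2)

print(same, different)
```

The first pair is logically equivalent and the second is not, since p ∨ q is true on assignments where p ∧ q is false.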

Finding equivalent logical statements with fewer gates (states) is important to several fields. In computer science, fewer states can lead to less memory, fewer operations, and smaller programs. In computer engineering, fewer gates mean fewer circuits, less power, and less heat.

Common equivalences

We can similarly use truth tables to show the following common logical equivalences:

- Double negative: ¬ ¬ p and p

- Contrapositive: p → q and ¬ q → ¬ p

- Expressing an implies using an OR: p → q and ¬ p ∨ q

- One of DeMorgan’s laws: ¬ (p ∧ q) and ( ¬ p ∨ ¬ q)

- Another of DeMorgan’s laws: ¬ (p ∨ q) and ( ¬ p ∧ ¬ q)
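Each of these equivalences can be confirmed by brute force over the four truth assignments for p and q. The Python sketch below does that (it is not Logika code; the dictionary `equivalences` and the lookup-table definition of `implies` are our own choices, with the table copied from the IMPLIES gate so the check does not assume the result it is testing):

```python
from itertools import product

# Truth table for the IMPLIES gate, written out explicitly.
IMPLIES_TABLE = {
    (True, True): True,
    (True, False): False,
    (False, True): True,
    (False, False): True,
}

def implies(a: bool, b: bool) -> bool:
    return IMPLIES_TABLE[(a, b)]

# Each entry pairs the two sides of one equivalence from the list above.
equivalences = {
    "double negative": (lambda p, q: not (not p), lambda p, q: p),
    "contrapositive": (lambda p, q: implies(p, q), lambda p, q: implies(not q, not p)),
    "implies as OR": (lambda p, q: implies(p, q), lambda p, q: (not p) or q),
    "DeMorgan (and)": (lambda p, q: not (p and q), lambda p, q: (not p) or (not q)),
    "DeMorgan (or)": (lambda p, q: not (p or q), lambda p, q: (not p) and (not q)),
}

results = {}
for name, (lhs, rhs) in equivalences.items():
    # The two sides are equivalent when they agree on every assignment.
    results[name] = all(
        lhs(p, q) == rhs(p, q)
        for p, q in product([True, False], repeat=2)
    )
    print(name, "->", results[name])
```

Every entry reports True, which is the same conclusion we would reach by writing out the ten truth tables by hand.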

Expressing additional operators

The bi-implication (↔) and exclusive or (⊕) operators are not directly used in this course. However, we can simulate both operators using a combination of ¬, ∧, ∨, and →:

- p ↔ q, which means “p if and only if q”, can be expressed as (p → q) ∧ (q → p)

- p ⊕ q, which means “p exclusive or q”, can be expressed as (p ∨ q) ∧ ¬(p ∧ q)
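To see that these simulations behave as intended, we can compute their truth-table columns. In the Python sketch below (not Logika code; `implies`, `iff_column`, and `xor_column` are names we introduce for illustration), the bi-implication column should be true exactly when p and q match, and the exclusive-or column exactly when they differ:

```python
from itertools import product

# Truth table for the IMPLIES gate, written out explicitly.
IMPLIES_TABLE = {
    (True, True): True,
    (True, False): False,
    (False, True): True,
    (False, False): True,
}

def implies(a: bool, b: bool) -> bool:
    return IMPLIES_TABLE[(a, b)]

iff_column = []
xor_column = []
# Rows are visited in the order [T T], [T F], [F T], [F F].
for p, q in product([True, False], repeat=2):
    iff_column.append(implies(p, q) and implies(q, p))   # (p → q) ∧ (q → p)
    xor_column.append((p or q) and not (p and q))         # (p ∨ q) ∧ ¬(p ∧ q)

print(iff_column)
print(xor_column)
```

Reading down the rows, the first column is T F F T and the second is F T T F, so the two simulated operators are exact opposites of each other.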

Semantic Entailment

Definition

We say a set of premises, p1, p2, …, pn, semantically entail a conclusion c, and we write:

p1, p2, …, pn ⊨ c

if whenever we have a truth assignment that makes p1, p2, …, pn all true, then c is also true for that truth assignment.

(Note: we can use the ASCII replacement |= instead of the Unicode ⊨, if we want.)

Showing semantic entailment

Suppose we have premises p ∧ q and p → r. We want to see if these premises necessarily entail the conclusion r ∧ q.

First, we could make truth tables for each premise (being sure to list the variables p, q and r in each case, as that is the overall set of variables in the problem):

//truth table for premise, p ∧ q

          *
----------------------
p q r # p ∧ q
----------------------
T T T #   T
T T F #   T
T F T #   F
T F F #   F
F T T #   F
F T F #   F
F F T #   F
F F F #   F
----------------------
Contingent
T: [T T T] [T T F]
F: [T F T] [T F F] [F T T] [F T F] [F F T] [F F F]

//truth table for premise, p → r

          *
----------------------
p q r # p →: r
----------------------
T T T #   T
T T F #   F
T F T #   T
T F F #   F
F T T #   T
F T F #   T
F F T #   T
F F F #   T
----------------------
Contingent
T: [T T T] [T F T] [F T T] [F T F] [F F T] [F F F]
F: [T T F] [T F F]

Now, we notice that the truth assignment [T T T] is the only one that makes both premises true. Next, we make a truth table for our potential conclusion, r ∧ q (again, being sure to include all variables used in the problem):

//truth table for potential conclusion, r ∧ q

          *
----------------------
p q r # r ∧ q
----------------------
T T T #   T
T T F #   F
T F T #   F
T F F #   F
F T T #   T
F T F #   F
F F T #   F
F F F #   F
----------------------
Contingent
T: [T T T] [F T T]
F: [T T F] [T F T] [T F F] [F T F] [F F T] [F F F]

Here, we notice that the truth assignment [T T T] makes the conclusion true as well. So we see that whenever there is a truth assignment that makes all of our premises true, then that same truth assignment also makes our conclusion true.

Thus, p ∧ q and p → r semantically entail the conclusion r ∧ q, and we can write:

p ∧ q, p → r ⊨ r ∧ q

Semantic entailment with one truth table

The process of making separate truth tables for each premise and the conclusion, and then examining each one to see if any truth assignment that makes all the premises true also makes the conclusion true, is fairly tedious.

We are trying to show that IF each premise is true, THEN we promise the conclusion is true. This sounds exactly like an IMPLIES statement, and in fact that is what we can use to simplify our process. If we are trying to show that p1, p2, …, pn semantically entail a conclusion c (i.e., that p1, p2, ..., pn ⊨ c), then we can instead create ONE truth table for the statement:

(p1 ∧ p2 ∧ ... ∧ pn) → c

If this statement is a tautology (which would mean that anytime all the premises were true, then the conclusion was also true), then we would also have that the premises semantically entail the conclusion.

In our previous example, we create a truth table for the statement (p ∧ q) ∧ (p → r) → r ∧ q:

                             *
---------------------------------------
p q r # ((p ∧ q) ∧ (p →: r)) →: (r ∧ q)
---------------------------------------
T T T #     T    T    T      T     T
T T F #     T    F    F      T     F
T F T #     F    F    T      T     F
T F F #     F    F    F      T     F
F T T #     F    F    T      T     T
F T F #     F    F    T      T     F
F F T #     F    F    T      T     F
F F F #     F    F    T      T     F
---------------------------------------
Tautology

Then we see that it is indeed a tautology.
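Both approaches to semantic entailment can be expressed in a few lines of code. The Python sketch below (not Logika code; `entails`, `implies`, and the variable names are our own) first checks the definition directly, then checks that the single combined implication is a tautology, using the premises and conclusion from this section:

```python
from itertools import product

def implies(a: bool, b: bool) -> bool:
    """Material implication: false only when a is true and b is false."""
    return (not a) or b

def entails(premises, conclusion, num_vars: int) -> bool:
    """True if every assignment that makes all premises true also makes the conclusion true."""
    return all(
        conclusion(*values)
        for values in product([True, False], repeat=num_vars)
        if all(premise(*values) for premise in premises)
    )

premises = [
    lambda p, q, r: p and q,         # p ∧ q
    lambda p, q, r: implies(p, r),   # p → r
]
conclusion = lambda p, q, r: r and q  # r ∧ q

by_definition = entails(premises, conclusion, 3)

# One-table approach: (p1 ∧ p2) → c must be true on every row.
by_single_table = all(
    implies(all(premise(*values) for premise in premises), conclusion(*values))
    for values in product([True, False], repeat=3)
)

# A premise set that does NOT entail its conclusion: p ∨ q does not entail p.
counterexample = entails([lambda p, q, r: p or q], lambda p, q, r: p, 3)

print(by_definition, by_single_table, counterexample)
```

The first two results agree, as the argument above says they must, and the last check fails because the assignment with p false and q true makes p ∨ q true but p false.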

Subsections of Propositional Logic Translations

Propositional Atoms

Definition

A propositional atom is a statement that is either true or false, and that contains no logical connectives (like and, or, not, if/then).

Examples of propositional atoms

For example, the following are propositional atoms:

- My shirt is red.

- It is sunny.

- Pigs can fly.

- I studied for the test.

Examples of what are NOT propositional atoms

Propositional atoms should not contain any logical connectives. If they did, this would mean we could have further subdivided the statement into multiple propositional atoms that could be joined with logical operators. For example, the following are NOT propositional atoms:

- It is not summer. (contains a not)

- Bob has brown hair and brown eyes. (contains an and)

- I walk to school unless it rains. (contains the word unless, which has if…then information)

Propositional atoms also must be either true or false – they cannot be questions, commands, or sentence fragments. For example, the following are NOT propositional atoms:

- What time is it? (contains a question - not a true/false statement)

- Go to the front of the line. (contains a command - not a true/false statement)

- Fluffy cats (contains a sentence fragment - not a true/false statement)

Identifying propositional atoms

If we are given several sentences, we identify their propositional atoms by finding the key statements that can be either true or false. We further ensure that these statements do not contain any logical connectives (and, or, not, if/then information) - if they do, we break the statement down further. We then assign letters to each proposition.

For example, if we have the sentences:

My jacket is red and green. I only wear my jacket when it is snowing. It did not snow today.

Then we identify the following propositional atoms:

p: My jacket is red

q: My jacket is green

r: I wear my jacket

s: It is snowing

t: It snowed today

Notice that the first sentence, “My jacket is red and green”, contained the logical connective “and”. Thus, we broke that idea into its components, and got propositions p and q. The second sentence, “I only wear my jacket when it is snowing”, contained if/then information about when I would wear my jacket. We broke that sentence into two parts as well, and got propositions r and s. Finally, the last sentence, “It did not snow today”, contained the logical connective “not” – so we removed it and kept the remaining information for proposition t.

Each propositional atom is a true/false statement, just as is required.

In the next section, we will see how to complete our translation from English to propositional logic by connecting our propositional atoms with logical operators.

NOT, AND, OR Translations

Now that we have seen how to identify propositional atoms in English sentences, we will learn how to connect these propositions with logical operators in order to complete the process of translating from English to propositional logic.

NOT translations

When you see the word “not” or the prefixes “un-” and “ir-”, replace them with a NOT operator.

Example 1

For example, if we have the sentence:

I am not going to work today.

Then we would first identify the propositional atom:

p: I am going to work today

and would then use a NOT operator to express the negation. Our full translation to propositional logic would be: ¬p

Example 2

As another example, suppose we have the sentence:

My sweater is irreplaceable.

We would identify the propositional atom:

p: My sweater is replaceable.

And again, our complete translation would be: ¬p

AND translations

When you see the words “and”, “but”, “however”, “moreover”, “nevertheless”, etc., then the English sentence is expressing a conjunction of ideas. When translating to propositional logic, all of these words should be replaced with a logical AND operator.

It might seem strange that the sentences “It is cold and it is sunny” and “It is cold but it is sunny” should be translated the same way – but really, both sentences are expressing two facts:

- It is cold

- It is sunny

Using “but” instead of “and” in English adds a subtle comparison of the first fact to the second fact, but such nuances are beyond the capabilities of propositional logic (and are somewhat ambiguous anyway).

Example 1

Suppose we want to translate the following sentence to propositional logic:

I like cake but I don't like cupcakes.

We would first identify two propositional atoms:

p: I like cake

q: I like cupcakes

We would then translate the clause “I don’t like cupcakes” to ¬q, and then would translate the connective “but” to a logical AND operator. We would finish with the following translation:

p ∧ ¬q

Example 2

Suppose we want to translate the following sentence to propositional logic:

The school doesn't have both a pool and a track.

We would first identify two propositional atoms:

p: The school has a pool

q: The school has a track

We would then see that we are really taking the sentence, “The school has a pool and a track” and negating it, which leaves us with the following translation:

¬(p ∧ q)

OR translations

When you see the word “or” in a sentence, or some other clear disjunction of statements, then you will translate it to a logical OR operator. Because the word “or” in English can be ambiguous, we first need to determine whether the “or” is inclusive (in which case we would replace it with a regular OR operator) or exclusive (in which case we need to add a clause to explicitly express that both statements cannot be true).

As we saw in section 1.1, the word “or” in an English sentence is usually meant to be exclusive. However, because the logical OR is INclusive, and since the purpose of this class is not to have you wrestle with subtleties of the English language, then you can assume that an “or” in a sentence is inclusive unless clearly stated otherwise.

Inclusive OR statements

Suppose we want to translate the following sentence to propositional logic:

You watch a movie and/or eat a snack.

We would first identify two propositional atoms:

p: You watch a movie

q: You eat a snack

The “and/or” in our sentence makes it extremely clear that the intent is an inclusive or, since the sentence is true if you both watch a movie and eat a snack. This leaves us with the following translation:

p ∨ q

Exclusive OR statements

In this class, if the meaning of “or” in a sentence is meant to be exclusive, then the sentence will clearly state that the two statements aren’t both true.

For example, suppose we want to translate the following sentence to propositional logic:

On Saturday, Jane goes for a run or plays basketball, but not both.

We would first identify two propositional atoms:

p: Jane goes for a run on Saturday

q: Jane plays basketball on Saturday

We then apply our equivalence for simulating an exclusive or operator, which we saw in section 2.4. This leaves us with the following translation:

(p ∨ q) ∧ ¬(p ∧ q)

Implies Translations

In this section, we will learn when to use an implies (→) operator when translating from English to propositional logic. In general, you will want to use an implies operator any time a sentence is making a promise – if one thing happens, then we promise that another thing will happen. The trick is to figure out the direction of the promise – promising that if p happens, then q will happen is subtly different from promising that if q happens, then p will happen.

Look for the words “if”, “only if”, “unless”, “except”, and “provided” as clues that the propositional logic translation will use an implies operator.

IF p THEN q statements

An “IF p THEN q” statement is promising that if p is true, then we can infer that q is also true. (It is making NO claims about what we can infer if we know q is true.)

For example, consider the following sentence:

If it is hot today, then I'll get ice cream.

We first identify the following propositional atoms:

p: It is hot today

q: I'll get ice cream

To determine the order of the implication, we think about what is being promised – if it is hot, then we can infer that ice cream will happen. But if we get ice cream, then we have no idea what the weather is like. It might be hot, but it might also be cold and I just decided to get ice cream anyway. Thus we finish with the following translation:

p → q

Alternatively, if we don’t get ice cream, we can be certain that it wasn’t hot – since we are promised that if it is hot, then we will get ice cream. So an equivalent translation is:

¬q → ¬p

(This form is called the contrapositive, which we learned about in section 2.4. We’ll study it and other equivalent translations more in the next section.)

p IF q statements

A “p IF q” statement is promising that if q is true, then we can infer that p is also true. (It is making NO claims about what we can infer if we know p is true.) Equivalent ways of expressing the same promise are “p PROVIDED q” and “p WHEN q”.

For example, consider the following sentence:

You can login to a CS lab computer if you have a CS account.

We first identify the following propositional atoms:

p: You can login to a CS lab computer

q: You have a CS account

To determine the order of the implication, we think about what conditions need to be met in order for us to be promised that we can login. We see that if we have a CS account, then we are promised to be able to login. Thus we finish with the following translation:

q → p

In this example, if we knew we could login to a CS lab computer, we wouldn’t be certain that we had a CS account. There might be other reasons we can login – maybe you can use your eID account instead, for example.

Alternatively, if we can’t login, we can be certain that we don’t have a CS account. After all, we are guaranteed to be able to login if we do have a CS account. So another valid translation is:

¬p → ¬q

p ONLY IF q

A “p ONLY IF q” statement is promising that the only time p happens is when q also happens. So if p does happen, it must be the case that q did too (since p can’t happen without q happening too).

For example, consider the following sentence:

Wilma eats cookies only if Evelyn makes them.

We first identify the following propositional atoms:

p: Wilma eats cookies

q: Evelyn makes cookies

What conditions need to be met for Wilma to eat cookies? If Wilma is eating cookies, Evelyn must have made cookies – after all, we know that Wilma only eats Evelyn’s cookies. Thus we finish with the following translation:

p → q

Equivalently, we are certain that if Evelyn DOESN’T make cookies, then Wilma won’t eat them – since she only eats Evelyn’s cookies. We can also write:

¬q → ¬p

However, if we know that Evelyn makes cookies, we can’t be sure that Wilma will eat them. We know she won’t eat any other kind of cookie, but maybe Wilma is full today and won’t even eat Evelyn’s cookies…we can’t be sure.

p UNLESS q, p EXCEPT IF q

The statements “p UNLESS q” and “p EXCEPT IF q” are equivalent…and both can sometimes be ambiguous. For example, consider the following sentence:

I will bike to work unless it is raining.

We first identify the following propositional atoms:

p: I will bike to work

q: It is raining

Next, we consider what exactly is being promised:

- If it isn’t raining, will I bike to work? YES. I promise to bike to work whenever it isn’t raining.

- If I don’t bike to work, is it raining? YES. I always bike when it’s not raining, so if I don’t bike, it must be raining.

- If it’s raining, will I necessarily not bike to work? Well, maybe? Some people might interpret the sentence as saying I’ll get to work in another way if it’s raining, and others might think no promise has been made if it is raining.

- If I bike to work, is it necessarily not raining? Again, maybe? It’s not clear if I’m promising to only bike in non-rainy weather.

As we did with ambiguous OR statements, we will establish a rule in this class for interpreting “unless” statements that will let us resolve ambiguity.

If you see the word unless when you are doing a translation, replace it with the words unless possibly if.

If we rephrase the sentence as:

I will bike to work *unless possibly if* it is raining.

We see that there is clearly NO promise about whether I will bike in the rain. I might, or I might not – but the only thing that I am promising is that I will bike if it is not raining, or that IF it’s not raining, THEN I will bike. With that in mind, we can complete our translation:

¬q → p

Equivalently, if we don’t bike, then we are certain that it must be raining – since we have promised to ride our bike every other time. We can also write:

¬p → q

Equivalent Translations

As we saw in section 2.4, two logical statements are said to be logically equivalent if and only if they have the same truth value for every truth assignment.

We can extend this idea to our propositional logic translations – two (English) statements are said to be equivalent iff they have the same underlying meaning, and iff their translations to propositional logic are logically equivalent.

Common equivalences, revisited

We previously identified the following common logical equivalences:

- Double negative: ¬ ¬ p and p

- Contrapositive: p → q and ¬ q → ¬ p

- Expressing an implies using an OR: p → q and ¬ p ∨ q

- One of DeMorgan’s laws: ¬ (p ∧ q) and ( ¬ p ∨ ¬ q)

- Another of DeMorgan’s laws: ¬ (p ∨ q) and ( ¬ p ∧ ¬ q)

Equivalence example 1

Suppose we have the following propositional atoms:

p: I get cold

q: It is summer

Consider the following three statements:

- I get cold except possibly if it is summer.

- If it’s not summer, then I get cold.

- I get cold or it is summer.

We translate each sentence to propositional logic:

I get cold except possibly if it is summer.

- Translation: ¬p → q

- Meaning: I promise that if I don’t get cold, then it must be summer…because I am always cold when it’s not summer.

If it’s not summer, then I get cold.

- Translation: ¬q → p

- Meaning: I promise that anytime it isn’t summer, then I will get cold.

I get cold or it is summer.

- Translation: p ∨ q

- Meaning: I’m either cold or it’s summer…because my being cold is true every time it isn’t summer.

As we can see, each of these statements is expressing the same idea.
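One way to check a claimed equivalence like this is to compare truth-table columns. The Python sketch below (not Logika code; the lookup-table `implies` and the dictionary `translations` are our own) does so for ¬q → p, its contrapositive ¬p → q, and p ∨ q, with p standing for “I get cold” and q for “It is summer”:

```python
from itertools import product

# Truth table for the IMPLIES gate, written out explicitly.
IMPLIES_TABLE = {
    (True, True): True,
    (True, False): False,
    (False, True): True,
    (False, False): True,
}

def implies(a: bool, b: bool) -> bool:
    return IMPLIES_TABLE[(a, b)]

translations = {
    "¬q → p": lambda p, q: implies(not q, p),
    "¬p → q": lambda p, q: implies(not p, q),
    "p ∨ q": lambda p, q: p or q,
}

# One column of truth values per translation, in the row order
# [T T], [T F], [F T], [F F].
columns = {
    name: [f(p, q) for p, q in product([True, False], repeat=2)]
    for name, f in translations.items()
}

for name, column in columns.items():
    print(name, " ".join("T" if v else "F" for v in column))
```

All three columns read T T T F: each statement is false only when I am not cold and it is not summer.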

Equivalence example 2

Suppose we have the following propositional atoms:

p: I eat chips

q: I eat fries

Consider the following two statements:

- I don’t eat both chips and fries.

- I don’t eat chips and/or I don’t eat fries.

We translate each sentence to propositional logic:

- I don’t eat both chips and fries: ¬(p ∧ q)

- I don’t eat chips and/or I don’t eat fries: ¬p ∨ ¬q

These statements are clearly expressing the same idea – if it’s not the case that I eat both, then it’s also true that there is at least one of the foods that I don’t eat. This is an application of one of DeMorgan’s laws: that ¬ (p ∧ q) is equivalent to ( ¬ p ∨ ¬ q).

If we were to create truth tables for both ¬(p ∧ q) and ¬p ∨ ¬q, we would see that they are logically equivalent (that the same truth assignments make each statement true).

Equivalence example 3

Using the same propositional atoms as example 2, we consider two more statements:

- I don’t eat chips or fries.

- I don’t eat chips and I don’t eat fries.

We translate each sentence to propositional logic:

- I don’t eat chips or fries: ¬(p ∨ q)

- I don’t eat chips and I don’t eat fries: ¬p ∧ ¬q

These propositions are clearly expressing the same idea – I have two foods (chips and fries), and I don’t eat either one. This demonstrates another of DeMorgan’s laws: that ¬ (p ∨ q) is equivalent to ( ¬ p ∧ ¬ q). If we were to create truth tables for each proposition, we would see that they are logically equivalent as well.

Knights and Knaves, revisited

Recall the Knights and Knaves puzzles from section 1.2. In addition to solving these puzzles by hand, we can devise a strategy to first translate a Knights and Knaves puzzle to propositional logic, and then solve the puzzle using a truth table.

Identifying propositional atoms

To translate a Knights and Knaves puzzle to propositional logic, we first create a propositional atom for each person that represents whether that person is a knight. For example, if our puzzle included the people “Adam”, “Bob”, and “Carly”, then we might create propositional atoms a, b, and c:

a: Adam is a knight

b: Bob is a knight

c: Carly is a knight

Translating statements

Once we have our propositional atoms, we can translate each statement in the puzzle to propositional logic. For each one, we want to capture that the statement is true IF AND ONLY IF the person speaking is a knight. (That way, the statement would be false whenever the person was not a knight – i.e., when they were a knave.) We recall that we can express if and only if using a conjunction of implications. So if we want to write p if and only if q, then we can say (p → q) ∧ (q → p).

As an example, suppose we have the following statement:

Adam says: Bob is a knight and Carly is a knave.

Adam’s statement should be true if and only if he is a knight, so we can translate it as follows:

(a → (b ∧ ¬c)) ∧ ((b ∧ ¬c) → a)

Which reads as:

If I am a knight, then Bob is a knight and Carly is a knave. Also, if Bob is a knight and Carly is a knave, then I am a knight.

We repeat this process for each statement in the puzzle. Finally, since we solve a Knights and Knaves puzzle by finding a truth assignment (i.e., assignment of who is a knight and who is a knave) that works for ALL statements, then we finish by AND-ing together our translations for each speaker. When we fill in the truth table for our final combined proposition, then a valid solution to the puzzle is any truth assignment that makes the overall proposition true. If it was a well-made puzzle, then there should only be one such truth assignment.

Full example

Suppose we meet two people on the Island of Knights and Knaves – Ava and Bob.

Ava says, "Bob and I are not the same".

Bob says, "Of Ava and I, exactly one is a knight."

We first create a propositional atom for each person:

a: Ava is a knight

b: Bob is a knight

Then, we translate each statement:

- Ava says, "Bob and I are not the same."

- Translation:

(a → (a ∧ ¬b ∨ ¬a ∧ b)) ∧ ((a ∧ ¬b ∨ ¬a ∧ b) → a)

- Meaning: If Ava is a knight, then either Ava is a knight and Bob is a knave, or Ava is a knave and Bob is a knight (so they aren’t the same type). Also, if Ava and Bob aren’t the same type, then Ava must be a knight (because her statement would be true).

- Bob says, "Of Ava and I, exactly one is a knight."

- Bob is really saying the same thing as Ava: if exactly one is a knight, then either Ava is a knight and Bob is a knave, or Ava is a knave and Bob is a knight.

- Translation:

(b → (a ∧ ¬b ∨ ¬a ∧ b)) ∧ ((a ∧ ¬b ∨ ¬a ∧ b) → b)

We combine our translations for Ava and Bob and end up with the following propositional logic statement:

(a → (a ∧ ¬b ∨ ¬a ∧ b)) ∧ ((a ∧ ¬b ∨ ¬a ∧ b) → a) ∧ (b → (a ∧ ¬b ∨ ¬a ∧ b)) ∧ ((a ∧ ¬b ∨ ¬a ∧ b) → b)

We then complete the truth table for that proposition:

                                                                                     *
---------------------------------------------------------------------------------------------------------------
a b | (a →: (a ∧ ¬b ∨ ¬a ∧ b)) ∧ ((a ∧ ¬b ∨ ¬a ∧ b) →: a) ∧ (b →: (a ∧ ¬b ∨ ¬a ∧ b)) ∧ ((a ∧ ¬b ∨ ¬a ∧ b) →: b)
---------------------------------------------------------------------------------------------------------------
T T |    F     F F  F F  F     F     F F  F F  F    T     F    F     F F  F F  F     F     F F  F F  F    T
T F |    T     T T  T F  F     T     T T  T F  F    T     T    T     T T  T F  F     F     T T  T F  F    F
F T |    T     F F  T T  T     F     F F  T T  T    F     F    T     F F  T T  T     F     F F  T T  T    T
F F |    T     F T  F T  F     T     F T  F T  F    T     T    T     F T  F T  F     T     F T  F T  F    T
---------------------------------------------------------------------------------------------------------------
Contingent
T: [F F]
F: [T T] [T F] [F T]

And we see that there is only one truth assignment that satisfies the proposition – [F F], which corresponds to Ava being a knave and Bob being a knave.
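The same search can be sketched in plain Scala (again, not Logika syntax) by enumerating the four truth assignments and keeping the ones that satisfy the combined proposition. This is only an illustrative brute-force check under the translation used in this section. AvaAndBob, implies, different, and puzzle are hypothetical names.

```scala
object AvaAndBob {
  // Material implication: p -> q is false only when p is true and q is false.
  def implies(p: Boolean, q: Boolean): Boolean = !p || q

  // "Exactly one of Ava and Bob is a knight": (a AND NOT b) OR (NOT a AND b)
  def different(a: Boolean, b: Boolean): Boolean = (a && !b) || (!a && b)

  // Conjunction of both speakers' translations.
  def puzzle(a: Boolean, b: Boolean): Boolean =
    implies(a, different(a, b)) && implies(different(a, b), a) &&
      implies(b, different(a, b)) && implies(different(a, b), b)

  // Keep only the truth assignments (a, b) that satisfy the whole puzzle.
  val solutions: List[(Boolean, Boolean)] =
    for {
      a <- List(true, false)
      b <- List(true, false)
      if puzzle(a, b)
    } yield (a, b)
}
```

Here AvaAndBob.solutions contains the single pair (false, false), matching the [F F] row of the truth table.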

Conclusion

As you can see, solving a Knights and Knaves problem by translating each statement to propositional logic is a tedious process. We ended up with a very involved final formula that made filling in the truth table somewhat arduous. Such problems are usually much simpler to solve by hand – but this process demonstrates that we can apply a systematic approach to solve Knights and Knaves problems with translations and truth tables.

Subsections of Propositional Logic Proofs

Introduction

While we can use truth tables to check whether a set of premises entails a conclusion, this requires testing all possible truth assignments, and the number of assignments grows exponentially (2^n rows for n propositional atoms). In this chapter, we will learn the process of natural deduction in propositional logic. This will allow us to start with a set of known facts (premises) and apply a series of rules to see if we can reach some goal conclusion. Essentially, we will be able to see whether a given conclusion necessarily follows from a set of premises.

We will use the Logika tool to check whether our proofs correctly follow our deduction rules. HOWEVER, these proofs can and do exist outside of Logika. Different settings use slightly different syntaxes for the deduction rules, but the rules and proof system are the same. We will merely use Logika to help check our work.

Sequents, premises, and conclusions

A sequent is a mathematical term for an assertion or an argument. We use the notation:

p0, p1, ..., pm ⊢ c

The p0, p1, …, pm are called premises and c is called the conclusion. The ⊢ is called the turnstile operator, and we read it as “prove”. The full sequent is read as:

Statements p0, p1, ..., pm PROVE c

A sequent is saying that if we accept statements p0, p1, …, pm as facts, then we guarantee that c is a fact as well.

For example, in the sequent:

p → q, ¬q ⊢ ¬p

The premises are: p → q and ¬q, and the conclusion is ¬p.

(Shortcut: we can use |- in place of the ⊢ turnstile operator.)

Sequent validity

A sequent is said to be valid if every truth assignment that makes all of the premises true also makes the conclusion true.

For example, consider the following sequent:

p → q, ¬q ⊢ ¬p

To check if this sequent is valid, we must find all truth assignments for which both premises are true, and then ensure that those truth assignments also make the conclusion true.

Sequent validity in Logika using truth tables

We can use a different kind of truth table to prove the validity of a sequent in Logika:

         *     *     *
---------------------------
p q # (p →: q, ¬q) ⊢ ¬p
---------------------------
T T #    T     F     F
T F #    F     T     F
F T #    T     F     T
F F #    T     T     T
---------------------------
Valid [F F]

Notice that instead of putting just one logical formula, we put the entire sequent – the premises are in a comma-separated list inside parentheses, then the turnstile operator (which we type using the keys |- in Logika), and then the conclusion. We mark the top-level operator of each premise and conclusion.

Examining each row in the above truth table, we see that only the truth assignment [F F] makes both premises (p → q and ¬q) true. We look right to see that the same truth assignment also makes the conclusion (¬p) true, which means that the sequent is valid.
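The definition of validity can also be checked mechanically. The following is a minimal plain-Scala sketch (not Logika, and not how Logika itself works internally) that models each premise and the conclusion as a function of the truth assignment (p, q), then tests every row. SequentCheck, Formula, and isValid are hypothetical names. The second sequent, (p → q, q) ⊢ p, does not appear in the text and is included only as a contrasting invalid case.

```scala
object SequentCheck {
  // Material implication: p -> q is false only when p is true and q is false.
  def implies(p: Boolean, q: Boolean): Boolean = !p || q

  // A formula over two atoms, evaluated on a truth assignment (p, q).
  type Formula = (Boolean, Boolean) => Boolean

  // Valid: every row that makes all premises true also makes the conclusion true.
  def isValid(premises: List[Formula], conclusion: Formula): Boolean = {
    val rows = for (p <- List(true, false); q <- List(true, false)) yield (p, q)
    rows.forall { case (p, q) =>
      !premises.forall(f => f(p, q)) || conclusion(p, q)
    }
  }

  val pImpliesQ: Formula = (p, q) => implies(p, q)
  val notQ: Formula = (p, q) => !q
  val notP: Formula = (p, q) => !p
  val justQ: Formula = (p, q) => q
  val justP: Formula = (p, q) => p

  // (p -> q, NOT q) |- NOT p : the sequent from the truth table above.
  val fromText: Boolean = isValid(List(pImpliesQ, notQ), notP)

  // (p -> q, q) |- p : an invalid sequent added for contrast (assumption, not from the text).
  val contrast: Boolean = isValid(List(pImpliesQ, justQ), justP)
}
```

SequentCheck.fromText is true, while SequentCheck.contrast is false because the assignment p = F, q = T makes both premises true and the conclusion false.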

Proving sequents using natural deduction

Now we will turn to the next section of the course – using natural deduction to similarly prove the validity of sequents. Instead of filling out truth tables (which becomes cumbersome very quickly), we will apply a series of deduction rules to allow us to conclude new claims from our premises. In turn, we can use our deduction rules on these new claims to conclude more and more, until (hopefully) we are able to claim our conclusion. If we can do that, then our sequent was valid.

Logika natural deduction proof syntax

We will use the following format in Logika to start a natural deduction proof for propositional logic. Each proof will be saved in a new file with a .sc (Scala) extension:

// #Sireum #Logika

//@Logika: --manual --background type

import org.sireum._

import org.sireum.justification._

import org.sireum.justification.natded.prop._

@pure def ProofName(variable1: B, variable2: B, ...): Unit = {

Deduce(

(comma-separated list of premises with variable1, variable2, ...) ⊢ (conclusion)

Proof(

//the actual proof steps go here

)

)

}

Once we are inside the Proof element (where the above example says “the actual proof steps go here”), we complete a numbered series of steps. Each step includes a claim and corresponding justification, like this:

// #Sireum #Logika

//@Logika: --manual --background type

import org.sireum._

import org.sireum.justification._

import org.sireum.justification.natded.prop._

@pure def ProofName(variable1: B, variable2: B, ...): Unit = {

Deduce(

(comma-separated list of premises with variable1, variable2, ...) ⊢ (conclusion)

Proof(

1 ( claim_a ) by Justification_a,

2 ( claim_b ) by Justification_b,

...

736 ( conclusion ) by Justification_conc

)

)

}

Each claim is given a number, and these numbers are generally in order. However, the only rule is that claim numbers be unique (they may be out of order and/or non-consecutive). Once we have justified a claim in a proof, we will refer to it as a fact.

We will see more details of Logika proof syntax as we progress through chapter 4.

Premise justification

The most basic justification for a claim in a proof is “premise”. This justification is used when you pull in a premise from the sequent and introduce it into your proof. All, some or none of the premises can be introduced at any time in any order. Please note that only one premise may be entered per claim.

For example, we might bring in the premises from our sequent like this (the imports, proof function definition, deduce call, and formatter changes are omitted here for readability):

(p, q, ¬r) ⊢ (p ∧ q)

Proof(

1 ( p ) by Premise,

2 ( q ) by Premise,

3 ( ¬r ) by Premise,

...

)

We could also bring in the same premise multiple times, if we wanted. We could also use non-sequential line numbers, as long as each line number was unique:

(p, q, ¬r) ⊢ (p ∧ q)

Proof(

7 ( p ) by Premise,

10 ( q ) by Premise,

2 ( ¬r ) by Premise,

8 ( p ) by Premise,

...

)

We could also bring in only some of our premises, if we wanted:

(p, q, ¬r) ⊢ (p ∧ q)

Proof(

1 ( p ) by Premise,

...

)

But we can only list one premise in each claim. For example, the following is not allowed:

(p, q, ¬r) ⊢ (p ∧ q)

Proof(

1 ( p, q, ¬r ) by Premise, //NO! Only one premise per line.

...

)
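To make these rules concrete, here is a small plain-Scala model of them (a simplified sketch, not Logika's actual checker). A claim is a single formula, so a claim such as "p, q, ¬r" cannot even be expressed. A Premise justification is accepted when the claim is one of the sequent's premises, and claim numbers only need to be unique. PremiseCheck, Formula, premiseOk, and numbersUnique are hypothetical names.

```scala
object PremiseCheck {
  // A tiny formula representation, just enough for this illustration.
  sealed trait Formula
  case class Atom(name: String) extends Formula
  case class Not(f: Formula) extends Formula
  case class And(left: Formula, right: Formula) extends Formula

  // A claim justified "by Premise" must be exactly one of the sequent's premises.
  def premiseOk(premises: List[Formula], claim: Formula): Boolean =
    premises.contains(claim)

  // Claim numbers may be out of order or non-consecutive, but must not repeat.
  def numbersUnique(numbers: List[Int]): Boolean =
    numbers.distinct.length == numbers.length

  val p = Atom("p")
  val q = Atom("q")
  val r = Atom("r")

  // The premises of the sequent (p, q, NOT r) |- (p AND q).
  val premises: List[Formula] = List(p, q, Not(r))
}
```

With this model, premiseOk(premises, p) and premiseOk(premises, Not(r)) hold, premiseOk(premises, And(p, q)) does not (p ∧ q is the conclusion, not a premise), and numbersUnique(List(7, 10, 2, 8)) holds even though the numbers are out of order.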

Deduction rules

The logical operators (AND, OR, NOT, IMPLIES) are a kind of language for building propositions from basic, primitive propositional atoms. For this reason, we must have laws for constructing propositions and for disassembling them. These laws are called inference rules or deduction rules. A natural deduction system is a set of inference rules, such that for each logical operator, there is a rule for constructing a proposition with that operator (this is called an introduction rule) and there is a rule for disassembling a proposition with that operator (this is called an elimination rule).

For the sections that follow, we will see the introduction and elimination rules for each logical operator. We will then learn how to use these deduction rules to write a formal proof showing that a sequent is valid.

AND Rules

In this section, we will see the deduction rules for the AND operator.

AND introduction

Clearly, when both p and q are facts, then so is the proposition p ∧ q. This makes logical sense – if two propositions are independently true, then their conjunction (AND) must also be true. The AND introduction rule, AndI, formalizes this:

         P   Q
AndI : ---------
         P ∧ Q

We will use the format above when introducing each of our natural deduction rules:

- P and Q are not necessarily individual variables; they are placeholders for some propositional statement, which may itself involve several logical operators.

- On the left side is the rule name (in this case, AndI).

- On the top of the right side, we see what we already need to have established as facts in order to use this rule (in this case, P and also Q). These facts can appear anywhere in our scope of the proof, in whatever order. (For now, all previous lines in the proof will be within our scope, but this will change when we get to more complex rules that involve subproofs.)

- On the bottom of the right side, we see what we can claim by using that proof rule.

Here is a simple example of a proof that uses AndI. It proves that if propositional atoms p, q, and r are all true, then the proposition r ∧ (q ∧ p) is also true:

(p, q, r) ⊢ (r ∧ (q ∧ p))

Proof(

1 ( p ) by Premise,

2 ( q ) by Premise,

3 ( r ) by Premise,

4 ( q ∧ p ) by AndI(2, 1),

5 ( r ∧ (q ∧ p) ) by AndI(3, 4)

)

You can read line 4 like this: “from the fact q stated on line 2 and the fact p stated on line 1, we deduce q ∧ p by applying the AndI rule”. Lines 4 and 5 construct new facts from the starting facts (premises) on lines 1-3.

Note that if I had instead tried:

(p, q, r) ⊢ (r ∧ (q ∧ p))

Proof(

1 ( p ) by Premise,

2 ( q ) by Premise,

3 ( r ) by Premise,

4 ( q ∧ p ) by AndI(1, 2),

...

)

Then line 4 would not have been accepted. The line numbers cited after the AndI rule must match the order of the resulting AND statement. The left-hand side of our resulting AND statement must correspond to the first line number in the AndI justification, and the right-hand side of our resulting AND statement must correspond to the second line number in the justification:

...

4 ( p ) by (some justification),

5 ( q ) by (some justification),

6 ( p ∧ q ) by AndI(4, 5),

...

9 ( q ∧ p ) by AndI(5, 4),

...
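The ordering requirement can be illustrated with a short plain-Scala sketch. It is a simplified model of the rule as described in this section, not Logika's implementation. Already-justified facts are stored by claim number, and an AndI justification is accepted only when the claim is the first cited fact AND-ed with the second cited fact, in that order. AndIntroCheck, Formula, and andIOk are hypothetical names.

```scala
object AndIntroCheck {
  // A tiny formula representation, just enough for this illustration.
  sealed trait Formula
  case class Atom(name: String) extends Formula
  case class And(left: Formula, right: Formula) extends Formula

  // facts: already-justified claims, keyed by claim number.
  // AndI(first, second) justifies `claim` only if claim is
  // (fact at `first`) AND (fact at `second`), in that order.
  def andIOk(facts: Map[Int, Formula], claim: Formula, first: Int, second: Int): Boolean =
    (facts.get(first), facts.get(second)) match {
      case (Some(l), Some(r)) => claim == And(l, r)
      case _                  => false // a cited claim number does not exist
    }

  val p = Atom("p")
  val q = Atom("q")

  // Claims 1 and 2 from the example proof: p and q, both premises.
  val facts: Map[Int, Formula] = Map(1 -> p, 2 -> q)
}
```

In this model, andIOk(facts, And(q, p), 2, 1) is accepted, while andIOk(facts, And(q, p), 1, 2) is rejected because the cited order would produce p ∧ q instead.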

AND elimination