Subsections of Introduction

Course Introduction


Video Script

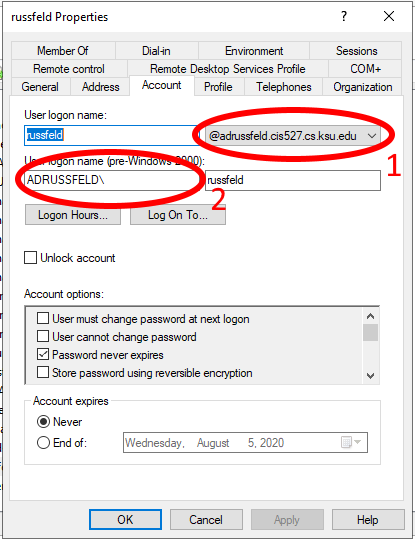

Welcome to CIS 527 - Enterprise System Administration and CC 510 - Computer Systems Administration. Even though these are two different courses in the catalog, they teach the same content and will use the same Canvas course. So, anywhere you see CIS 527 in this course, you can also mentally substitute CC 510 in its place.

My name is Russell Feldhausen, and I’ll be your instructor for this course. My contact information is shown here, and is also listed on the syllabus, and on the home page of the course on K-State Canvas. My email address is russfeld@ksu.edu, and it is the official method of communication for matters outside of this course, since it allows me to have a record of our conversations and respond when I’m available. However, I’ll also be available via the K-State CS Discord server and on Microsoft Teams, so you can easily chat with me there.

We also have one teaching assistant this semester - Josh Barron. He’ll be available to answer questions and help with lab assignments, and he’ll be doing some of the lab grading as well.

For communication in this course, there are two basic methods that I recommend. For general questions about the course, content, getting help with labs, and any other items relevant to the course, I encourage you to use the Ed Discussion message board. This allows all of us to communicate in a single space, and it also means that any questions I answer will immediately be available for the whole class. For personal issues, grading questions, or if you have something that is a “to-do” item for me, please email the course email, which is cis527-help@ksuemailprod.onmicrosoft.com. While I will strive to check Ed Discussion often, I’ve found that sometimes tasks can get lost in the discussion, so having an email in my inbox to prompt me to follow up is very helpful.

I am working remotely out of my home in Kansas City, so I won’t be available on campus very often during the semester. I do have an office, and I will be there usually on Mondays to meet with folks on campus and hold office hours. I’ll be sure to announce those times as they are scheduled.

In addition, I must give credit to several people for helping me develop the content in this course. First and foremost is Seth Galitzer, the CS system administrator, as well as several of my former students and teaching assistants.

For a brief overview of the course, there are 7 lab modules, plus a final project, that you’ll be responsible for completing. The modules are configured in K-State Canvas as gated modules, meaning that you must complete each item in the module in order before continuing. There will be one module due each week, and you may work ahead at your own pace. When you are ready to have a lab graded, you’ll schedule a time to meet interactively with me either in person or remotely. Finally, all work in this course must be completed and all labs graded by no later than the Friday of finals week.

Looking ahead to the rest of this first module, you’ll see that there are a few more items to be completed before you can move on. In the next video, I’ll discuss a bit more information about navigating through this course on Canvas and using the videos posted on YouTube.

One thing I highly encourage each of you to do is read the syllabus for this course in its entirety, and let me know if you have any questions. My view is that the syllabus is a contract between me as your teacher and you as a student, defining how we should treat each other and what we should expect from each other. I have made a few changes to my standard syllabus template for this course, and those changes are clearly highlighted. Finally, the syllabus itself is subject to change as needed as we adapt to this new course layout and format, and all changes will be clearly communicated to everyone before they take effect.

The grading in this course is very simple. Each of the 7 lab assignments will be worth 10% of your grade, for a total of 70%. Likewise, there are 15 quizzes, each one worth about 0.66%, for a total of 10%. We’ll also have 5 guided discussions throughout the class, and your participation in each discussion will be 2% of your grade, for a total of 10%. Finally, the final project is worth another 10% of your grade. There will be some extra credit points available, mainly through the Bug Bounty assignment, which you will review as part of this module. Lastly, the standard “90-80-70-60” grading scale will apply, though I reserve the right to curve grades up to a higher grade level at my discretion. Therefore, you will never be required to get higher than 90% for an A, but you may get an A if you score slightly below 90% if I choose to curve the grades.

Since this is a completely online course, you may be asking yourself what is different about this course. First off, you can work ahead at your own pace, and turn in work whenever you like before the due date. However, as discussed before, you must do all the readings and assignments in order before moving on, so you cannot skip ahead.

In addition, due to the flexible online format of this class, there won’t be any long lecture videos to watch. Instead, each module will consist of several short videos, each focused on a particular topic or task. Likewise, there won’t be any textbooks formally used, but you’ll be directed to a bevy of online resources for additional information.

What hasn’t changed, though, is the basic concept of a college course. You’ll still be expected to watch or read about 6 hours of content to complete each module. In addition to that, each lab assignment may require anywhere from 1 to 6 hours of work to complete. If you plan on doing a module every week, that roughly equates to 6 hours of content and 6 hours of homework each week, which is the expected workload from a 3 credit hour college course during the summer.

Also, while some of the quizzes will be graded automatically, much of the grading will still be done directly by me or my GTA. This includes the lab assignments. For each lab assignment, you’ll schedule a time to meet with us either in person or remotely to review your work for that lab. Finally, we’ll still be available to meet with you online for virtual office hours as needed. Of course, you can always contact me via email if you have any questions. In fact, since I regularly work from home anyway, I’ll probably be easier to contact than some of my fellow faculty!

For this class, each student is required to have access to a personal computer. It should have plenty of RAM and CPU power to host several virtual machines concurrently. In addition, you’ll need some virtual machine software, which is available for free to K-State CS students. I’ll discuss how to acquire and install that software in the first lab module. Finally, you’ll also need access to a high-speed connection capable of web conferencing with video. If you have any concerns about meeting these requirements, please contact me ASAP! We may have options available through some on-campus resources to help you out.

Finally, as you are aware, this course is always subject to change. While we have taught this class several times before, there may be a few hiccups as we get started due to new software and situations. The best advice I have is to look upon this graphic with the words “Don’t Panic” written in large, friendly letters, and remember that it’ll all work out in the end as long as you know where your towel is.

So, to complete this module, there are a few other things that you’ll need to do. The next step is to watch the video on navigating Canvas and using the YouTube videos, which will give you a good idea of how to most effectively work through the content in this course.

To get to that video, click the “Next” button at the bottom right of this page.

Navigating Canvas & YouTube

Video Script

This course makes extensive use of several features of Canvas which you may or may not have worked with before. To give you the best experience in this course, this video will briefly describe those features and the best way to access them.

When you first access the course on Canvas, you will be shown this homepage, with my contact information and any important information about the course. This is a quick, easy reference for you if you ever need to get in touch with me.

Let’s walk through the options in the main menu to the left. First, any course announcements will be posted in the Announcements section, which is available here. Those announcements will also be configured to send emails to all students when they are posted, though in your personal Canvas settings you can disable email notifications if you so choose. Please make sure you check here often for any updates to course information.

The next section is Modules, which is where you’ll primarily interact with the course. You’ll notice that I’ve disabled several of the common menu items in this course, such as Files and Assignments. This is to simplify things for you as students, so you remember that all the course content is available in one place.

When you first arrive at the Modules section, you’ll see all of the content in the course laid out in order. If you like, you can minimize the modules you aren’t working on by clicking the arrow to the left of the module name.

As you look at each module, you’ll see that it gives quite a bit of information about the course. At the top of each module is an item telling you what parts of the module you must complete to continue. In this case, it says “Complete All Items.” Likewise, the following modules may list a prerequisite module, which you must complete before you can access it.

Within each module is a set of items, which must be completed in listed order. Under each item you’ll see information about what you must do in order to complete that item. For many of them, it will simply say “view,” which means you must view the item at least once to continue. Others may say “contribute,” “submit,” or give a minimum score required to continue. For assignments, it also helpfully gives the number of points available, and the due date.

Let’s click on the first item, Course Introduction, to get started. You’ve already been to this page by this point. Most course pages will consist of an embedded video, followed by links to any resources used or referenced in the video, including the slides and a downloadable version of the video. Finally, a rough video script will be posted on the page for your quick reference.

While I cannot force you to watch each video in its entirety, I highly recommend doing so. The script on the page may not accurately reflect all of the content in the video, nor can it show how to perform some tasks which are purely visual.

When you are ready to move to the next step in a module, click the “Next” button at the bottom of the page. Canvas will automatically add “Next” and “Previous” buttons to each piece of content which is accessed through the Modules section, which makes it very easy to work through the course content. I’ll click through a couple of items here.

At any point, you may click on the Modules link in the menu to the left to return to the Modules section of the site. You’ll notice that I’ve viewed the first few items in the first module, so I can access more items here. This is handy if you want to go back and review the content you’ve already seen, or if you leave and want to resume where you left off. Canvas will put green checkmarks to the right of items you’ve completed.

Finally, you’ll find the usual Canvas links to view your grades in the course, as well as a list of fellow students taking the course.

Let’s go back to the Course Introduction page, and look at some of the features of the YouTube video player that may be useful to you as you go through this course.

When you load the player, it gives you a control toolbar at the bottom with several options. From here, you can restart the video, control playback speed, adjust the volume, enable subtitled captions, see information about the video, open the video in a new window, and more.

Every video in this course will have subtitles available, though the subtitles may be slightly garbled as they are automatically transcribed from the audio. I will do my best to edit them to be as accurate as possible. This will make the videos accessible to all students. In addition, studies have shown that a large number of video viewers prefer to have subtitles available even if they do not have any hearing impairment. So, please feel free to enable subtitles at any time.

In addition, I will attempt to speak as clearly as possible when creating the videos. If you feel that I am going too fast, or that you can keep up with me going more quickly, feel free to adjust the playback speed as needed. I have been known to absorb lecture videos at 1.5x playback speed myself, depending on the content.

Finally, you are welcome to download the videos from YouTube if desired, based on your internet connection or other factors. If you are interested in getting these videos in another format, please contact me.

Hopefully this gives you a good idea of the different resources available in this course. To complete the first module, click the next button below to continue with the next item.

Where to Find Help


Video Script

As you work on the materials in this course, you may run into questions or problems and need assistance. This video reviews the various types of help available to you in this course.

First and foremost, anytime you have a question or need assistance in the course, please post in the course Discord room. It is the best place to go to get help with anything related to this course, from the tutorials and projects to issues with Codio and Canvas. Before you post on Discord, take a minute to look around and make sure the question has not already been posted. It will save everyone quite a bit of time.

There are a few major reasons we’ve chosen to use Discord in this program. Our goal is to respond as quickly as possible, and having a single place for all questions allows the instructors and the TAs to work together to answer questions and solve problems quickly. As an added bonus, it reduces the amount of email generated by the class. Discord includes lots of features to make your messages easily readable using both markdown and code blocks. Finally, by participating in discussions on Discord and helping to answer questions from your fellow students, you can earn extra credit points!

Of course, if you aren’t able to find an answer in either of those places, the next step would be to search online for an answer. For better or worse, in the real world as a system administrator, many times you simply won’t know the answer, and will be tasked with finding the best one available. Learning how to leverage online search engines is a powerful skill to develop, and one major desired outcome of this course is to help you get better at finding and evaluating online resources for your work.

If all else fails, please email me and let me know. Make sure you clearly explain your question and the steps you’ve taken to solve it thus far. If I feel it can be easily answered by one of the earlier steps, I may redirect you back to those before answering it directly. But, at times there are indeed questions that come up that don’t have an easy answer, and I’m more than happy to help answer them as needed.

Beyond Discord, there are a few resources you should be aware of. First, if you have any issues working with K-State Canvas, the YouTube Videos, K-State IT resources, or any other technology related to the delivery of the course, your first source of help is the K-State IT Helpdesk. They can easily be reached via email at helpdesk@ksu.edu. Beyond them, there are many online resources for using Canvas and YouTube, many of which are linked in the resources section below the video. As a last resort, you may contact me via email, but in most cases I will not be able to help and will simply redirect you to the K-State helpdesk for assistance.

Next, we have grading and administrative issues. This could include problems or mistakes in the grade you received on a lab, missing course resources, or any concerns you have regarding the course, my conduct, or the conduct of your peers. Since this is an online course, you’ll be interacting with us on a variety of online platforms, and sometimes things happen that are inappropriate or offensive. There are lots of resources at K-State to help you with those situations. First and foremost, please email me as soon as possible and let me know about your concern, if it is appropriate for me to be involved. If not, or if you’d rather talk with someone other than me about your issue, I encourage you to contact your academic advisor, the CS department staff, College of Engineering Student Services, or the K-State Office of Student Life. Finally, if you have any concerns that you feel should be reported to K-State, you can do so at https://www.k-state.edu/report/. That site also has links to a large number of resources at K-State that you can use when you need help.

Finally, if you find any errors or omissions in the course content, or have suggestions for additional resources to include in the course, please email me. There are some extra credit points available for helping to improve the course, so be on the lookout for anything that you feel could be changed or improved.

So, in summary, if you have any issues using Canvas or accessing the course content, contact the K-State Helpdesk as a first step. If you run into issues with the labs, your first step should be to consult the course modules and discussion forums. For grading questions and errors in the course content, contact me directly. For other issues, please contact either me or your academic advising resources as appropriate.

Our goal in this program is to make sure that you have the resources available to you to be successful. Please don’t be afraid to take advantage of them and ask questions whenever you want.

What You'll Learn


Video Script

Before you begin this course, here is a quick overview of what you’ll be learning and why it is important.

First, the labs. Lab 1 - Secure Workstations, is all about installing and configuring computers for your end users to use in a large organization. This is a great place to start in system administration, because it is very similar to working on your own, personal computer. You’ll install an operating system, learn about its various parts, and configure a secure and stable workstation. If you’ve taken my CIS 225 course, this is basically a review of a large portion of that material.

Lab 2 - Configuration Management, builds on the first lab by showing how you can use tools to automate much of the workstation configuration process. For this class, we’ll be learning how to use Puppet, but many other tools exist for this task. At the end of this lab, you should have a script available that will automatically perform most of the configuration you did in lab 1.

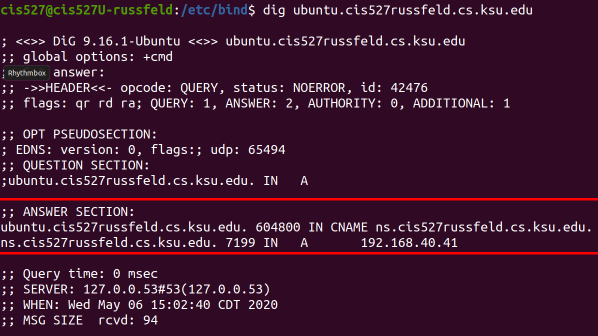

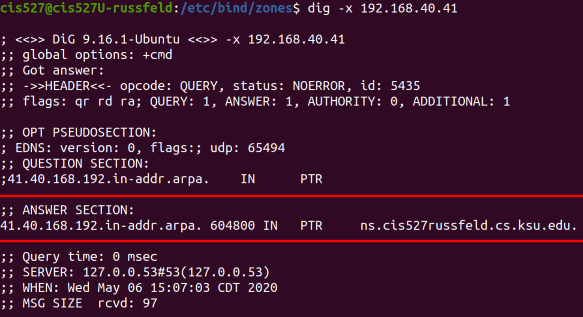

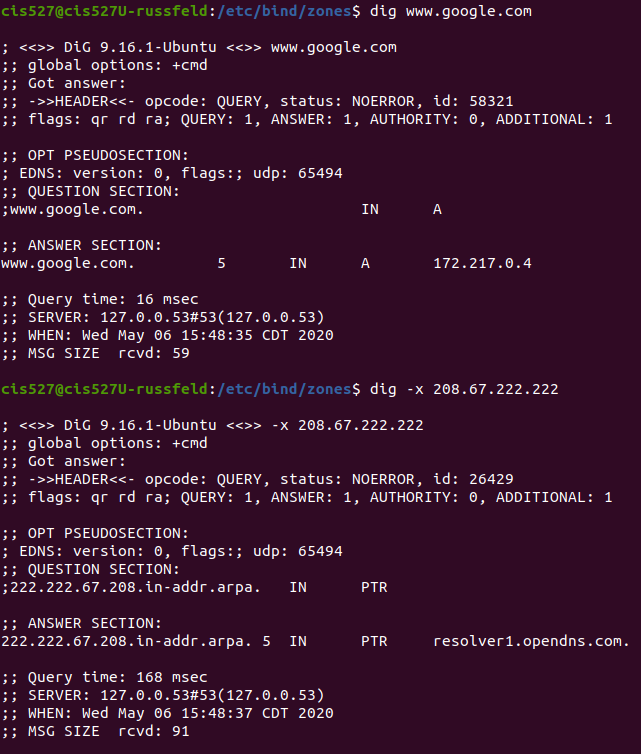

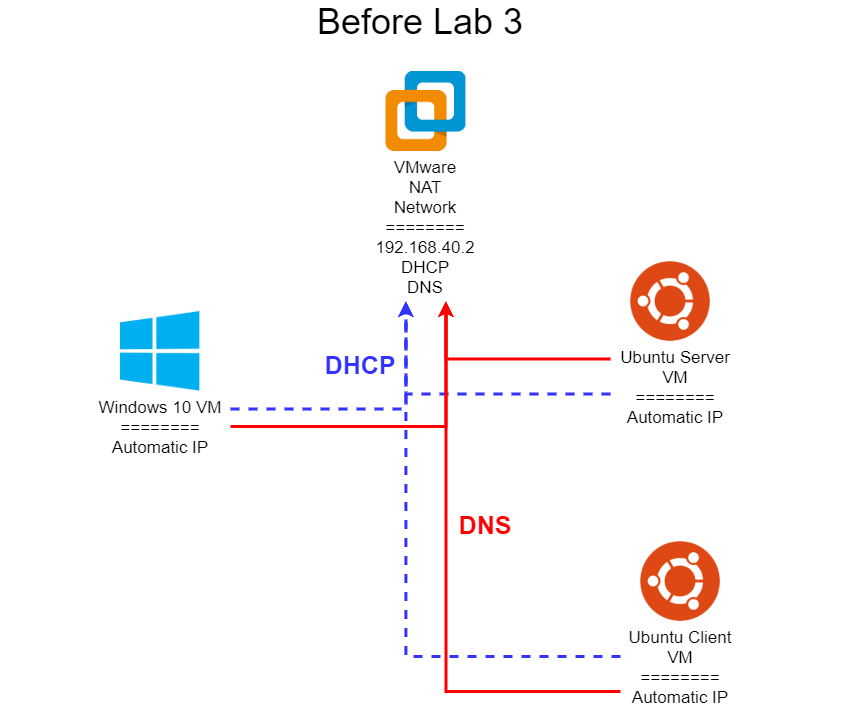

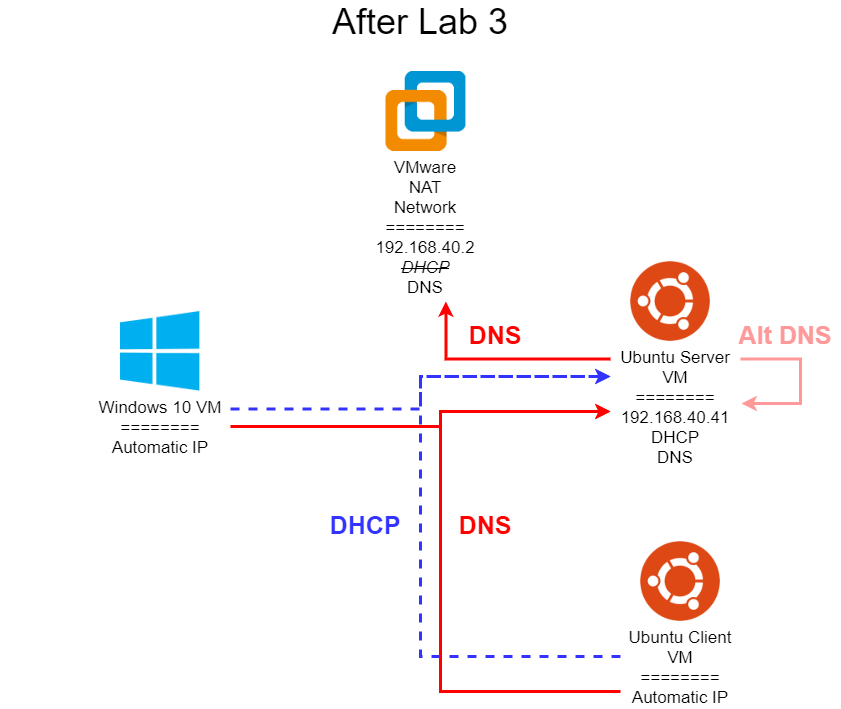

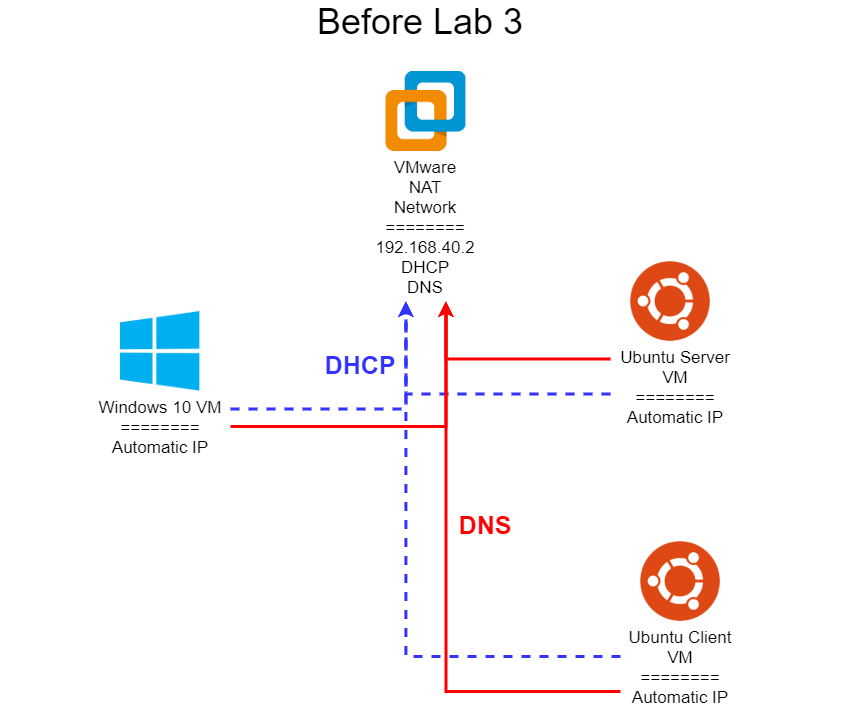

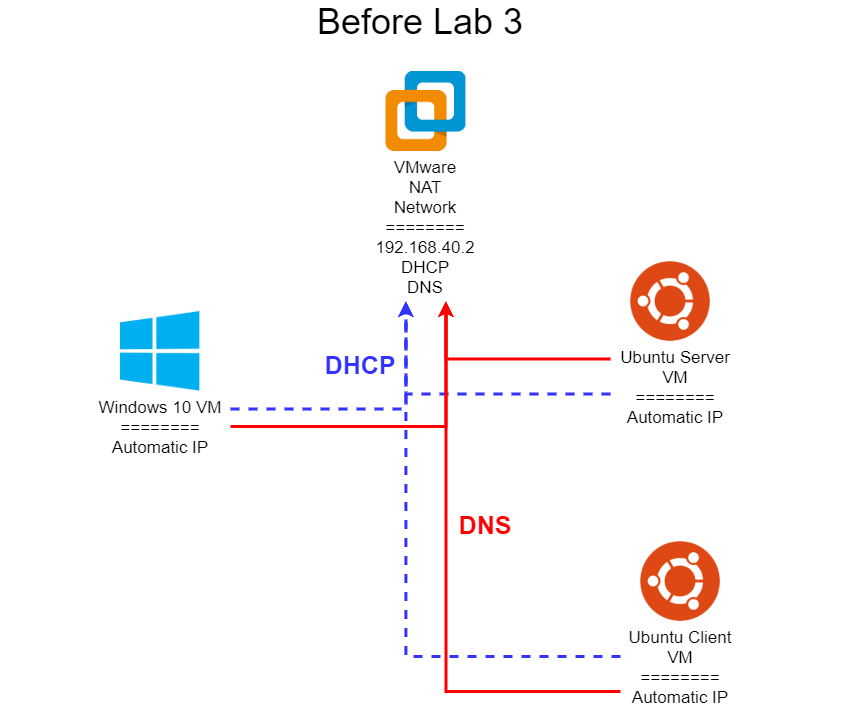

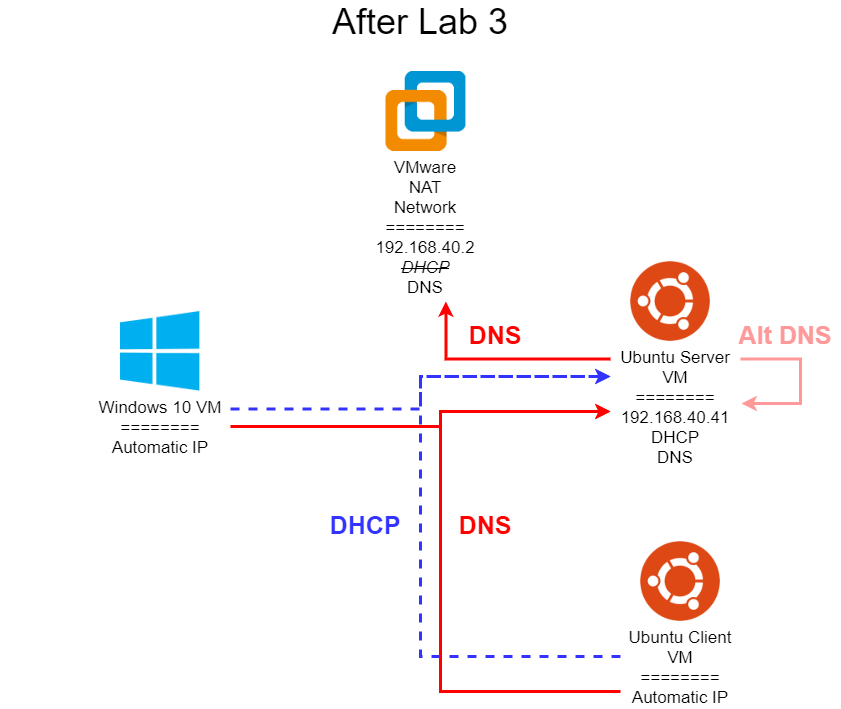

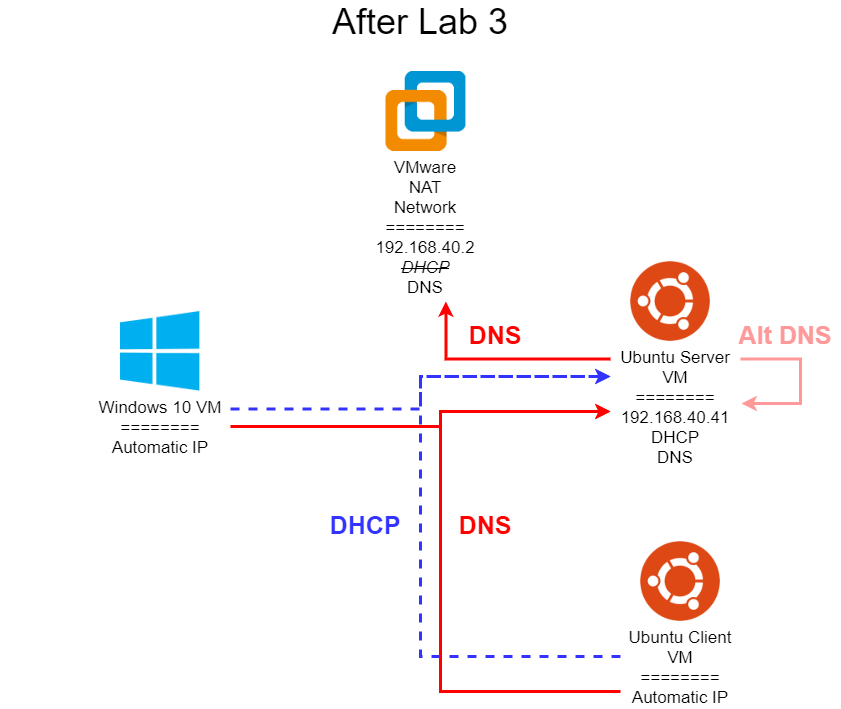

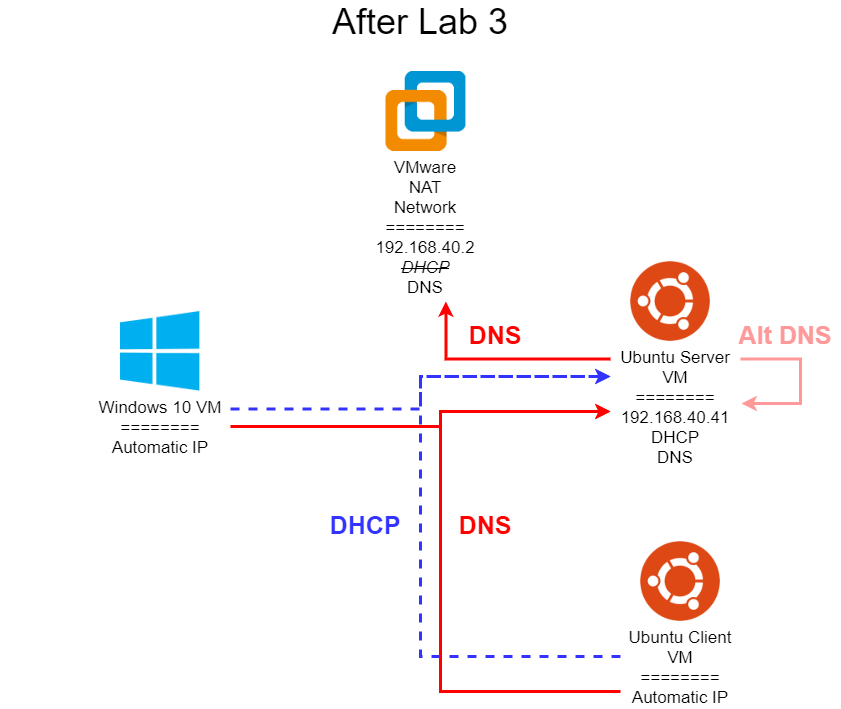

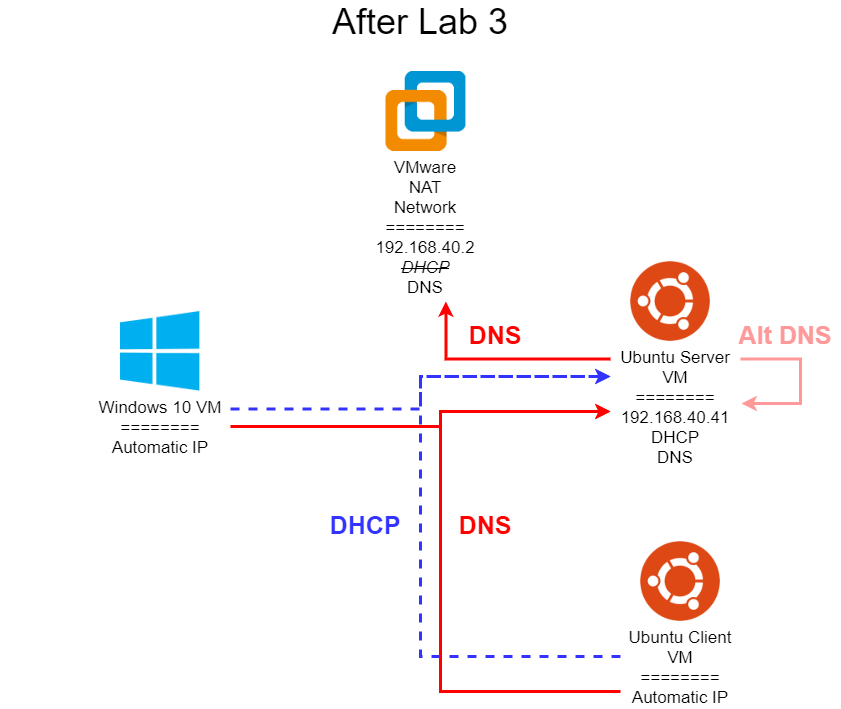

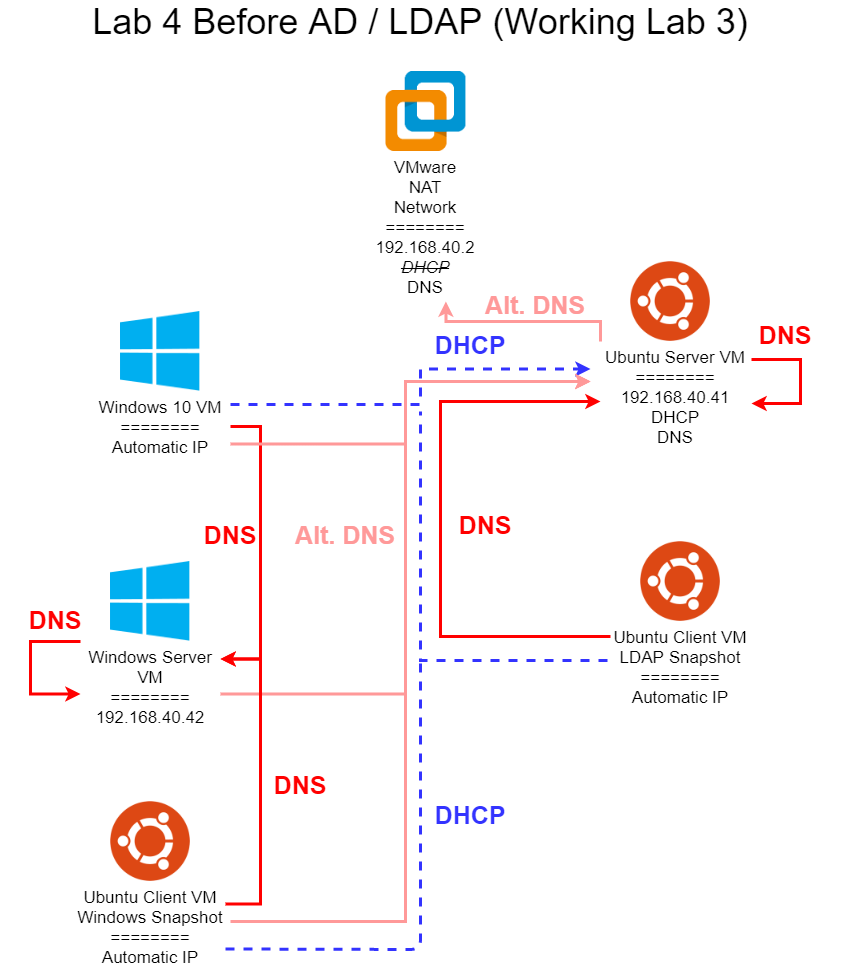

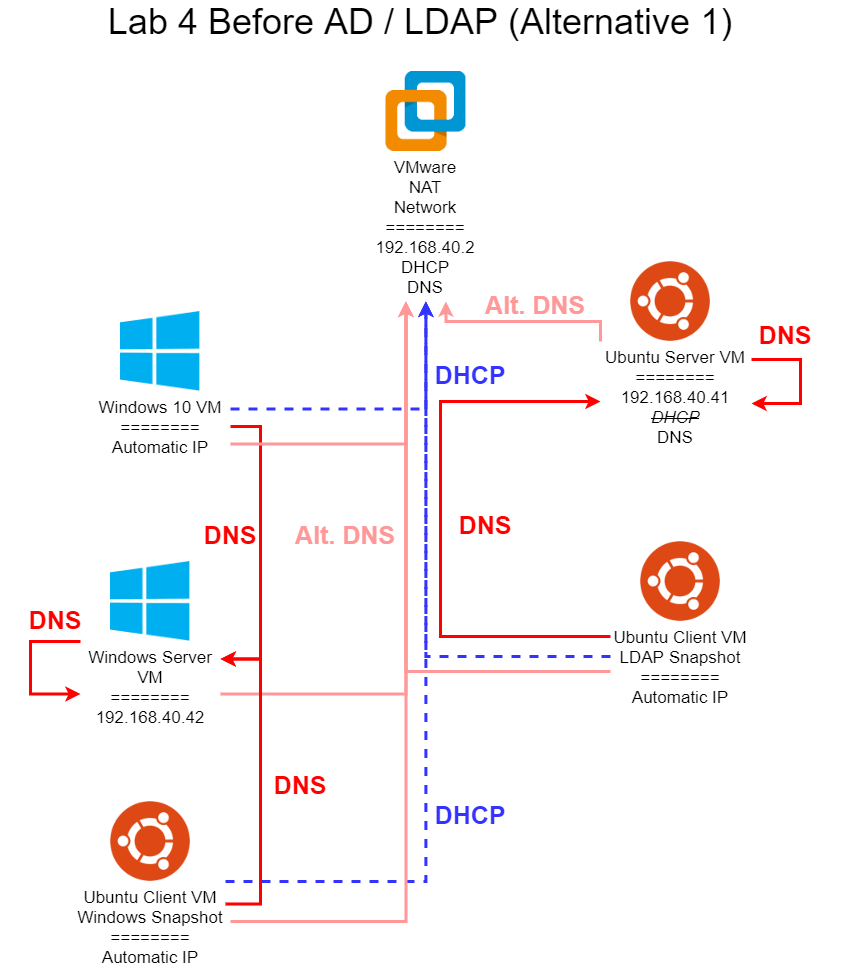

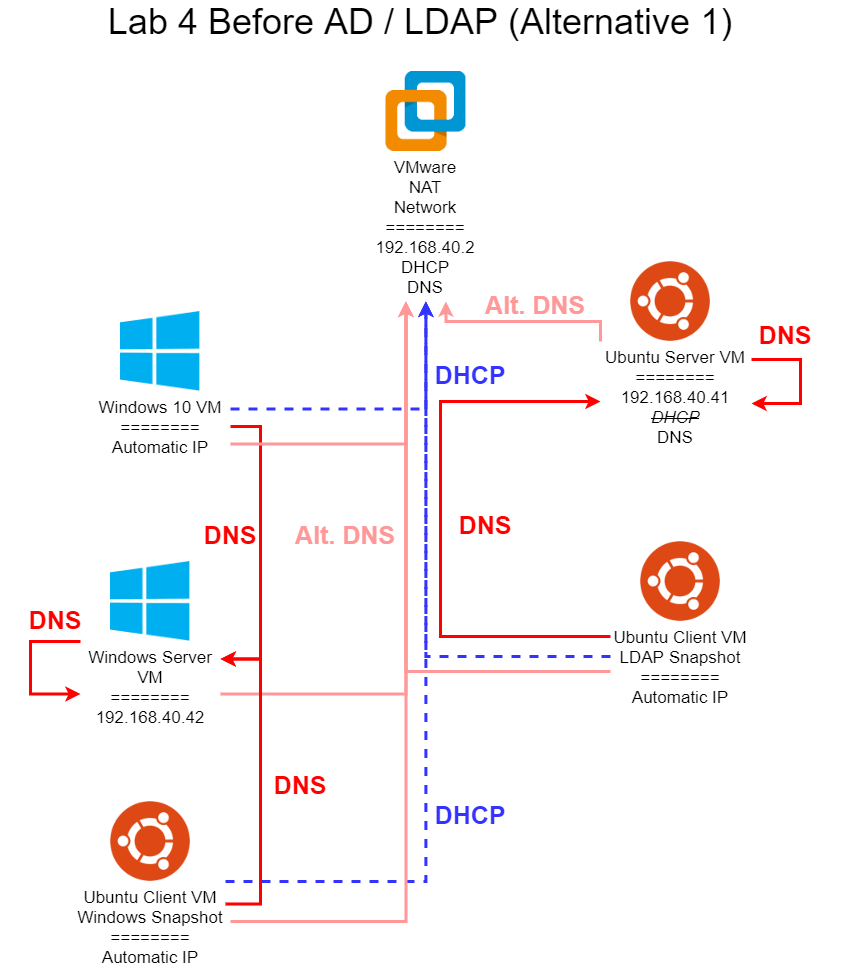

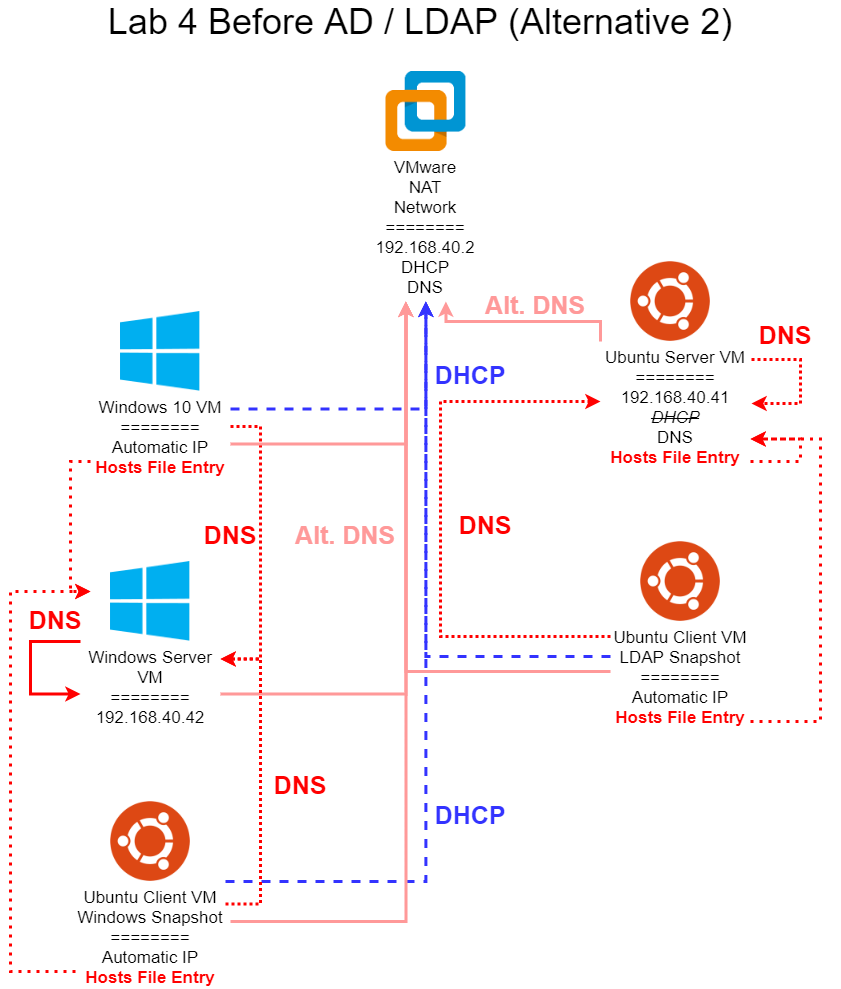

Lab 3 is all about networking. Most modern computers are completely useless without access to network resources, so this is a vital part of system administration. In this lab, you’ll configure a network for your virtual machines, as well as several networking services for them to use. We’ll also be exploring several common networking protocols, such as HTTP, DNS, and DHCP, using tools such as Wireshark to capture and inspect individual network packets. Some of this material comes directly from CIS 525, but with a focus more on system administration than building networked programs.

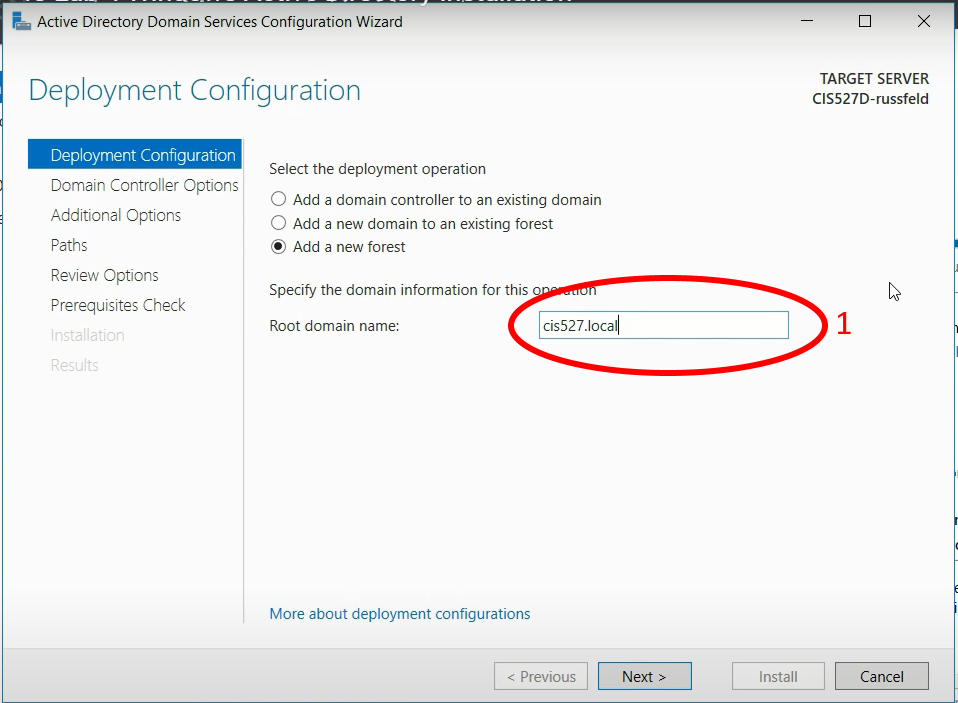

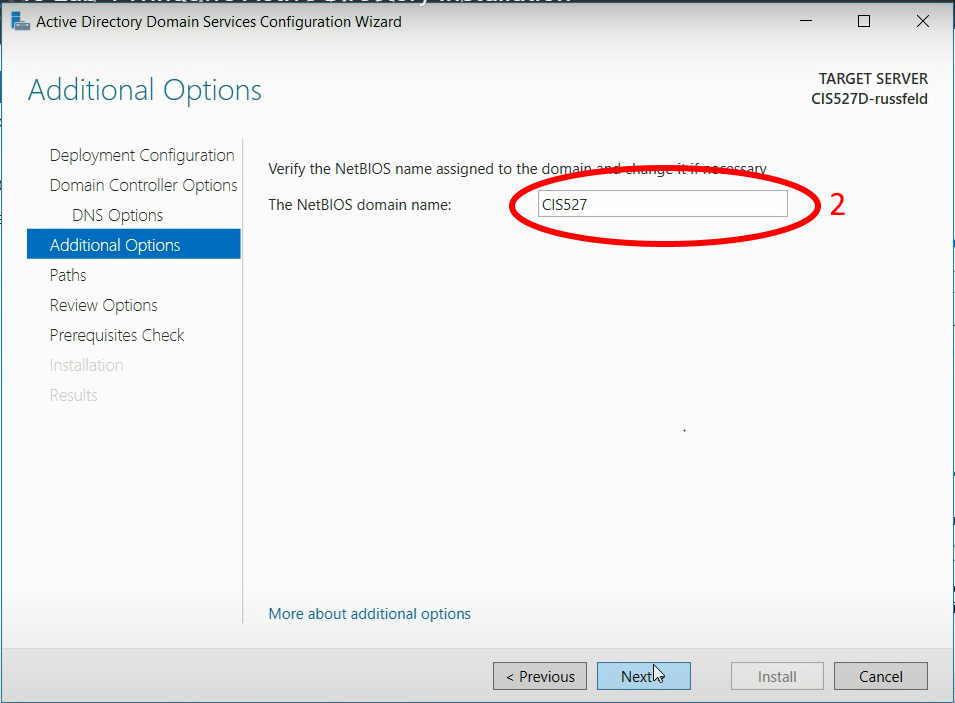

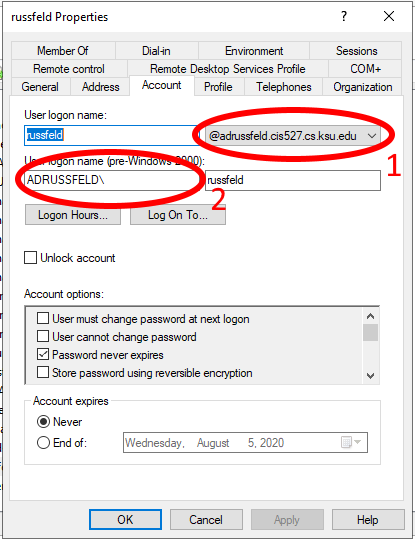

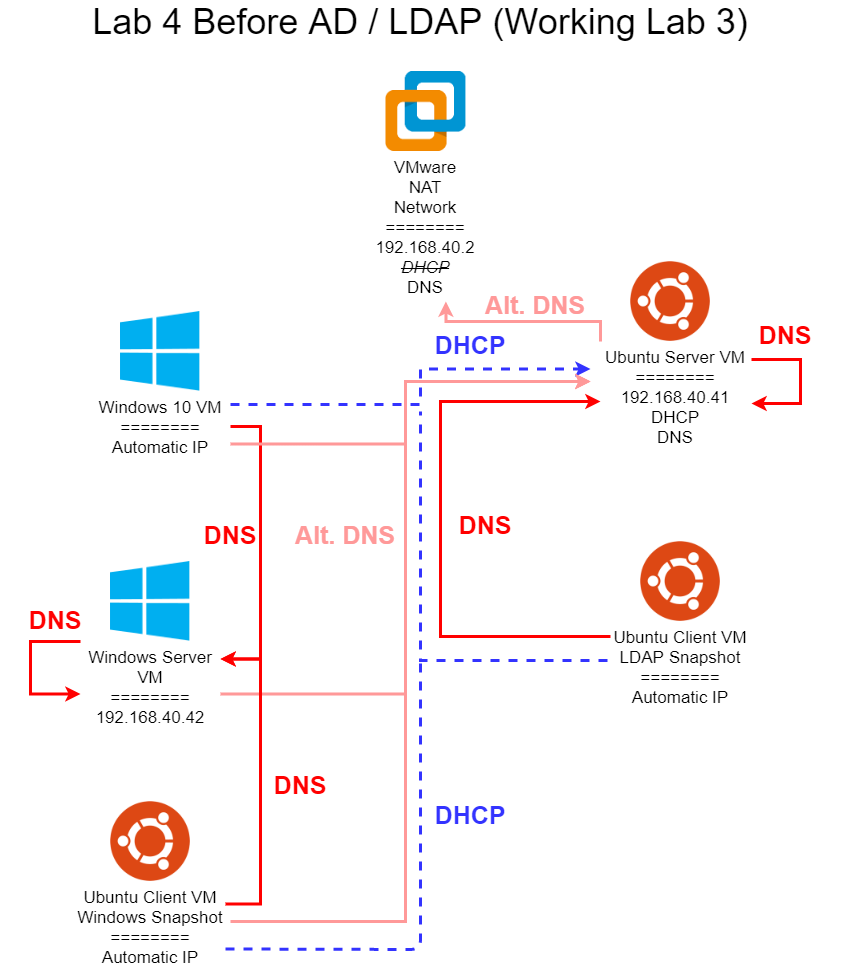

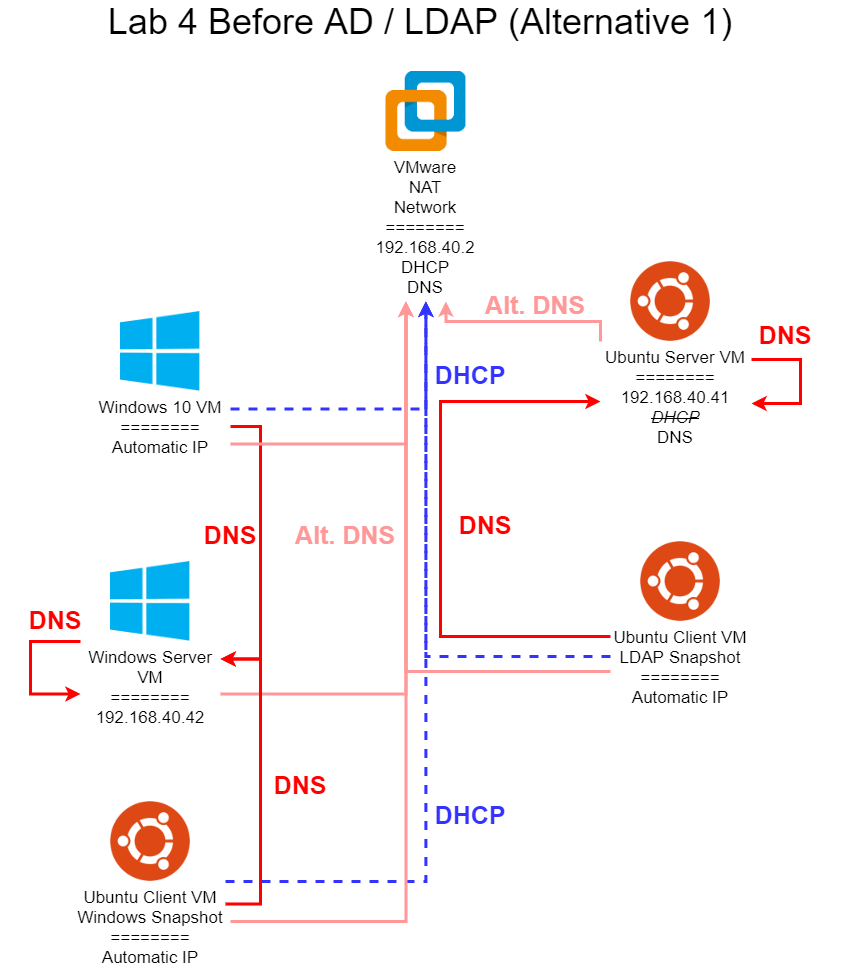

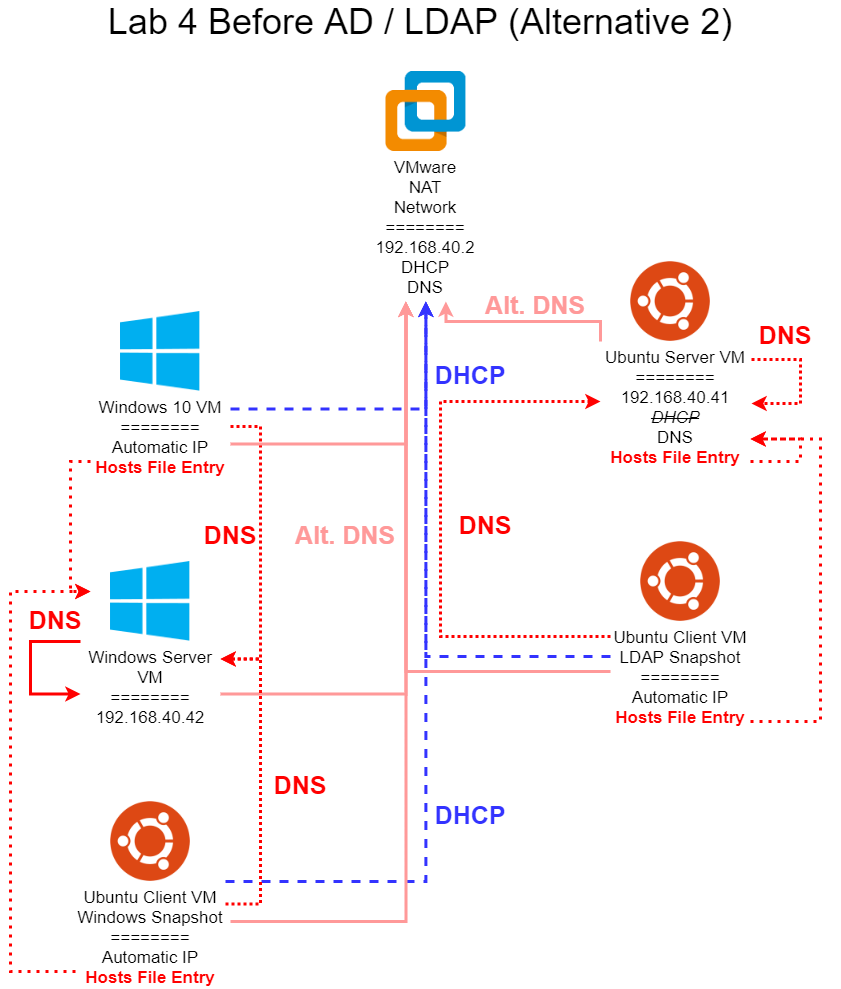

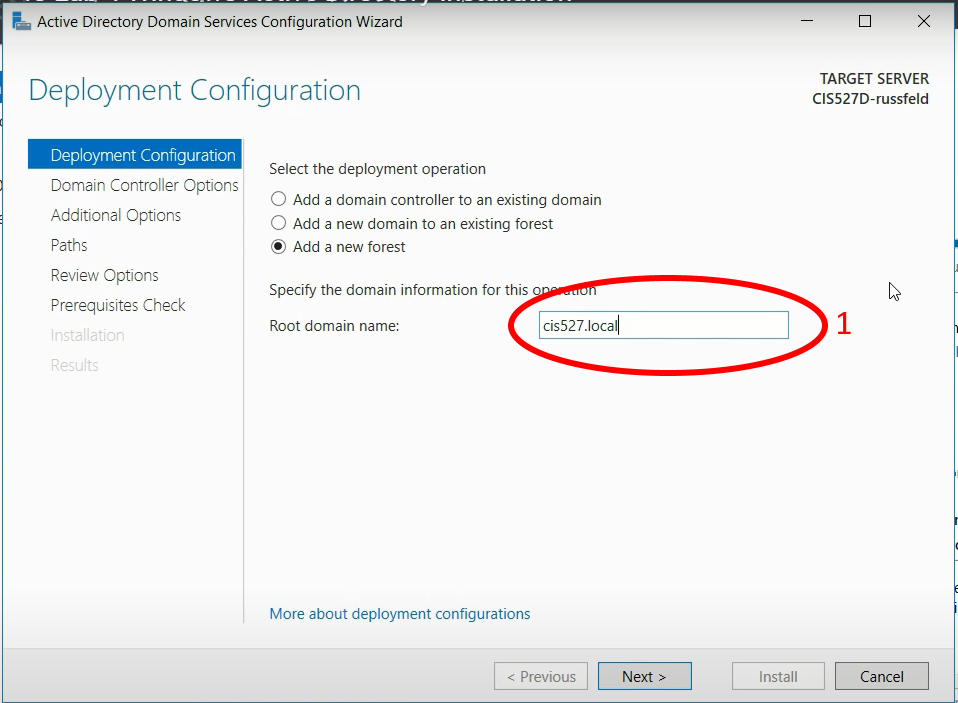

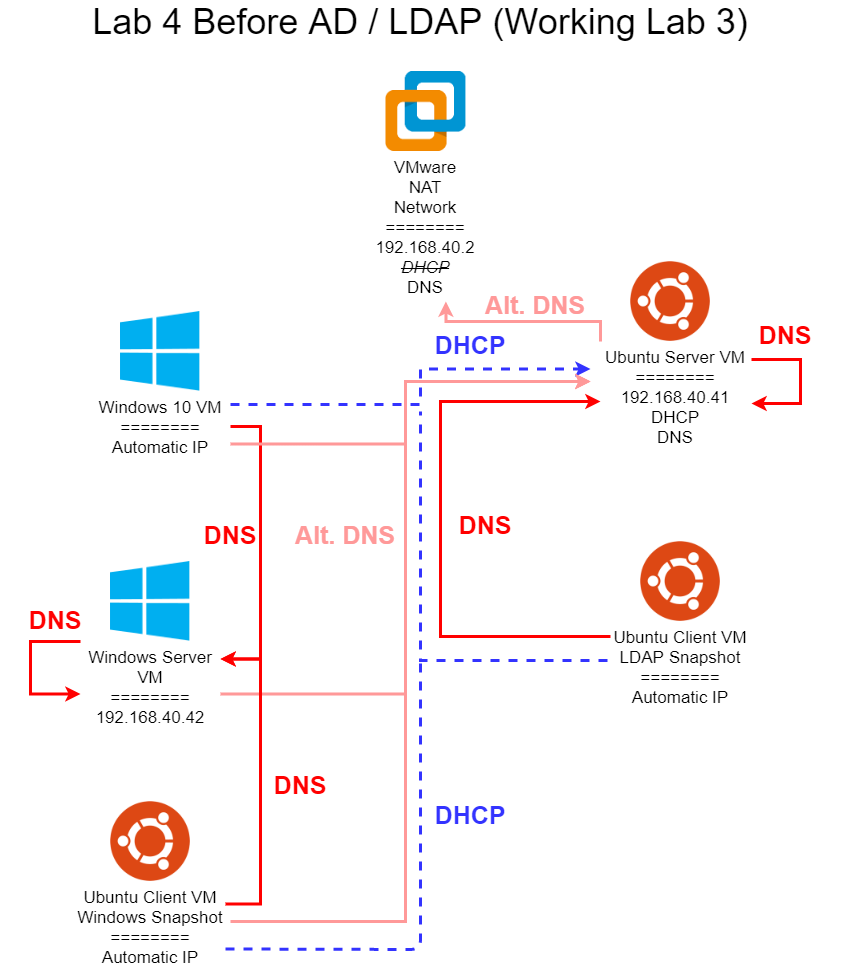

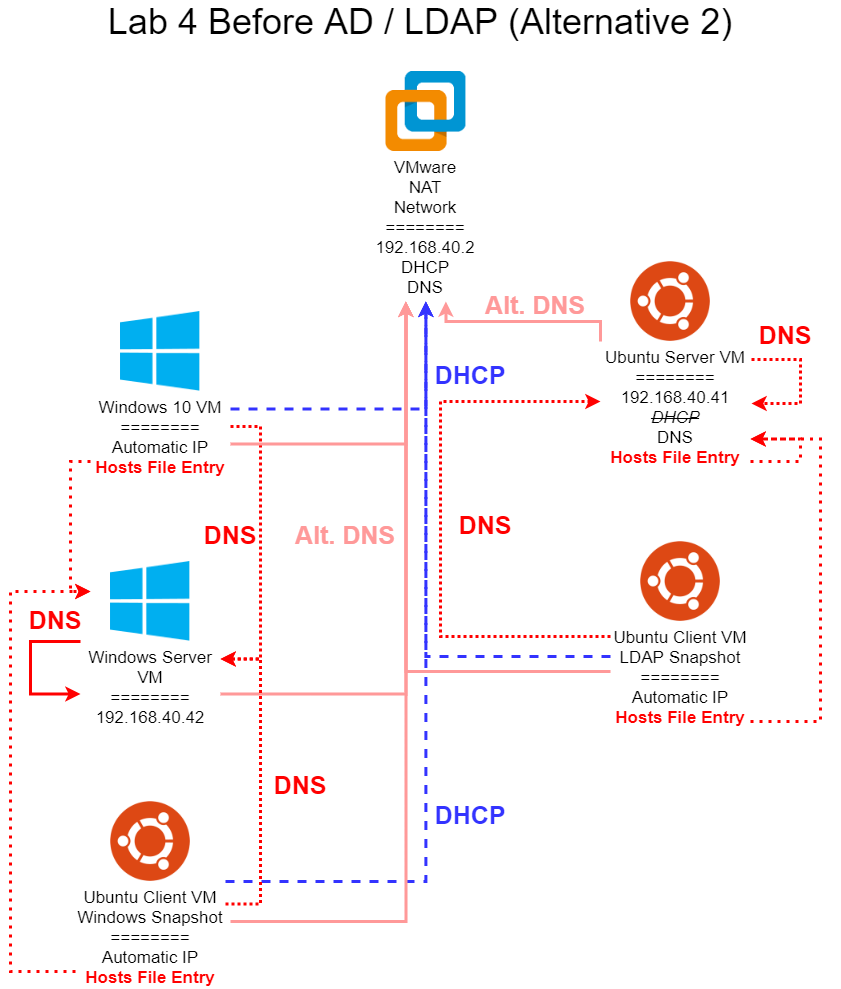

Lab 4 expands upon the previous labs by creating centralized directory services for your workstations. Using such a service, users can use a single username and password to access resources throughout an organization, much like your eID is used here at K-State. This lab is notoriously one of the most difficult and frustrating labs to complete in this course, but I believe it is also one of the most useful.

Lab 5 shifts the focus in this class toward the cloud. Many companies today operate some, if not all, of their centralized assets in the cloud. In this lab, you’ll configure several cloud resources, and we’ll discuss the tradeoffs and differences between cloud resources and other traditional computing resources.

Lab 6 focuses on building servers for a variety of enterprise use cases, including file servers, application servers, web servers, and more. We’ll discuss what it takes to provide a large number of resources for an organization, and you’ll get hands-on experience working with several of them.

The final lab introduces many smaller concerns for system administrators, but each of them is vital to creating truly stable and effective resources. You’ll learn about state-of-the-art techniques in backups, system monitoring, and DevOps (development and operations), as well as some of the theory behind being an effective system administrator through ITIL, formerly the Information Technology Infrastructure Library.

At the end of the semester, you’ll complete a final project that integrates and demonstrates all of your knowledge about system administration. You’ll be asked to communicate information effectively, show that you can perform your own research and analysis, and more. The final project module is already available on K-State Online for you to view, so I recommend you review it soon.

At this point, you may be asking yourself why this information is so important to learn. Here are a few thoughts from my own experience to help answer that question.

First and foremost, computers are ubiquitous in today’s world. There is hardly a career left that doesn’t involve technology in some way, from engineering and science to agriculture and even hotel and restaurant management. By learning how to work closely with these tools, you’ll have an indispensable skill set for your entire career, no matter what path you choose.

Likewise, this class gives you a chance to hone your own technical skills. Students who have previously taken this class have reported that the information covered was very helpful for them during their internships and job searches, and they were quickly able to understand how to use the corporate systems available to them.

Similarly, you’ll also learn how to maximize your effectiveness on your personal computer, through the use of scripting, virtual machines, and operating system features. It will truly take you from a normal computer user to a power user.

In addition, throughout the class you’ll be honing your communication skills, which are consistently cited as some of the most important soft skills for finding a job in the tech industry. If you are able to communicate better than your peers, you’ll be that much closer to landing your dream job.

Speaking of which, there are lots of great careers out there if you do decide to pursue system administration. Every company both large and small has need of someone with this particular skill set, and each industry has a unique set of challenges and requirements. A system administrator will always be in demand, and I can guarantee that the job is never boring.

You’ll also be able to put your skills and knowledge to good use by helping others discover how to use their computing resources most effectively. It is a great opportunity to share your knowledge with others and build those working relationships.

Finally, yes, you may even find yourself in a management position, or you may be asked to work with management. Having a strong understanding of every aspect of an organization’s technical infrastructure and what’s out there can help you make good decisions and lead effectively. There are countless stories of companies who miss out on the latest technological advances, only to find themselves left behind or worse. In the resources section below the video, you can read more about what happened to Circuit City, a large electronics retailer that chose to become complacent, and ended up bankrupt.

With that in mind, I hope you are excited to continue this course and start working on the first module.

Fall 2025 Syllabus

CIS 527 - Enterprise Systems Administration

CC 510 - Computer Systems Administration


This syllabus covers both courses. They are taught using the same content.

- Instructor: Mr. Russell Feldhausen (russfeld AT ksu DOT edu)

- Office: DUE 2213, but I mostly work remotely from Kansas City, MO

- Phone: (785) 292-3121 (Call/Text)

- Website: https://russfeld.me

- Virtual Office Hours: By appointment via Zoom. Book time to meet with me

Teaching Assistant

Preferred Methods of Communication:

- Email: Email is the official method of communication for this course. Any emails sent to the instructor regarding this course should be answered within one class day. Please use the official course email address: cis527-help@ksuemailprod.onmicrosoft.com

- Ed Discussion: For short questions and discussions of course content and assignments, Ed Discussion is preferred since questions can be asked once and answered for all students. Students are encouraged to post questions there and use that space for discussion, and the instructor will strive to answer questions there as well.

- Phone/Text: Emergencies only! I will do my best to respond as quickly as I can.

Prerequisites

- CIS 527: CIS 300.

- CC 510: CC 310 or CIS 300.

Students may enroll in CIS or CC courses only if they have earned a grade of C or better for each prerequisite to those courses.

Course Description

Computer information systems form the backbone of many large organizations, and many students will be called upon in their careers to help create, manage and maintain these large systems. This course will give students knowledge and experience working with enterprise level computer systems including workstation management, file servers, web servers, networking devices, configuration management, monitoring, and more. We will mainly focus on the GNU/Linux and Microsoft Windows server software, and much of the learning will take place in hands-on lab activities working directly with these systems. In addition, students will be responsible for developing some technical documentation and communicating information about their systems in a variety of ways. Finally, throughout the course students will be exposed to a variety of information directly from system administrators across campus.

Student Learning Outcomes

After completing this course, a successful student will be able to:

- Understand the major components of an enterprise level computer network and server system

- Design and implement a simple enterprise level server system and network, as well as provision workstations on that network quickly and easily

- Communicate information about enterprise systems clearly and effectively to users of all skill levels and interests

- Develop ways to increase efficiency by automating tasks whenever possible using scripting and configuration management tools

- Understand and describe security risks in any enterprise system and any ways that they can be mitigated

- Show how to monitor enterprise systems for problems and use that information to locate and fix any issues within the system

- Work with cloud technologies and describe how they can be integrated into an enterprise information technology setup

Major Course Topics

- Configuration Management using Puppet

- Creating Secure Workstations (CIS 225 overview/review)

- Setting up an Enterprise Directory Service & Single Sign On

- Enterprise File Sharing

- Web & Application Servers

- Core Networking Services (DHCP, DNS, ICMP, etc.)

- System Monitoring & Maintenance

- Backup Strategies

- The Cloud & DevOps

Course Structure

This course is being taught 100% online and mostly asynchronously. There may be some bumps in the road. Students will work at their own pace through several modules, with due dates for completion of each module given. Material will be provided in the form of recorded videos, links to online resources, and discussion prompts. Each module will include a hands-on lab assignment, which will be graded interactively by the instructor or TAs. Assignments may also include written portions or presentations, which will be submitted online.

The course will also include a final project and presentation. More information about this can be found in the final project module on Canvas.

The Work

There is no shortcut to becoming a great programmer or system administrator. Only by doing the work will you develop the skills and knowledge to make you a successful system administrator. This course is built around that principle, and gives you ample opportunity to do the work, with as much support as we can offer.

Lectures & Quizzes: Each module will include many lectures and quizzes. The goal is to introduce you to a new topic and provide ample background information, then check for your understanding of the core concepts through the quiz. Many lectures include links to additional resources that you are welcome to review if you want to dig deeper into a particular topic. Those additional resources may also be useful when completing the lab assignments.

Lab Assignments: Throughout the semester you will be building a non-trivial system architecture iteratively; every week a new lab assignment will be due. Each lab builds upon the prior lab’s infrastructure, so it is critical that you complete each lab in a timely manner! This process also reflects the way system administration is done in the real world - breaking large projects into more readily achievable milestones helps manage the development process.

Following along that real-world theme, labs will mostly be graded on whether they achieve the goals as described in the lab assignment. You can think of each lab assignment as a directive given to you by your supervisor - if you meet those requirements, you are successful; however, if your system fails to meet those requirements, then it is not useful at all, even if it is partially complete. In practice, you may earn some partial credit for attempting a portion of a lab, but the majority of points will require full functionality.

Final Project: At the end of this course, you will design and evaluate a final project of your choosing to demonstrate your ability as a system administrator. This project can link back to your interests or other fields, and will serve as a capstone project for this course.

Grading

In theory, each student begins the course with an A. As you submit work, you can either maintain your A (for good work) or chip away at it (for less adequate or incomplete work). In practice, each student starts with 0 points in the gradebook and works upward toward a final point total earned out of the possible number of points. In this course, each assignment constitutes a portion of the final grade, as detailed below:

- 70% - Lab Assignments* (7 labs, 10% each lab)

- 10% - Quizzes (15 quizzes, 0.66% each)

- 10% - Discussions (5 discussions, 2% each)

- 10% - Final Project

All group work will include a REQUIRED peer evaluation component which can adjust that portion of the individual’s grade by up to 50%. If a student fails to contribute to a group assignment at all, their grade for that assignment will be reduced to a zero. Failure to complete the peer evaluation will result in a 10% grade deduction for that assignment.

Letter grades will be assigned following the standard scale:

- 90% - 100% → A

- 80% - 89.99% → B

- 70% - 79.99% → C

- 60% - 69.99% → D

- 00% - 59.99% → F
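
To see how the category weights and the letter scale fit together, here is a minimal sketch in Python. The function names and the sample scores are made up for illustration, and it ignores peer evaluation adjustments and late penalties. Canvas remains the official record of your grade.

```python
# Category weights from the grade breakdown above (fractions of the final grade).
WEIGHTS = {
    "labs": 0.70,
    "quizzes": 0.10,
    "discussions": 0.10,
    "final_project": 0.10,
}

# Lower bound of each letter grade on the standard scale, highest first.
LETTER_CUTOFFS = [(90.0, "A"), (80.0, "B"), (70.0, "C"), (60.0, "D"), (0.0, "F")]


def final_percentage(category_scores):
    """Combine per-category percentages (0-100) into a weighted final percentage."""
    return sum(WEIGHTS[name] * category_scores[name] for name in WEIGHTS)


def letter_grade(percentage):
    """Map a final percentage to a letter using the cutoffs above."""
    for cutoff, letter in LETTER_CUTOFFS:
        if percentage >= cutoff:
            return letter
    return "F"


if __name__ == "__main__":
    # Hypothetical student: category averages expressed as percentages.
    scores = {"labs": 85.0, "quizzes": 100.0, "discussions": 90.0, "final_project": 80.0}
    total = final_percentage(scores)
    print(f"{total:.2f}% -> {letter_grade(total)}")
```

Because the labs carry 70% of the weight, a weak lab average cannot be offset by perfect scores in the other three categories.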

Submission, Regrading, and Early Grading Policy

As a rule, submissions in this course will not be graded until after they are due, even if submitted early. Students may resubmit assignments many times before the due date, and only the latest submission will be graded. For assignments submitted via GitHub release tag, only the tagged release that was submitted to Canvas will be graded, even if additional commits have been made. Students must create a new tagged release and resubmit that tag to have it graded for that assignment.

Once an assignment is graded, students are not allowed to resubmit the assignment for regrading or additional credit without special permission from the instructor to do so. In essence, students are expected to ensure their work is complete and meets the requirements before submission, not after feedback is given by the instructor during grading. However, students should use that feedback to improve future assignments and milestones.

For the project milestones, it is solely at the discretion of the instructor whether issues noted in the feedback for a milestone will result in grade deductions in later milestones if they remain unresolved, though the instructor will strive to give students ample time to resolve issues before any additional grade deductions are made.

Likewise, students may ask questions of the instructor while working on the assignment and receive help, but the instructor will not perform a full code review nor give grading-level feedback until after the assignment is submitted and the due date has passed. Again, students are expected to be able to make their own judgments on the quality and completion of an assignment before submission.

That said, a student may email the instructor to request early grading on an assignment before the due date, in order to move ahead more quickly. The instructor’s receipt of that email will effectively mean that the assignment for that student is due immediately, and all limitations above will apply as if the assignment’s due date has now passed.

Collaboration Policy

In this course, all work submitted by a student should be created solely by the student without any outside assistance beyond the instructor and TA/GTAs. Students may seek outside help or tutoring regarding concepts presented in the course, but should not share or receive any answers, source code, program structure, or any other materials related to the course. Learning to debug problems is a vital skill, and students should strive to ask good questions and perform their own research instead of just sharing broken source code when asking for assistance.

That said, the field of system administration requires the use of lots of online documentation and reference materials, and the point of the class is to learn how to effectively use those resources instead of “reinventing the wheel from scratch” in each assignment. Whenever content in an assignment is taken from an outside source, this should be noted somewhere in the assignment.

Late Work

Warning

While my original intent was to have this course completely asynchronous and self-paced, I’ve found that students prefer having more strict deadlines than more flexibility, and many times they will perform better in the course when deadlines are enforced. Therefore, deadlines will be strictly enforced this semester. Read this late work policy very carefully! If you are unsure how to interpret it, please contact the instructors via email. Not understanding the policy does not mean that it won’t apply to you!

Due to the asynchronous nature of this course, staying on task and keeping up with deadlines is very important. Therefore, all course work must be submitted, and all interactively graded materials must be graded with the instructor or TA, on or before the posted due date to receive full credit. For labs, it is not simply enough to contact the instructor/TA asking to schedule a grading time before the due date; the grading itself must be completed before the due date in order to be considered “on time”.

Any work submitted and graded after the due date is subject to a deduction of 10% of the total points possible on the assignment for each class day that the assignment is late. For example, if an assignment is due on a Friday and is submitted the following Tuesday, it will be subject to a reduction of 20% of the total points possible, or 10% for each class day it was late (Monday and Tuesday in this example). Grading done on non-class days will be considered to have been submitted on the next available class day. Deductions for non-class days will still be automatically entered by Canvas - contact the instructor to have these deductions removed.

These deductions will only be applied to grades above 50% of the total points on the assignment. So, if you scored higher than 50%, your grade will be reduced by the late penalty down to a minimum grade of 50%. If you scored lower than 50% on the assignment, no deductions will be applied.
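
The arithmetic in this policy can be sketched in a few lines of Python. This is only an illustration: the function names are made up, and it assumes that class days are Monday through Friday with no holidays or breaks. The instructor's gradebook is the final word on any actual deduction.

```python
from datetime import date, timedelta


def class_days_late(due, graded):
    """Count class days (assumed Mon-Fri) after the due date, up to the grading date."""
    # Grading done on a non-class day counts as the next available class day.
    while graded.weekday() >= 5:
        graded += timedelta(days=1)
    days = 0
    current = due
    while current < graded:
        current += timedelta(days=1)
        if current.weekday() < 5:
            days += 1
    return days


def apply_late_penalty(earned, possible, days_late):
    """Deduct 10% of the possible points per class day late, never going below 50%."""
    floor = possible / 2
    if days_late <= 0 or earned <= floor:
        return earned  # on time, or already at or below the 50% floor
    penalty = possible * days_late / 10
    return max(earned - penalty, floor)


if __name__ == "__main__":
    due = date(2024, 9, 6)      # a Friday
    graded = date(2024, 9, 10)  # the following Tuesday
    late = class_days_late(due, graded)
    print(late, apply_late_penalty(90, 100, late))
```

With the Friday-to-Tuesday example from above, the sketch counts Monday and Tuesday as the two late class days, so a 90 out of 100 becomes a 70.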

Also, note that several labs in this class require successful completion of previous labs. If you are behind and choose to skip a lab assignment to catch up, you may still have to make up some or all of that work in order to complete a later lab. You may contact the instructor to discuss options for obtaining model solutions to previous labs if needed.

All course work must be submitted, and all interactively graded materials must be graded with the instructor, on or before the last day of the semester in which the student is enrolled in the course in order for it to be graded on time. No late work will be accepted after that date.

If you have extenuating circumstances, please discuss them with the instructor as soon as they arise so other arrangements can be made. If you know you have upcoming events that will prevent you from completing work in this course, you should contact the instructor ASAP and plan on working ahead before your event instead of catching up afterwards. If you find that you are getting behind in the class, you are encouraged to speak to the instructor for options to catch up quickly.

Incomplete Policy

Students should strive to complete this course in its entirety before the end of the semester in which they are enrolled. However, since retaking the course would be costly and repetitive for students, we would like to give students a chance to succeed with a little help rather than immediately fail students who are struggling.

If you are unable to complete the course in a timely manner, please contact the instructor to discuss an incomplete grade. Incomplete grades are given solely at the instructor’s discretion. See the official K-State Grading Policy for more information. In general, poor time management alone is not a sufficient reason for an incomplete grade.

Unless otherwise noted in writing on a signed Incomplete Agreement Form, the following stipulations apply to any incomplete grades given in this course:

- Students who request an incomplete will have their final grade capped at a C.

- Students will be given a maximum of 8 calendar weeks from the end of the enrolled semester to complete the course. It is expected that students have completed at least half of the course in order to qualify for an incomplete.

- Students understand that access to instructor and TA assistance may be limited after the end of an academic semester due to holidays and other obligations.

- For CC courses only:

- Students may receive at most two incompletes in Computational Core courses throughout their time in the program.

- Any modules in a future CC course which depend on incomplete work will not be accessible until the previous course is finished

- For example, if a student is given an incomplete in CC 210, then all modules in CC 310 will be inaccessible until CC 210 is complete

Recommended Texts & Supplies

To participate in this course, students must have access to a modern web browser and broadband internet connection. All course materials will be provided via Canvas. Modules may also contain links to external resources for additional information, such as programming language documentation.

The online textbook for this course can be found at https://textbooks.cs.ksu.edu/cis527/. All relevant pages from the textbook are also embedded into the appropriate Canvas modules.

Students in this course are expected to have access to a computer with virtual machine software (VMware, VirtualBox, Parallels, or other) installed and running. The computer should be capable of running multiple VMs simultaneously, which usually means having 8 GB of RAM and a moderately powerful processor. Contact the instructor if you have questions or concerns.

All K-State Computer Science students have access to free software from Microsoft and VMware. More information can be found on the K-State CS Support Website.

Since this class covers such a wide range of material, no single textbook will suffice. Therefore, students who would like a textbook should refer to resources available through the K-State Library and other online resources. The O’Reilly for Higher Education digital library contains an entire catalog of books published on that platform, and it is a great resource for this course.

We will also use several online resources as needed.

This book contains useful information for anyone thinking about pursuing a career in system administration or information technology in general:

“The Practice of System and Network Administration” by Thomas Limoncelli, Christina Hogan and Strata Chalup.

ISBN 0321492668 - eBook Editions Available - Amazon Link

Subject to Change

The details in this syllabus are not set in stone. Due to the flexible nature of this class, adjustments may need to be made as the semester progresses, though they will be kept to a minimum. If any changes occur, the changes will be posted on the K-State Canvas page for this course and emailed to all students.

Standard Syllabus Statements

Info

The statements below are standard syllabus statements from K-State and our program. The latest versions are available online here.

Academic Honesty

Kansas State University has an Honor and Integrity System based on personal integrity, which is presumed to be sufficient assurance that, in academic matters, one’s work is performed honestly and without unauthorized assistance. Undergraduate and graduate students, by registration, acknowledge the jurisdiction of the Honor and Integrity System. The policies and procedures of the Honor and Integrity System apply to all full and part-time students enrolled in undergraduate and graduate courses on-campus, off-campus, and via distance learning. A component vital to the Honor and Integrity System is the inclusion of the Honor Pledge which applies to all assignments, examinations, or other course work undertaken by students. The Honor Pledge is implied, whether or not it is stated: “On my honor, as a student, I have neither given nor received unauthorized aid on this academic work.” A grade of XF can result from a breach of academic honesty. The F indicates failure in the course; the X indicates the reason is an Honor Pledge violation.

For this course, a violation of the Honor Pledge will result in sanctions such as a 0 on the assignment or an XF in the course, depending on severity. Actively seeking unauthorized aid, such as posting lab assignments on sites such as Chegg or StackOverflow, or asking another person to complete your work, even if unsuccessful, will result in an immediate XF in the course.

This course assumes that all your course work will be done by you. Use of AI text and code generators such as ChatGPT and GitHub Copilot in any submission for this course is strictly forbidden unless explicitly allowed by your instructor. Any unauthorized use of these tools without proper attribution is a violation of the K-State Honor Pledge.

We reserve the right to use various platforms that can perform automatic plagiarism detection by tracking changes made to files and comparing submitted projects against other students’ submissions and known solutions. That information may be used to determine if plagiarism has taken place.

Students with Disabilities

At K-State it is important that every student has access to course content and the means to demonstrate course mastery. Students with disabilities may benefit from services including accommodations provided by the Student Access Center. Disabilities can include physical, learning, executive functions, and mental health. You may register at the Student Access Center or to learn more contact:

- Manhattan/Olathe/Global Campus – Student Access Center

- K-State Salina Campus – Julie Rowe; Student Success Coordinator

Students already registered with the Student Access Center please request your Letters of Accommodation early in the semester to provide adequate time to arrange your approved academic accommodations. Once SAC approves your Letter of Accommodation it will be e-mailed to you, and your instructor(s) for this course. Please follow up with your instructor to discuss how best to implement the approved accommodations.

Expectations for Conduct

All student activities in the University, including this course, are governed by the Student Judicial Conduct Code as outlined in the Student Governing Association By Laws, Article V, Section 3, number 2. Students who engage in behavior that disrupts the learning environment may be asked to leave the class.

Mutual Respect and Inclusion in K-State Teaching & Learning Spaces

At K-State, faculty and staff are committed to creating and maintaining an inclusive and supportive learning environment for students from diverse backgrounds and perspectives. K-State courses, labs, and other virtual and physical learning spaces promote equitable opportunity to learn, participate, contribute, and succeed, regardless of age, race, color, ethnicity, nationality, genetic information, ancestry, disability, socioeconomic status, military or veteran status, immigration status, Indigenous identity, gender identity, gender expression, sexuality, religion, culture, as well as other social identities.

Faculty and staff are committed to promoting equity and believe the success of an inclusive learning environment relies on the participation, support, and understanding of all students. Students are encouraged to share their views and lived experiences as they relate to the course or their course experience, while recognizing they are doing so in a learning environment in which all are expected to engage with respect to honor the rights, safety, and dignity of others in keeping with the K-State Principles of Community.

If you feel uncomfortable because of comments or behavior encountered in this class, you may bring it to the attention of your instructor, advisors, and/or mentors. If you have questions about how to proceed with a confidential process to resolve concerns, please contact the Student Ombudsperson Office. Violations of the student code of conduct can be reported using the Code of Conduct Reporting Form. You can also report discrimination, harassment or sexual harassment, if needed.

Netiquette

Info

This is our personal policy and not a required syllabus statement from K-State. It has been adapted from this statement from K-State Online, and the Recurse Center Manual. We have adapted their ideas to fit this course.

Online communication is inherently different than in-person communication. When speaking in person, many times we can take advantage of the context and body language of the person speaking to better understand what the speaker means, not just what is said. This information is not present when communicating online, so we must be much more careful about what we say and how we say it in order to get our meaning across.

Here are a few general rules to help us all communicate online in this course, especially while using tools such as Canvas or Discord:

- Use a clear and meaningful subject line to announce your topic. Subject lines such as “Question” or “Problem” are not helpful. Subjects such as “Logic Question in Project 5, Part 1 in Java” or “Unexpected Exception when Opening Text File in Python” give plenty of information about your topic.

- Use only one topic per message. If you have multiple topics, post multiple messages so each one can be discussed independently.

- Be thorough, concise, and to the point. Ideally, each message should be a page or less.

- Include exact error messages, code snippets, or screenshots, as well as any previous steps taken to fix the problem. It is much easier to solve a problem when the exact error message or screenshot is provided. If we know what you’ve tried so far, we can get to the root cause of the issue more quickly.

- Consider carefully what you write before you post it. Once a message is posted, it becomes part of the permanent record of the course and can easily be found by others.

- If you are lost, don’t know an answer, or don’t understand something, speak up! Email and Canvas both allow you to send a message privately to the instructors, so other students won’t see that you asked a question. Don’t be afraid to ask questions anytime, as you can choose to do so without any fear of being identified by your fellow students.

- Class discussions are confidential. Do not share information from the course with anyone outside of the course without explicit permission.

- Do not quote entire message chains; only include the relevant parts. When replying to a previous message, only quote the relevant lines in your response.

- Do not use all caps. It makes it look like you are shouting. Use appropriate text markup (bold, italics, etc.) to highlight a point if needed.

- No feigning surprise. If someone asks a question, saying things like “I can’t believe you don’t know that!” is not helpful, and only serves to make that person feel bad.

- No “well-actually’s.” If someone makes a statement that is not entirely correct, resist the urge to offer a “well, actually…” correction, especially if it is not relevant to the discussion. If you can help solve their problem, feel free to provide correct information, but don’t post a correction just for the sake of being correct.

- Do not correct someone’s grammar or spelling. Again, it is not helpful, and only serves to make that person feel bad. If there is a genuine mistake that may affect the meaning of the post, please contact the person privately or let the instructors know privately so it can be resolved.

- Avoid subtle -isms and microaggressions. Avoid comments that could make others feel uncomfortable based on their personal identity. See the syllabus section on Mutual Respect and Inclusion above for more information on this topic. If a comment makes you uncomfortable, please contact the instructor.

- Avoid sarcasm, flaming, advertisements, lingo, trolling, doxxing, and other bad online habits. They have no place in an academic environment. Tasteful humor is fine, but sarcasm can be misunderstood.

As a participant in course discussions, you should also strive to honor the diversity of your classmates by adhering to the K-State Principles of Community.

Discrimination, Harassment, and Sexual Harassment

Kansas State University is committed to maintaining academic, housing, and work environments that are free of discrimination, harassment, and sexual harassment. Instructors support the University’s commitment by creating a safe learning environment during this course, free of conduct that would interfere with your academic opportunities. Instructors also have a duty to report any behavior they become aware of that potentially violates the University’s policy prohibiting discrimination, harassment, and sexual harassment, as outlined by PPM 3010.

If a student is subjected to discrimination, harassment, or sexual harassment, they are encouraged to make a non-confidential report to the University’s Office of Civil Rights and Title IX (OCR & TIX) using the online reporting form. Incident disclosure is not required to receive resources at K-State. Reports that include domestic and dating violence, sexual assault, or stalking, should be considered for reporting by the complainant to the Kansas State University Police Department or the Riley County Police Department. Reports made to law enforcement are separate from reports made to OCR & TIX. A complainant can choose to report to one or both entities. Confidential support and advocacy can be found with the K-State Center for Advocacy, Response, and Education (CARE). Confidential mental health services can be found with Lafene Counseling and Psychological Services (CAPS). Academic support can be found with the Student Support and Accountability (SSA) office. SSA is a non-confidential resource. OCR & TIX also provides a comprehensive list of resources on their website. If you have questions about non-confidential and confidential resources, please contact OCR & TIX at civilrights@k-state.edu or (785) 532–6220.

Academic Freedom Statement

Kansas State University is a community of students, faculty, and staff who work together to discover new knowledge, create new ideas, and share the results of their scholarly inquiry with the wider public. Although new ideas or research results may be controversial or challenge established views, the health and growth of any society requires frank intellectual exchange. Academic freedom protects this type of free exchange and is thus essential to any university’s mission.

Moreover, academic freedom supports collaborative work in the pursuit of truth and the dissemination of knowledge in an environment of inquiry, respectful debate, and professionalism. Academic freedom is not limited to the classroom or to scientific and scholarly research, but extends to the life of the university as well as to larger social and political questions. It is the right and responsibility of the university community to engage with such issues.

Campus Safety

Kansas State University is committed to providing a safe teaching and learning environment for students and faculty members. In order to enhance your safety in the unlikely case of a campus emergency, make sure that you know where and how to quickly exit your classroom and how to follow any emergency directives. Current Campus Emergency Information is available at the University’s Advisory webpage.

Student Resources

K-State has many resources to help contribute to student success. These resources include accommodations for academics, paying for college, student life, health and safety, and others. Check out the Student Guide to Help and Resources: One Stop Shop for more information.

Student Academic Creations

Student academic creations are subject to Kansas State University and Kansas Board of Regents Intellectual Property Policies. For courses in which students will be creating intellectual property, the K-State policy can be found at University Handbook, Appendix R: Intellectual Property Policy and Institutional Procedures (part I.E.). These policies address ownership and use of student academic creations.

Mental Health

Your mental health and good relationships are vital to your overall well-being. Symptoms of mental health issues may include excessive sadness or worry, thoughts of death or self-harm, inability to concentrate, lack of motivation, or substance abuse. Although problems can occur anytime for anyone, you should pay extra attention to your mental health if you are feeling academic or financial stress, discrimination, or have experienced a traumatic event, such as loss of a friend or family member, sexual assault or other physical or emotional abuse.

If you are struggling with these issues, do not wait to seek assistance.

For Kansas State Salina Campus:

For Global Campus/K-State Online:

University Excused Absences

K-State has a University Excused Absence policy (Section F62). Class absence(s) will be handled between the instructor and the student unless there are other university offices involved. For university excused absences, instructors shall provide the student the opportunity to make up missed assignments, activities, and/or attendance specific points that contribute to the course grade, unless they decide to excuse those missed assignments from the student’s course grade. Please see the policy for a complete list of university excused absences and how to obtain one. Students are encouraged to contact their instructor regarding their absences.

Copyright Notice

© The materials in this online course fall under the protection of all intellectual property, copyright and trademark laws of the U.S. The digital materials included here come with the legal permissions and releases of the copyright holders. These course materials should be used for educational purposes only; the contents should not be distributed electronically or otherwise beyond the confines of this online course. The URLs listed here do not suggest endorsement of either the site owners or the contents found at the sites. Likewise, mentioned brands (products and services) do not suggest endorsement. Students own copyright to what they create.

Subsections of Secure Workstations

Introduction

Video Script

Welcome to the first module in this class! Module 1 is all about creating secure workstations. In this module, you’ll learn how to install virtual machine software, install the operating systems we’ll be using in the class, and then configure several aspects of the operating systems. You’ll create users, manage file permissions, install software, and secure those systems.

Before we begin, here is a short overview of one major concept in this module - the operating system. Every computer you use has some sort of operating system installed, even if you don’t realize it. For this module, we’ll be using the two most commonly used operating systems in industry today, Microsoft Windows 10 and Ubuntu Linux. I’m guessing that most of you have used at least one of these systems before, and in fact many of you are probably using one of them now.

Operating systems make up the core of a modern computer. This diagram shows exactly where the operating system fits in a larger hierarchy. A computer consists of hardware, and the operating system is the program that runs directly on the hardware. It is responsible for interfacing with the hardware, and running the applications needed by the user. On the very first computers, each application was itself an operating system. This allowed the programs to directly interface with the hardware, but the major drawback was that only one program could run at a time, and the computer had to be restarted between each program. In addition, each program would need to be customized to match the hardware it was running on.

By using an operating system, applications can be much more generalized, and multiple applications can be running at the same time. Meanwhile, hardware can be changed, sometimes even while the computer is running, and the operating system will manage the necessary interfaces to use that hardware. It is a very efficient system.

The major part of the operating system is called the kernel. It is the part specifically responsible for creating the interface between user applications and the hardware on the system. In fact, most of what you may consider an operating system is in fact applications running on the kernel. The start menu, control panel, and registry are actually applications in Windows. Because of this, there is some disagreement over whether they are considered part of the operating system or not. The same discussion has been going on for years in the Linux community. See the resources section for links to the discussion of GNU vs. Linux.

For this course, anything referring to the kernel or programs typically bundled along with the kernel will be considered the operating system. This follows the typical convention in most system administrator resources online.

If you are interested in learning about how operating systems are built and how the kernel functions, consider taking CIS 520: Operating Systems.

Next, we’ll start discussing Windows 10 and Ubuntu Linux in detail.

Assignment

Warning

This is the assignment page for Lab 1. It is placed before the rest of the module’s content so you may begin working on it as you review the content. Click Next below to continue to the rest of the module.

Lab 1 - Secure Workstations

Instructions

Create two virtual machines meeting the specifications given below. The best way to accomplish this is to treat this assignment like a checklist and check things off as you complete them.

If you have any questions about these items or are unsure what they mean, please contact the instructor. Remember that part of being a system administrator (and a software developer in general) is working within vague specifications to provide what your client is requesting, so eliciting additional information is a very necessary skill.

Note

To be more blunt - this specification may be purposefully designed to be vague, and it is your responsibility to ask questions about any ambiguities you find. Once you begin the grading process, you cannot go back and change things, so be sure that your machines meet the expected specification regardless of what is written here. –Russ

Also, to complete many of these items, you may need to refer to additional materials and references not included in this document. System administrators must learn how to make use of available resources, so this is a good first step toward that. Of course, there’s always Google!

Time Expectation

This lab may take anywhere from 1 - 6 hours to complete, depending on your previous experience working with these tools and the speed of the hardware you are using. Installing virtual machines and operating systems is very time-consuming the first time through the process, but it will be much more familiar by the end of this course.

Software

This lab is written with the expectation that most students will be using VMware Workstation or VMware Fusion to complete the assignment. That software is available on the Broadcom Website ( Download Workstation and Download Fusion ) and is free for personal and academic use. It is highly recommended for students who are new to working with virtual machines, since most of the assignments in this class are tailored to that platform.

If you are using another virtualization platform, you may have to adapt these instructions to fit. If you are unsure about any specification and how it applies to your setup, please contact the instructor.

You will also need installation media for the following operating systems:

- Windows 11 Version 24H2 or later - Visit the Azure Dev Tools for Teaching page from Microsoft to download this software. See the Azure Dev Tools page on the CS Support Wiki for instructions.

- Look for Windows 11 Education, Version 24H2 on the list of software available on the Azure Dev Tools site.

- File Name: en-us_windows_11_consumer_editions_version_24h2_x64_dvd_1d5fcad3.iso

- SHA 256 Hash: b56b911bf18a2ceaeb3904d87e7c770bdf92d3099599d61ac2497b91bf190b11

- Your file may vary as Microsoft constantly updates these installers.

- You may choose to upgrade to a later version of Windows 11 if prompted while installing updates, such as 25H2.

- Ubuntu 24.04 LTS (Noble Numbat) or later - Download from Ubuntu, an official Ubuntu Mirror (this is usually the fastest option), or the K-State CS Mirror (this is great if you are on campus).

  - File Name: ubuntu-24.04.3-desktop-amd64.iso

  - SHA 256 Hash: faabcf33ae53976d2b8207a001ff32f4e5daae013505ac7188c9ea63988f8328

  - If a point release is available (ex: 24.04.4), feel free to use that version. Do not upgrade to a newer LTS or non-LTS release such as Ubuntu 25.04, as those versions may have significant changes that are not covered in these assignments.
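The SHA 256 hashes listed above can be compared against a downloaded ISO before installing from it. The following is a minimal Python sketch of that check, assuming Python 3 is available on your host system. The script name `check_iso.py` and the helper names `sha256_of_file` and `matches_expected` are illustrative only and are not part of any course tooling.

```python
import hashlib
import sys
from pathlib import Path


def sha256_of_file(path, chunk_size=1024 * 1024):
    """Return the SHA-256 hex digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        # Read 1 MiB at a time so a multi-gigabyte ISO is never held in memory.
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def matches_expected(path, expected_hex):
    """Compare a file's digest to a published hash, ignoring case and whitespace."""
    return sha256_of_file(path) == expected_hex.strip().lower()


def main(argv):
    # Hypothetical usage: python3 check_iso.py <iso-path> <expected-sha256>
    if len(argv) != 2 or not Path(argv[0]).is_file():
        print("usage: check_iso.py <iso-path> <expected-sha256>")
        return 1
    ok = matches_expected(argv[0], argv[1])
    print("match" if ok else "MISMATCH")
    return 0 if ok else 1


if __name__ == "__main__":
    main(sys.argv[1:])
```

A mismatch does not always mean a corrupted download. As noted above, Microsoft updates its installers regularly, so a newer Windows ISO may legitimately have a different hash.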

Prior Versions

The original course materials were developed for Windows 10 Version 1803 and Ubuntu 18.04 LTS. Some course materials may still show the older versions. Students should use the software versions listed in bold above if at all possible, as these assignments have been verified using those versions. If not, please contact the instructor for alternative options. If you find any errors or issues using the updated versions of these systems, please contact the instructor.

Task 0: Install Virtualization Software

Install the virtualization software platform of your choice. It must support using Windows 11 and Ubuntu 24.04 as a guest OS. In general, you’ll need the latest version of the software.

VMware Workstation or VMware Fusion is recommended, and both are available free of charge for personal and academic use on the VMware Website. Download Workstation or Fusion Here

You may need to install the latest version available for download and then update it within the software to get to the absolute latest version that supports the latest guest OS versions.

VMware Licenses

As of 2024, VMware licenses are free for personal and academic use. You should be able to download the latest version and install it without a product key. –Russ

Task 1: Create a Windows 11 Virtual Machine

Create a new virtual machine for Windows 11. It should have 60 GB of storage available. If given the option, do not pre-allocate the storage, but do allow it to be separated into multiple files. This will make the VM easier to work with down the road. It should also have at least 2 GB of RAM. You may allocate more RAM if desired. You may also allocate additional CPU cores for better performance if desired.

Install Windows 11 in that virtual machine to a single partition. You may use the express settings when configuring Windows. Do not use a Microsoft account to sign in! Instead, create a local (non-Microsoft) account as defined below. You may also be asked to set the computer name, which is given below.

Note

Windows 11 has made it even more difficult to create a local account when installing. A video in this chapter discusses the process for Windows 10, but an extra step is now needed for Windows 11. Refer to this guide from Tom’s Hardware. Notice on that page that the command used in step 3 is OOBE\BYPASSNRO (without a space).

Windows 11 may also require 4 GB of RAM allocated to install. I believe that once you’ve installed Windows 11 you can reduce the RAM allocated in VMware to 2 GB and it should work well.

Configure the Windows 11 Virtual Machine as specified below.

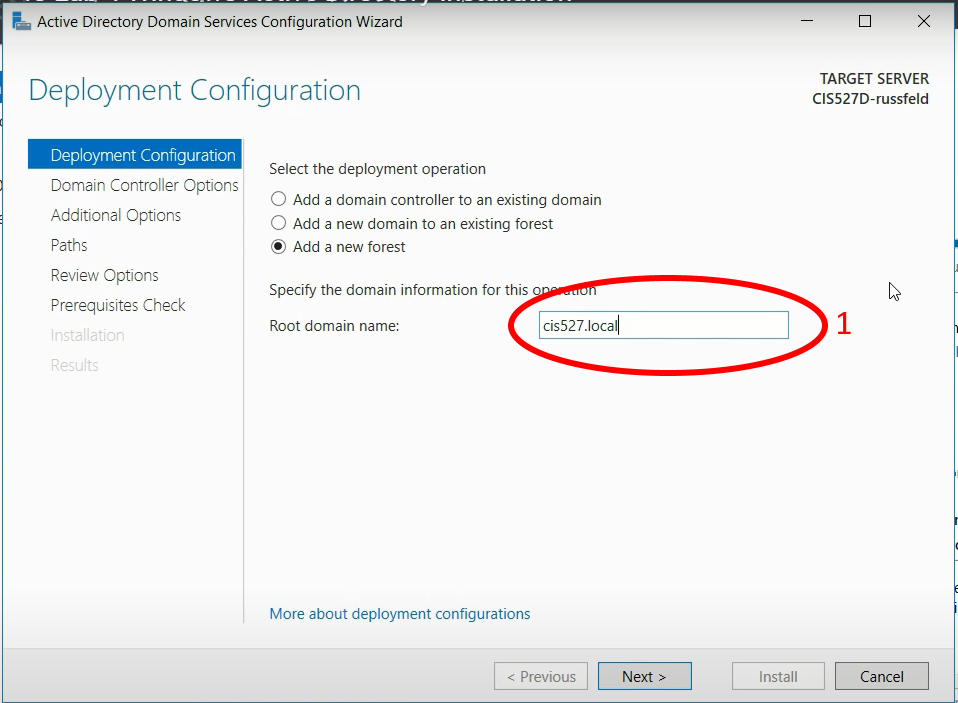

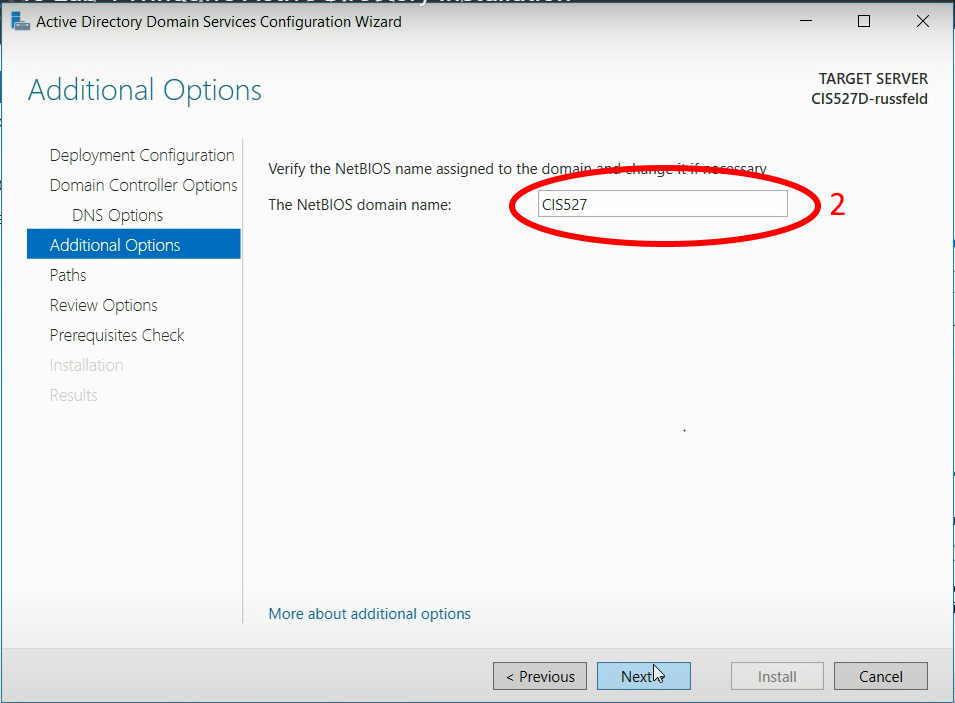

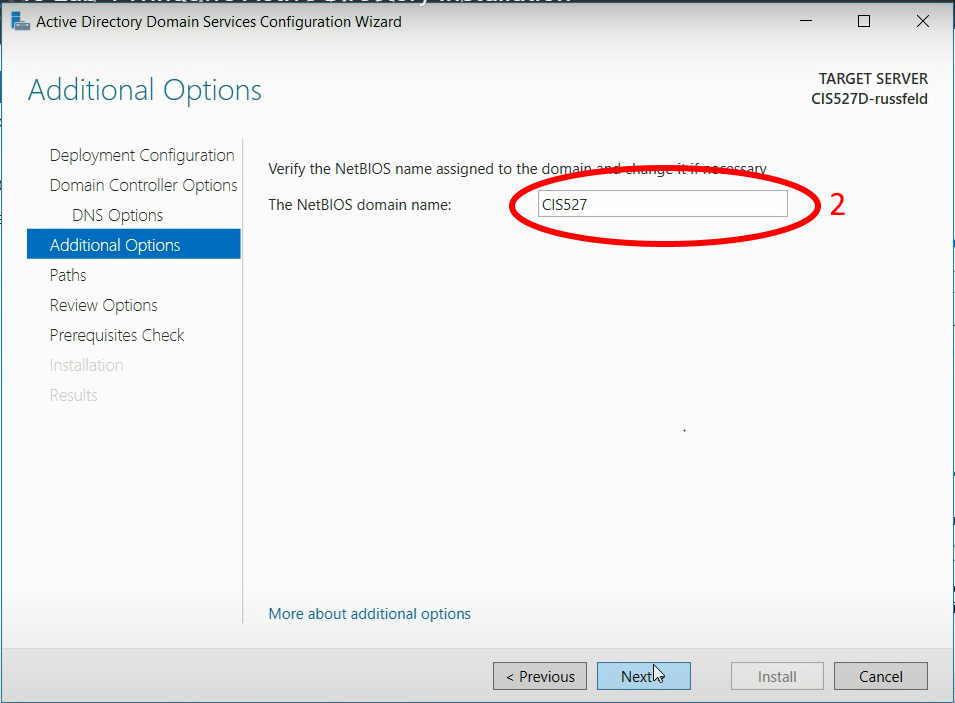

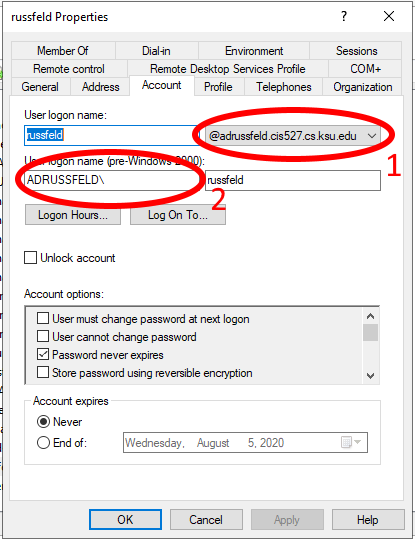

Computer Name: cis527w-<your eID> (example: cis527w-russfeld)

Don’t Forget To Set Computer Name!

This is very important, as it allows us to track your virtual machine on the K-State network in case something goes wrong in a later lab. By including both the class and your eID, support staff will know who to contact. A majority of students have missed this step in previous semesters, so don’t forget! The computer name must be changed after the Windows installation is complete. –Russ

Primary User Account:

- Username: cis527 | Password: cis527_windows

- It should be a member of Administrators & Users groups

Other User Accounts:

- AdminAccount | AdminPassword123 (Administrators & Users group)

- NormalAccount | NormalPassword123 (Users group)

- GuestAccount | GuestPassword123 (Guests group only)

- EvilAccount | EvilPassword123 (Users group)

Install Software

Configure Firewall

- Make sure Windows Firewall is enabled

- Allow all incoming connections to port 80 (for IIS)

Tip

You can test this by accessing the Windows VM IP Address from Firefox running on your Ubuntu VM, provided they are on the same virtual network.
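If you would rather check the port from a terminal than from a browser, the same test can be sketched in Python. This is a minimal example, assuming Python 3 is available on the machine you test from (it normally is on Ubuntu). The script name `check_port80.py` and the function `port_is_open` are illustrative names, and you must supply your own VM's IP address.

```python
import socket
import sys


def port_is_open(host, port=80, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False


def main(argv):
    # Hypothetical usage: python3 check_port80.py <vm-ip-address>
    if len(argv) != 1:
        print("usage: check_port80.py <vm-ip-address>")
        return 1
    reachable = port_is_open(argv[0])
    state = "open" if reachable else "closed or filtered"
    print(f"port 80 on {argv[0]}: {state}")
    return 0 if reachable else 1


if __name__ == "__main__":
    main(sys.argv[1:])
```

A successful connection only shows that the firewall allows the traffic and that something is listening on port 80. Loading the page in Firefox is still the better confirmation that the web server itself is responding.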

Install Windows Updates: Run Windows Update and reboot as necessary until all available updates are installed.

Multiple Updates Required!

Even though you may have installed a particular version of Windows, such as 24H2, you should run updates repeatedly until there are no more updates available. You may end up installing at least one major update rollup. Keep going until you are sure there are no more updates to be found.

Automatic Updates: Make sure the system is set to download and install security updates automatically.

Task 3: Windows Files & Permissions

Warning

Read the whole task before you start! You have been warned. –Russ

Create the folder C:\cis527. It should be owned by the cis527 account, but make sure all other users can read and write to that folder.

Within C:\cis527, create a folder for each user created during task 2 except for cis527, with the folder name matching the user’s name. Make sure that each folder is owned by the user of the same name, and that that user has full permissions to its namesake folder.

- For example, user AdminAccount should have a folder C:\cis527\AdminAccount and have full ownership and permissions on that folder.

Create a group named AdminGroup containing cis527 and AdminAccount, and set permissions on C:\cis527 for that group to have full access to each folder created in C:\cis527.

- In this example, the folder AdminAccount should still be owned by AdminAccount and that account should have explicit full access, but the AdminGroup group should also have full access to that folder.

- No other user should be able to access any other user’s folder. For example, EvilAccount cannot access GuestAccount’s folder, but AdminAccount and cis527 can, as well as GuestAccount, who is also the owner of its own folder.

Tip

When you create a group and add a user to that group, it does not take effect until you reboot the computer.

In each subfolder of C:\cis527, create a text file. It should have the same owner and access permissions as the folder it is contained in. The name and contents of the text file are up to you.

Tip

Use either the cis527 or AdminAccount account to create these files, then modify the owner and permissions as needed. Verify that they can only be accessed by the correct users by logging in as each user and seeing what can and can’t be accessed by that user, or by using the permissions auditing tab. Many students neglect this step, leaving the file owner incorrect.

Don’t remove the SYSTEM account or the built-in Administrator account’s access from any of these files. Usually this is as simple as not modifying their permissions from the defaults.

See this screenshot and this screenshot for what these permissions should look like in PowerShell. This was created using the command Get-ChildItem -Recurse | Get-Acl | Format-List in PowerShell. These screenshots are from an earlier version of this lab using different paths and usernames, but the permissions structure is the same.

Task 4: Create an Ubuntu 24.04 Virtual Machine

Create a new virtual machine for Ubuntu 24.04 Desktop. It should have 30 GB of storage available. If given the option, do not pre-allocate the storage, but do allow it to be separated into multiple files. This will make the VM easier to work with down the road. It should also have at least 2 GB of RAM. You may allocate more RAM if desired. You may also allocate additional CPU cores for better performance if desired.

Note

Ubuntu 24.04 seems to be really RAM hungry right now, so I recommend starting with 2 GB of RAM if you have 8 GB or more available on your system. The installer may freeze if you try to install with only 1 GB of RAM allocated. Once you have it installed, you may be able to reduce this at the expense of some performance if you are short on available RAM (as it will use swap space instead). In Ubuntu, swap should be enabled by default after you install it, but you can learn more about it and how to configure it here. When we get to Module 5 and discuss Ubuntu in the cloud, we’ll come back to this and discuss the performance trade-offs in that scenario. –Russ

Install Ubuntu 24.04 Desktop in that virtual machine to a single partition. You may choose to use a minimal install when prompted. You will be asked to create a user account and set the computer name. Use the information given below.

Tip

The Ubuntu installation will sometimes hang when rebooting after installation in a VM. If that happens, wait about 30 seconds, then click VM > Power > Restart Guest in VMware (or similar) to force a restart. It should not harm the VM.

Configure the Ubuntu 24.04 Virtual Machine as specified below.

Computer Name: cis527u-<your eID> (example: cis527u-russfeld)

Don’t Forget To Set Computer Name!

This is very important, as it allows us to track your virtual machine on the K-State network in case something goes wrong in a later lab. By including both the class and your eID, support staff will know who to contact. A majority of students have missed this step in previous semesters, so don’t forget! You should be prompted for a computer name as part of the installation process, but it will try to auto-complete it based on the chosen username and must be changed. –Russ
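Since this step is so often missed, it can help to confirm the name after installation. The following is a small Python sketch of such a check, assuming Python 3 on the Ubuntu VM. The script name `check_hostname.py` and the function names are hypothetical, and the eID shown in the usage comment is just the example from this page.

```python
import socket
import sys


def expected_name(prefix, eid):
    """Build the computer name in the form <prefix>-<eID>."""
    return f"{prefix}-{eid}".lower()


def hostname_matches(hostname, prefix, eid):
    """Compare case-insensitively, ignoring any domain suffix after the first dot."""
    short = hostname.split(".")[0].lower()
    return short == expected_name(prefix, eid)


def main(argv):
    # Hypothetical usage: python3 check_hostname.py russfeld
    if len(argv) != 1:
        print("usage: check_hostname.py <your eID>")
        return 1
    current = socket.gethostname()
    ok = hostname_matches(current, "cis527u", argv[0])
    print(f"{current}: {'ok' if ok else 'expected ' + expected_name('cis527u', argv[0])}")
    return 0 if ok else 1


if __name__ == "__main__":
    main(sys.argv[1:])
```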

Primary User Account:

- Username: cis527 | Password: cis527_linux

- The account should have Administrator type or be in the sudo group

Other User Accounts:

- adminaccount | AdminPassword123 (Administrator type or sudo group)

- normalaccount | NormalPassword123 (Normal type)

- guestaccount | GuestPassword123 (Normal type)

- evilaccount | EvilPassword123 (Normal type)

Install Software

- Open VM Tools (open-vm-tools-desktop) (recommended) -OR- VMware Tools (do not install both)

- Mozilla Firefox (firefox)

- Mozilla Thunderbird (thunderbird)

- Apache Web Server (apache2)

- Synaptic Package Manager (synaptic)

- GUFW Firewall Management Utility (gufw)

- ClamAV (clamav)

Configure Firewall

- Make sure Ubuntu Firewall (use ufw, not iptables) is enabled

- Allow all incoming connections to port 80 (for Apache)

Tip

You can test this by accessing the Ubuntu VM IP Address from Firefox on your Windows VM, provided they are on the same virtual network.

Install Updates: Run system updates and reboot as necessary until all available updates are installed.

Automatic Updates: Configure the system to download and install security updates automatically each day.
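One way to confirm this setting is to read the APT periodic configuration. The sketch below is a minimal Python example that assumes the settings live in `/etc/apt/apt.conf.d/20auto-upgrades`, which is typical for Ubuntu but may differ on your system. The function names and the script itself are illustrative only.

```python
import re
import sys
from pathlib import Path

# Assumed location of the periodic-update settings on a typical Ubuntu install.
AUTO_UPGRADES = Path("/etc/apt/apt.conf.d/20auto-upgrades")

# Matches lines such as: APT::Periodic::Unattended-Upgrade "1";
SETTING = re.compile(r'APT::Periodic::([A-Za-z-]+)\s+"(\d+)"\s*;')


def parse_periodic(text):
    """Return a dict of APT::Periodic settings, where each value is an interval in days."""
    settings = {}
    for line in text.splitlines():
        stripped = line.strip()
        if stripped.startswith("//"):
            continue  # skip commented-out settings
        for key, value in SETTING.findall(stripped):
            settings[key] = int(value)
    return settings


def daily_updates_enabled(settings):
    """True when package lists refresh and unattended upgrades both run every day."""
    return (settings.get("Update-Package-Lists") == 1
            and settings.get("Unattended-Upgrade") == 1)


def main():
    if not AUTO_UPGRADES.is_file():
        print(f"{AUTO_UPGRADES} not found; automatic updates may not be configured")
        return 1
    settings = parse_periodic(AUTO_UPGRADES.read_text())
    print(settings, "->", "daily" if daily_updates_enabled(settings) else "not daily")
    return 0


if __name__ == "__main__":
    main()
```

A value of 0 disables a setting, and a value of 1 means it runs every day. Which update sources are installed automatically is configured separately, so this check only covers the schedule.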

Task 6: Ubuntu Files & Permissions

Warning

Read the whole task before you start! You have been warned. –Russ

Create a folder /cis527 (at the root of the system, not in a user’s home folder). Any user may read or write to this folder, and it should be owned by root:root (user: root; group: root).

Within /cis527, create a folder for each user created during task 5 except for cis527, with the folder name matching the user’s name. Make sure that each folder is owned by the user of the same name, and that that user has full permissions to its namesake folder.

- For example, user adminaccount should have a folder /cis527/adminaccount and have full ownership and permissions on that folder.

Create a group named admingroup and set permissions on each folder using that group to allow both cis527 and adminaccount to have full access to each folder created in /cis527.

- In this example, the folder adminaccount should still be owned by adminaccount and that account should have explicit full access, but the admingroup group should also have full access to that folder.

- No other user should be able to access any other user’s folder. For example, evilaccount cannot access guestaccount’s folder, but adminaccount and cis527 can, as well as guestaccount, who is also the owner of its own folder.

Tip

When you create a group and add a user to that group, it does not take effect until you reboot the computer.

In each subfolder of /cis527, create a text file. It should have the same owner and access permissions as the folder it is contained in. The name and contents of the text file are up to you.

Tip

Use either the cis527 or adminaccount account to create these files, then modify the owner, group, and permissions as needed. Verify that they can only be accessed by the correct users by logging in as each user and seeing what can and can’t be accessed by that user, or by using the su command to become that user in the terminal. Many students neglect this step, leaving the file owner incorrect.

See this screenshot for what these permissions may look like in Terminal. This was created using the command ls -lR in the Linux terminal. The screenshot is from an earlier version of this lab using different paths and usernames, but the permissions structure is the same.
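As a complement to reading the ls -lR output by eye, the owner, group, and mode of every entry can be collected programmatically. The following Python sketch is one possible way to do that, assuming Python 3 on the Ubuntu VM. The function names are illustrative, and the check only looks at the classic permission bits, so it will not reflect any extra access granted through ACLs.

```python
import grp
import os
import pwd
import stat
import sys


def describe(path):
    """Return (mode string, owner name, group name) for a path, like one ls -l row."""
    info = os.stat(path)
    try:
        owner = pwd.getpwuid(info.st_uid).pw_name
    except KeyError:
        owner = str(info.st_uid)  # no passwd entry for this uid
    try:
        group = grp.getgrgid(info.st_gid).gr_name
    except KeyError:
        group = str(info.st_gid)  # no group entry for this gid
    return stat.filemode(info.st_mode), owner, group


def others_have_access(path):
    """True if any read, write, or execute bit is set for 'other' users."""
    return bool(os.stat(path).st_mode & stat.S_IRWXO)


def report(root):
    """Walk root and return (path, mode, owner, group) for every entry below it."""
    rows = []
    for current, dirs, files in os.walk(root):
        for name in sorted(dirs + files):
            full = os.path.join(current, name)
            rows.append((full, *describe(full)))
    return rows


if __name__ == "__main__" and len(sys.argv) == 2 and os.path.isdir(sys.argv[1]):
    # Hypothetical usage: sudo python3 check_perms.py /cis527
    for path, mode, owner, group in report(sys.argv[1]):
        flag = "  <-- accessible to other users" if others_have_access(path) else ""
        print(f"{mode} {owner}:{group} {path}{flag}")
```

Run it with sudo so that folders your own account cannot read are still included in the report. Logging in as each user, as described in the tip above, remains the most reliable test.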

Task 7: Make Snapshots

For each of the virtual machines created above, create a snapshot labelled Lab 1 Submit in your virtualization software before you submit the assignment. The grading process may require making changes to the VMs, so this gives you a restore point before grading starts.

Task 8: Schedule A Grading Time

Contact the instructor and schedule a time for interactive grading. You may continue with the next module once grading has been completed.

Virtualization & VMware

Note

As of 2024, VMware Workstation and VMware Fusion are free for personal and academic use. You can download them directly from the VMware website.

Resources

Video Script

In this class, we will be making heavy use of virtualization software to allow us to run multiple operating systems simultaneously on the same computer. This video provides a quick overview of what virtualization is and how it works.

First, a simple view of how a computer works. In essence, whenever you tell the computer to run a program, you are actually telling the operating system what to do. It will then load the requested application software into memory, and begin executing it. The software will send instructions through the operating system to the hardware, describing what actions to take. The hardware will then use electronic circuits to perform those operations.

This diagram shows what a computer would look like without virtualization. This describes how most computers work today, and it has been this way for over 30 years.