Subsections of Software Engineering

Introduction


Video Script

Welcome back, everyone. In this video we’re going to be talking about software engineering. You should remember this particular machine, the ENIAC, which was the first general-purpose electronic computer in the United States. Programming the ENIAC was a really slow and laborious process, though. To load a program, ENIAC’s programmers (pictured here are Gloria Ruth Gordon, later Bolotsky, and Ester Gerston) would physically rewire the hardware into a new configuration corresponding to the calculations they wanted to run. You can see them here moving the wires between different plugboards to make the computer carry out different calculations and operations. The task was made even more difficult by the secrecy surrounding the machine. Initially, the programmers weren’t even allowed to see the schematics and had to pass their wiring instructions along to the technicians. So the programmers weren’t told how the machine was built or how it worked, and they couldn’t even run their programs themselves. You can imagine how difficult it would be to write successful programs for a computer when you didn’t even know how it worked.

But this was very similar to Alan Turing’s original conception of his famous Turing machine, which carried out hardwired instructions on data encoded as zeros and ones on an infinitely long paper tape. Turing had an epiphany with this machine: by having the machine read its instructions as specific sequences of zeros and ones when it started up, he could encode the program directly on the infinite tape, alongside the data it was operating on. Now, this was a huge leap, because before this we had a tyranny of numbers problem, where things were just getting so complex that it became nearly impossible to make anything substantial in today’s terms of software.

But once we could store the software alongside the data being used, that started to simplify the process. These ideas were incorporated into electronic computers by several computer scientists, including J. Presper Eckert and John Mauchly, inventors of the ENIAC, as well as John von Neumann, who was the first to publish a description of this architecture, in his paper the First Draft of a Report on the EDVAC. For this reason, a computer architecture allowing for stored programs is referred to as the von Neumann architecture, and it is the basis for modern digital computers. Stored programs also allowed us to develop bootstrap code, or libraries of common procedures that could be reused by future programs and were loaded into the computer as it warmed up. This is an important predecessor to modern programming libraries and operating systems. As you can imagine, on a lot of these early computers you ended up having to program basic operations yourself any time you wanted to do anything. A lot of things were done by hand, things that we take for granted today like user input, the mathematical operations built into modern programming languages, and all of the libraries that make our software a lot easier to write and read. These didn’t exist for a lot of the earlier software that was being developed. So our ability to store programs along with the data, and along with the system itself, made future programs a lot easier and faster to write.
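
To make the stored-program idea concrete, here is a minimal sketch in Python of a toy machine whose instructions and data live in the same memory. The instruction names (`LOAD`, `ADD`, `STORE`, `HALT`) and the memory layout are invented for illustration; they are not the instruction set of the EDVAC or any other real machine.

```python
# A toy stored-program machine: instructions and data share one memory list.
# The instruction set here is hypothetical and chosen only for illustration.

def run(memory):
    """Execute the program stored in memory, starting at address 0."""
    acc = 0  # accumulator register
    pc = 0   # program counter: address of the next instruction
    while True:
        op, arg = memory[pc]
        pc += 1
        if op == "LOAD":      # copy a value from memory into the accumulator
            acc = memory[arg]
        elif op == "ADD":     # add a value from memory to the accumulator
            acc += memory[arg]
        elif op == "STORE":   # write the accumulator back into memory
            memory[arg] = acc
        elif op == "HALT":
            return memory
        else:
            raise ValueError(f"unknown instruction: {op}")

program = [
    ("LOAD", 4),   # address 0
    ("ADD", 5),    # address 1
    ("STORE", 6),  # address 2
    ("HALT", 0),   # address 3
    2,             # address 4: data
    3,             # address 5: data
    0,             # address 6: result goes here
]

result = run(list(program))
print(result[6])  # prints 5
```

Changing the program means changing the contents of memory rather than rewiring anything, which is the point of the stored-program design.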

The next major invention in software design was the development of programming languages and the associated technologies of compilers and interpreters, which allowed programmers to write programs in a higher-level programming language that would later be translated into machine language for a stored-program computer. Pictured here is Grace Hopper, the creator of the first higher-order programming language, FLOW-MATIC, and an influential co-creator of COBOL, which was a very popular business programming language. The development of programming languages is especially important in that it allowed us to develop abstractions that simplify software development, letting us express significantly more complex ideas in code without significantly more lines of code.

But as programming languages diverged from their mathematical roots and became more expressive, new challenges arose in making sure that programs were clear and easy to understand. This was further complicated by the increasingly sophisticated nature of software, which sought to accomplish more than earlier programs ever had. Furthermore, the growing industry demand for software developers often led to incompletely trained programmers entering the field. If you could code even a little bit, you could probably land a programming job. This was a pretty important turning point in the industry, because we had rapid growth in the technology itself. Not too long after World War II ended, electronic computers started to become smaller and more popular. All of a sudden they didn’t take up entire rooms, and they weren’t the size of school buses anymore, especially as we approached the personal computer era.

We needed a lot more people to program. There was significantly higher demand for software, and even greater demand for what that software could do. As a result, all this sloppy programming, poorly understood designs, and lack of systematic planning and execution by poorly trained programmers led to an era in our field labeled the software crisis. This spurred the development of a lot of new technologies and approaches for developing software.

Some of the key projects from this period include the IBM OS/360 project, which ran drastically over budget while employing over 1,000 programmers, and, famously, the Therac-25 radiation therapy machines, which would regularly display an error to their operators. An operator would come in and try to administer a dose of radiation to, let’s say, a cancer patient, and then would try redoing the treatment because there was an error on the screen. They wouldn’t realize that the machine had already delivered a dose of radiation, which led to several patients dying or being seriously injured.

Throughout the software crisis, a lot of important computer scientists made contributions to software engineering. Some of the more important computer scientists of the day, like Edsger Dijkstra and Niklaus Wirth, sought to address these challenges through language design and better education for fledgling computer scientists. This 1968 letter to the editor of Communications of the ACM by Dijkstra underscores his concerns with the goto statement, which allowed for a very disjointed style of programming that made programs difficult to debug and understand, because the goto statement lets you jump back and forth between steps in your program without any concern for anything else. This, along with readability and usability concerns as well as efficiency issues, became one of the driving forces behind the evolution of programming languages. Many programming languages in common use today, like Python, don’t even have a goto statement. Other improvements included the addition of modules and information hiding, introduced by David Parnas, concepts that eventually evolved into what we now refer to as object-oriented programming languages.
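
As a small illustration of information hiding in the sense described above, here is a sketch in Python. The `Stack` class and its method names are assumptions chosen for the example, not something taken from Parnas’s work. Callers see only `push`, `pop`, and `len`, so the internal storage could be swapped for something else without breaking them.

```python
class Stack:
    """A stack whose storage is hidden behind a small public interface."""

    def __init__(self):
        # Leading underscore: internal detail by Python convention.
        self._items = []

    def push(self, item):
        """Add an item to the top of the stack."""
        self._items.append(item)

    def pop(self):
        """Remove and return the top item."""
        if not self._items:
            raise IndexError("pop from empty stack")
        return self._items.pop()

    def __len__(self):
        return len(self._items)


s = Stack()
s.push("design")
s.push("code")
print(s.pop())  # prints code
print(len(s))   # prints 1
```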

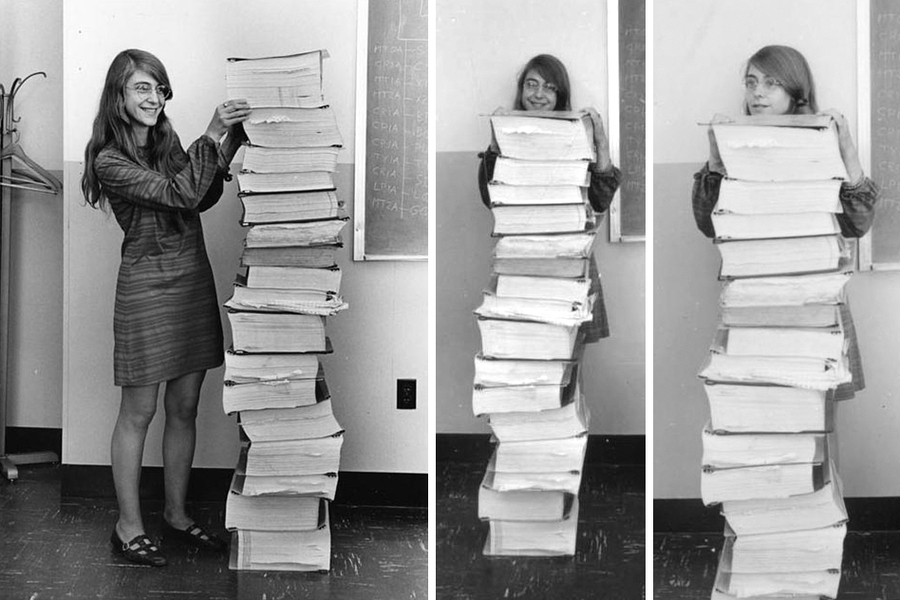

In the same year, Margaret Hamilton, one of the NASA engineers responsible for simulating the Apollo missions on a computer, one of the most involved computer simulations attempted up to that time, coined the term software engineering to describe the role she had played. The stack of documents next to her is actually one of the simulation results from that effort, which helps underscore just how large software projects were growing at that time.

Margaret Hamilton - 2017 CHM Fellow

Software Development Life Cycle Part 1


Video Script

Now that we’ve talked a little bit about the history of software development and software engineering and how it has evolved over the years, what are some of the key activities that we actually engage in during this process? You’ve already used and worked with a lot of these, even if you haven’t really made the connection yet. Software engineering comes with quite a few different processes, but overall you can expect these six stages.

To begin the process, we start with requirements gathering, where we go around and collect information about the software that we’re being tasked with developing. This involves meeting with clients, users, business folks, and just about anybody and everybody who might be a stakeholder, whether they’re involved in using the software or funding the project.

Once we have gathered all of the requirements for the software, we can make the jump into software design. Here we start to architect the project and lay it out overall, but we haven’t done any programming yet. That typically doesn’t happen until the design has been completed. Then we start the implementation, and after the code has been finished, or at least a stage of the code has been finished, that code is passed off to the testing phase. Sometimes you might see implementation and testing done as one phase, if you’re on a small team or depending on the approach you’re taking to the development life cycle.

After the software has been fully tested, it gets deployed or installed, and then it goes into a maintenance mode. Think of Windows updates and the various other software updates you get for mobile apps, games, and everything in between. That’s the maintenance phase, where we’re trying to update, patch, and fix anything that may have been broken or that we didn’t catch during the testing phase. But you see that now I’m back at requirements gathering.

Most of the software development life cycles that we see and encounter are a never-ending cycle. Software is never perfect the first time we build it. More often than not, after creating what we refer to as a prototype, we end up going back to the requirements gathering stage, talking to our customers, our consumers, and our stakeholders, and giving it another shot, whether that’s a simple update, a feature addition, or in some cases even a complete rewrite if we didn’t get it right the first time. But let’s take a look at some of the early software development life cycles that were developed. You’ll notice that each of the activities we just talked about lends itself very well to a systematic, step-by-step approach, where each activity is followed by the next and we loop back around.

This is essentially the approach that was developed as a standard strategy for software engineering after the work by Margaret Hamilton in the 1960s and over the next few decades, an approach that we call the waterfall model of software development, for reasons obvious from the graphic: each phase flows into the next in a highly linear fashion. Waterfall development, as I mentioned, is a very linear process. This isn’t like the software development life cycle that we showcased earlier, where we start with requirements gathering, cycle through each phase, and end up back at the beginning. The waterfall method assumes that each phase is done in one shot, so there are no redos.

So we gather the requirements, design the software, implement it, test it (that’s verification), and then go into maintenance mode. Of course, we install and deploy the software in between there. But once we’ve deployed the software and entered maintenance mode, we’re not going back to do more requirements gathering or redesign anything; it’s just simple bug fixes at that point. The waterfall model emphasizes that the activities are arranged as discrete phases in sequential order, although depending on the version of the model you look at, some splashback is acceptable. If you imagine an actual waterfall, some water gets splashed back up and falls back down. So maybe we get to the implementation phase and recognize that our design is severely flawed. We may bump back up, change the design a little bit, and then go back down into the coding phase.
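
Here is a rough sketch in Python of that linear flow, including the one-step splashback. The phase names paraphrase the ones above, and the `waterfall` function and its `splashbacks` parameter are hypothetical names made up for this example.

```python
# Phase names paraphrase the script; this list is an assumption, not a standard.
PHASES = [
    "requirements",
    "design",
    "implementation",
    "verification",
    "deployment",
    "maintenance",
]

def waterfall(splashbacks=()):
    """Return the order in which phases are visited.

    `splashbacks` lists phases whose first visit reveals a flaw in the
    previous phase, sending the project back up exactly one step.
    """
    pending = set(splashbacks)
    visited = []
    i = 0
    while i < len(PHASES):
        phase = PHASES[i]
        visited.append(phase)
        if phase in pending and i > 0:
            pending.discard(phase)  # only splash back once per phase
            i -= 1
        else:
            i += 1
    return visited

print(waterfall())                    # each phase exactly once, in order
print(waterfall(["implementation"]))  # design is revisited once
```

Notice there is no path from maintenance back to requirements, which is what separates this from the cyclic life cycle described earlier.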

But here, in the waterfall model, the entire system is designed, implemented, and deployed all at once. Planning, scheduling, target dates, and budgets are all set in advance, often by contract, so there’s really no flexibility as far as funding and deployment dates go. With the waterfall model there’s usually extensive documentation and very tight control, so this is a very heavily managed process. That makes it really useful for projects that require a lot of structure. We’ll talk about some other models in a little bit that have a lot less structure and offer a much more flexible approach to software design.

Additionally, during this time, scientific management techniques were adapted for use in managing software development. One of the landmark books capturing this evolution was Barry Boehm’s Software Engineering Economics, where he argued for considering the trade-offs between adapting existing software and creating new software in economic terms. He used statistical analyses to develop equations like this one, seeking to relate development effort in man-years to the metric of source lines of code, or SLOC. Now, there’s a lot of debate around measuring developer productivity and the cost of software by the number of lines of code produced, and there are a lot of fallacies behind that metric as well, but some people still fall into that trap.
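
The equation shown on the slide isn’t reproduced in this script. As a hedged illustration of the kind of model Boehm proposed, here is a minimal sketch of the Basic COCOMO form, effort = a × KLOC^b, using the commonly cited coefficients for small “organic” projects (a = 2.4, b = 1.05, with schedule = 2.5 × effort^0.38). The function name and defaults are assumptions for this example, and the model reports effort in person-months, so divide by 12 to get person-years.

```python
def basic_cocomo(kloc, a=2.4, b=1.05, c=2.5, d=0.38):
    """Estimate effort and schedule from size in thousands of lines of code.

    Defaults are the commonly cited Basic COCOMO "organic" coefficients.
    Returns (effort in person-months, schedule in calendar months).
    """
    effort = a * kloc ** b        # grows slightly faster than linearly
    schedule = c * effort ** d    # calendar time grows much more slowly
    return effort, schedule

for size in (10, 20, 40):
    effort, schedule = basic_cocomo(size)
    print(f"{size} KLOC: {effort:.1f} person-months over {schedule:.1f} months")
```

Because the exponent `b` is greater than 1, doubling the size of a project more than doubles the estimated effort.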

Another book from that time period challenges some of those arguments, which, as I mentioned, were very controversial. This book, The Mythical Man-Month, details many software engineering management fallacies. For example, you might think that adding more programmers to a late project will absolutely help it finish sooner, but not so much. In practice, bringing new developers up to speed consumes much of the current team’s time and slows development overall. Let’s say you’ve been working on a really big, important project for nine months, but you’re a month or two behind schedule. Management brings in new developers to try to get you back on track, but it actually puts you even further behind schedule, because you had to stop being productive to get the new people caught up.
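
One way to see why extra people don’t scale cleanly is to count the pairwise communication paths on a team, which grow as n(n − 1)/2. This is a simplified sketch that assumes every developer has to coordinate with every other developer; real teams are usually organized to avoid exactly that. The function name is made up for the example.

```python
def communication_paths(team_size):
    """Number of distinct pairs of people on a team of the given size."""
    return team_size * (team_size - 1) // 2

for n in (3, 5, 10):
    print(n, "developers:", communication_paths(n), "paths")
# 3 developers: 3 paths
# 5 developers: 10 paths
# 10 developers: 45 paths
```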

Another fallacy discussed in The Mythical Man-Month is the use of crunch time. Crunch time is typically portrayed as an increase in productivity: spending 60 to 80 hours a week to meet that deadline, pulling 18- to 20-hour days, programming all night. I’m sure you have probably done this when studying for a really big exam. But crunch time doesn’t necessarily increase productivity. Studies have shown that the break-even point for software development lies at around 35 hours: research suggests that the average limit for a software developer is about 30 to 35 hours of programming per week. Beyond that, fatigued programmers introduce bugs into their code at a faster rate than they remove them. That ends up costing a lot more money and time, because developers who have been coding for too long are more prone to producing bad code. I’m sure some of us have experienced this as students. When you’re really exhausted, or have had way too much coffee, you may not be studying as efficiently, and your productivity doesn’t increase in proportion to the extra time you’re spending.

Software Development Life Cycle Part 2


Video Script

Let’s take a look at a few other methodologies in software development. This one is in complete contrast to the waterfall model and pretty much all other development methodologies, because there really isn’t anything to it. It’s generally known as cowboy coding, or code and fix. In many ways this is the anti-software-engineering approach, where planning, testing, documentation, pretty much everything, is ignored in favor of immediately writing code. Predictably, this leads to a lot of what we refer to as spaghetti code: a mangled structure with no clear path through it, sprinkled with suboptimal algorithms, memory leaks, and structural issues. It’s just a mess. Imagine a string of Christmas lights that was just thrown into a box in January. You go back in December to put those lights up, and magically they’ve tangled together into a big ball that takes hours upon hours to unravel. Sometimes it’s just easier to throw it away and start from scratch.

Even more unfortunately, cowboy coding is very much how most students learn to approach software development in their early assignments, and it’s often carried with them into industry. So the sooner we can start to teach design, structure, and process, the better the habits you will develop, especially as you get closer to an internship and a full-time job.

Not surprisingly, issues in software engineering are typically coupled with periods of surging growth in the field of computer science. This graph, based on data collected by the Taulbee Survey, tracks the growth of bachelor’s degrees in computer science from 1966 through about 2010. You’ll notice two very clear spikes in this graph, one peaking around 1985 and the second around 2004. That first rise was a big issue here at K-State and at many other universities, for that matter. To meet the growing demand from students with the limited faculty available, graduate students taught many of the undergraduate courses. Similar measures were employed at other institutions, likely compromising the quality of preparation for an entire generation of software engineers, because people were thrown into teaching positions who weren’t well qualified or experienced enough to teach those topics.

A similar trend occurred during the second peak, although thankfully not so much with us. Hopefully you can guess what the driving force was behind the growth at that time. This one peaked around 2004, in the wake of the dot-com boom. The first surge that we saw began in the early 1980s, when the personal computer started to be introduced, and that sparked the first surge in demand for computer science degrees. The second peak reflects the dot-com bubble. As the web came out around 1990, we saw a huge burst of companies in the late ’90s thinking, “Ah, we can make it big if we get on the internet,” and so we saw a huge surge in the need for computer science. But after the dot-com bubble burst in the early 2000s, we saw a rapid decline in the demand for computer science degrees. Thankfully, over the past few years we have seen an increase in the number of degrees awarded in computer science, and we haven’t seen that die off quite yet.

The growth of the internet, the rise of internet companies, their eventual collapse, and the success of the survivors all underscored the need for a more reactive and flexible style of software development than was possible with the waterfall model. The dot-com boom sparked a lot of different technology and software needs, especially because we had never developed software for something like the internet before. The web needed to be robust, secure, and most importantly, scalable. That meant cowboy coding couldn’t meet the need either. It was super flexible, because we could just start with no planning whatsoever, but it often led to very insecure software that didn’t scale well. So, as a result of the dot-com boom, new strategies emerged to create more robust software engineering approaches, and these started to be employed more widely.

One of these, of course, was simply prototyping, which is especially useful for developing experimental or difficult-to-estimate systems. The idea here is to build a prototype to establish the base functionality, complexity, and worthwhileness of the specific product. This begins with implementation, or coding, and then leads to other activities like requirements gathering and software design. So try something out and see if it works. See if the customer likes it. Let’s say they like this but not that: we keep the things they like and throw away the stuff they don’t want. This is pretty much the cowboy coding, or code-and-fix, process with a more developed and structured approach. It’s not just a standalone process; there’s a lot more involved and a lot more structure here.

Software Development Life Cycle Part 3


Video Script

The iterative development process, or iterative development model, breaks software development down into much smaller segments. This allows for a lot more change and variation in the development cycle. The iterative development process works through many small waterfalls. If you look at each one of these chunks, each one of these stages, it’s pretty much the waterfall process: overall requirements are gathered, then we analyze those requirements, design, implement, and test. And this is a cycle. In this case our waterfall is circular, so we can keep going around and around. Then we release a prototype or a version of our product. Each time we go through this and make a release, we demonstrate it to our customer, get feedback, and work that back into our next cycle. We just keep iterating like this until we make our final release to our customer.
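
The loop described above can be sketched in a few lines of Python. This is a toy model under loud assumptions: each pass through the loop stands in for one small waterfall, and `iterate`, `get_feedback`, and the example customer are all hypothetical names invented for illustration.

```python
def iterate(requirements, get_feedback, max_iterations=10):
    """Build a product one slice at a time, folding feedback into later cycles."""
    pending = list(requirements)
    release = []
    cycles = 0
    while pending and cycles < max_iterations:
        feature = pending.pop(0)   # analyze, design, implement, test one slice
        release.append(feature)    # release a new version to the customer
        cycles += 1
        pending.extend(get_feedback(release))  # feedback becomes new requirements
    return release

def feedback(release):
    # Hypothetical customer: asks for search once login has shipped.
    if release[-1] == "login":
        return ["search"]
    return []

print(iterate(["login", "profile"], feedback))
# ['login', 'profile', 'search']
```

The `max_iterations` cap is there so that a customer who always asks for more can’t keep the loop running forever.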

One more development model that we want to talk about was proposed by Boehm. It combines prototyping and waterfall development, and it’s a very similar idea to iterative design. The spiral development model focuses on risk assessment and management by breaking projects into smaller parts, allowing for evaluation and change as each part is individually finished. It works in four main segments. First, you determine the objectives, alternatives, and constraints of that particular iteration, that small part. Next, you evaluate the alternatives and identify and resolve the risks. We want to mitigate the risks, because the more risk there is, the more cost is involved in the project. Then you develop, and finally you plan for the next iteration. As you cycle outward, you start small at the center with very small projects. As you complete those smaller projects, the foundation of your software slowly grows, and you slowly introduce more of the things that are needed in the project, like different models, different prototypes, and more testing. The closer you get to the outside edge of the spiral, the closer you get to deployment. Again, like iterative design, this is much more flexible and overall much more appropriate to what modern software lends itself to.

After this, we come to something that’s very popular in industry today, called the Agile process. While the methodologies we’ve talked about so far were being developed by software engineers in high-level planning positions, a new philosophy was emerging from the rank-and-file developers, as expressed in the Agile Manifesto. The manifesto reads: “We are uncovering better ways of developing software by doing it and helping others do it. Through this work we have come to value individuals and interactions over processes and tools, working software over comprehensive documentation, customer collaboration over contract negotiation, and responding to change over following a plan. That is, while there is value in the items on the right, we value the items on the left more.” So while Agile is often thought of as a software engineering process by itself, it’s not. Rather, it’s a guiding philosophy that can be applied in conjunction with many other software development approaches. That said, it clearly works better with something like iterative or spiral development, or any other reactive style of software engineering, than with the more rigid approaches embodied by the waterfall model. As part of the reading, I’m going to ask that you go check out the agilemanifesto.org website. On there they have all the principles that are associated with agile development, which embody what agile actually means.

In the Agile process, you’ll typically see something like this. The philosophy behind Agile is extreme flexibility. You mostly work in what we refer to as sprints. Each of these small sprints will always have some sort of deliverable product for the consumer or the customer. It looks very similar to what we see with the software development life cycle and a lot of the iterative or spiral processes: you brainstorm or gather requirements, you design, prototype, develop, and QA, so you test and test and test. Then you deploy. As you deploy, you do a little bit more testing, and you deliver that product to your customer, or maybe to the next person up in your organization. All that feedback is then worked back into the next iteration, your next sprint. Typically, what you’ll see with Agile software development is that each sprint focuses on one or two small features of the software. Once that feature is completed, the developer moves on to the next sprint, either developing that same feature further with modifications requested by the consumer, or moving on to the next feature.
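
As a very rough sketch of the “one or two small features per sprint” idea, the Python below splits a backlog into sprints. The `plan_sprints` function and the example backlog are hypothetical; real sprint planning weighs effort estimates and priorities rather than just counting features.

```python
def plan_sprints(backlog, features_per_sprint=2):
    """Split an ordered backlog into sprints of at most N features each."""
    return [
        backlog[i:i + features_per_sprint]
        for i in range(0, len(backlog), features_per_sprint)
    ]

backlog = ["login", "profile page", "search", "notifications", "export"]
for number, sprint in enumerate(plan_sprints(backlog), start=1):
    print(f"Sprint {number}: {sprint}")
# Sprint 1: ['login', 'profile page']
# Sprint 2: ['search', 'notifications']
# Sprint 3: ['export']
```

Feedback from a delivered sprint would normally be added to the backlog before the next sprint is planned.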

Another improvement that came in the mid-’90s was the development of a suite of standardized diagrams for modeling software: the Unified Modeling Language, or UML. UML allowed complex software systems to be diagrammed, discussed, and shared between developers in an easier-to-interpret form. Before then, there was no real standard for documentation, yet a lot of complex software was being developed, and each programmer would document and diagram in their own unique way. Just like we each have our own unique way of speaking and writing, each developer had their own way of not only writing their code, but also diagramming and discussing that code. UML offered a standardized way to share the overall structure and interactions, things like the activity, sequence, or flow of the program, among different developers. Someone who has been trained to interpret and read UML could understand the software at a much higher level, and a much deeper level, even without knowing how to program. For now, this concludes our discussion of software engineering and software development. There’s obviously a lot more here that we did not cover. In future classes we’ll take a much deeper dive into the architecture, modeling, and development of software.

Software Engineering (Crash Course)

Pattern on the Stone Reading

Read Pattern on the Stone, Chapter 8.