Artificial Intelligence

The Rise of Artificial Intelligence | Off Book | PBS Digital Studios

Introduction to AI


Video Script

Welcome back, everyone. In this video, we’re going to take a look at artificial intelligence. But before we get to the artificial part, what actually is intelligence? What does it mean to be intelligent? I ask this question of all my students, from the kindergarteners I do outreach with all the way up to college classes like this one, and I get a very wide range of responses. The usual default answer is that being intelligent means someone is smart, right? But that’s not always the case. A person who is smart or has a high IQ may be good at math, really good at remembering information, and good at creating information, but that alone isn’t necessarily intelligent behavior. Intelligence involves a lot of different things, including reasoning and problem solving, which smart people can of course do as well. Our ability to reason and solve problems, even to a limited degree, is definitely intelligent behavior: you encounter a situation in your environment, reason about it, and solve the problem to get around that particular obstacle. We see these kinds of behaviors in all sorts of beings, right? Not just humans; they are documented quite frequently in the animal kingdom as well. Intelligent behavior also involves our ability to construct knowledge. As we interact with our environment, we learn, and learning is another intelligent behavior. We gather data and information: things we see, taste, hear, and touch. Our ability to perceive our environment through our senses is also an intelligent behavior, but that information needs to go somewhere, right? An intelligent being, like an animal or a human, can take that information and construct knowledge from it to a certain degree.

Now, the level of intelligence can affect what kind of knowledge can be constructed, starting with some of the most basic kinds, right? My kid, for example, has one of those buckets with different-shaped holes on top and a bunch of different blocks. The square block goes into the square hole, and the star block goes into the star hole. And it takes a little while, right? That’s information you can get from trial and error. Trying to put the square block in the triangle hole isn’t going to work out very well, but eventually you figure out that, hey, the square goes into the square hole, and so on. That is one of our most basic ways of constructing knowledge and learning from our environment. Our ability to perceive also affects our ability to learn and construct knowledge. Think about the first time you accidentally touched a hot stove or a hot pan. That pain is something we remember; it’s ingrained in us. Before it happens, we don’t truly understand what it means to touch something very hot, and we don’t sense it as real danger until we actually touch it and feel that pain. Then, hopefully, we learn not to touch that hot pan again, or at least not on purpose. So intelligent behavior isn’t necessarily about someone being smart. Smart people are certainly intelligent and exhibit intelligent behavior, but intelligent behavior isn’t necessarily tied to how smart someone is. But if that is intelligence, what does it mean to have artificial intelligence? On a very basic level, it is a non-biological thing that has or exhibits some form of intelligence. That could be a machine, a robot, a computer, a phone, whatever it may be: something that is not living but does exhibit some form of intelligence.
Now, it may not be total human intelligence, but it may be partial human intelligence.

A lot of this came from a man named John McCarthy, who really helped start the field of AI. In 1956, John McCarthy, who was at Dartmouth College at the time, helped organize a conference to discuss the idea of artificial intelligence. The 1950s came shortly after World War II ended. Everyone was home, and the big computers built for the war were no longer used only for military purposes but were starting to be put to industrial uses. John McCarthy has a lot of other things credited to him, including the Lisp programming language, but he is primarily credited with coining the term “artificial intelligence” and helping organize the field around it. During that summer of 1956, several of the leading minds interested in this kind of idea (AI really wasn’t a solidified field at that point) gathered for a brainstorming session several weeks long, essentially a little mini conference. Attendees included John McCarthy, of course, along with Allen Newell, Herbert Simon, Claude Shannon (who we’ve talked about before), Marvin Minsky, and quite a few others. I’ll talk about a lot of these folks later on in the following videos. Pictured here are some of the surviving members of the conference at its 50th-anniversary celebration in 2006. Overall, this conference introduced a lot of the groundwork and ideas of artificial intelligence, and it helped shape the field for many years to come.

So we’ve already discussed what intelligence is and what artificial intelligence is. According to John McCarthy, AI “is the science and engineering of making intelligent machines, especially intelligent computer programs. It is related to the similar task of using computers to understand human intelligence, but AI does not have to confine itself to methods that are biologically observable.” I really like that last part, that AI doesn’t have to confine itself to what is biologically observable. There are a lot of intelligent behaviors in the world, right? Especially in animals, cells, all sorts of things. But there are also a lot of things machines can do that biological processes can’t. So what does that mean for the definition of intelligence? McCarthy went on to say that intelligence is the computational part of the ability to achieve goals in the world. Varying kinds and degrees of intelligence occur in people, many animals, and even some machines, as we discussed with artificial intelligence. But there’s really no solid definition of intelligence that doesn’t depend on relating it to human intelligence. After all, we as humans are the ones defining intelligence, and most of the time we define it in terms of our own. The problem is that we cannot yet characterize in general what kinds of computational procedures we want to call intelligent. We understand some mechanisms of intelligence and not others. We don’t yet fully understand the human brain or many of the nuances of our own intelligence. So it’s hard to quantify intelligence as a whole, and especially artificial intelligence when we’re trying to move toward human-like AI.

John McCarthy (1927-2011): Artificial Intelligence

Watch through 5:38

Are Machines Intelligent?


Video Script

But let’s continue talking about intelligent behavior, what it means for human behavior, and what happens when we try to make AI that seems human. One of the problems with human-like AI is that it forces the computer to mimic all human behavior, not just intelligence. For an AI to truly seem human, it has to reproduce all of our behavior. That includes things like slow response times, typos, common misconceptions, and filler words like the ones I’m using right now. None of these may be considered intelligent, or clean, or “perfect,” but a successful AI agent must be able to do them in order to pass what we call the Turing test, which we’ll talk about in a second. Likewise, an AI must sometimes act as if it can’t solve problems that are well within its abilities. It has to do this simply because those problems are unsolvable by a human, or could not be solved by a human in the time it takes the computer. So if you’re trying to make an AI that behaves and acts like a human, it needs to do as humans do, and it can’t be perfect.

That is where Alan Turing comes into play. We’ve already talked about Alan Turing to some degree, especially his work on the Bombe and the Enigma machine, as well as the Turing machine. He also wrote, “I propose to consider the question, ‘Can machines think?’” This is the opening line of his 1950 paper, “Computing Machinery and Intelligence,” and with it he tried to open up some discussion. He was deeply interested in artificial intelligence, but unfortunately, there was no good way at the time to determine whether a system was truly intelligent. So in that paper, he describes one way to test a machine’s intelligence, now known as the Turing test. The basic idea of the Turing test is as follows. One person, the tester, sits in a room at a computer terminal capable of simple text-based chat and converses with someone else. That someone else could be a computer, which tries to pass itself off as a human by responding to the tester’s prompts. The tester must then determine whether he or she is conversing with a computer or a real person. No machine has truly passed the Turing test with flying colors. The Turing test as a whole is biased and has some problems, so it isn’t perfect either. Some algorithms have passed the Turing test to a certain degree, but there are some problems here.

Can you think of any problems a Turing test may have? Some of them are exposed by a thought experiment built around the Turing test called the Chinese room. John Searle proposed the Chinese room in 1980 in his paper “Minds, Brains, and Programs.” In this setup, an English-speaking person is placed in a room with sufficient supplies and a set of instructions written entirely in English. The instructions direct them to accept Chinese characters as input and to output a response in Chinese characters. On the other side of the wall is a native Chinese speaker performing what amounts to a Turing test. In this case, the Chinese speaker is convinced that whoever is on the other side of the wall is indeed a human. However, the “computer,” which is really a human who only speaks English, is completely unaware of what the conversation taking place in Chinese is about. So in this case, is the machine, or the person, intelligent? Or is it merely so good at following instructions that it appears to be intelligent? This is the trick, right? Are we just good at giving the computer instructions to mimic human behavior, or is it just something that’s good at following instructions? Following instructions does involve some intelligent behavior, although not a whole lot, but it doesn’t exhibit the full range of intelligence. These are some of the fundamental issues with the Turing test and with trying to truly determine whether an AI is intelligent the way a human is. And this leads to an endless debate between strong AI and weak AI.

When you think of AI in movies, it’s usually a strong AI, which is designed to completely mimic or surpass human intelligence. In most movies you watch, the AI can converse perfectly in English, answer any question, and look things up instantly in ways no machine can currently manage. Iron Man is a perfect example, with Tony Stark’s AI Jarvis, or Friday in some of the later movies. Unfortunately, strong AI is just not a reality at this time, and there’s even some debate about whether it’s possible at all. Most of the AI we deal with today is weak AI, also called narrow AI, which is designed to perform only a subset of intelligent actions. Just because something is a weak AI doesn’t mean it isn’t capable of high-quality intelligent actions. Weak AI is often extremely good at one particular kind of behavior, but not so great at others.

Intelligent Agents


Video Script

The differentiation between strong and weak AI leads to the idea of intelligent agents. Intelligent agents are entities that perceive things about their environment through sensors and act upon that environment with effectors, ideally in a way the agent itself can then perceive. What are some examples of intelligent agents you’ve seen in your life recently? For me, there are things like Amazon Alexa or smartwatches. Basically, anything that has a sensor and acts on its own upon its environment is some form of intelligent agent. A lot of these will be categorized as weak AI, right? They’re very good at one thing. Simple examples are a thermostat or a Roomba. Roombas are really good at vacuuming your floor, but not so great at opening your door for you, cooking your food, or telling you what temperature it is in your house.

Each robotic or intelligent agent has four primary elements. First, percepts: what it can perceive from its environment. An agent isn’t going to be able to sense everything about its environment. Second, sensors: how it senses. Sensors also limit what it can understand, because an agent only knows about what it can sense. Third, actions: what it can do to its environment. An agent will only know a subset of all possible actions, depending on the kind of AI and how that particular agent is used. Some of those actions or environments become extremely complex, even in a game like checkers. Checkers is a simple game overall, but it becomes extremely complex if you try to go through all possible board configurations. The last major element of an agent is its effectors: how it does things. Sometimes an agent may not be capable of doing enough in its environment, but its effectors are the means by which it actually carries out its actions there.
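To make the four elements concrete, here is a minimal Python sketch of an agent loop. It assumes a hypothetical two-square world loosely modeled on a robot vacuum; the `VacuumWorld` class, the square names, and the action names are illustrative inventions, not any real product’s API. The agent perceives only its own square, chooses from a small fixed set of actions, and changes the world only through its effector.

```python
from dataclasses import dataclass, field

# The agent only knows this small, fixed set of actions.
ACTIONS = ("Suck", "Left", "Right")


@dataclass
class VacuumWorld:
    """Hypothetical two-square world ("A" and "B"); each square is dirty or clean."""
    dirty: dict = field(default_factory=lambda: {"A": True, "B": True})
    location: str = "A"

    def sense(self):
        # Sensor: the agent perceives only its current square, not the whole world.
        return (self.location, self.dirty[self.location])

    def apply(self, action):
        # Effector: the only way the agent can change its environment.
        if action == "Suck":
            self.dirty[self.location] = False
        elif action == "Right":
            self.location = "B"
        elif action == "Left":
            self.location = "A"


def choose_action(percept):
    # Map the current percept to one of the known actions.
    location, is_dirty = percept
    if is_dirty:
        return "Suck"
    return "Right" if location == "A" else "Left"


def run(world, steps):
    history = []
    for _ in range(steps):
        percept = world.sense()          # perceive through sensors
        action = choose_action(percept)  # decide among known actions
        world.apply(action)              # act through effectors
        history.append((percept, action))
    return history


world = VacuumWorld()
for percept, action in run(world, 4):
    print(percept, "->", action)
```

Over four steps, the agent cleans square A, moves right, cleans square B, and moves back. It never “knows” that the whole world is clean, because its sensor only reports one square at a time.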

This also brings us to a discussion of how an AI agent should act, whether we’re talking about weak AI or strong AI. A rational agent, which is what we perceive as intelligent, is concerned with doing the right thing given what it believes, based on what it perceives. It should take the information it can gather from its environment, combine it with everything it already knows, and act on that information in the right way. As you can guess, it may not know everything, but it will try to do the best it can. We can also measure an agent by its utility, that is, how successful it is. How good is it? What is the right thing, and how do we figure out how well it did that thing? To understand the effectiveness of artificial agents, we have to ask ourselves questions about our beliefs, the uncertainty of knowledge, how knowledge is represented, and how we can reason and learn from it. By studying our own rational behavior, that is, why we as humans do the things we do, we can hopefully better understand what goes into the decision-making process and how to build better rational agents. In that sense, though, even when we’re trying to build perfect AI, our human bias gets into the AI we build. This is why different robotic vacuums sometimes behave entirely differently. Different people build different models of vacuums, thermostats, or whatever kind of AI you’re looking at, based on their own experiences, research, and knowledge. That bias leads to different kinds of utility, different measures of success, different actions, and different rational behavior.
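One common way to formalize “doing the right thing given what it believes” is to pick the action with the highest expected utility. The sketch below assumes a hypothetical smart thermostat that is 30% sure someone is home. The states, probabilities, and utility numbers are made up for illustration. Two designers who score the same outcomes differently end up with agents that act differently on identical beliefs, which is the bias point above in miniature.

```python
# Hypothetical example: a smart thermostat deciding whether to heat the house.
ACTIONS = ("heat", "off")

# Beliefs: the agent's estimated probability of each world state.
beliefs = {"home": 0.3, "away": 0.7}

# Two designers score the same outcomes differently (illustrative numbers).
comfort_first = {("heat", "home"): 10, ("heat", "away"): -4,
                 ("off", "home"): -8, ("off", "away"): 2}
energy_first = {("heat", "home"): 10, ("heat", "away"): -10,
                ("off", "home"): -8, ("off", "away"): 2}


def expected_utility(action, beliefs, utility):
    # Weight each outcome's utility by how likely the agent believes that state is.
    return sum(p * utility[(action, state)] for state, p in beliefs.items())


def rational_choice(actions, beliefs, utility):
    # A rational agent picks the action with the highest expected utility.
    return max(actions, key=lambda a: expected_utility(a, beliefs, utility))


print(rational_choice(ACTIONS, beliefs, comfort_first))  # heat
print(rational_choice(ACTIONS, beliefs, energy_first))   # off
```

With the comfort-first scores, heating has an expected utility of about 0.2 versus -1.0 for staying off. Raising the penalty for heating an empty house flips the decision, even though the agent’s beliefs never changed.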

Here are a couple of very simple examples of categories of agents we may see in weak AI. A simple reflex agent just performs an action based on what it collects or senses from its environment. This can be as simple as an automatic door: you walk into Walmart, and the door opens for you. An awesome magic door, right? No one has to open the door for you. But that is a basic AI: something that can perceive its environment and act upon it. It doesn’t really know what its action does, though. It knows that the door is open, but it doesn’t know what that means for the environment. It doesn’t know that it’s letting people into the store, or, for example, that it’s letting energy out. We could do something a little more advanced, like a learning agent, which can learn from its actions and do better the next time; some form of critic or utility will be involved here. An example is a smart thermostat in your home, like a Nest or an Ecobee. Smart thermostats try to learn your behaviors: when you’re out of the house, the temperature outside, how comfortable you like your house, whether you like it colder or warmer, and which room you’re in. That way they can make the room you’re in more comfortable than the rest of the house and save you energy. These devices learn your behavior, or other types of behavior, so they can improve their results. A lot of these AIs base their decisions on utility: the measure of success they’ve been programmed to look at.
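The contrast between the two agent types can be sketched in a few lines of Python. Both the door rule and the `LearningThermostat` class are hypothetical simplifications, not how any real door or Nest/Ecobee device is implemented. The door maps a percept straight to an action. The thermostat keeps a learned setpoint per hour and uses the user’s manual adjustments as its critic, moving part of the way toward each correction.

```python
def door_reflex_agent(motion_detected: bool) -> str:
    # Simple reflex agent: one condition-action rule, no memory, and no model
    # of what opening the door means for the building.
    return "open" if motion_detected else "closed"


class LearningThermostat:
    """Hypothetical learning agent that learns a preferred setpoint per hour."""

    def __init__(self, default: float = 20.0, learning_rate: float = 0.5):
        self.default = default
        self.learning_rate = learning_rate
        self.preferred = {}  # hour of day -> learned setpoint

    def setpoint(self, hour: int) -> float:
        return self.preferred.get(hour, self.default)

    def observe_adjustment(self, hour: int, user_setting: float) -> None:
        # Critic: a manual change by the user signals the current setpoint was off,
        # so move part of the way toward what the user chose.
        current = self.setpoint(hour)
        self.preferred[hour] = current + self.learning_rate * (user_setting - current)


thermostat = LearningThermostat()
thermostat.observe_adjustment(7, 22.0)
thermostat.observe_adjustment(7, 22.0)
print(door_reflex_agent(True), thermostat.setpoint(7), thermostat.setpoint(12))
```

After two morning corrections to 22 °C, the 7 a.m. setpoint moves from 20 to 21 and then to 21.5. Hours the user never touched stay at the default.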

AI Advancements


Video Script

Let’s take a look at some early attempts at artificial intelligence. Allen Newell and Herbert Simon were a couple of early researchers in AI. In 1955, they wrote a program designed to mimic the problem-solving skills of a human being and called it the Logic Theorist. This program is now widely considered the first AI program. It was used to prove theorems from Whitehead and Russell’s Principia Mathematica, and it actually found a more elegant proof for at least one of those theorems than the one the authors had published. At the time, interest in the growing idea of AI was swirling, and the experts decided to come together and discuss the topic at length at the conference John McCarthy had wanted and successfully organized. Many different types of AI have been developed over the years, with many different methodologies behind them. But a lot of this comes down to how we represent knowledge and information for the AI. There’s a ton of information in the world. Even something as simple as a smartwatch detecting your heart rate and oxygen levels generates a lot of information over a short period of time. So how do we represent that knowledge so that our AI can actually consume it and make good, rational decisions from it?

That also includes search, that is, how we find and dig our way through all of that data, as well as expert systems and recommender systems. A really good example of a recommender system is Amazon. It learns your shopping habits and recommends items to you. That recommender system tries to learn your likes and dislikes and what you might need or want to buy next. Other areas include planning, that is, charting a course between two points, and reasoning. There is also machine learning, which we’ll talk about in a little bit with neural networks. And there are special topics like natural language processing, meaning AI that can understand human speech. Understanding speech isn’t very difficult for us, but for a computer, understanding and producing human-like speech is an incredibly difficult problem.
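As a rough illustration of the idea behind “customers who bought this also bought,” here is a minimal item co-occurrence recommender in Python. It is an assumption-laden toy: the order data are invented, and it is not a description of how Amazon’s system actually works. It simply counts how often items appear in the same order and recommends the most frequent companions.

```python
from collections import Counter
from itertools import combinations


def co_purchase_counts(orders):
    # Count how often each ordered pair of distinct items appears in the same order.
    counts = Counter()
    for order in orders:
        for a, b in combinations(sorted(set(order)), 2):
            counts[(a, b)] += 1
            counts[(b, a)] += 1
    return counts


def recommend(item, orders, k=2):
    # Recommend the items most often bought together with `item`.
    counts = co_purchase_counts(orders)
    scores = Counter({b: n for (a, b), n in counts.items() if a == item})
    return [b for b, _ in scores.most_common(k)]


# Invented purchase history for illustration.
orders = [
    ["tent", "sleeping bag", "lantern"],
    ["tent", "sleeping bag"],
    ["tent", "stove"],
    ["book", "lamp"],
]
print(recommend("tent", orders, k=1))  # ['sleeping bag']
```

Items never bought alongside a tent, like the book and lamp, never show up as tent recommendations. This is the “learns your likes” idea in its crudest form.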

To dive a bit deeper into AI, we’re going to look at the last tool mentioned: neural networks. In 1969, Marvin Minsky, one of the founders of MIT’s AI lab, co-wrote a book with Seymour Papert called Perceptrons. The book analyzed the perceptron, an early model of a neural network, and its limitations. So what are neural networks? The idea behind a neural network lies in the power of individual neurons and the connections between them. Each neuron can perform a certain task, and its output is then passed on to other neurons. The strength of a neural network comes from the connections between neurons. If one neuron tends to give correct answers to a problem, other neurons become more likely to use its output, based on the strength of the connection between them. Strengthening good connections and weakening bad ones is how neural networks learn to do particular tasks. This is really how we’re trying to simulate the human brain, right? Our brain has lots of neurons and synapses, and those synapses, those connections, represent the knowledge and things we’ve learned throughout our lives. So how do we get a computer to imitate that idea? Neural networks have been around for quite some time, but more recently they have been the idea behind deep learning. At a very basic level, deep learning expands the network to have many layers to learn from. Deep learning is very much like an artificial neural network, just with lots and lots of layers. Each layer learns to transform the output of the previous layer into a more useful representation.
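The “strengthen good connections, weaken bad ones” idea is exactly what the classic perceptron learning rule does. The Python sketch below trains a single perceptron on the logical AND function. Each time the output is wrong, the weights on the active inputs are nudged up or down. The sketch also shows the best-known limitation of a single-layer perceptron: it cannot learn XOR, because no single straight line separates XOR’s true and false cases. The function names, epoch count, and learning rate are illustrative choices.

```python
def predict(weights, bias, inputs):
    # The neuron "fires" (outputs 1) if the weighted sum of its inputs exceeds zero.
    total = bias + sum(w * x for w, x in zip(weights, inputs))
    return 1 if total > 0 else 0


def train_perceptron(examples, epochs=10, learning_rate=1):
    weights = [0] * len(examples[0][0])
    bias = 0
    for _ in range(epochs):
        for inputs, target in examples:
            error = target - predict(weights, bias, inputs)
            # error = +1: output too low, so strengthen connections from active inputs.
            # error = -1: output too high, so weaken them. error = 0: no change.
            weights = [w + learning_rate * error * x for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias


AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
XOR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

for name, data in (("AND", AND), ("XOR", XOR)):
    w, b = train_perceptron(data)
    correct = sum(predict(w, b, x) == t for x, t in data)
    print(name, f"{correct}/4 correct")
```

AND is learned perfectly within a few passes. XOR always leaves at least one case wrong, no matter how long it trains. Stacking neurons into multiple layers is what gets around this limitation.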

An example of a neural network is this one about classifying camouflaged tanks. In this experiment, the researchers wanted to create a neural network that would distinguish pictures of tanks hiding in trees from pictures of just trees. So we have pictures of trees and pictures of tanks in trees. This was a really interesting problem for the government at the time, and the network worked pretty well on the original photos. But when the researchers brought in a new set of photos to test it on, the results were no better than random. The reason is that, in the original photos the AI was trained on, the pictures of tanks were all taken on sunny days, while the pictures of just trees were all taken on cloudy days. So what they had really built was a machine that determined whether it was sunny or cloudy. It’s kind of a funny result about an AI that did a really good job, right? It was given information, and it used that information to classify these pictures. Based on the pictures themselves, sunny versus not sunny was easier to pick up on than tank versus no tank. It’s a really good example of how AI is currently only as smart as how we program it and the data we give it. Sometimes it ends up finding things or doing things we totally didn’t expect, and sometimes that turns out for the better.
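The failure mode in the tank story can be reproduced with a deliberately tiny toy. The Python sketch below invents eight training “photos” and eight test “photos,” each reduced to two hypothetical 0/1 features: `brightness` and a noisy `tank_shape` detector. In place of a neural network, it trains a one-feature decision stump. Because brightness perfectly separates the training labels, the learner picks it. On test data where weather and tanks are unrelated, it scores no better than a coin flip. None of these numbers come from the original experiment.

```python
def photo(brightness, tank_shape, label):
    # label: 1 = tank present, 0 = trees only (all values invented for illustration)
    return {"brightness": brightness, "tank_shape": tank_shape, "label": label}


# Training set: every tank photo is bright (sunny), every tree photo is dark (cloudy).
# The tank_shape detector is useful but noisy (right 6 times out of 8).
train = [photo(1, 1, 1), photo(1, 1, 1), photo(1, 1, 1), photo(1, 0, 1),
         photo(0, 0, 0), photo(0, 0, 0), photo(0, 0, 0), photo(0, 1, 0)]

# Test set: weather is now unrelated to whether a tank is present.
test = [photo(1, 1, 1), photo(0, 1, 1), photo(1, 0, 1), photo(0, 1, 1),
        photo(1, 0, 0), photo(0, 0, 0), photo(1, 1, 0), photo(0, 0, 0)]


def stump_accuracy(feature, photos):
    # Predict "tank" exactly when the feature is 1, and score against the labels.
    return sum(p[feature] == p["label"] for p in photos) / len(photos)


def train_stump(photos, features=("brightness", "tank_shape")):
    # Keep whichever single feature best predicts the training labels.
    return max(features, key=lambda f: stump_accuracy(f, photos))


chosen = train_stump(train)
print(chosen, stump_accuracy(chosen, train), stump_accuracy(chosen, test))
# brightness 1.0 0.5
```

The noisy tank detector would have scored 0.75 on the test set, but the learner never chose it. On the training data, the weather was simply the easier signal.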

Let’s take a look at some AI that is a little more modern. In 1997 (still pretty old, a little over 20 years ago now), Deep Blue, an AI developed by IBM, beat Garry Kasparov at chess. Kasparov was the reigning world chess champion. This was a very big achievement at the time, because chess, like many other games, ends up being far more complex than you might think. IBM’s next AI venture was Watson, a research project that started in 2006. Its goal was to learn from the internet, basically to answer lots of questions using information it could scrape from the web. That meant Wikipedia-type information, the kinds of things you search on Google. A really huge achievement for Watson came in 2011, when it beat Ken Jennings at Jeopardy. If you’ve never watched Jeopardy, or haven’t watched it for a while, Ken Jennings was one of the best Jeopardy players at the time. After Watson’s Jeopardy win, IBM repurposed the AI to target fields like medicine, as a computer able to intelligently answer medical questions, and also industrial questions. It has been a continuous innovation project: an AI that is essentially better than a Google search, because it doesn’t just search the internet for information but actually comes up with a specific answer to the question.

Even more impressive, Google’s DeepMind developed an AI called AlphaGo. In 2015, it became the first AI ever to beat a professional human player at Go. Go is an ancient game that originated in China, played by placing stones on a grid. The goal is to surround more territory with your stones than your opponent does. There are white and black stones, and if you’ve ever played Reversi, it’s a bit like that, but a lot more complex. AlphaGo continued its winning streak in 2016 by defeating Lee Sedol, one of the world’s top Go players. This was a huge achievement, because Go is an extremely complex game, with roughly 10 to the power of 170 possible board configurations. An AI that can play this game better than a professional human player is quite an achievement. Computationally, it’s practically impossible to look at all board configurations for all the moves. So AlphaGo started to train itself: it played against versions of itself millions upon millions of times to learn different techniques and strategies for playing Go. AlphaGo was later generalized into an algorithm called AlphaZero, which also played games like chess and shogi. This is a little different from most algorithms or AIs that play games like chess and checkers. Those programs generate the possible game tree and choose the best move. AlphaZero uses deep learning to guide its search, so it plays with a bit more strategy instead of just looking five or six moves ahead.
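For contrast with AlphaZero, here is the traditional approach in miniature: an exhaustive minimax search over the full game tree. To keep it tiny, the sketch uses a hypothetical take-away game instead of chess or Go. Players alternately remove 1, 2, or 3 sticks, and whoever takes the last stick wins. The `game_tree_size` helper shows how quickly the tree grows even here. AlphaZero, by contrast, uses a neural network to guide a much more selective search rather than enumerating every line.

```python
from functools import lru_cache

MOVES = (1, 2, 3)  # a player may remove 1, 2, or 3 sticks per turn


@lru_cache(maxsize=None)
def minimax(sticks, maximizing):
    """Value of the position for the maximizing player: +1 win, -1 loss."""
    if sticks == 0:
        # No sticks left: the player who just moved took the last one and won.
        return -1 if maximizing else 1
    values = [minimax(sticks - m, not maximizing) for m in MOVES if m <= sticks]
    return max(values) if maximizing else min(values)


def best_move(sticks):
    # Try every legal move and keep the one whose resulting position is best for us.
    return max((m for m in MOVES if m <= sticks),
               key=lambda m: minimax(sticks - m, False))


@lru_cache(maxsize=None)
def game_tree_size(sticks):
    # Number of nodes in the full (uncached) game tree from this position.
    return 1 + sum(game_tree_size(sticks - m) for m in MOVES if m <= sticks)


for n in (5, 10, 20):
    print(n, "sticks: best move", best_move(n), "| tree nodes:", game_tree_size(n))
```

With only 10 sticks, the full tree already has 600 nodes, and the count keeps multiplying with every extra stick. Chess and Go trees are astronomically larger, which is why exhaustive search is hopeless there.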

We could talk on and on about the uses of AI and machine learning, because they’re pretty much ubiquitous in our lives. Microsoft Kinect was a huge innovation that could track and map out a human skeleton so you could move and interact. It was a big push toward things like virtual and augmented reality. Apple’s Siri, Amazon Alexa, and Cortana are all natural language processing applications: AI that can understand and answer spoken questions, which is really impressive and improves on a daily basis. Then there are things like Wolfram Alpha. AI is pretty much everywhere, has so many interesting capabilities and applications, and has become integrated and ingrained in our daily lives. Honda’s ASIMO is another example of an attempt at artificial intelligence, in a very human-like form. Honda hoped it could one day be used to assist humans in everyday life. Just as a lot of smart home devices have started to integrate into our daily routines, the hope is that robotic agents and AI will further assist us in our daily tasks.

AI in Healthcare

MarI/O - Machine Learning for Video Games

IBM's Watson

DeepMind

Summary of AI


Video Script

In review, we’ve talked about Alan Turing and the Turing test, along with John Searle and the Chinese room, which highlights the problems and issues the Turing test has. We talked about Newell and Simon’s Logic Theorist, one of the first AI programs ever. We talked about the Dartmouth research project, where John McCarthy organized the conference that began to build out the idea of AI. We also covered the vast number of subtopics, tools, projects, and applications of AI, including neural networks and Marvin Minsky. Finally, we looked at the current state of AI: Deep Blue, AlphaGo, AI in the medical field, and so on. There’s a vast range of possibilities and applications we’re currently experiencing, including things like self-driving cars. So what’s missing from this discussion? We could talk for a very long time about AI, and about its applications alone.

Things like the philosophical and ethical implications of AI. Ethics is a really big issue right now with self-driving cars. If you haven’t heard of the Moral Machine, it’s a project I would encourage you to go check out. It’s built around self-driving cars. Suppose you’re presented with a situation where, regardless of the decision you make, you’re going to kill something. What do you kill? Do you swerve to miss grandma crossing the street, knowing that when you swerve, you’ll hit the little girl playing hopscotch on the sidewalk? Do you swerve the other way and hit the dog walker coming down the street with a pack of cute little puppies? Or do you just speed on through the intersection and hit grandma? There are a lot of moral implications here. When a human makes that decision, generally speaking, the human is at fault. But when an AI makes that kind of decision, involving human life or other forms of life, who’s at fault? Is it the programmer who told the AI to behave that way? Do we put the car in jail? There are a lot of ethical implications around AI and the choice of life or death.

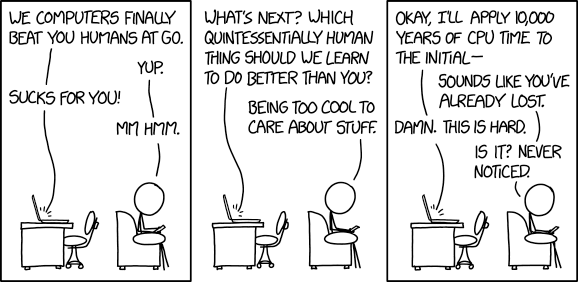

Then there’s solvability: are there things a human can do that an AI can’t, or vice versa? There’s the singularity, the idea that we will reach a point where technology grows faster than we can keep up with, so it basically grows out of control. This is kind of the robot-overlords scenario. If you’ve ever watched the movie I, Robot with Will Smith, that’s a good example of the singularity and of human-like intelligence in robotics, like robots or AI that have emotions. That relates to consciousness as well, right? We think of humans as being conscious, so how do we make an AI that has consciousness, or human-like emotion and human-level decision making? These are really deep topics that we could talk a lot about. But they are points to remember as you learn about AI and watch the videos for this module. We are moving into a world where AI is ubiquitous and ingrained in our daily routines, and it will only become more so as we go further into the future.

The Moral Machine

What happens when our computers get smarter than we are? | Nick Bostrom

Machine Learning & Artificial Intelligence: Crash Course Computer Science #34

Machine Learning: Living in the Age of AI | A WIRED Film

AlphaGo - The Movie | Full award-winning documentary

DeepMind StarCraft II Demonstration

AI vs. AI. Two chatbots talking to each other

Nine Algorithms that Changed the Future Ch 6 - Pattern Recognition
