Subsections of Search and Information Retrieval

Relational Data


Video Script

Welcome back, everyone. In this video, we’re going to be taking a look at information retrieval. But before we get to that point, we need to start by talking about databases and how data is actually stored. For this example, we’re going to be working on our own social network called K-Stater. Specifically, we’ve been asked to help design a way to keep track of all the data that a social network like K-Stater should have. So take a few minutes and brainstorm what kind of information we may need. For example, of course, we’ll need some way to track our users, with things like your eID, name, birthday, major, and maybe even your phone number. And, you know, of course, we’ll need a lot of other information to make a full website like this work. But we can start off with the basics, right, just keeping track of our users. Since we want to keep track of all that information, we need some way to actually store it. And there are tons of different ways that we could store that on our computers, depending on how our computer systems are designed to store information.

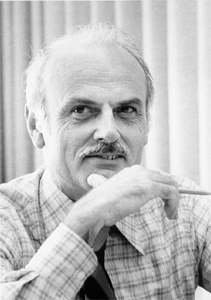

But thankfully, there was a huge change in how the data on our computers is actually stored, or at least in how we look at data stored on our computers. And that’s mostly thanks to this man here, Edgar F. Codd. While working for IBM in the late 1960s and early 70s, Codd began working on ideas relating to the storage and arrangement of data in computer systems. And in 1970, he published a paper called A Relational Model of Data for Large Shared Data Banks that laid out this grand idea for a better way of storing data. That idea led to the creation of what we call a relational database. This is the form of database that is by far the most common in the world today. You may have used things like Microsoft Access or MySQL. Microsoft Access is a simple type of relational database that is pretty easy for most people to use, just like opening up Microsoft Word or Excel. And Excel, to some extent, depending on how you have your sheet set up, is like a relational database, or at least relational data in some sense.

The idea behind a relational database revolves around three different terms: a relation, a tuple, and an attribute. A row in a table is called a tuple. It represents a single item that is stored in the database. In a simple example, each person stored in our database could be a single tuple. Or, if we are storing addresses for our users, each address would be one tuple, or one row, in our database. Each row or tuple has many attributes, represented by columns in the table. An attribute could be something like a name, a street, a zip code, or a phone number: one single piece of information that is associated with that one particular row or record in the table. The table itself is called a relation because it relates different attributes together uniquely in order to describe objects as tuples. So the attributes strung together in a single tuple are related information, right? Our user may have a name, a username, an email, and all sorts of different types of information, and in an ideal database design, each of those attributes is related to the others. Relating those different attributes together is really what we get out of something like a relational database. It makes our data much easier to store and search, because we store like information with like information, right, so all of the records that have similar information, like all of the user information, are kept together. Especially in a modern age where we have such large amounts of information, having this highly structured data makes it significantly easier to work with as you use apps like Facebook and Twitter.
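As a rough sketch of these three terms in Python, a relation can be modeled as a list of tuples whose fields are the attributes. The field names and sample rows here are made up for illustration; they are not taken from the slide.

```python
from typing import NamedTuple


class User(NamedTuple):
    """One tuple (row) of a hypothetical user relation."""

    # Each field is one attribute (a column in the table).
    eid: str
    name: str
    birthday: str
    major: str


# The relation is the whole table: many tuples sharing the same attributes.
# These rows are illustrative sample data only.
users = [
    User("josh", "Josh", "June 13th", "comp. sci"),
    User("gameguy", "Gameguy", "Dec 26", "computersci"),
]

# Reading one attribute from one tuple.
first_major = users[0].major
```

Each `User` value plays the role of a tuple, each field is an attribute, and the `users` list stands in for the relation.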

But let’s do some hands-on work with a relational database. Since we’re creating a social networking site, probably one of the first relations we’ll need to create is for the user, because a social network is pretty much useless without some people to actually use it. So here’s one potential way that we could set that up. The data here is all nicely arranged into rows and columns, and in theory it should be easy to look things up. Maybe not, right? At least not the way that I have it currently. So what if you wanted to find all of the students in our user relation here that are majoring in computer science? How would I go about doing that? Take a moment to pause the video, take a look at the table, and see if you can come up with a way to find all of the users who are majoring in computer science. Hopefully you found all of them. It’s pretty straightforward, right, for us humans and for a small number of users. But for a computer to search our table to find all of the computer science majors, it becomes a little bit more complicated. Obviously, my name is in there, Josh, in the first row. My major is comp. sci, which is short for computer science. And we don’t need the Information Science or Information Systems majors there.

But we have another computer science major there, gameguy. His major is computersci, all one word. And so while both of those users are computer science majors, how the computer science major is actually listed is entirely different. That makes the solution much more difficult for a computer, because now we have to take into account all of the different spellings and arrangements of the computer science major. We would refer to this particular relation as not normalized: the data isn’t uniform. So why do we want to normalize the data? Well, the big obvious reason that we just experienced is that normalized data is a lot easier to actually work with. Those data anomalies, right, the variations between compsci, CS, computersci, and computer science, all mean the same thing. But they make the data harder to use and more prone to error. Normalizing also makes redesigning your information a lot easier. More often than not, when you’re designing an application that works with a database, you’ll find that your use cases change over time. And so you have to go back in and redesign your tables in order to add new columns or attributes or entirely new relationships.
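To see why the inconsistent spellings hurt, here is a minimal Python sketch. The rows and the `KNOWN_SPELLINGS` set are hypothetical; the point is only that an exact-match search misses users unless the program lists every spelling anyone has typed.

```python
# Illustrative rows; the majors mirror the inconsistent spellings discussed above.
users = [
    {"eid": "josh", "major": "comp. sci"},
    {"eid": "john", "major": "information systems"},
    {"eid": "gameguy", "major": "computersci"},
]


def find_by_major(rows, major):
    """Return the eIDs whose major text matches exactly."""
    return [row["eid"] for row in rows if row["major"] == major]


# An exact match on the "proper" name finds nobody in this table.
exact = find_by_major(users, "computer science")

# The workaround: enumerate every spelling by hand (assumed list, never complete).
KNOWN_SPELLINGS = {"comp. sci", "compsci", "cs", "computersci", "computer science"}


def find_cs_majors(rows):
    """Return the eIDs whose major is any known spelling of computer science."""
    return [row["eid"] for row in rows if row["major"].lower() in KNOWN_SPELLINGS]


cs_majors = find_cs_majors(users)
```

The second function works only as long as the spelling list is kept up to date, which is exactly the kind of fragility normalization is meant to remove.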

Having normalized data to begin with makes that task significantly easier because, in an ideal world, you don’t have any data anomalies that can throw a wrench into your plan. But one of the other things that normalizing our data can accomplish is mirroring real-world concepts. We don’t want to store information in our relation in a random, computerized way, because that’s not the real goal that we’re trying to accomplish with these databases. The goal here is to store information in a way that is significantly easier to search through, not just for the computer, but for humans as well. And so we want to store our relations in a way that mimics how we would normally store that information in real life. For example, with addresses and things like that, we don’t need to come up with crazy, elaborate ways to store an address. We just want to make sure that it’s stored in a way that makes sense. And lastly, of course, this also simplifies the search queries that we run on our relations or our data. That question I asked, to find all the users majoring in computer science, is a lot easier if all of the computer science majors have the exact same spelling of the major. And so having normalized data also makes our search tasks significantly easier. So let’s take a look at a way that we can actually start to fix our unnormalized relation.

First off, let’s take a look at the major column, because that’s what we encountered first and that’s what we tried to search first. What can we do to make sure that the data in that column is easy to search through or easy to query? If you pause to think of a few ideas here, one potential way that I have listed is to add an ID for each major. Essentially, what we end up having is a second relation. We split the major off into a second table that acts as a lookup table. If you imagine using a basic form online when you’re signing up for stuff, a lot of times you’ll encounter drop-down boxes, right? Those act like simple lookup tables: you are given a specific set of things to choose from instead of typing in free text. When we’re storing things inside of a table and we want that text to be uniform, we can’t rely on our users to enter it for us as free text. We have to give them the options to select from. But since we have a separate table here, we need something called a primary key that identifies each record uniquely, so we can include it in the other table. It also makes it easier to search.

Each relation in your relational database should have a primary key, and that primary key uniquely identifies each record. So each record in a table or relation should be uniquely identifiable by some set of attributes. A primary key does not have to be one single attribute; it can be multiple attributes combined together that are unique. Without that uniqueness, there’s no possible way for us to retrieve any single record from our database. So that particular part is extremely important as far as relational databases go. What we have here in our new table is our major ID, and you can use that to look up the abbreviation or the major itself. So in our major table, the major ID column is going to be the primary key. And over here, we can assume that our user ID is our primary key, because everyone at K-State has a unique eID, which uniquely identifies each user. But instead of having the major column in our user table, we now have major ID. When a user selects their major, you can imagine they would be selecting it through a drop-down box. But what gets stored is not the actual major, but the ID of that major.
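One way to sketch the two tables is with Python’s built-in `sqlite3` module. The table names, column names, and most of the rows are assumptions made for illustration; only the pairing of major ID 3 with Information Systems (IS) comes from the example in this video.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE majors (
    major_id     INTEGER PRIMARY KEY,   -- uniquely identifies each major
    name         TEXT NOT NULL,
    abbreviation TEXT NOT NULL
);
CREATE TABLE users (
    eid      TEXT PRIMARY KEY,          -- every user has a unique eID
    name     TEXT NOT NULL,
    major_id INTEGER NOT NULL REFERENCES majors(major_id)
);
""")

# Lookup table of majors (IDs 1 and 2 are assumed values).
conn.executemany("INSERT INTO majors VALUES (?, ?, ?)", [
    (1, "Computer Science", "CS"),
    (2, "Information Science", "ISCI"),
    (3, "Information Systems", "IS"),
])

# Users store the major's ID instead of free text (sample rows).
conn.executemany("INSERT INTO users VALUES (?, ?, ?)", [
    ("josh", "Josh", 1),
    ("john", "John", 3),
    ("gameguy", "Gameguy", 1),
])

# Look up one user's major through the major ID.
johns_major = conn.execute(
    "SELECT m.name, m.abbreviation FROM users u "
    "JOIN majors m ON u.major_id = m.major_id WHERE u.eid = ?",
    ("john",),
).fetchone()

# Find every computer science major with a single, exact comparison.
cs_majors = [row[0] for row in conn.execute(
    "SELECT u.eid FROM users u "
    "JOIN majors m ON u.major_id = m.major_id "
    "WHERE m.name = ? ORDER BY u.eid",
    ("Computer Science",),
)]
```

Because the major is spelled exactly once, in the lookup table, the search no longer depends on how each user typed it.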

So if we wanted to see what John’s major was, we take the major ID 3 and look that up in our major table. That tells us that John is an Information Systems major, and that the Information Systems major has an abbreviation of IS. This makes it significantly easier for us to look up all of the computer science majors, because all we need to do is find computer science in our major table, see what its ID is, and then search for that ID in our user table. But let’s also talk about the birthday column and think of different ideas for how to represent that. If you look at our birthday column, we have a whole variety of ways that the birthday is written: June 13th, June 1, February 2nd, Dec 26, Dec. 18th with a period. So we have abbreviations for the months, and days with the th and nd. There are lots of different variations here, and we don’t even have just the pure numbers yet, which you’d assume people would add as well. So there is no uniform way of representing the birthday right now. What we could do is add that to our interface, right? We don’t have to rely purely on our database, although we could enforce a specific format for our column on the database side. We can also enforce the format when the date is actually entered. So we can have a little calendar picker or something like that, or an algorithm that checks the text before it’s saved. So that’s just something to watch out for when you’re working with information.
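The idea of checking the text before it is saved could look something like the sketch below. The choice of the `YYYY-MM-DD` format and the function names are assumptions for illustration; the video does not specify a format.

```python
from datetime import date, datetime


def parse_birthday(text: str) -> date:
    """Parse a birthday in the assumed YYYY-MM-DD format.

    Raises ValueError for anything else, such as "June 13th".
    """
    return datetime.strptime(text.strip(), "%Y-%m-%d").date()


def is_valid_birthday(text: str) -> bool:
    """Return True only when the text can be stored in the uniform format."""
    try:
        parse_birthday(text)
    except ValueError:
        return False
    return True


# Free-text entries like the ones in the table are rejected...
rejected = [is_valid_birthday(t) for t in ("June 13th", "Dec. 18th", "February 2nd")]

# ...while an entry in the single agreed format is accepted.
accepted = parse_birthday("2001-06-13")
```

A calendar picker in the interface achieves the same goal from the other direction: the user never gets the chance to type a non-uniform date.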

It is a very good practice to keep our data normalized, to keep everything not just unique but in the same format. If a human is going through here and reading this, of course, we can all see what the birthday is and understand what each of these means. But when we’re querying our database, or if our algorithm or computer is looking at these records, it becomes significantly more difficult to understand. But let’s look at another example. What happens if we try to add a phone number to our user table? Now, I’ve stripped off some of the other columns here just to make this example a little bit easier to see and fit onto one slide.

So let’s try to add our phone number. But how many phone numbers does the typical person have these days? Well, landlines really aren’t that common anymore, but people still have them. I have one in my office. But I also have, you know, my cell phone, a digital telephone number as well, and a couple of others, right? Someone might have multiple wireless or cell phone numbers without even considering a landline. And so adding multiple phone numbers for a particular user can become a little bit difficult. If we try to simply add, say, phone number one and phone number two, things get complicated really quickly, because if someone has more than one phone number, we have to add another column for their second one, another for their third, and so on. That isn’t a very good practice because, in reality, we don’t want to have to add another column to our table every time we need to add an extra phone number for a particular user. So like what we did before, let’s try to put that into its own table. One of the ways that we could do this is to have a phone number table. In this situation, our phone number is going to be the unique or primary key, and then we have the users that connect the relationship together. So we have many users, right, to one phone number.

And this works, but it still really doesn’t solve our problem, does it? We have the same issue as before: instead of having to add multiple phone columns, we now have to add multiple user columns. Because, right, what if an entire family signs up for our social network? That family might share a phone number, so each person in that family is going to have the same phone number. Every time another person from that family signs up, we’re going to have to add another user to that phone number. So we end up with pretty much the same problem that we had before. So let’s flip it again. We can still have our phone number table, but what if we use the user ID in the primary key of both tables? The primary key over here in our user table is the user ID, and we’re going to connect that to a user in our phone table. But now, instead of having only the user ID as our primary key in the phone table, we have the user ID and the phone number together as our primary key.

If we use both of those to uniquely identify a phone record, that becomes a little bit easier to do, because we don’t duplicate any information here. Although, of course, we do duplicate the user ID down here, which is perfectly fine. And this makes it easier to search, right? This is a one-to-many relationship, where we have one user to many phone numbers: one user here, but many phone numbers here. That describes the relationship between these two tables. And we can see it over here. Since the user ID and the phone number are unique together, we can have the same phone number for multiple users. Take phone number 5134: gameguy has two phone numbers, but Sharpie also shares that same number with gameguy. Now, this is just a simple example of how we might store a large amount of related information in a database so that it is easily retrievable by a computer.
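A minimal sketch of that phone table, again using Python’s `sqlite3` module, is shown below. The table and column names and the full phone numbers are hypothetical; only the ending 5134 and the fact that gameguy and Sharpie share it come from the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("""
CREATE TABLE phones (
    eid   TEXT NOT NULL,
    phone TEXT NOT NULL,
    PRIMARY KEY (eid, phone)   -- unique together, not individually
)
""")

conn.executemany("INSERT INTO phones VALUES (?, ?)", [
    ("gameguy", "555-5134"),
    ("gameguy", "555-0100"),   # a second number for the same user
    ("sharpie", "555-5134"),   # the same number shared by another user
])

# One user to many phone numbers.
gameguy_phones = [row[0] for row in conn.execute(
    "SELECT phone FROM phones WHERE eid = ? ORDER BY phone", ("gameguy",))]

# The same phone number can appear for several users.
sharing_5134 = [row[0] for row in conn.execute(
    "SELECT eid FROM phones WHERE phone = ? ORDER BY eid", ("555-5134",))]

# Repeating an existing (eid, phone) pair violates the primary key.
try:
    conn.execute("INSERT INTO phones VALUES (?, ?)", ("gameguy", "555-5134"))
    duplicate_allowed = True
except sqlite3.IntegrityError:
    duplicate_allowed = False
```

No new column is ever needed: a user with a fifth phone number is just a fifth row.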

Searching the Web


Video Script

In this video, we’re going to reiterate a little bit of what we talked about with our relational database, right? Because the whole point of the relational database is that we can store lots of information in a very structured way that is easily searchable. That’s really important in today’s world, because we have the World Wide Web, right, the internet, and it’s huge. Now that we know how to store data in a reasonable fashion, we have to talk about where most of the data in the world is actually stored. And that really is just the internet. At the highest level, we can think of the internet as a very, very big, completely unstructured database of all of the information in the world, because almost everything is connected to the internet these days. Not everything, but pretty much. Some parts of it are very structured, well formed, and easily searchable. But generally, it will never be as well structured as we’d like, and one structure might be entirely different from another, so it’s not going to be very uniform across the entire internet. However, we are pretty much constantly using tools like Google to find information in the vast amount of data that is out there on the web, and it does a pretty good job, doesn’t it? When you do a Google search, most of the time you find what you’re looking for. So how do we go about finding information in a big, giant, unstructured pile of data, which really is pretty much like finding a needle in a haystack? That task is the job of information retrieval specialists, and the algorithms and research they produce. In a basic form, let’s say we want to find some information on a very simple internet. Maybe this is back in the early, early days, when there was pretty much nothing out there.

So our internet has three pages, only three pages, and those three pages have very little information. We want to find out some information about all this data that is on the web, so we want to run these search queries. You can imagine these being put into a Google search box. We want to find all the pages that match cat, dog, dog sat, “dog stood”, cat OR mat, and cat AND mat. But how could we do that? How could we find all of the pages that match these queries? Well, the most basic or straightforward way is just to read through all the pages and find all the occurrences, right? This approach works perfectly fine for what we currently have. We only have three pages; I can read them in under five seconds and find all the results. But doing that on the actual internet is a really bad idea, because it’s huge and it would take forever, so your searches would just never complete. And so we need a better way of looking at the data in order to answer all of our questions quickly and accurately.

The first step, then, is to try to simplify the data. Instead of scanning each character and each word every single time we run a search or query, we can do what we call indexing. For each word, we create a list of the documents that word appears in. This indexed collection is a little bit easier to search, because now we don’t have to look at all of the words in all of the web pages; we’re just looking at this index list. So which of the queries that we had before can be answered with this information? Well, we can find cat, right, because cat is one of the words that we have in our index. If we look at cat here, you can see that it occurs in document 1 and in document 3. But in order to find cat, we didn’t have to read the entirety of 1, we didn’t have to read 2, and we didn’t have to read the entirety of 3. That is already done for us. We just find the word in our list, and then we already know which pages it occurs in. Most of the other queries work just fine too, right? Even dog sat, dog space sat, where we’re trying to find any occurrences of dog and any occurrences of sat. Dog here would return 2 and 3, and sat would return 1 and 3, so 1, 2, and 3 would all be returned for that query. Cat OR mat also works. And so does cat AND mat, the pages that have both cat and mat.
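The indexing step can be sketched in a few lines of Python. The text of the three pages is an assumption: the slide is not reproduced here, so these sentences were chosen to be consistent with the document numbers mentioned in the video.

```python
from collections import defaultdict

# Assumed page contents, consistent with the results quoted in the video.
PAGES = {
    1: "the cat sat on the mat",
    2: "the dog stood on the mat",
    3: "the cat stood while a dog sat",
}


def build_index(pages):
    """Map each word to the set of page numbers it appears on."""
    index = defaultdict(set)
    for number, text in pages.items():
        for word in text.split():
            index[word].add(number)
    return index


index = build_index(PAGES)

cat = index["cat"]                         # {1, 3}
dog_sat = index["dog"] | index["sat"]      # any occurrence of either word: {1, 2, 3}
cat_or_mat = index["cat"] | index["mat"]   # {1, 2, 3}
cat_and_mat = index["cat"] & index["mat"]  # pages with both words: {1}
```

Each query is answered with set lookups and set operations on the index, without rereading any page. The plain two-word query is treated as a union here, matching the "any occurrence" reading used in the video.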

Overall, we can answer all of these queries, right? Except for “dog stood”. So why doesn’t “dog stood” work? If you have ever run a Google search before, a plain two-word query looks for any occurrences in any position on any page. If I search for just dog space sat, it’s looking for any occurrences of dog and any occurrences of sat on that webpage. But if I use quotation marks, it’s looking for position, right? It’s looking for the word dog, immediately followed by a space, followed by the word stood. So now we’re dealing with the position of the words in the webpage. We can’t answer a question like that with what we have, because all we are storing is the word itself and which pages it occurs on, not the position where that word occurs on each page. So we need to modify our indexing algorithm a little bit. To improve it, we could store the location of the word within the document, along with the document number, in our index.

Using this information, we can answer all of our queries now, right? How would we actually do that? Well, let’s skip the rest of them, because we already know we can answer everything else, and look at “dog stood”. Imagine what our algorithm is looking for here: the word dog, followed by the word stood. So first we look up dog. Dog occurs at 2-2 and 3-6, so document two, position two, and document three, position six. That’s well and good. We also need to find stood, and we find it at document two, position three, and document three, position three. So dog and stood both occur in documents two and three. Now let’s look at the position of those words. In our phrase, dog is the first word and stood is the second, so we need dog followed by stood. If we look at the second number here, which is the position of the word, dog occurs at position two in document two, and stood occurs at position three. Since dog is immediately followed by stood, document two is a valid page that matches that query. But if we look at document three, dog occurs at position six and stood occurs at position three. Since stood does not come right after dog in document three, that is not a valid page for our search query. In a very, very basic way, this is what Google is doing on the internet: it is trying to index the entire internet, so that when you run a search query, it knows where that text occurs on the web, and you can run more intelligent queries like this to find more accurate information and narrow down your search results.
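Here is a small sketch of an index that also records positions. The page text is assumed (the slide is not reproduced here) and was chosen so that dog and stood land on the document and position pairs read out above.

```python
from collections import defaultdict

# Assumed page contents, consistent with the positions quoted in the video.
PAGES = {
    1: "the cat sat on the mat",
    2: "the dog stood on the mat",
    3: "the cat stood while a dog sat",
}


def build_positional_index(pages):
    """Map each word to a list of (page number, position) pairs.

    Positions count words starting from 1.
    """
    index = defaultdict(list)
    for number, text in pages.items():
        for position, word in enumerate(text.split(), start=1):
            index[word].append((number, position))
    return index


positional_index = build_positional_index(PAGES)

dog = positional_index["dog"]      # [(2, 2), (3, 6)]
stood = positional_index["stood"]  # [(2, 3), (3, 3)]
```

On page 2 the pairs are (2, 2) and (2, 3), so stood directly follows dog. On page 3 they are (3, 6) and (3, 3), so it does not.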

If we made an algorithm to execute what we just did with “dog stood”, it would look something like this in a very formal sense. We haven’t shown you any formal algorithms in this structure yet. A formal definition of an algorithm looks like this, where we have the input, the output, and the algorithm steps themselves. So this is kind of like pseudocode. The input, also called the precondition, describes the expected input to the algorithm. In this case, we’re expecting a two-word phrase in the form of word one followed by word two, as a quoted string. The output, or the postcondition, describes the output the algorithm produces. So given this input, our output is going to be an answer list of the numbers of all the webpages that contain the phrase. And the steps that we have here are exactly what I did just before. We look up the page number and position number pairs in our index: find all the matches for word one, and find all the matches for word two. Then we go through each of those pairs to see if we have a word that happens right after it. So for each pair in list one, see if there is a pair in list two such that the page number is the same as the one in list one, and the position number is immediately after the position number in the first pair. It looks a little bit more complicated than what I actually did before.
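The formal description above can be turned into a short Python function. This is one possible sketch, not the slide’s pseudocode: the function names and the assumed page text are illustrative, and the precondition is enforced by raising `ValueError`.

```python
from collections import defaultdict

# Assumed page contents, consistent with the positions quoted in the video.
PAGES = {
    1: "the cat sat on the mat",
    2: "the dog stood on the mat",
    3: "the cat stood while a dog sat",
}


def build_positional_index(pages):
    """Map each word to a list of (page number, position) pairs."""
    index = defaultdict(list)
    for number, text in pages.items():
        for position, word in enumerate(text.split(), start=1):
            index[word].append((number, position))
    return index


def phrase_query(index, phrase):
    """Input (precondition): a quoted two-word phrase, e.g. '"dog stood"'.

    Output (postcondition): a list of page numbers containing the phrase.
    """
    quoted = len(phrase) >= 2 and phrase[0] == '"' and phrase[-1] == '"'
    words = phrase[1:-1].split() if quoted else []
    if len(words) != 2:
        raise ValueError("expected a quoted two-word phrase")
    word1, word2 = words

    list1 = index.get(word1, [])       # pairs for word one
    list2 = set(index.get(word2, []))  # pairs for word two

    answer = []
    for page, position in list1:
        # Same page, and word two sits immediately after word one.
        if (page, position + 1) in list2 and page not in answer:
            answer.append(page)
    return answer


index = build_positional_index(PAGES)
dog_stood = phrase_query(index, '"dog stood"')  # [2]
cat_sat = phrase_query(index, '"cat sat"')      # [1]
```

Calling `phrase_query(index, "cat stood")` without the quotation marks raises `ValueError`, because that input does not satisfy the precondition.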

So that is a big step that we’re making here in formalizing our algorithm definition. But you’ll see this a lot in computer science, especially as you move forward in our courses. We can’t answer all of our search queries using this particular algorithm, though. It is expecting a quoted two-word phrase, word one followed by word two. So it works perfectly fine when we’re trying to look up the phrase “cat sat” using our indexed list. But cat space stood doesn’t match our precondition, because it doesn’t have the quotation marks around it. We could answer “cat stood”, but we couldn’t answer cat OR stood.

So how could we modify our algorithm to handle the other types of queries? There are a lot of things that we would have to think about. We would have to modify our precondition so that it accepts different types of search queries, and then we would also have to modify the algorithm itself to handle them. So this is some of the complexity of formalizing an algorithm definition. One of the reasons why we do that is to verify that our input and output are correct, making sure our algorithm does what we actually want it to do.

The Internet: How Search Works

YouTube Video

Page Rank


Video Script

Welcome back. In this video, we’re going to be taking a deeper look into what kind of queries we can ask of Google when searching the web. We’ve already looked at simple queries like cat, dog, cat AND dog, or cat OR dog. But that’s really not the most interesting question to ask of the internet, right? What we really want is to find all of the webpages that are about cats, not just pages where the word cat occurs. So how do you know which webpages are more likely to be about cats and which are more likely to be about dogs? How could you tell the difference? And how would you modify the search algorithm that we talked about earlier to account for that? This ranking of the internet is a little bit more complicated than our simple, straight-up text queries.

But as we learned in the HTML lectures, pages on the World Wide Web have structure to them in the form of HTML tags. And so we could add more information about those tags, called metawords, to our indexing algorithm. Now we could query the index to find specific words in, for example, the title. In theory, as a very simple explanation, an article that contains the word cat in the title is probably more likely to be about cats than about dogs, even if dog is found somewhere in the text of that webpage. But again, this is a very simple approach, right? The metawords help our algorithm and help our searching, but they’re not a pure ranking system. Basing our answers on just the text and these tags won’t yield very good results, and it won’t yield a very good curated top 10 list of pages about cats or dogs. So how do we rank pages in priority for our search? Obviously, there are a lot of cats and dogs on the internet. How does one webpage about cats come first? That is the problem early web search engines dealt with. As the web got larger and larger, it became more and more difficult to find the good pages among all of the bad or useless ones.

One of the early search engines was called AltaVista, and it grew very quickly at first. In 1996, it had five servers and 210 gigs for a search engine that was constantly indexing the web. And this was the late 90s, so having 500 gigs of storage and 130 gigs of RAM was big, right? This was a huge set of servers, very powerful machines for indexing the web, and it was able to handle 13 million queries daily. Considering this was the late 90s, the internet was still relatively new, but this was also getting very close to the dot-com era. As we matured into the dot-com era, AltaVista was eclipsed by another search engine. Yahoo came in and bought them out in 2003, and then eventually shut them down in 2013. But AltaVista was one of the first major successful search engines that we had for the internet.

We’ll transition into technology that was developed by Sergey Brin and Larry Page and published in a paper called The Anatomy of a Large-Scale Hypertextual Web Search Engine, which provided the answer to our question of how to rank the internet. They created a new algorithm called PageRank, named after Page himself, that could rank search engine results based on the authority of the page that was found. But let’s see how this actually works.

As you know, the World Wide Web is interconnected with hyperlinks. So say we had two hypothetical web pages here, Ernie’s scrambled egg recipe and Bert’s scrambled egg recipe. We could analyze the links going to and from each page to learn more about it. With our previous indexing algorithm, we would find eggs on both of these pages, so they’re both technically about eggs, but which one should come first? Should Ernie’s come first in our search results, or Bert’s? Which one of these do you think would be the top result for a scrambled egg recipe, and why? Well, even if Ernie’s scrambled egg recipe is better than Bert’s, Ernie’s recipe only has one other web page that links to it. This could be a comment, another blogger, maybe a Facebook post, whatever it may be. But there’s only one page that has a link to that webpage. Bert’s recipe is obviously more popular, or at least more well known, because it has three pages that link to it. So according to PageRank here, Bert’s recipe has more authority on scrambled eggs than Ernie’s. Bert’s would come first in our search results and Ernie’s would come next, if these were the only two web pages about scrambled eggs. But unfortunately, the World Wide Web is not as nicely structured as we’d like. Ranking is highly dependent on the actual websites themselves and on other things that make the web a lot more difficult to search through.
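The simplest version of this idea, ranking pages by how many other pages link to them, can be sketched as follows. The names of the linking pages are made up; only the counts (one link to Ernie’s recipe, three to Bert’s) come from the example.

```python
from collections import Counter

# (source page, target page) pairs; source names are hypothetical.
LINKS = [
    ("blog_a", "ernie"),
    ("blog_b", "bert"),
    ("blog_c", "bert"),
    ("blog_d", "bert"),
]

# Count how many pages link to each recipe.
inbound = Counter(target for _source, target in LINKS)

# Pages with more inbound links come first in the results.
ranking = sorted(inbound, key=inbound.get, reverse=True)
```

This counts every link equally, which is a first approximation only; it says nothing yet about how trustworthy the linking pages are.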

But what about this particular example? Which page would be ranked higher? We still have Ernie’s and Bert’s scrambled egg recipes, and now we have John and Alice. John’s web page points to Ernie’s and Alice’s points to Bert’s. John says of Ernie’s recipe that he only tried it once and it’s not too bad. Alice is clearly a lot more excited about Bert’s recipe; she says Bert’s recipe is clearly one of the best recipes. So if we are just basing this off of the text, looking at the sentiment or the excitement here, Alice’s link would carry more authority than John’s. But this becomes a problem if we’re just looking at links, right? One link here, one link here. Which one comes first? How do we break that tie? Well, let’s take a look at the authority of the pages that actually link to each recipe. John has a link to Ernie’s scrambled egg recipe and Alice has a link to Bert’s.

So that’s one and one for both Ernie and Bert. But John is a new blogger; John doesn’t have very many followers, so John does not have very much authority. John has only two links pointing to his web page. But Alice is a really popular blogger. She has a lot of different pages, maybe 100 different pages, that are pointing to her page. And so Alice ends up having more authority. And since Alice has more authority, a link from Alice to Bert’s scrambled egg recipe has far more weight than John’s link to Ernie’s scrambled egg recipe. And so in that sense, Bert’s recipe would still come first in our page ranking algorithm over Ernie’s scrambled egg recipe because Alice’s page has more weight, more importance, more authority than John’s page, even though Ernie and Bert have the same number of links to their websites.
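The tie-break above can be sketched in a few lines of Python. This is a simplified, one-level version under stated assumptions: a linking page’s authority is taken to be just its own incoming-link count, and the page names and follower counts are invented to mirror the John and Alice example. The real PageRank algorithm repeats this idea across the whole web and also accounts for how many outgoing links each page has.

```python
# Hypothetical web: John and Alice each link to one recipe.
links = {
    "john": ["ernies_recipe"],
    "alice": ["berts_recipe"],
}
# Two pages link to John's blog, 100 pages link to Alice's.
for i in range(2):
    links[f"john_fan_{i}"] = ["john"]
for i in range(100):
    links[f"alice_fan_{i}"] = ["alice"]


def inbound_count(page, links):
    """Number of pages that link to `page`."""
    return sum(1 for targets in links.values() if page in targets)


def weighted_score(page, links):
    """Sum the authority (incoming-link count) of every page linking to `page`."""
    return sum(
        inbound_count(source, links)
        for source, targets in links.items()
        if page in targets
    )


ernie_score = weighted_score("ernies_recipe", links)
bert_score = weighted_score("berts_recipe", links)
print(ernie_score, bert_score)
```

Both recipes have exactly one incoming link, but the link from Alice is worth far more because many more pages point to her.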

But sadly, again, right, the internet is not a nice tree structure like that either. Sometimes it’s full of cyclic connections like this. So if we follow, for example, A to B to E, and then back to A, to B, to E, we get stuck in these cycles. And these cycles are what we call sinks. Okay, so they suck up all of the authority of the internet, because our algorithm gets stuck. So if our indexer is just out there on the web following links, it’ll just get stuck in this cycle. It’ll follow the links around that cycle over and over and over again. And so all of the authority gets consumed by that cycle. So we can’t just simply follow links on the web, because we’ll get stuck in those cycles and all other web pages will have no authority whatsoever.

So one of the ways around this that Page and Brin actually suggested is called the random surfer model. So imagine that you are simulating a random surfer: you’re going to start on some web page, totally at random on the internet, and then just start clicking on random links. And you’ll continue on that pattern until at some random point in the future, you teleport to a new page and start surfing again. So start on a page, start clicking links on that web page, and then you’ll eventually get teleported off to another web page. And then you’ll start this process over again. And so instead of just selecting each link in a specific order on a web page, you’re going to randomly select links to actually follow, and this will cause you to break out of those cycles. And if you do this enough times, keeping track of the percentage of visits that you made to each particular web page, you can use those values as the rank of the page. So with the randomness here, the more links that point to a page, theoretically speaking, the more likely you are to randomly surf to it.
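Here is a small simulation of the random surfer, written as a sketch rather than a definitive implementation. The five-page `web` graph is hypothetical and contains the A to B to E cycle, and the 15% teleport chance, the step count, and the function name `random_surfer` are assumptions chosen for illustration.

```python
import random


def random_surfer(links, steps=100_000, teleport=0.15, seed=0):
    """Estimate each page's rank as the fraction of steps spent on it."""
    rng = random.Random(seed)  # fixed seed so repeated runs match
    pages = sorted(links)
    visits = dict.fromkeys(pages, 0)
    current = rng.choice(pages)  # start on a random page
    for _ in range(steps):
        visits[current] += 1
        outgoing = links[current]
        if not outgoing or rng.random() < teleport:
            # Dead end, or the surfer gets bored: jump to a random page.
            current = rng.choice(pages)
        else:
            # Otherwise click one of the links on this page at random.
            current = rng.choice(outgoing)
    return {page: count / steps for page, count in visits.items()}


# Hypothetical web: A -> B -> E -> A is a cycle; C and D sit outside it.
web = {"A": ["B"], "B": ["E"], "E": ["A"], "C": ["A"], "D": ["C"]}
ranks = random_surfer(web)
for page, rank in sorted(ranks.items(), key=lambda item: -item[1]):
    print(page, round(rank, 3))
```

Because of the occasional teleport, the surfer never stays trapped in the cycle, so pages C and D still end up with a small but nonzero share of the visits.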

So let’s take a look at an example of the random surfer with PageRank. So here is our sample web that we’re actually crawling. We have site A, which has a home page, an about page, a product page, and a more page, and the product page links to another website. So site A has these four pages, and then the product page on site A links to site B. Now, let’s say we are just rolling a set of dice. Each of these web pages has links, and the number on the die would have you either follow a link or stay on that web page, right. If there are multiple links on a page, the number that was rolled on the die determines which link you actually follow. If you keep on randomly rolling the die and following links here, which page actually has more authority in this particular example? So if we kept on doing our random page search, let’s see, if we started at site A and went to home, we have three different options here. So three outgoing links, and three incoming links. And same thing for about: it has one, two, three outgoing and three incoming, and so does the more web page. So it has three outgoing and three incoming.

A couple differences here: home has four incoming links, and product has four outgoing links. And so if we keep on randomly rolling our dice here, this is what the authority would actually look like. So if we went long enough, the about and more pages would be identical in authority because, due to probability, each is equally as likely to be visited as the other, because they both have three outgoing links and three incoming links. The product page has a little bit more authority than the about and more pages because it has four outgoing links and only three incoming links. So it has a few extra links on there that it can actually follow, even though one of them takes the product page to site B. Site B only has one possible way of actually being visited, and so it has the smallest authority. But the home page has the highest authority among all of the pages on site A because it has four incoming links and three outgoing links. So probability wise, it is more likely to be visited than all of the other pages here. And that’s the idea of the random surfer model, even though we have a cycle here. So from home to more to product to about, and then back to home, more, product, about. Even though we have cycles, the random surfer model is going to work regardless of the cycle and narrow down which web page is actually more likely to be visited, based off of the links that it actually finds.

Another way you can do this is by simply generating all possible paths that can be found on the internet. And you can calculate the length of those paths and the percentage of the time that particular pages show up in those paths. So if we calculate all the different paths that we can actually find here that are less than five, so five sites deep, we actually find that site A occurs more frequently in those paths than all others. So this is a little bit different than the random surfer model. This is a little bit more discrete, meaning that we have a little bit more of an order to things instead of everything being completely random. It does help solve the same cycle problem that the random surfer model addresses, although we do have to limit the length of our paths. So there are some downsides to this technique.
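The path-based approach can be sketched like this in Python. The three-page `web` graph, the `max_pages` limit of 3, and the choice to count every appearance of a page across all paths are assumptions made for illustration; the graph from the video is not reproduced here.

```python
def enumerate_paths(links, max_pages):
    """Return every link-following path that visits at most `max_pages` pages."""
    paths = []

    def extend(path):
        paths.append(path)
        if len(path) == max_pages:
            return  # the length limit is what keeps cycles from running forever
        for next_page in links.get(path[-1], []):
            extend(path + [next_page])

    for start in links:
        extend([start])
    return paths


def page_frequencies(links, max_pages):
    """Fraction of all page appearances, across all paths, that belong to each page."""
    counts = {}
    total = 0
    for path in enumerate_paths(links, max_pages):
        for page in path:
            counts[page] = counts.get(page, 0) + 1
            total += 1
    return {page: count / total for page, count in counts.items()}


# Hypothetical web: A links to B and C, and both link back to A.
web = {"A": ["B", "C"], "B": ["A"], "C": ["A"]}
frequencies = page_frequencies(web, max_pages=3)
print(max(frequencies, key=frequencies.get))
```

Page A shows up most often because every path through B or C has to pass back through A. Note that the number of paths grows quickly as `max_pages` increases, which is one reason the path length has to be limited.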

But of course, there are a lot of different problems out there that could get in our way of actually searching data, right? Not just those cycles. We have a lot of other issues, like spam. How do we take into account all those ads that are basically clogging up the internet, although that’s, you know, how a lot of people make their money online? How do you avoid spam? How do you avoid ads that you don’t want to search? How do you avoid malicious websites? You don’t want a malicious website to be the number one hit on Google. How do you take into account the size of the internet and reduce computation time? How do you search non-textual information? Right? We have a lot of images and videos on the internet now. And audio, how do you take that into account? Structured versus unstructured data, and a lot of other things. And that brings us really into the difference between what we had with web 1.0 and web 2.0. This has such a major impact on how the World Wide Web works. After the dot-com bubble burst, no one really knew where the internet was headed. And a lot of different talks were being had about what the internet could be, or how it could be different, right?

Because at the time, the internet was a place where companies just pushed content to their users. But now the internet is a place where users can communicate and share their own content quickly and easily. And eventually, that led to this idea of web 2.0, which we’ve talked about before. But the reason that we bring this back up is that the web is a dynamic place now, a more complicated place now. With web 1.0, we had static web pages that didn’t change very often. And so that led to search algorithms that were significantly easier to do because we were dependent mostly on text. And now we have dynamic web pages where the web page that I visit is entirely different than the web page you visit, even if it’s the same URL, because it depends on who’s logged in. It depends on your browsing history. Now we’re also looking at user-generated content. So if you look at a Reddit post, right, if you’re trying to search Reddit, how does that show up in Google? Because new Reddit posts are made every single second. So how do you take that into account? How do you take into account multimedia pages, so things like YouTube videos, audio, songs, images, pictures of cats versus pictures of dogs, when you’re trying to search that versus text? And this is an entirely different web that we live in now versus what we had in the 90s when early search engines were around. And so this is something that is going to be a continuous problem as we move forward and as the web grows, but it just highlights the importance of the sophistication of the search engines that we are currently able to use.

Nine Algorithms that Changed the Future Ch 3 - Page Rank

Nine Algorithms that Changed the Future Ch 8 - Databases
